An artificial intelligence (AI) model that focuses on reading and understanding human emotions has emerged. It is designed to read 53 kinds of emotions, accurately identify human intentions, and help large language models (LLMs) provide accurate answers tailored to intent and context.

VentureBeat reported on the 28th (local time) that Hume AI, a startup, has released a public demo of 'EVI (Empathetic Voice Interface)', which it claims is 'the first conversational AI with emotional intelligence.'

According to the report, Hume AI, co-founded by Alan Cowen, a former Google DeepMind researcher, raised $50 million in a Series B round led by EQT Ventures, with participation from Union Square Ventures, Nat Friedman & Daniel Gross, Comcast Ventures, LG, and others.

Named after the Scottish philosopher David Hume, the company differs from other AI companies in that it uses a voice interface rather than text prompts. It works on any device with a microphone, whether a PC or a smartphone. Also, rather than building its own model, the company aims to offer an AI assistant that supports other companies' LLM-based chatbots in API form.

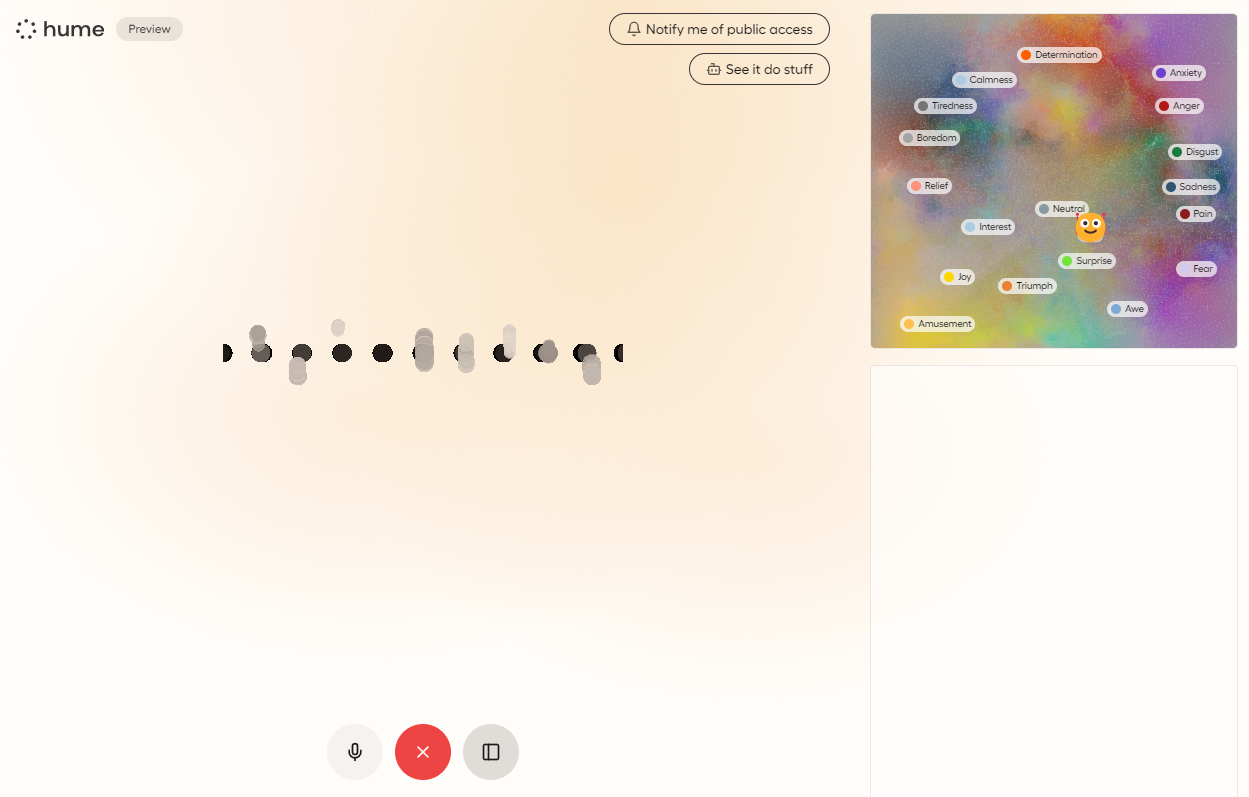

AI that reads emotions from human voices has been attempted several times before. However, the company explained that it distinguishes as many as 53 emotions, going well beyond the 5 to 7 common ones such as happiness, sadness, anger, and fear. These include states such as longing, awkwardness, boredom, annoyance, calmness, satisfaction, disappointment, confusion, doubt, ecstasy, and embarrassment.

“Emotional intelligence includes the ability to infer intentions and preferences from human behavior, and that is at the core of what AI interfaces are trying to achieve,” said co-founder Cowen. “Inferring what the user wants and carrying it out precisely has very real implications. Emotional intelligence is the most important requirement for AI interfaces.”

To achieve this, the model was trained on controlled experimental data from tens of thousands of people around the world. The process is described in two papers. The first covered the voices of 16,000 people from the US, China, India, South Africa, and Venezuela, and the second went further, matching the voices and facial expressions of 5,833 people from six countries, including Ethiopia.

Hume AI trained EVI to measure 53 emotional tones using the photos and audio collected in the two studies. It also provides an API that lets EVI be applied to existing models.
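The article does not describe Hume AI's actual API. As a rough illustration of the general idea of feeding emotion measurements to an existing LLM, here is a minimal, hypothetical Python sketch. It assumes a model that returns one score per emotion label, keeps the strongest few, and prepends them to the text sent to a chatbot. The names `EmotionScores` and `build_prompt`, the labels, and the scores are all illustrative assumptions and are not Hume AI's interface.

```python
from dataclasses import dataclass


# Hypothetical container: one score per emotion label, as an
# emotion-recognition model might return for a single utterance.
# Labels and values used below are illustrative, not Hume AI's output.
@dataclass
class EmotionScores:
    scores: dict[str, float]

    def top_k(self, k: int = 3) -> list[tuple[str, float]]:
        """Return the k highest-scoring emotions, strongest first."""
        ranked = sorted(self.scores.items(), key=lambda item: item[1], reverse=True)
        return ranked[:k]


def build_prompt(user_text: str, emotions: EmotionScores, k: int = 3) -> str:
    """Prepend the strongest detected emotions to the text sent to an LLM."""
    summary = ", ".join(f"{name} ({score:.2f})" for name, score in emotions.top_k(k))
    return (
        f"Detected vocal emotions: {summary}\n"
        f"User said: {user_text}\n"
        "Respond in a way that fits the user's emotional state."
    )


if __name__ == "__main__":
    # Made-up scores for a frustrated customer-service caller.
    scores = EmotionScores(
        {"confusion": 0.71, "annoyance": 0.42, "calmness": 0.10, "doubt": 0.55}
    )
    print(build_prompt("Why did my order get cancelled again?", scores, k=2))
```

Keeping only the top few emotions, rather than all 53 scores, is one simple way to keep the added context short; a real integration could just as well pass the full score vector.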

Co-founder Cowen said the company already has enterprise customers in fields such as health and wellness, customer service, education, clinical research, and robotics.

He said, “EVI can serve as an interface for any app. In fact, we're already using it as an interactive guide on our website. Developers can use our API to build personal AI assistants, agents, and wearables that can improve our daily lives.”

Regarding the potential for misuse of emotion technology, the company explained that it supports the 'Hume Initiative', a separate non-profit organization that brings together social scientists, ethicists, cyber law experts, and AI researchers to discuss the ethical use of empathic AI.

The principles presented by the initiative include provisions such as: 'Algorithms that detect emotions should only serve goals consistent with well-being, respond appropriately to extreme cases, protect users from exploitation, and increase users' emotional awareness and agency.'

The EVI demo is also receiving favorable reviews. Since its launch, various tech figures and companies have been sharing their experiences and results on X (formerly Twitter) and elsewhere.

Vercel CEO Guillermo Rauch said, “It's one of the best AI demos I've ever seen,” and Web Activism President Abby Schiffman expressed her admiration, saying, “This could change everything.”

In a related development, last October LAION, a non-profit organization that builds AI training datasets, began building datasets that can be used to create chatbots that understand human emotions or text-to-speech AI models.

The project, which aims to build a dataset reaching 1 million within this year, is called 'Open Empathic.'

Reporter Lim Da-jun ydj@aitimes.com