Intel has unveiled LDM3D, a generative AI model that creates 3D visual content from text descriptions. Intel says it is the first model capable of generating 360-degree 3D images with depth information from text prompts.

At CVPR 2023, the annual Conference on Computer Vision and Pattern Recognition held in Canada, Intel announced LDM3D (Latent Diffusion Model for 3D) on the 22nd (local time). LDM3D is a new diffusion model that creates realistic 3D visual content.

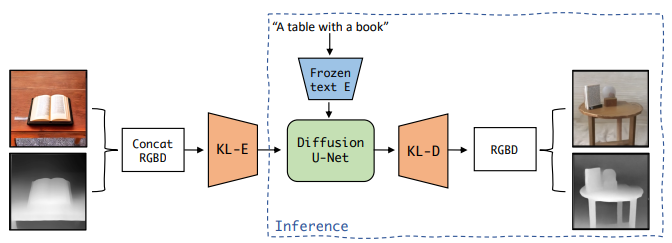

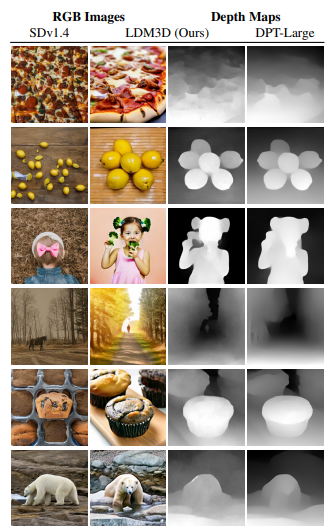

LDM3D is a type of latent diffusion model (LDM), the same family as Stable Diffusion. Most generative AI models in use today are limited to creating 2D images. Intel worked with Blockade Labs to train LDM3D on a subset of the LAION-400M dataset, which contains over 400 million image-caption pairs. As a result, LDM3D can generate both an image and a depth map from a text prompt while using the same number of parameters as existing LDMs. Compared with standard post-processing methods for depth estimation, it can provide more accurate relative depth for each pixel in an image and saves significant time.

It could change the way users interact with digital content, letting them experience their text prompts in previously unimaginable ways.

Images and depth maps generated with LDM3D let users turn textual descriptions of serene tropical beaches, modern skyscrapers, or sci-fi worlds into detailed 360-degree panoramas.

This ability to capture vast amounts of data can immediately enhance overall realism and immersion, enabling new applications in industries ranging from entertainment, gaming, interior design, and real estate listings to virtual museums and immersive virtual reality (VR) experiences.

The LDM3D model was trained on an Intel AI supercomputer powered by Intel Xeon processors and Intel Habana Gaudi AI accelerators. The resulting model and pipeline combine the generated RGB image and depth map to create an immersive 360-degree view.
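The article does not say how the RGB image and depth map are combined internally. As a rough illustration only, one common way to turn a panorama plus its depth map into a navigable 3D scene is to back-project every pixel into a colored 3D point. The sketch below assumes the panorama uses an equirectangular projection and that each depth value is a distance along that pixel's viewing ray; both are assumptions, as is the hypothetical function name `equirect_to_points`. LDM3D's depth is relative, so it would need rescaling (or conversion, if stored as inverse depth) before being used this way.

```python
import numpy as np


def equirect_to_points(rgb: np.ndarray, depth: np.ndarray):
    """Back-project an equirectangular RGB-D panorama into a colored point cloud.

    Hypothetical helper, not part of LDM3D or DepthFusion.
    rgb:   (H, W, 3) array of colors
    depth: (H, W) array, assumed to hold distances along each viewing ray
    Returns (points, colors), each of shape (H*W, 3).
    """
    h, w = depth.shape
    # Pixel centers mapped to longitude in [-pi, pi) and latitude in (-pi/2, pi/2)
    u = (np.arange(w) + 0.5) / w
    v = (np.arange(h) + 0.5) / h
    lon = (u - 0.5) * 2.0 * np.pi
    lat = (0.5 - v) * np.pi
    lon, lat = np.meshgrid(lon, lat)  # both become (H, W)

    # Unit viewing direction per pixel (y is up, z is forward)
    dirs = np.stack(
        [
            np.cos(lat) * np.sin(lon),
            np.sin(lat),
            np.cos(lat) * np.cos(lon),
        ],
        axis=-1,
    )

    # Scale each unit direction by its depth to get a 3D position
    points = dirs * depth[..., None]
    return points.reshape(-1, 3), rgb.reshape(-1, 3)
```

Each resulting point keeps the color of the pixel it came from, so a viewer placed at the origin sees the original panorama, while moving away from the origin reveals the scene's depth.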

Trained on Intel Xeon processors and Habana Gaudi AI accelerators, LDM3D can create modern skyscrapers or 360-degree spaces like those seen in science fiction movies. The 360-degree spaces it creates can be used for entertainment such as virtual and augmented reality, as well as for real estate sales and virtual museums.

To show LDM3D's potential, Intel and Blockade Labs also developed DepthFusion, an application that uses standard 2D RGB photos and depth maps to create immersive, interactive 360-degree experiences.

LDM3D VR demo: click the arrow in the video to view a 360-degree 3D panorama (Video: Intel)

DepthFusion uses TouchDesigner, a node-based visual programming language for real-time multimedia content, to turn text prompts into interactive, immersive digital experiences. Because LDM3D is a single model that produces both an RGB image and a depth map, it saves memory and reduces latency.

LDM3D is available as open source through Hugging Face, so AI researchers can refine the system further and tailor it to their own applications.
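As a sketch of what using the open-source release might look like, the snippet below assumes the Hugging Face `diffusers` library's `StableDiffusionLDM3DPipeline` and the `Intel/ldm3d-4c` checkpoint, neither of which is named in the article. The wrapper function `generate_rgbd` is hypothetical. Running it requires `diffusers` and `torch` and downloads the model weights on first use.

```python
def generate_rgbd(prompt: str, model_id: str = "Intel/ldm3d-4c"):
    """Generate an RGB image and a depth map for one text prompt.

    Hypothetical wrapper; assumes the diffusers StableDiffusionLDM3DPipeline
    and that `model_id` names an LDM3D checkpoint on Hugging Face.
    """
    # Imported lazily so this file can be loaded without diffusers installed
    from diffusers import StableDiffusionLDM3DPipeline

    # Downloads the weights on first use, then builds the pipeline
    pipe = StableDiffusionLDM3DPipeline.from_pretrained(model_id)
    output = pipe(prompt)

    # The pipeline output holds lists of PIL images: RGB and depth
    return output.rgb[0], output.depth[0]
```

A call such as `rgb, depth = generate_rgbd("a serene tropical beach at sunset")` would return two PIL images that can be saved with `rgb.save("beach_rgb.jpg")` and `depth.save("beach_depth.png")`. Loading the pipeline inside the function keeps the example short; in practice one would load it once and reuse it across prompts.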

Meanwhile, LDM3D also won the Best Paper Award at CVPR 2023, held in Vancouver, Canada, from June 18 to 22.

Reporter Park Chan cpark@aitimes.com
