Recently, Large Vision Language Models (LVLMs) such as LLaVA and MiniGPT-4 have demonstrated the ability to understand images and achieve high accuracy and efficiency in several visual tasks. While LVLMs excel at recognizing common objects thanks to their extensive training datasets, they lack domain-specific knowledge and have a limited understanding of localized details within images. This limits their effectiveness in Industrial Anomaly Detection (IAD) tasks. On the other hand, existing IAD frameworks can only identify sources of anomalies and require manual threshold settings to distinguish between normal and anomalous samples, which restricts their practical implementation.

The primary purpose of an IAD framework is to detect and localize anomalies in industrial scenarios and product images. However, because real-world anomalous samples are rare and unpredictable, models are typically trained only on normal data, and they distinguish anomalous samples from normal ones based on deviations from typical samples. Currently, IAD frameworks and models primarily provide anomaly scores for test samples. As a result, distinguishing between normal and anomalous instances for each class of objects requires manually specified thresholds, which renders these methods unsuitable for real-world applications.

To explore how Large Vision Language Models can address the challenges faced by IAD frameworks, AnomalyGPT, a novel IAD approach based on LVLMs, was introduced. AnomalyGPT can detect and localize anomalies without the need for manual threshold settings. Moreover, AnomalyGPT can provide relevant information about the image and interact with users, allowing them to ask follow-up questions based on the anomaly or their specific needs.

Industrial Anomaly Detection and Large Vision Language Models

Existing IAD frameworks can be divided into two categories.

- Reconstruction-based IAD.

- Feature Embedding-based IAD.

In a reconstruction-based IAD framework, the primary aim is to reconstruct anomalous samples into their normal counterparts and to detect anomalies by calculating the reconstruction error. SCADN, RIAD, AnoDDPM, and InTra use a range of reconstruction frameworks, from Generative Adversarial Networks (GANs) and autoencoders to diffusion models and transformers.
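
To make the reconstruction-error idea concrete, here is a minimal sketch in Python. It swaps in PCA (equivalent to a linear autoencoder) as a stand-in for the GAN, autoencoder, or diffusion reconstructors named above, fits it on normal samples only, and scores test samples by their squared reconstruction error. The toy data and all function names are hypothetical.

```python
import numpy as np

def fit_pca_reconstructor(normal, k):
    """Fit a linear 'autoencoder' (PCA with k components) on normal samples only."""
    mean = normal.mean(axis=0)
    # Rows of vt are the principal directions of the centred normal data.
    _, _, vt = np.linalg.svd(normal - mean, full_matrices=False)
    return mean, vt[:k]

def reconstruct(x, mean, components):
    """Encode into the k-dimensional subspace, then decode back to input space."""
    codes = (x - mean) @ components.T
    return codes @ components + mean

def anomaly_score(x, mean, components):
    """Per-sample squared reconstruction error; large when x departs from normal data."""
    return ((x - reconstruct(x, mean, components)) ** 2).sum(axis=1)

rng = np.random.default_rng(0)
# Toy "normal" data: points near a 2-D plane embedded in 10-D space.
basis = rng.normal(size=(2, 10))
normal = rng.normal(size=(200, 2)) @ basis + 0.01 * rng.normal(size=(200, 10))
mean, comps = fit_pca_reconstructor(normal, k=2)

test_normal = rng.normal(size=(5, 2)) @ basis            # lies on the plane
test_anomalous = test_normal + rng.normal(size=(5, 10))  # pushed off the plane
print(anomaly_score(test_normal, mean, comps))
print(anomaly_score(test_anomalous, mean, comps))
```

Because the model only ever sees normal data, anything it cannot reconstruct well is treated as anomalous; the deep reconstructors above follow the same logic with a far more expressive model.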

On the other hand, in a feature embedding-based IAD framework, the primary focus is on modeling the feature embeddings of normal data. Methods like PatchSVDD try to find a hypersphere that tightly encapsulates normal samples, whereas frameworks like PyramidFlow and Cfl project normal samples onto a Gaussian distribution using normalizing flows. CFA and PatchCore build a memory bank of normal patch embeddings and use the distance between the test sample's embeddings and the normal embeddings to detect anomalies.
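
The memory-bank idea behind PatchCore-style methods can be sketched as a nearest-neighbor search. This is a simplified illustration that assumes patch embeddings have already been extracted; it omits PatchCore's coreset subsampling and score reweighting, and the helper names and toy features are hypothetical.

```python
import numpy as np

def build_memory_bank(normal_patch_feats):
    """Stack patch embeddings from all normal images into one (M, D) bank."""
    return np.concatenate(normal_patch_feats, axis=0)

def patch_scores(test_patches, bank):
    """Distance from each test patch (P, D) to its nearest neighbour in the bank."""
    # Squared Euclidean distances via ||a||^2 - 2 a.b + ||b||^2, shape (P, M).
    d2 = ((test_patches ** 2).sum(axis=1, keepdims=True)
          - 2.0 * test_patches @ bank.T
          + (bank ** 2).sum(axis=1))
    return np.sqrt(np.maximum(d2, 0.0)).min(axis=1)

def image_score(test_patches, bank):
    """Image-level anomaly score: the score of the most anomalous patch."""
    return patch_scores(test_patches, bank).max()

rng = np.random.default_rng(0)
# A "handful" of normal images: 4 images x 16 patches x 8-dim toy features.
bank = build_memory_bank([rng.normal(0.0, 0.1, size=(16, 8)) for _ in range(4)])

normal_img = rng.normal(0.0, 0.1, size=(16, 8))
anomalous_img = normal_img.copy()
anomalous_img[3] += 2.0  # one patch drifts far from every normal feature

print(image_score(normal_img, bank), image_score(anomalous_img, bank))
print(int(patch_scores(anomalous_img, bank).argmax()))
```

The per-patch scores double as a coarse localization map, which is why this family of methods can localize anomalies as well as detect them.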

All of these methods follow the “one class, one model” learning paradigm, which requires a large number of normal samples to learn the distribution of each object class. This requirement makes them impractical for novel object categories and limits their use in dynamic product environments. In contrast, the AnomalyGPT framework uses an in-context learning paradigm across object categories, allowing it to perform inference with only a handful of normal samples.

Next, we have Large Vision Language Models, or LVLMs. Large Language Models (LLMs) have enjoyed tremendous success in NLP, and they are now being explored for applications in visual tasks. The BLIP-2 framework uses a Q-Former to feed visual features from a Vision Transformer into the Flan-T5 model. Moreover, the MiniGPT-4 framework connects the image component of BLIP-2 to the Vicuna model with a linear layer, and performs a two-stage finetuning process using image-text data. These approaches indicate that LLMs can be applied to visual tasks. However, these models are trained on general data and lack the domain-specific expertise that many specialized applications require.
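
The linear-layer bridge used by MiniGPT-4 boils down to projecting visual tokens into the LLM's embedding space and placing them alongside the embedded text. The sketch below uses hypothetical toy dimensions and random weights (which would be learned during finetuning in practice), and simply prepends the visual tokens, whereas real systems insert them into a prompt template.

```python
import numpy as np

rng = np.random.default_rng(0)
VISION_DIM, LLM_DIM = 32, 64  # hypothetical toy sizes, not the real models' widths
N_QUERY_TOKENS = 4            # hypothetical number of visual query tokens

# Trainable linear layer; random here, learned during finetuning in practice.
W = rng.normal(scale=0.02, size=(VISION_DIM, LLM_DIM))
b = np.zeros(LLM_DIM)

def project_visual_tokens(visual_tokens):
    """Map frozen vision-side outputs (T, VISION_DIM) into the LLM embedding space."""
    return visual_tokens @ W + b

def build_llm_input(visual_tokens, text_embeddings):
    """Prepend the projected visual tokens to the embedded text prompt."""
    return np.concatenate([project_visual_tokens(visual_tokens), text_embeddings], axis=0)

visual = rng.normal(size=(N_QUERY_TOKENS, VISION_DIM))
text = rng.normal(size=(10, LLM_DIM))
seq = build_llm_input(visual, text)
print(seq.shape)  # (14, 64)
```

Only the small projection needs training; both the vision side and the LLM can stay frozen, which is what makes this design cheap to adapt.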

How Does AnomalyGPT Work?

At its core, AnomalyGPT is a novel conversational IAD large vision language model designed primarily to detect industrial anomalies and pinpoint their exact locations in images. The AnomalyGPT framework uses an LLM and a pre-trained image encoder, and aligns images with their corresponding textual descriptions using simulated anomaly data. The model introduces a decoder module and a prompt learner module to enhance IAD performance and achieve pixel-level localization output.

Model Architecture

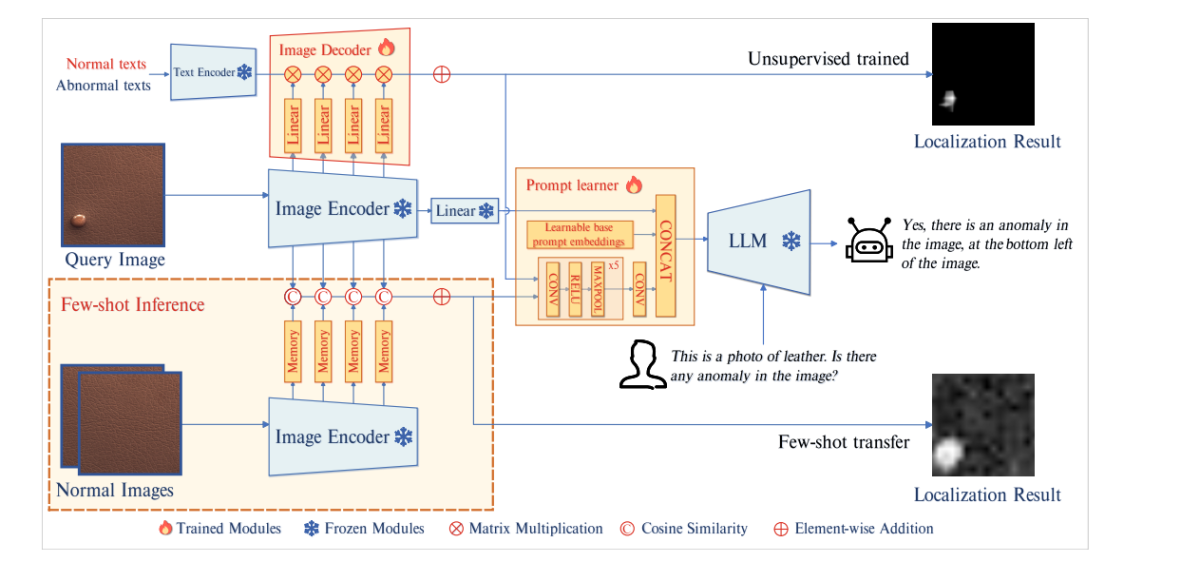

The above image depicts the architecture of AnomalyGPT. The model first passes the query image to the frozen image encoder. It then extracts patch-level features from the intermediate layers and feeds them to an image decoder, which computes their similarity with normal and abnormal texts to obtain localization results. The prompt learner then converts these results into prompt embeddings that are suitable as inputs to the LLM alongside the user's text input. Finally, the LLM uses the prompt embeddings, image inputs, and user-provided text to detect anomalies, pinpoint their locations, and generate responses for the user.

Decoder

To achieve pixel-level anomaly localization, AnomalyGPT deploys a lightweight, feature-matching-based image decoder that supports both few-shot and unsupervised IAD. The design of the decoder is inspired by the WinCLIP, PatchCore, and APRIL-GAN frameworks. The model partitions the image encoder into 4 stages and extracts intermediate patch-level features from each stage.

However, these intermediate features have not gone through the final image-text alignment, so they cannot be compared directly with text features. To tackle this issue, AnomalyGPT introduces additional layers that project the intermediate features and align them with text features representing normal and abnormal semantics.
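
The decoder's feature-matching step can be sketched as follows, under several assumptions: each per-stage projection is a single linear map with random (normally learned) weights, the normal and abnormal text features are given vectors, the per-stage maps are averaged, and the patch map is upsampled by nearest-neighbor repetition. All sizes are hypothetical toy values; this illustrates the idea rather than reproducing AnomalyGPT's exact decoder.

```python
import numpy as np

rng = np.random.default_rng(0)
FEAT_DIM, TEXT_DIM, GRID = 48, 16, 7  # hypothetical toy sizes
N_STAGES = 4

# One linear projection per encoder stage (random here, learned in practice).
projections = [rng.normal(scale=0.1, size=(FEAT_DIM, TEXT_DIM)) for _ in range(N_STAGES)]

def l2_normalize(x, axis=-1):
    return x / np.linalg.norm(x, axis=axis, keepdims=True)

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def localize(stage_feats, text_normal, text_abnormal, out_size=224):
    """stage_feats: list of (GRID*GRID, FEAT_DIM) patch features, one per stage.
    Returns an (out_size, out_size) map of per-pixel abnormal probability."""
    text = l2_normalize(np.stack([text_normal, text_abnormal]))  # (2, TEXT_DIM)
    maps = []
    for feats, proj in zip(stage_feats, projections):
        patches = l2_normalize(feats @ proj)      # project into the text feature space
        probs = softmax(patches @ text.T)         # (P, 2): [normal, abnormal]
        maps.append(probs[:, 1].reshape(GRID, GRID))
    patch_map = np.mean(maps, axis=0)
    # Nearest-neighbour upsampling; assumes out_size is divisible by GRID.
    scale = out_size // GRID
    return np.kron(patch_map, np.ones((scale, scale)))

stage_feats = [rng.normal(size=(GRID * GRID, FEAT_DIM)) for _ in range(N_STAGES)]
anomaly_map = localize(stage_feats, rng.normal(size=TEXT_DIM), rng.normal(size=TEXT_DIM))
print(anomaly_map.shape)  # (224, 224)
```

A real pipeline would more likely use bilinear upsampling; nearest-neighbor repetition is used here only to keep the sketch dependency-free.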

Prompt Learner

The AnomalyGPT framework introduces a prompt learner that transforms the localization result into prompt embeddings, leveraging fine-grained semantics from images while also maintaining semantic consistency between the decoder and LLM outputs. In addition, the model incorporates learnable prompt embeddings, unrelated to the decoder outputs, into the prompt learner to provide additional information for the IAD task. Finally, the model feeds these embeddings and the original image information to the LLM.

The prompt learner consists of learnable base prompt embeddings and a convolutional neural network. The network converts the localization result into prompt embeddings, which, together with the base prompt embeddings, form a set of prompt embeddings that are then combined with the image embeddings and fed into the LLM.
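
A minimal sketch of the prompt learner's data flow is shown below. It assumes a single strided convolution (kernel size equal to stride) in place of the actual CNN, random weights in place of learned ones, and an image-then-prompts ordering; the sizes and helper names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
LLM_DIM = 64  # hypothetical toy embedding width
KERNEL = 56   # hypothetical conv kernel size, equal to the stride
N_BASE = 8    # hypothetical number of learnable base prompts

conv_weight = rng.normal(scale=0.01, size=(KERNEL * KERNEL, LLM_DIM))  # learned in practice
conv_bias = np.zeros(LLM_DIM)
base_prompts = rng.normal(scale=0.02, size=(N_BASE, LLM_DIM))          # learned in practice

def strided_conv(anomaly_map):
    """Single-channel conv with kernel = stride = KERNEL: (H, W) -> (H/K * W/K, LLM_DIM)."""
    h, w = anomaly_map.shape
    blocks = anomaly_map.reshape(h // KERNEL, KERNEL, w // KERNEL, KERNEL)
    blocks = blocks.transpose(0, 2, 1, 3).reshape(-1, KERNEL * KERNEL)
    return blocks @ conv_weight + conv_bias

def prompt_learner(anomaly_map):
    """Localization-derived prompt embeddings followed by the learnable base prompts."""
    return np.concatenate([strided_conv(anomaly_map), base_prompts], axis=0)

def llm_visual_prefix(image_embeddings, anomaly_map):
    """Image embeddings plus prompt embeddings, ready to precede the user's text."""
    return np.concatenate([image_embeddings, prompt_learner(anomaly_map)], axis=0)

anomaly_map = rng.random((224, 224))
image_emb = rng.normal(size=(1, LLM_DIM))
prefix = llm_visual_prefix(image_emb, anomaly_map)
print(prefix.shape)  # (25, 64): 1 image token + 16 map-derived prompts + 8 base prompts
```

The base prompts do not depend on the input, so they act as task-level context, while the map-derived prompts change with every image.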

Anomaly Simulation

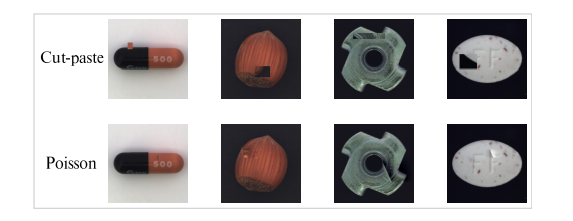

AnomalyGPT adopts the NSA method to simulate anomalous data. NSA builds on the Cut-paste technique and uses Poisson image editing to alleviate the discontinuity introduced by pasting image segments. Cut-paste is a commonly used technique in IAD frameworks for generating simulated anomaly images.

The Cut-paste method randomly crops a block region from an image and pastes it into a random location in another image, creating a simulated anomalous region. These simulated anomaly samples can enhance the performance of IAD models, but they often produce noticeable discontinuities. Poisson editing aims to seamlessly clone an object from one image into another by solving the Poisson partial differential equation.
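
The Cut-paste augmentation itself takes only a few lines. The sketch below assumes NumPy image arrays and a source image at least as large as the target; the patch-size range is a hypothetical choice, and NSA's own patch selection rules differ. The returned mask can serve as the pixel-level ground truth for the simulated anomaly.

```python
import numpy as np

def cut_paste(target, source, rng, min_frac=0.1, max_frac=0.3):
    """Crop a random rectangle from `source` and paste it at a random spot in `target`.
    Returns the augmented image and a boolean mask of the pasted (anomalous) region."""
    h, w = target.shape[:2]
    # Random patch size, between min_frac and max_frac of the target's size.
    ph = int(rng.integers(int(min_frac * h), int(max_frac * h) + 1))
    pw = int(rng.integers(int(min_frac * w), int(max_frac * w) + 1))
    # Random crop location in the source image.
    sy = int(rng.integers(0, source.shape[0] - ph + 1))
    sx = int(rng.integers(0, source.shape[1] - pw + 1))
    # Random paste location in the target image.
    ty = int(rng.integers(0, h - ph + 1))
    tx = int(rng.integers(0, w - pw + 1))
    out = target.copy()
    out[ty:ty + ph, tx:tx + pw] = source[sy:sy + ph, sx:sx + pw]
    mask = np.zeros((h, w), dtype=bool)
    mask[ty:ty + ph, tx:tx + pw] = True
    return out, mask

rng = np.random.default_rng(0)
target = np.zeros((64, 64))  # stand-in for a normal product image
source = np.ones((64, 64))   # stand-in for another image
augmented, mask = cut_paste(target, source, rng)
print(mask.sum(), augmented[mask].min(), augmented[~mask].max())
```

The hard rectangular border of the pasted block is exactly the kind of discontinuity that makes plain Cut-paste anomalies easy to spot.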

The above image compares Poisson and Cut-paste image editing. As can be seen, the cut-paste result shows visible discontinuities, while the results from Poisson editing look more natural.
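
To show why Poisson editing removes the seam, here is a minimal grayscale Poisson blending sketch using plain Jacobi iterations. It assumes the source has already been placed at the paste location (same shape as the target) and that the mask does not touch the image border. It solves Δf = Δsource inside the mask with f fixed to the target on the boundary, so the pasted content keeps its internal gradients while its edges match the surroundings. In practice, a library routine such as OpenCV's `cv2.seamlessClone` would normally be used instead.

```python
import numpy as np

def laplacian(img):
    """Discrete 4-neighbour Laplacian (sum of neighbours minus 4x centre), interior only."""
    lap = np.zeros_like(img)
    lap[1:-1, 1:-1] = (img[:-2, 1:-1] + img[2:, 1:-1] + img[1:-1, :-2] + img[1:-1, 2:]
                       - 4 * img[1:-1, 1:-1])
    return lap

def poisson_blend(target, source, mask, iters=2000):
    """Solve lap(f) = lap(source) inside `mask`, with f = target outside it (Jacobi)."""
    assert not (mask[0].any() or mask[-1].any() or mask[:, 0].any() or mask[:, -1].any()), \
        "mask must not touch the image border"
    f = target.astype(float).copy()
    guide = laplacian(source.astype(float))
    for _ in range(iters):
        neighbours = np.zeros_like(f)
        neighbours[1:-1, 1:-1] = f[:-2, 1:-1] + f[2:, 1:-1] + f[1:-1, :-2] + f[1:-1, 2:]
        # sum(neighbours) - 4 f_p = lap(source)_p  =>  f_p = (sum - lap) / 4
        f[mask] = ((neighbours - guide) / 4.0)[mask]
    return f

n = 32
yy, xx = np.mgrid[0:n, 0:n]
target = np.full((n, n), 0.2)                                    # flat background
source = 0.9 + 0.05 * np.sin(0.7 * yy) * np.cos(0.5 * xx)       # brighter, textured patch
mask = np.zeros((n, n), dtype=bool)
mask[8:24, 8:24] = True

naive = np.where(mask, source, target)  # plain cut-paste
blended = poisson_blend(target, source, mask)

def seam_jump(img):
    """Largest intensity jump across the left/right edges of the pasted square."""
    left = np.abs(img[8:24, 8] - img[8:24, 7]).max()
    right = np.abs(img[8:24, 23] - img[8:24, 24]).max()
    return max(left, right)

print(seam_jump(naive), seam_jump(blended))
```

The naive paste leaves a large step at the border, while the blended result keeps the patch's texture but shifts its overall intensity to meet the background.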

Query and Answer Content

To conduct prompt tuning on the Large Vision Language Model, AnomalyGPT generates a corresponding textual query based on the anomaly image. Each query consists of two major components. The first part is a description of the input image that provides information about the objects present in it along with their expected attributes. The second part asks whether the object contains anomalies, that is, whether there is an anomaly in the image.

The LVLM first answers whether there is an anomaly in the image. If it detects anomalies, it goes on to specify the location and number of the anomalous areas. The image is divided into a 3×3 grid of distinct regions so that the LVLM can verbally indicate the position of the anomalies, as shown in the figure below.
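
The query-and-answer construction can be sketched as below. The query template and grid-cell names are hypothetical (the exact training wording is not given here), and the answer only reports which cells of the 3×3 grid contain anomalous pixels, not the number of distinct anomalous areas.

```python
import numpy as np

# Hypothetical names for the 3x3 grid cells, in row-major order.
GRID_NAMES = [
    ["top left", "top", "top right"],
    ["left", "center", "right"],
    ["bottom left", "bottom", "bottom right"],
]

def anomaly_positions(mask):
    """Names of the 3x3 grid cells that contain at least one anomalous pixel."""
    h, w = mask.shape
    rows = np.array_split(np.arange(h), 3)
    cols = np.array_split(np.arange(w), 3)
    hits = []
    for i, r in enumerate(rows):
        for j, c in enumerate(cols):
            if mask[np.ix_(r, c)].any():
                hits.append(GRID_NAMES[i][j])
    return hits

def build_query(object_name, attributes):
    """Two-part query: a description of the expected object, then the anomaly question."""
    return (f"This is a photo of {object_name}, which should be {attributes}. "
            "Is there any anomaly in the image?")

def build_answer(mask):
    """Yes/no answer, plus the grid positions of the anomaly when there is one."""
    positions = anomaly_positions(mask)
    if not positions:
        return "No, there are no anomalies in the image."
    return ("Yes, there is an anomaly in the image, in the "
            + " and ".join(positions) + " part of the image.")

mask = np.zeros((224, 224), dtype=bool)
mask[10:40, 180:210] = True  # simulated anomaly near the top-right corner
print(build_query("leather", "brown and without any damage"))
print(build_answer(mask))
```

Because the mask comes from the anomaly simulation step, these question-answer pairs can be generated automatically, without manual annotation.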

The LVLM is also given a description of the input image that provides foundational knowledge about it, which helps the model better understand the image's components.

Datasets and Evaluation Metrics

The experiments are conducted mainly on the VisA and MVTec-AD datasets. The MVTec-AD dataset consists of 3629 training images and 1725 test images split across 15 categories, and it is one of the most popular datasets for IAD. Its training set contains only normal images, while its test set contains both normal and anomalous images. The VisA dataset consists of 9621 normal images and nearly 1200 anomalous images, split across 12 categories.

Like existing IAD frameworks, AnomalyGPT uses AUC (Area Under the Receiver Operating Characteristic curve) as its evaluation metric, with pixel-level and image-level AUC used to evaluate anomaly localization and anomaly detection performance, respectively. In addition, image-level accuracy is used to evaluate the proposed approach, since AnomalyGPT uniquely determines whether anomalies are present without requiring manually set thresholds.
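
The three metrics can be sketched in a few lines. Image-level AUC uses one score per image, pixel-level AUC flattens the anomaly maps and ground-truth masks, and image-level accuracy compares yes/no answers directly, with no threshold. The pairwise AUC below needs memory proportional to positives × negatives, so it only suits toy inputs; for full-resolution maps a library function such as scikit-learn's `roc_auc_score` is the usual choice. All data here are made up.

```python
import numpy as np

def auroc(labels, scores):
    """ROC AUC via the Mann-Whitney statistic: P(score_pos > score_neg), ties count 1/2."""
    labels = np.asarray(labels, dtype=bool).ravel()
    scores = np.asarray(scores, dtype=float).ravel()
    pos, neg = scores[labels], scores[~labels]
    # Compare every positive score with every negative score.
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))

def accuracy(labels, predictions):
    """Fraction of images whose yes/no prediction matches the ground truth."""
    return np.mean(np.asarray(labels, dtype=bool) == np.asarray(predictions, dtype=bool))

# Image level: 0 = normal, 1 = anomalous; one anomaly score per image.
img_labels = [0, 0, 1, 1]
img_scores = [0.1, 0.4, 0.35, 0.8]
print(auroc(img_labels, img_scores))        # 0.75

# Pixel level: flatten ground-truth masks and predicted anomaly maps.
gt_mask = np.array([[0, 1], [0, 1]])
pred_map = np.array([[0.2, 0.9], [0.1, 0.7]])
print(auroc(gt_mask, pred_map))             # 1.0

# Threshold-free accuracy from the model's yes/no answers.
answers = [False, True, True, True]
print(accuracy(img_labels, answers))        # 0.75
```

AUC measures ranking quality across all possible thresholds, whereas accuracy judges a single decision, which is why accuracy only makes sense for a model like AnomalyGPT that outputs yes/no directly.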

Results

Quantitative Results

Few-Shot Industrial Anomaly Detection

AnomalyGPT is compared with prior few-shot IAD frameworks, including PaDiM, SPADE, WinCLIP, and PatchCore, which serve as baselines.

The above figure compares the results of AnomalyGPT with those of few-shot IAD frameworks. Across both datasets, AnomalyGPT outperforms previous approaches in terms of image-level AUC and also achieves good accuracy.

Unsupervised Industrial Anomaly Detection

In the unsupervised setting, where a large number of normal samples is available, AnomalyGPT trains a single model on samples from all classes in a dataset. The developers of AnomalyGPT chose UniAD as a baseline for comparison because it is trained under the same setup. The model is also compared against JNLD and PaDiM under the same unified setting.

The above figure compares the performance of AnomalyGPT with that of other frameworks.

Qualitative Results

The above image illustrates the performance of AnomalyGPT in the unsupervised anomaly detection setting, while the figure below shows its performance in 1-shot in-context learning.

AnomalyGPT is capable of indicating the presence of anomalies, marking their location, and providing pixel-level localization results. In the 1-shot in-context learning setting, the model's localization performance is slightly lower than in the unsupervised setting due to the absence of training.

Conclusion

AnomalyGPT is a novel conversational IAD vision language model designed to leverage the powerful capabilities of large vision language models. It can not only identify anomalies in an image but also pinpoint their exact locations. Moreover, AnomalyGPT supports multi-turn dialogues focused on anomaly detection and shows outstanding performance in few-shot in-context learning. AnomalyGPT explores the potential applications of LVLMs in anomaly detection, introducing new ideas and possibilities for the IAD industry.