A generative artificial intelligence (AI) model that has evolved over hundreds of generations has emerged, built with a merging technique that takes only the strengths of multiple AI models to create a new one. Behind it is Sakana AI, the startup of Llion Jones, one of the authors of the Transformer paper, which had announced plans to bring collective intelligence like that of a school of fish to AI.

On the 21st, Sakana AI introduced a method called 'Evolutionary Model Merge' in an article titled 'Evolving New Foundation Models: Unleashing the Power of Automating Model Development' and presented three models built with it.

Sakana said its primary research focus is to create new foundation models by applying nature-inspired ideas such as evolution and collective intelligence. The goal, the company explained, is not to train specific individual models but to build a machine that automatically generates foundation models.

Model merging is a technique that combines two or more LLMs into a single model. Simply put, it mixes two or more models, eliminates conflicting or overlapping parts, and keeps only the strengths of each model.

It builds on techniques such as SLERP (Spherical Linear Interpolation), TIES-Merging, DARE, and Passthrough, which have appeared since last year. As a result, merged models have been appearing one after another on Hugging Face's Open LLM Leaderboard.
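As an illustration of the first of these techniques, here is a minimal sketch of SLERP applied to two flattened weight vectors. It uses plain Python lists as a stand-in for real model tensors; the function name `slerp` and the `eps` threshold are assumptions made for this example, not the API of any particular merging library.

```python
import math

def slerp(w_a, w_b, t, eps=1e-8):
    """Spherical linear interpolation between two flat weight vectors.

    t = 0 returns w_a, t = 1 returns w_b. Illustrative sketch only.
    """
    norm_a = math.sqrt(sum(x * x for x in w_a))
    norm_b = math.sqrt(sum(x * x for x in w_b))
    if norm_a < eps or norm_b < eps:
        # A zero vector has no direction: fall back to linear interpolation.
        return [(1 - t) * x + t * y for x, y in zip(w_a, w_b)]

    # Angle between the two weight vectors.
    cos_omega = sum(x * y for x, y in zip(w_a, w_b)) / (norm_a * norm_b)
    cos_omega = max(-1.0, min(1.0, cos_omega))
    omega = math.acos(cos_omega)

    if math.sin(omega) < eps:
        # Nearly parallel vectors: linear interpolation is numerically safer.
        return [(1 - t) * x + t * y for x, y in zip(w_a, w_b)]

    scale_a = math.sin((1 - t) * omega) / math.sin(omega)
    scale_b = math.sin(t * omega) / math.sin(omega)
    return [scale_a * x + scale_b * y for x, y in zip(w_a, w_b)]

# Halfway between two orthogonal unit vectors.
merged = slerp([1.0, 0.0], [0.0, 1.0], 0.5)
```

Unlike a straight average, the interpolation follows the arc between the two vectors, so the halfway point between two unit vectors is itself a unit vector.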

In particular, the advantage of this approach is that, unlike building a single model in the conventional way, it requires no training, so a model can be built cheaply without GPUs.

Sakana adopted this merging approach to build a model that evolves over generations. Specifically, it used two methods: ▲the 'data flow space (layer) merge' method, which selects and rearranges layers from existing models and carries them into a new model, and ▲the 'parameter space (weight) merge' method, which mixes parameters between existing models. The company explained that in this way only the strongest parts were selected and combined, and performance improved steadily as the number of generations increased.
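The difference between the two methods can be sketched on toy data. In this hedged example a "model" is just a list of layers and each layer is a list of floats; the function names, the `alpha` mixing ratio, and the `layer_path` format are hypothetical choices for illustration, not Sakana's implementation.

```python
def merge_parameter_space(model_a, model_b, alpha):
    """Weight merge: blend matching layers; alpha is the share taken from model_a."""
    return [
        [alpha * wa + (1 - alpha) * wb for wa, wb in zip(layer_a, layer_b)]
        for layer_a, layer_b in zip(model_a, model_b)
    ]

def merge_data_flow_space(models, layer_path):
    """Layer merge: build a new stack from (model_index, layer_index) pairs."""
    return [list(models[m][l]) for m, l in layer_path]

# Two toy models with two layers each.
model_a = [[1.0, 1.0], [2.0, 2.0]]
model_b = [[3.0, 3.0], [4.0, 4.0]]

# Parameter space: same architecture, averaged weights.
blended = merge_parameter_space(model_a, model_b, alpha=0.5)

# Data flow space: weights untouched, layers re-routed into a new stack.
stacked = merge_data_flow_space([model_a, model_b], [(0, 0), (1, 0), (1, 1)])
```

The parameter space merge keeps the layer count and changes the weights, while the data flow space merge keeps the weights and changes which layers the input passes through, so the resulting stack may be deeper than either source model.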

In the actual development process, Sakana took three open-source models and 'crossed' them to create more than 100 descendant models. These were benchmarked, and only the best-performing models were selected and reused as material for the next generation. The company explained that this process was repeated for hundreds of generations until the final model was chosen.
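The cross, benchmark, select cycle described above can be sketched as a simple loop. This is a toy select-and-recombine scheme, not the optimizer Sakana actually used: each candidate is assumed to be a list of three mixing weights (one per source model), and `benchmark` is a hypothetical scoring function standing in for a real evaluation suite.

```python
import random

def benchmark(genome):
    """Hypothetical benchmark score (higher is better); stands in for real evals."""
    target = [0.2, 0.5, 0.3]  # assumed "ideal" mix, for illustration only
    return -sum((g - t) ** 2 for g, t in zip(genome, target))

def cross(parent_a, parent_b, rng, noise=0.05):
    """Child takes each gene from one parent at random, plus a small mutation."""
    return [rng.choice(pair) + rng.gauss(0.0, noise)
            for pair in zip(parent_a, parent_b)]

def evolve(n_generations=50, n_children=100, n_keep=10, seed=0):
    rng = random.Random(seed)
    population = [[rng.random() for _ in range(3)] for _ in range(n_keep)]
    history = []
    for _ in range(n_generations):
        # Cross randomly chosen survivors to produce the descendant models.
        children = [cross(rng.choice(population), rng.choice(population), rng)
                    for _ in range(n_children)]
        # Parents stay in the pool, so the best score can never get worse.
        pool = population + children
        # Benchmark everything and keep only the top performers.
        population = sorted(pool, key=benchmark, reverse=True)[:n_keep]
        history.append(benchmark(population[0]))
    return population[0], history

best, history = evolve()
```

Here `history` records the best score per generation. In a real pipeline each call to `benchmark` would mean building a merged model and running it on an evaluation set, which is where nearly all of the cost would lie.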

The models presented as a result are a Japanese LLM capable of mathematical reasoning and a Japanese Vision-Language Model (VLM).

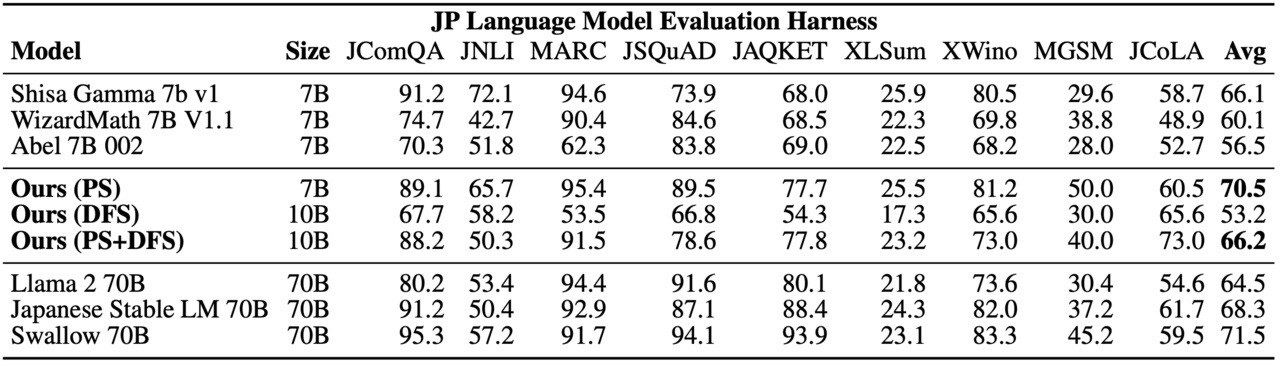

Sakana also reported that both models achieved top-level results in benchmark tests. In particular, it emphasized that the 7B-parameter Japanese math LLM achieved the best performance on a range of Japanese LLM benchmarks and was good enough to serve as a general-purpose Japanese LLM.

Moreover, the Japanese VLM handles culture-specific content surprisingly well and achieved the best results when tested on a Japanese-sourced dataset of Japanese image-description pairs.

In addition, the company found that the method also applies to diffusion models for image generation and developed an evolved image generation model based on Stability AI's 'SDXL'. It explained that the model is optimized to perform inference in just four diffusion steps, making generation very fast.

Finally, Sakana said that through evolutionary model merging it had developed three powerful Japanese foundation models: ▲EvoLLM-JP (Japanese language) ▲EvoVLM-JP (vision-language) ▲EvoSDXL-JP (image generation).

Of these, the LLM and VLM were released as open source through Hugging Face and other channels. The company added that it plans to announce separate research results on the image generation model.

“We found that our approach can automatically merge models from completely different domains, such as ‘non-English language and math’ and ‘non-English language and vision,’” Sakana said. “This was previously considered difficult.”

The company also emphasized, “This merging method, which is performed automatically by our algorithm, is new,” and “it would be difficult for experts to discover through trial and error.”

The company also said that this release is just the beginning.

“We believe this study is just the beginning of long-term development, and the trend toward model merging will only intensify given the skyrocketing cost of training foundation models.”

“By leveraging a vast open-source ecosystem of diverse models, organizations such as government agencies and enterprises can explore cost-effective, evolutionary approaches,” the company said. “By being able to develop the base model for each stage more quickly, we can bring about a faster innovation cycle.”

Meanwhile, Sakana is a startup founded by Llion Jones, who left Google last year, together with his colleague David Ha. At the time of the company's founding, they said, “The limitation of current AI models is that they have a brittle, unchangeable structure,” and added, “We will create AI models that adapt well to their environment, like a school of fish.” Sakana is the Japanese word for fish.

In particular, the company set up an office in Tokyo and declared that it would develop Japanese language models, an area that has lagged behind Western-language models. It is understood that this earned the company support from the Japanese government.

Reporter Park Chan cpark@aitimes.com