Latest Atlas reported on the twenty third (local time) that Nvidia developed and released the ‘Video Latent Diffusion Model (Video LDM)’, a video generation artificial intelligence (AI) model, in collaboration with Cornell University in the US.

‘Video LDM’ developed by Nvidia this time is a video creation AI that may create videos with a resolution of as much as 2048×1280 pixels at a rate of 24 frames per second for as much as 4.7 seconds in line with the outline entered in text.

A Latent Diffusion Model (LDM), pre-trained to generate images from text based on ‘stable diffusion’, was fine-tuned with 1000’s of videos to learn animate images.

It does the duty of predicting the expected changes in each a part of the image over time for a particular time frame. It creates a series of keyframes out of the whole sequence of frames after which uses one other LDM to fill the frames in between the keyframes to finish the whole sequence.

VideoLDM has as much as 4.1 billion parameters, of which only 2.7 billion are used for fine-tuning the video. Although this is sort of small in comparison with the dimensions of recent generative AI models, it was possible to generate a wide range of temporally consistent, high-quality videos through an efficient LDM approach.

VideoLDM could be used to generate personalized videos by post-learning specific images. For instance, if you happen to tell it to make use of a picture of a cat to create a video of a cat playing within the grass, it would create a video featuring the identical cat as the unique image.

By utilizing the convolutional-in-time synthesis function of this model to extend the sampling of frames generated per unit time, it is feasible to generate a protracted video of seven.3 seconds at 24 frames per second, although the standard barely deteriorates. .

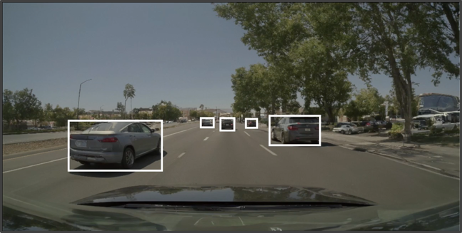

Specifically, by applying this method, driving scenes lasting as much as 5 minutes could be produced with a resolution of 1024×512 pixels. The next video could be created using the initial frames generated based on the bounding box to simulate specific driving scenarios.

As well as, this model can generate videos of varied scenarios from the identical initial frame.

VideoLDM shall be presented on the IEEE Conference on Computer Vision and Pattern Recognition in Vancouver, Canada, starting June 18, 2023. Since this can be a research-stage project, it shouldn’t be yet known how this may become Nvidia’s services or products.

Chan Park, cpark@aitimes.com

healing meditation