Meta has unveiled a latest AI model that generates speech from text by learning in an identical technique to generative AI for text or images.

Unlike existing models, it’s evaluated that it has taken a step forward in the sphere of voice generation AI by developing a model that may perform various voice generation tasks without special training.

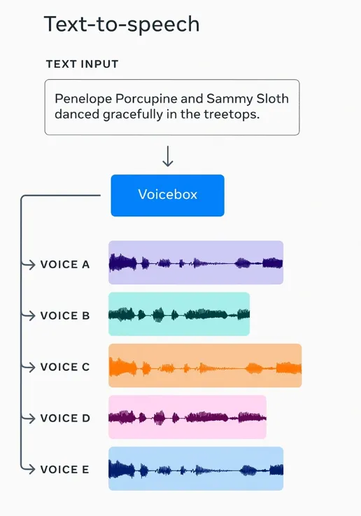

In a blog post on the sixteenth (local time), Meta announced “Voicebox,” a state-of-the-art generative AI model that features the power to convert text to speech, edit audio, and work in multiple languages.

Voicebox is a flexible voice generation model able to generating high-quality audio samples in quite a lot of styles. You’ll be able to create latest voices from text descriptions or modify existing audio samples. The model supports quite a lot of tasks, including speech synthesis in six languages, noise removal, content editing, style conversion, and various sample generation.

“Voicebox might be probably the most versatile voice generation model,” said Meta CEO Mark Zuckerberg.

Generally, generative AI models for speech are trained using training data suitable for the style of work to be performed. It’s difficult to perform multiple tasks in a single model because a model can only be trained with data specially prepared to perform a particular task.

For instance, text-to-speech models are trained using only small, highly curated, labeled data sets, so that they can’t be used to edit or style-transform audio samples at the identical time.

To beat these limitations, Meta built a voicebox based on a latest approach called ‘Flow Matching’ that improved the performance of the diffusion model.

Through this, it learns from a training data set consisting of raw audio and transcripts that will not be specifically labeled, and may perform various tasks without separate training for every task.

It has the advantage of with the ability to collect training data on a bigger scale since it doesn’t require labeling of coaching data.

In comparison with Microsoft’s Vall-E, a competing model, the voicebox based on flow matching lowered the text-to-speech error rate from 10.9% to five.2% and was 20 times faster.

Voicebox has learned from over 50,000 hours of audio and transcripts recorded thus far from public domain audiobooks in English, French, Spanish, German, Polish and Portuguese, with voice samples it references and transcripts of the voices it is going to generate as input. If given, generate a voice sample speaking the script with the referenced voice.

Beyond easy text-to-speech technology, the model can populate speech in text context, predict which words will be spoken, and generate snippets of audio recordings.

Voicebox (video=meta)

Voicebox can take a two-second long input sample and generate speech from text within the sample’s audio style. This feature is beneficial for helping individuals who cannot speak, or for customizing the voices of characters and virtual assistants.

Given a voice sample and a text passage in one in all English, French, German, Spanish, Polish or Portuguese, Voicebox can generate a text reading in that language. This feature will be used to permit people to speak in a natural way even in the event that they don’t speak the identical language.

Voicebox can be useful for removing voice noise and editing. The model can partially edit audio samples by generating speech using in-context learning.

As a substitute of getting to re-record all the voice, you possibly can replace erroneous words or simply create noise-damaged parts. This feature can simplify the technique of organizing and editing audio samples, much like the image editing tools used to regulate photos.

Learning from quite a lot of real-world data, Voicebox can generate voices that higher represent the way in which people speak in the actual world, across six languages. You should use this feature to generate synthetic data for training a voice assistant model.

Actually, it was found that a voice recognition model trained with synthetic voices generated by Voicebox showed almost the identical performance as a model trained with real voices. The error rate was reduced by 45-70% with synthesized speech generated from the present text-to-speech model, while the error rate drop was only one% within the voicebox.

Meta anticipates high utilization of the voice generation model, but has decided to not release the voice model or code to the general public as a consequence of the potential risk of misuse. We consider it is crucial to be open with the AI community and share research to advance the newest advances in AI, but it’s also needed to strike the precise balance between openness and accountability.

As a substitute, Meta shared a research paper outlining the voicebox model’s approach and results, in addition to how one can construct an efficient classifier to discriminate audio from real speech generated by voicebox to mitigate future risks.

Reporter Park Chan cpark@aitimes.com