Artificial intelligence (AI) glasses that listen to conversations and tell you what to say have been developed by combining the large language model ‘GPT-4’ with speech recognition and augmented reality (AR) technology.

Tom’s Hardware, a technology news outlet, reported on the 20th (local time) that Stanford University students had developed the AR smart glasses ‘RizzGPT’ using OpenAI’s ChatGPT.

In other words, ChatGPT, a chat tool, has been turned into a portable assistant that can help users interact with other people in everyday life.

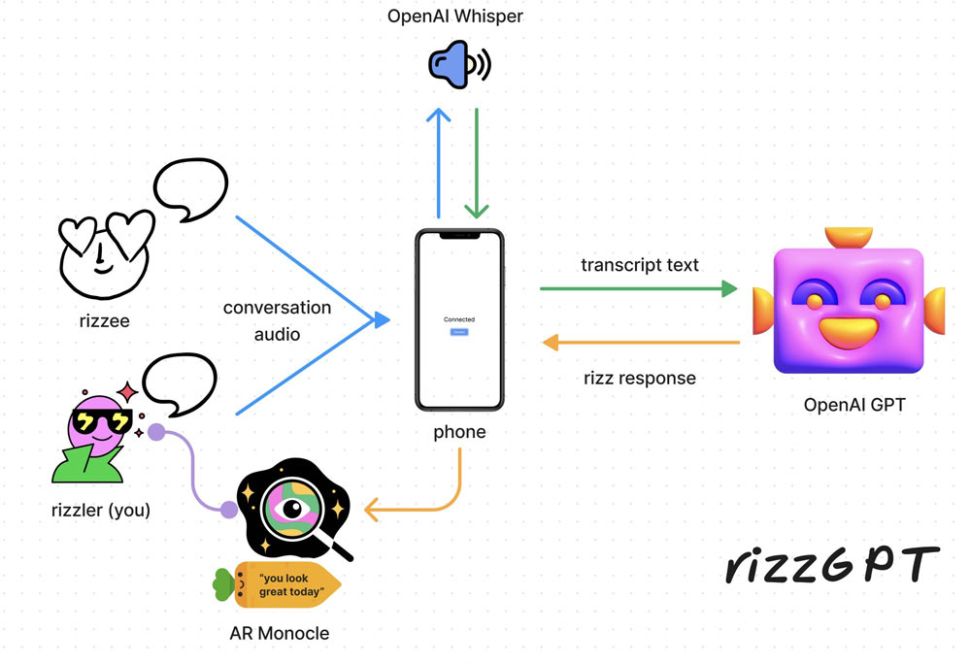

‘RizzGPT’ combines OpenAI’s large language model GPT-4 and its automatic speech recognition model ‘Whisper’ with AR glasses, connected through a smartphone.

It uses GPT-4 to recognize questions, opinions, or images during a conversation and to generate answers in real time, while Whisper converts speech into text.

For the glasses, the team used Brilliant Labs’ clip-on ‘Monocle’ AR device, which includes a camera, a microphone, and a high-resolution display. Like a teleprompter, it shows text that the user can read in real time.
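To illustrate the teleprompter idea, here is a minimal sketch of how a generated answer might be split into small screens for a clip-on display. The article does not say how many characters the Monocle shows at once, so `CHARS_PER_LINE`, `LINES_PER_SCREEN`, and the `paginate` helper are hypothetical placeholders, not part of RizzGPT.

```python
import textwrap
from typing import List

# Hypothetical display geometry: placeholders, not the Monocle's real specs.
CHARS_PER_LINE = 24
LINES_PER_SCREEN = 3


def paginate(text: str,
             width: int = CHARS_PER_LINE,
             lines_per_screen: int = LINES_PER_SCREEN) -> List[List[str]]:
    """Split an answer into screens of at most `lines_per_screen` lines,
    each at most `width` characters, the way a teleprompter pages text."""
    # Word-wrap the answer so no word is cut in the middle.
    lines = textwrap.wrap(text, width=width)
    # Group consecutive lines into fixed-size screens.
    return [lines[i:i + lines_per_screen]
            for i in range(0, len(lines), lines_per_screen)]


if __name__ == "__main__":
    answer = "Great to see you! How did the marathon training go last month?"
    for n, screen in enumerate(paginate(answer), start=1):
        print(f"--- screen {n} ---")
        for line in screen:
            print(line)
```

Wrapping on word boundaries keeps each line readable at a glance; a real device would also need to decide how long each screen stays up before advancing.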

RizzGPT interprets spoken questions picked up by the microphone on the AR glasses, or images captured by the AR camera, and displays the resulting answer on the glasses’ screen.
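The audio path described above (microphone, then speech-to-text, then a language model, then the display) can be sketched as a small pipeline. This is an illustrative assumption, not RizzGPT’s actual code: the `transcribe` and `respond` callables are hypothetical stand-ins for Whisper and GPT-4, their signatures do not mirror any OpenAI API, the `max_history` context cap is invented, and the camera/image path is omitted.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical stand-ins: in RizzGPT these roles are played by Whisper
# (speech-to-text) and GPT-4 (reply generation).
Transcriber = Callable[[bytes], str]
Responder = Callable[[List[str]], str]


@dataclass
class ConversationAssistant:
    transcribe: Transcriber
    respond: Responder
    history: List[str] = field(default_factory=list)
    max_history: int = 10  # hypothetical cap on remembered utterances

    def handle_audio(self, audio_chunk: bytes) -> str:
        """Microphone audio -> text -> suggested reply for the glasses screen."""
        utterance = self.transcribe(audio_chunk).strip()
        if not utterance:
            return ""  # nothing heard; leave the display unchanged
        self.history.append(utterance)
        # Keep only the most recent utterances as context for the model.
        self.history = self.history[-self.max_history:]
        return self.respond(self.history)


if __name__ == "__main__":
    # Toy stubs so the sketch runs offline without any model or API key.
    fake_whisper = lambda audio: audio.decode("utf-8")
    fake_gpt = lambda history: f"Suggested reply to: {history[-1]!r}"

    assistant = ConversationAssistant(fake_whisper, fake_gpt)
    print(assistant.handle_audio(b"So, tell me about yourself."))
```

Passing the models in as plain callables keeps the sketch runnable offline and makes it easy to swap in real speech recognition and language model clients.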

RizzGPT uses multimodal recognition of text, audio, and images to understand what is happening around the user.

RizzGPT demo video (Video: Stanford University)

Live prompts generated on the fly could help people in situations such as preparing for a speech or a job interview, meeting a friend, or ordering food at a restaurant.

For instance, if you run into a friend, GPT-4’s image recognition can identify them, and the glasses can suggest a conversation opener based on the smartphone messages you have exchanged with that friend.

Likewise, when ordering at a restaurant, if you look at or read out the menu and ask for a recommendation, OCR or speech recognition can be used to suggest dishes based on your likes and dislikes and on what is known about their nutritional value.

Chan Park, cpark@aitimes.com