Google has developed a method in which a large language model (LLM) checks the answers of other LLMs through search. The method is said to have achieved higher accuracy than human verification.

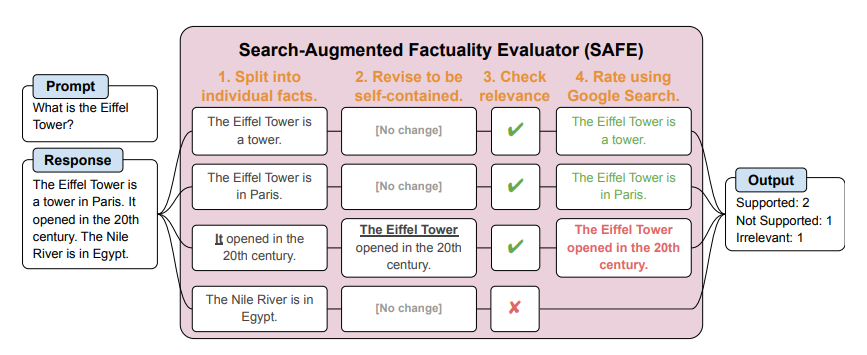

Enterprise Beat reported on the 28th (local time) that a team of artificial intelligence (AI) experts from Google DeepMind has announced a system called 'SAFE (Search-Augmented Factuality Evaluator)'.

The method resembles the way humans use search engines such as Google to track down sources and check whether an LLM's answer is true. The researchers set up a separate LLM and had it verify answers using Google Search.
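The check described above can be pictured as a loop over the individual claims in an answer: search for each claim, then judge it against what the search returns. The following is only a minimal sketch of that idea, not the researchers' code. The function names, the sentence-based claim splitting, and the in-memory `TOY_INDEX` that stands in for Google Search are all assumptions made for illustration; in SAFE these steps are carried out by an LLM and a real search engine.

```python
from typing import Callable, Dict, List


def split_into_claims(response: str) -> List[str]:
    """Hypothetical stand-in for the checker LLM: treat each sentence as one claim."""
    return [s.strip() for s in response.split(".") if s.strip()]


def check_response(
    response: str,
    search: Callable[[str], List[str]],
    judge: Callable[[str, List[str]], bool],
) -> Dict[str, bool]:
    """Search for evidence on each claim and record whether it is judged supported."""
    verdicts: Dict[str, bool] = {}
    for claim in split_into_claims(response):
        evidence = search(claim)
        verdicts[claim] = judge(claim, evidence)
    return verdicts


# Toy stand-ins (assumptions): a tiny in-memory "index" instead of a search engine,
# and a substring match instead of an LLM judgment.
TOY_INDEX = [
    "The Eiffel Tower is in Paris",
    "Water boils at 100 degrees Celsius at sea level",
]


def toy_search(query: str) -> List[str]:
    """Return documents that share at least three words with the query."""
    words = set(query.lower().split())
    return [d for d in TOY_INDEX if len(words & set(d.lower().split())) >= 3]


def toy_judge(claim: str, evidence: List[str]) -> bool:
    """Call a claim supported only if some document contains it verbatim."""
    return any(claim.lower() in doc.lower() for doc in evidence)


result = check_response(
    "The Eiffel Tower is in Paris. The Eiffel Tower is in Rome.",
    toy_search,
    toy_judge,
)
for claim, supported in result.items():
    print(f"{claim!r}: {'supported' if supported else 'not supported'}")
```

Passing `search` and `judge` in as functions keeps the loop itself independent of any particular search engine or model, so the toy stand-ins could be swapped for real ones.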

To test the system, the researchers used a benchmark called 'LongFact' and checked about 16,000 facts contained in the answers of four LLM products, including 'ChatGPT', 'Gemini', 'Claude', and 'PaLM 2'.

As a result, SAFE agreed with crowdsourced human verification results 72% of the time. In particular, when SAFE and the human checks disagreed, SAFE was found to be correct in 76% of cases.
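The two figures measure different things: the first is how often SAFE and the human annotators gave the same verdict, and the second is how often SAFE was right in the cases where they did not. A small sketch of how such rates could be computed is shown below. The function names and the five labels are invented for illustration and are not the study's data.

```python
from typing import List


def agreement_rate(safe: List[bool], human: List[bool]) -> float:
    """Share of facts on which the two sets of verdicts match."""
    if len(safe) != len(human) or not safe:
        raise ValueError("label lists must be non-empty and of equal length")
    return sum(s == h for s, h in zip(safe, human)) / len(safe)


def safe_win_rate(safe: List[bool], human: List[bool], truth: List[bool]) -> float:
    """Among facts where the verdicts differ, share where SAFE matches the reference."""
    disputed = [(s, t) for s, h, t in zip(safe, human, truth) if s != h]
    if not disputed:
        raise ValueError("no disagreements to evaluate")
    return sum(s == t for s, t in disputed) / len(disputed)


# Toy labels (made up): True means "the fact is supported".
safe_labels = [True, True, False, True, False]
human_labels = [True, False, False, False, False]
reference = [True, True, False, False, False]

print(agreement_rate(safe_labels, human_labels))            # 3 of 5 verdicts match
print(safe_win_rate(safe_labels, human_labels, reference))  # SAFE right in 1 of 2 disputes
```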

The researchers also found that larger models generally make fewer factual errors, but that even the best-performing models output a significant number of false facts.

In particular, the method's main strength was found to be its cost-effectiveness. SAFE is said to be about 20 times cheaper than human checking. As the amount of information generated by LLMs continues to grow explosively, it is said to be drawing attention as a cost-effective and scalable way to verify that output.

The researchers have published SAFE on GitHub, open for everyone to use.

Reporter Lim Da-jun ydj@aitimes.com