American artificial intelligence (AI) startup Got It AI has released a new kind of small language model. Compared with existing large language models (LLMs), it’s characterised by significantly lower operating costs and greater efficiency.

VentureBeat reported on the 30th (local time) that Got It AI has released ‘ELMAR’, a small language model for businesses that can be applied to chatbot applications.

Got It AI introduced ELMAR as “notably smaller than ‘GPT-3’ and an inexpensive solution that runs on-premises (in-house) rather than in the cloud.”

Such small-scale LLMs are being released one after another, starting with Meta’s ‘LLaMA’, which has 7 billion parameters. Others include Stanford University’s ‘Alpaca’, Databricks’ ‘Dolly’, and an open-source model from Cerebras.

OpenAI also introduced a ‘GPT-3.5 Turbo’ model for business use that’s 10 times cheaper than the existing ChatGPT.

The product Got It AI has released this time is characterised by its ability to improve performance through a fine-tuning process. Its training and operating costs are far lower than those of large language models.

Peter Relan, Chairman of Got It AI, said, “Not all companies need a big and powerful model, and many do not want their data to leave the company. It’s a model that allows us to catch up.”

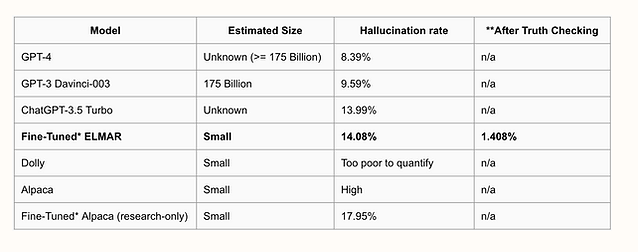

To show that performance doesn’t drop, the company ran a benchmark test for ‘hallucination rate (incorrect answer rate)’ on 100 datasets. OpenAI’s ‘ChatGPT (GPT-3.5 Turbo)’, ‘GPT-3’, and ‘GPT-4’, along with Dolly and Alpaca, were compared and evaluated.

Test results show that small language models perform significantly worse on certain tasks unless they’re fine-tuned.

In response, Got It AI added, “We added a fine-tuning process called ‘Truth Checker’ to compensate for this, and after fine-tuning, we obtained the same results as GPT-3.5 Turbo [...] reduced to one-third.”

Reporter Lim Dae-jun of AI Times ydj@aitimes.com