Because the importance of mathematical reasoning abilities of huge language models (LLMs) is emphasized, related technologies are step by step developing. This time, the model with only 20 billion parameters (20B) surpassed the performance of Google's 'Minerva (MInerva 62B)', which was previously considered the strongest.

Mark Tech Post reported on the twenty second (local time) that researchers on the Shanghai AI Laboratory, Tsinghua University, Fudan University, and the University of Southern California have unveiled a large-scale language model (LLM) called 'InternLM-Math'.

In line with this, this model is predicated on Shanghai AI Lab's open source large-scale language model (LLM) 'InternLM2', which is known for processing 300,000 Chinese characters.

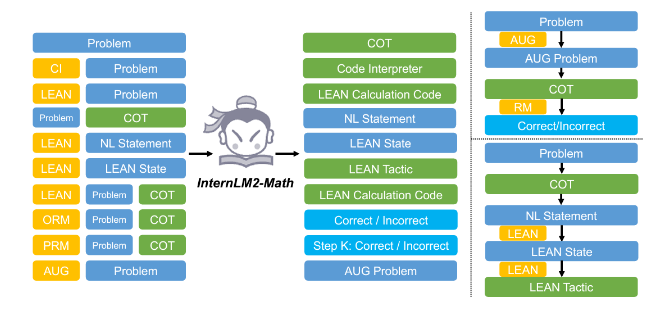

The researchers announced that they integrated functions resembling chain of thought (CoT), compensation modeling, formal inference, and data augmentation inside the sequence transformation model (seq2seq) framework. This comprehensive approach allows a wide selection of mathematical tasks to be handled with exceptional accuracy and depth, he explained.

He also said that he improved his reasoning ability through prior training specializing in mathematical data, and that CoT specifically allowed him to approach problems step-by-step by reflecting the human considering process. Here, it was introduced that coding integration technology was applied to unravel complex problems and create proofs naturally and intuitively.

In consequence of the benchmark, this model surpassed the prevailing open source powerhouses Eleuther AI's 'Llemma 34B' and Minerva 62B, which have larger parameters. Rema can also be a model that appeared in October last yr and achieved similar performance with fewer parameters than Minerva.

The benchmarks included ▲GSM8K (level of 8,500 elementary schools) ▲MATH (level of 12,500 high-level math competitions) ▲MiniF2F (Olympiad level). Specifically, with none high-quality tuning, it scored 30.3 points on the MiniF2F test set, which is one in every of the highest tier. He emphasized that he showed his skills.

After all, this model isn’t the perfect mathematical model. Amongst existing LLMs, the one which received the best math-related benchmark rating is 'GPT-4'. Nevertheless, GPT-4 is thought to have 175 billion parameters (175B), and InternLM-Math, which is simply 1/tenth the scale, claimed to have caught as much as 90% of GPT-4.

Also, on the seventeenth of last month, just before publication of the paper, Google DeepMind researchers released 'AlphaGeometry', which solved 25 out of 30 geometry problems within the International Mathematics Olympiad, as open source.

As well as, last month, domestic startup Upstage developed 'MathGPT' with 13 billion parameters (13B) and announced that it surpassed Microsoft's 'ToRA 13B' model within the benchmark.

As such, mathematical models have been rapidly becoming more compact and high-performance in recent months.

Shanghai AI Lab said, “InternLM-Mitsu has the power to synthesize recent problems, confirm solutions, and improve itself through data expansion, establishing itself as a pivotal tool in the continued quest to deepen understanding of mathematics.” “He said.

Reporter Lim Da-jun ydj@aitimes.com