With Nvidia GPUs in short supply because of the generative artificial intelligence (AI) boom, US startups are drawing attention by building AI supercomputers around chips they developed themselves.

Reuters reported on the 20th (local time) that Cerebras, an AI semiconductor startup in Silicon Valley, signed a contract worth about $100 million (roughly 127.4 billion won) to supply an AI supercomputer to G42, a technology company in the United Arab Emirates (UAE).

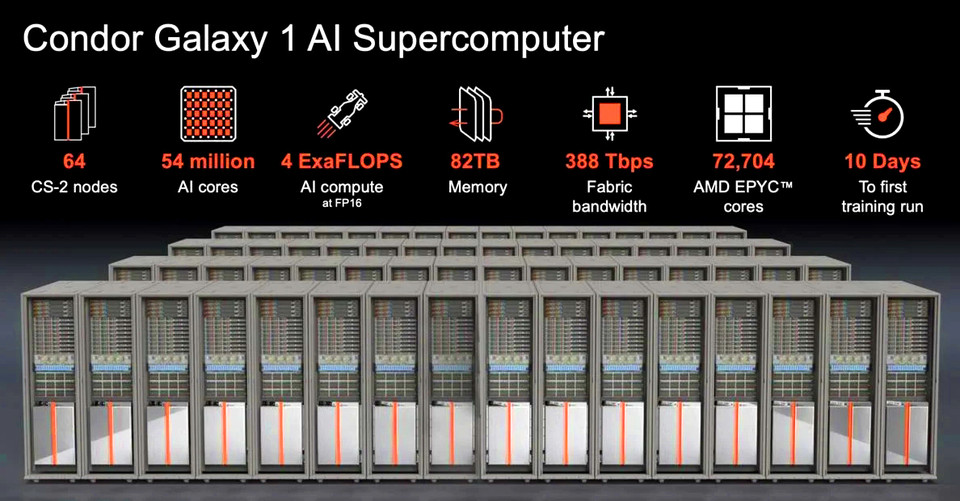

According to the report, the system supplied this time is part of ‘Condor Galaxy’, a supercomputer network through which G42 will interconnect nine AI supercomputers. Each AI supercomputer in Condor Galaxy links 64 Cerebras CS-2 systems into one machine, providing 4 exaflops of computing power with 54 million cores.

Cerebras said it plans to build two more units in the US in early 2024 for G42’s ‘Condor Galaxy’ project, and is in talks to build up to six more by the end of 2024.

The deal comes as cloud computing providers around the world ride the generative AI boom and look for alternatives to Nvidia GPUs, which are in short supply.

Meanwhile, although large tech companies such as Nvidia have built AI supercomputers, it is unusual for a startup to develop one.

This AI supercomputer is equipped with the ‘WSE-2’, a very large AI chip developed by Cerebras.

Typically, hundreds or thousands of chips are manufactured on a 12-inch (30 cm) silicon disk called a wafer, which is then sliced into individual chips for packaging. Cerebras’ WSE-2 instead uses an entire wafer as a single chip rather than cutting it apart. Cerebras calls it the world’s largest AI processor, able to handle neural network models with 120 trillion parameters, more than the number of neurons in a human brain. It is 56 times larger than the largest GPU, with 2.6 trillion transistors and 850,000 computing cores.

Each of the 64 CS-2 systems that make up this AI supercomputer is equipped with one WSE-2 chip.
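As a rough consistency check, the figures reported above multiply out as follows. This assumes, as the article states, one WSE-2 (850,000 cores) per CS-2, 64 CS-2 systems and 4 exaflops per supercomputer, and that all nine planned Condor Galaxy units (the first, two more in early 2024, and up to six more by the end of 2024) are actually built:

$$
\begin{aligned}
64 \times 850{,}000 &= 54{,}400{,}000 \approx 54 \text{ million cores per supercomputer} \\
1 + 2 + 6 &= 9 \text{ supercomputers} \\
9 \times 4 \text{ exaflops} &= 36 \text{ exaflops across the full network}
\end{aligned}
$$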

Because of its size, a single WSE-2 provides the computing power of hundreds of conventional AI chips.

“We can train AI systems 100 to 1,000 times faster than with existing chips,” said Cerebras CEO Andrew Feldman.

Cerebras aims to be an alternative to Nvidia in an AI chip market that Nvidia dominates. Companies around the world, led by big tech, are developing AI, and GPUs made by Nvidia are in short supply.

Abu Dhabi-based G42, a tech group with nine operating companies that include data center and cloud services businesses, said it plans to use Cerebras systems to sell AI computing services to healthcare and energy companies.

Reporter Park Chan cpark@aitimes.com
