An artificial intelligence (AI) system has been developed that can read people’s thoughts and turn them into sentences.

Researchers at the University of Texas in the United States said in the scientific journal Nature Neuroscience on the 2nd (local time) that they have developed an AI system that measures human brain activity with functional magnetic resonance imaging (fMRI) and reconstructs thoughts or imagined content into sentences.

fMRI is a technology that measures brain activity by using electromagnetic waves to detect changes related to cerebral blood flow. Although there is no need to insert electrodes or implants into the head, interpretation takes longer and is less real-time than with devices that directly record neuron signals.

The researchers addressed this problem by using an AI model to capture the gist of what a person heard or thought, rather than deciphering it word by word.

The researchers first asked three participants to listen to audio dramas or podcasts while inside the fMRI scanner. The fMRI scan data recorded over a total of 16 hours, together with the original sentences, were then used with OpenAI’s large language model GPT-1 to build an algorithm called the ‘Semantic Decoder’ that interprets thoughts from fMRI data.

Next, brain activity was measured while participants listened to a new story that had not been used during training, and the decoder interpreted the resulting brain activity data.

The results showed that the decoder was able to capture the words essential to understanding the meaning of the new story and, in some cases, even to generate the exact words and phrases used in the story.

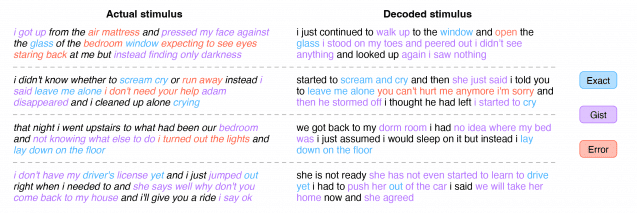

For instance, when a participant heard “I don’t have a driver’s license yet,” the decoder rendered it as “She hasn’t even started learning to drive yet.” Likewise, when the participant heard “I didn’t know whether to scream, cry, or run. Instead, I said ‘Leave me alone,’” the decoder rendered it as “I started screaming and crying and then said ‘Leave me alone.’”

The researchers said that the decoder was particularly good at capturing the gist of the story even when its output did not match the exact words. The technology is not a way to translate words literally, but a way to convey meaning.

In a later experiment, the same method was used to reproduce, with high accuracy, the content of a silent movie that participants watched inside the fMRI scanner. It can even reproduce what a person has imagined in their head.

The researchers found that the AI was able to dig deeper than language, even though the participants were not asked to speak. This means that the AI can understand thoughts, not only words.

The researchers hope that this technology can help patients with brain damage, stroke, or general paralysis communicate with the people around them.

Meanwhile, some have raised concerns that the technology could be used to steal people’s thoughts. The researchers note that as these technologies advance, policies may be needed to protect mental privacy so that they are not misused.

Chan Park, cpark@aitimes.com
