It is noteworthy that Apple has adopted on-device models that run independently on its products, instead of an artificial intelligence (AI) service that connects to a large language model (LLM) in the cloud, as Microsoft (MS) and Google have.

Apple introduced new products such as its first mixed reality (MR) headset, ‘Vision Pro’, and its latest chip, ‘M2 Ultra’, in the keynote speech at the Worldwide Developers Conference 2023 (WWDC 2023) on the 5th (local time). At the same time, it introduced more than 10 AI technologies that have been incorporated into its products.

The highlight of the day’s presentation was the Vision Pro, but AI also made up a good portion of the presentation under a different name. Apple did not mention the word ‘AI’ even once, instead using the somewhat academic terms ‘transformer’ and ‘machine learning’ (ML).

Specifically, instead of talking about specific AI models, training data, or ways to improve them in the future, Apple simply referred to the AI features included in its products.

“We integrate AI capabilities into our products, but people don’t think of it as AI,” said Apple CEO Tim Cook.

Apple has a different approach to AI than Google, Microsoft, or OpenAI. Its strategy is to permeate AI throughout its products without putting AI technology in the foreground.

This is because it is a company based on hardware, specifically the iPhone and its ecosystem. The analysis is that it is prioritizing sales of iOS-based devices rather than reorganizing search like Google or improving productivity software like Microsoft.

OpenAI’s ChatGPT may have logged more than 100 million users within two months of launch, but Apple’s logic is that it offers AI features that a billion iPhone owners use every day.

Therefore, rather than cloud-based AI that builds large models with supercomputers and large-scale data, this event focused on on-device AI that runs directly on Apple devices.

Based on this, Apple introduced improved auto-correction in iOS 17, Dictation and Live Voicemail functions, a Personalized Volume function for AirPods, an improved Smart Stack on watchOS, a new iPad lock screen that animates Live Photos, prompt suggestions in the new Journal app, and the ability to create 3D avatars for video calls in Vision Pro.

AI functions like these can run directly on Apple devices such as the iPhone, whereas models like ChatGPT require hundreds of expensive GPUs working together. By allowing models to run on phones, Apple not only requires less data to run them, but also avoids data privacy concerns.

He also stressed that Apple is loading new AI circuits and GPUs into its chips every year, and that it can easily adapt to changes and new technologies by controlling the entire architecture. All this on-device AI processing is a breeze for Apple, thanks to a component of its Apple Silicon chips called the Neural Engine, which is specifically designed to accelerate ML applications.

New to iOS 17, Auto-Correction leverages a transformer model for word prediction, offering suggestions that complete a word or an entire sentence when you press the space bar. The transformer model runs on-device and protects your privacy as you write.

Dictation lets users tap the small microphone icon on the iPhone’s keyboard and start speaking, and it turns their speech into text. In iOS 17, the dictation function uses the Neural Engine to run a transformer-based speech recognition model.

The Live Voicemail feature in the iPhone’s native Phone app means that if someone calls an iPhone user, cannot reach them, and starts leaving a voicemail, the caller’s words are transcribed into text on the recipient’s screen in real time so the recipient can decide whether to answer the call. This feature is powered by the Neural Engine and runs entirely on the device, and this information is not shared with Apple.

Apple also introduced a new app called Journal that enables personal text and image journaling, like a kind of interactive diary, and can be locked and encrypted on the iPhone. The new Journal app in iOS 17 automatically pulls recent photos, workouts and other activities from users’ phones, allowing users to edit the content and add text and new multimedia as desired to create a digital journal.

In addition, advanced ML models are used to generate additional frames for Live Photos and to identify fields in PDFs that can be filled in with information such as names, addresses and emails from contacts. The Personalized Volume feature for AirPods uses ML to understand environmental conditions and listening preferences over time and automatically adjusts the volume of the AirPods to the user’s preference. An Apple Watch widget feature called Smart Stack uses ML to display relevant information when needed.

Apple also said that the moving image of the user’s eyes shown on the front of the Vision Pro headset is part of a special 3D avatar created by scanning the face. After a quick enrollment process using the Vision Pro’s front-facing sensors, the system uses an advanced encoder-decoder neural network to create a 3D ‘digital persona’ on-device.

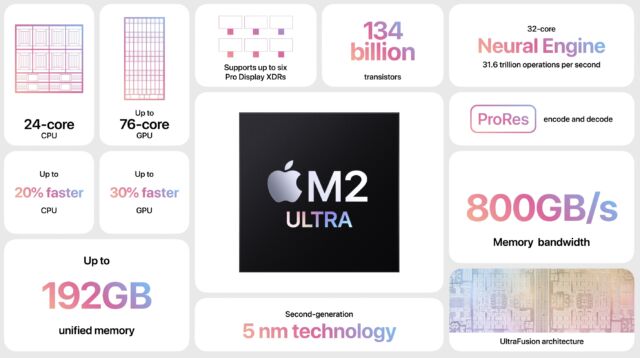

Finally, Apple unveiled the ‘M2 Ultra’, an Apple Silicon chip with 24 CPU cores, up to 76 GPU cores and a 32-core Neural Engine rated at 31.6 trillion operations per second. The Neural Engine is 40% faster than that of its predecessor, the M1 Ultra.

The M2 Ultra also supports 192GB of unified memory, 50% more than the M1 Ultra. This allows a single system to train large ML workloads, such as large transformer models, that the most powerful discrete GPUs cannot handle due to lack of memory.

A larger amount of RAM here means larger and more capable AI models can fit in memory. The new ‘Mac Studio’ and ‘Mac Pro’ can be used for AI training in desktop and tower form factors.
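
As a rough illustration of the memory point, the weights of a model take approximately its parameter count multiplied by the bytes used per parameter. The sketch below is a back-of-the-envelope estimate only. The 70-billion-parameter model and 16-bit precision are hypothetical examples, not figures from Apple’s presentation, and the 128GB value for the M1 Ultra is derived from the “50% more” statement (192 / 1.5). Training needs considerably more memory than the weights alone.

```python
# Back-of-the-envelope estimate of the memory needed to hold model weights.
# Model size and precision are illustrative assumptions, not Apple figures.

GB = 1e9  # decimal gigabytes

M2_ULTRA_MEMORY_GB = 192                        # from the article
M1_ULTRA_MEMORY_GB = M2_ULTRA_MEMORY_GB / 1.5   # derived: 192GB is 50% more


def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory for the weights only (no activations, gradients, or optimizer state)."""
    return num_params * bytes_per_param / GB


def fits(num_params: float, bytes_per_param: int, memory_gb: float) -> bool:
    """True if the weights alone fit within the given memory budget."""
    return weight_memory_gb(num_params, bytes_per_param) <= memory_gb


if __name__ == "__main__":
    params = 70e9        # hypothetical 70-billion-parameter model
    bytes_per_param = 2  # assumed 16-bit weights
    needed = weight_memory_gb(params, bytes_per_param)
    print(f"Weights need about {needed:.0f} GB")
    print("Fits in M2 Ultra memory:", fits(params, bytes_per_param, M2_ULTRA_MEMORY_GB))
    print("Fits in M1 Ultra memory:", fits(params, bytes_per_param, M1_ULTRA_MEMORY_GB))
```

Under these assumptions the weights alone come to about 140 GB, which fits within 192GB but not within 128GB.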

This has great implications for big tech companies such as Google, Meta, MS, and IBM, which have jumped into the business of lightweight large language models. In particular, Meta appears to be carving out a niche market with its open-source lightweight approach.

The analysis is that if they succeed in bringing all of their lightweight models to mobile as Apple has, the generative AI industry and the mobile telecommunications industry may also change rapidly.

In addition, some are watching to see whether Apple will adopt generative AI. iOS, a powerful mobile OS, is expected to be the key to reorganizing the industry.

Meanwhile, Apple CEO Tim Cook said in an interview with ABC News that day, “I personally use ChatGPT, and I’m looking forward to its unique applications, and Apple is looking closely at it.”

Reporter Park Chan cpark@aitimes.com