Apple has unveiled a latest language model that supports context-sensitive conversations with voice assistants reminiscent of Siri by understanding various sorts of reference information reminiscent of phone numbers, URL links, and email addresses displayed on the screen.

VentureBeat reported on the first (local time) about Apple's latest language model 'ReALM', which may understand various references displayed on the screen in addition to references in conversation and background context for natural interaction with voice assistants. thesis Posted in online archiveHe said he did it.

When talking to a voice assistant like Siri, users can reference any variety of contextual information to interact with, including background tasks, screen data, and conversation-related entities.

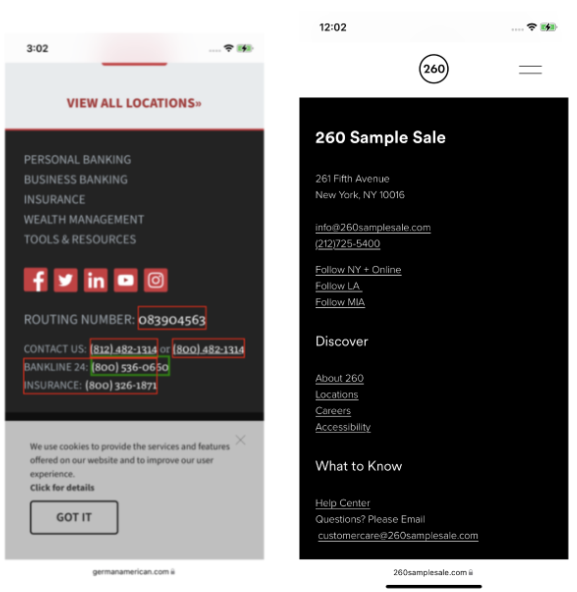

For instance, after a user requests a close-by pharmacy and Siri presents an inventory of nearby pharmacies on the screen, the user can ask again to 'Call the number at the underside.' At the moment, the present Siri couldn’t perform this request, but Siri with Realm can understand the context and make a call to the pharmacy listed at the underside of the list data on the screen.

Human speech often includes ambiguous objects reminiscent of 'they' or 'it'. While humans can clearly understand what these references discuss with based on context, this has been difficult for machines until now.

Reference resolution signifies that a pc program performs an motion based on ambiguous linguistic input, reminiscent of how the user says 'this' or 'that'.

This sort of reference resolution is an important ability for voice assistants to know various kinds of context and naturally communicate or successfully process user requests.

These contexts include contexts related to screen entities, dialog entities, and background entities. A screen entity is an entity that’s currently displayed on the user's screen. Examples include reference information reminiscent of phone numbers, URL links, and email addresses.

A conversation entity refers to a reference entity related to conversation content. For instance, when a user says 'Call Mom', Mom's contact information becomes the related entity.

A background entity is an entity running within the background, which might be an alarm that starts ringing, or music that plays within the background.

Realm transforms the complex task of reference resolution right into a language modeling problem, resolving various sorts of references without sacrificing performance.

While GPT-4 relies on image evaluation to know screen information, Realm simplifies its approach by converting every little thing to text. For screen entities, this involves dividing the screen right into a grid and encoding the capture data of the entities on the screen as text together with their relative spatial positions.

In comparison with the present system, the smallest model 'Realm-80M' with 80 million parameters showed significant improvement for various sorts of references, including greater than 5% performance improvement for screen references.

As well as, in benchmark tests with GPT-3.5 and GPT-4, the Realm-80M model recorded similar performance to GPT-4, with 'Realm-250M' with 250 million parameters and 'Realm-1B' with 1 billion parameters. ' and the 'Realm-3B' model with 3 billion parameters all showed outstanding performance, surpassing GPT-4.

The smallest Realm-80M model operates similarly to GPT-4 with much fewer parameters, making it suitable for on-device AI.

The discharge of this research suggests that Apple is repeatedly investing in making Siri and other products more familiar and contextually aware. In other words, the intention is to make use of this feature to enhance the functionality of the iPhone.

Nevertheless, the researchers warned that there are limitations to counting on automated screen evaluation. It’s identified that processing complex visual references, reminiscent of distinguishing between multiple images, ultimately requires integrating computer vision and multimodal technologies.

Reporter Park Chan cpark@aitimes.com