An evaluation has found that generative artificial intelligence (AI) models such as OpenAI’s ‘GPT-4’ and Google’s ‘PaLM 2’ are all likely to be subject to regulation under the European Union’s (EU) AI law.

Euronews reported on the 10th (local time) that Stanford University’s Center for Research on Foundation Models announced the results of its examination of whether the foundation models of 10 companies meet the requirements of the Artificial Intelligence Act (AIA).

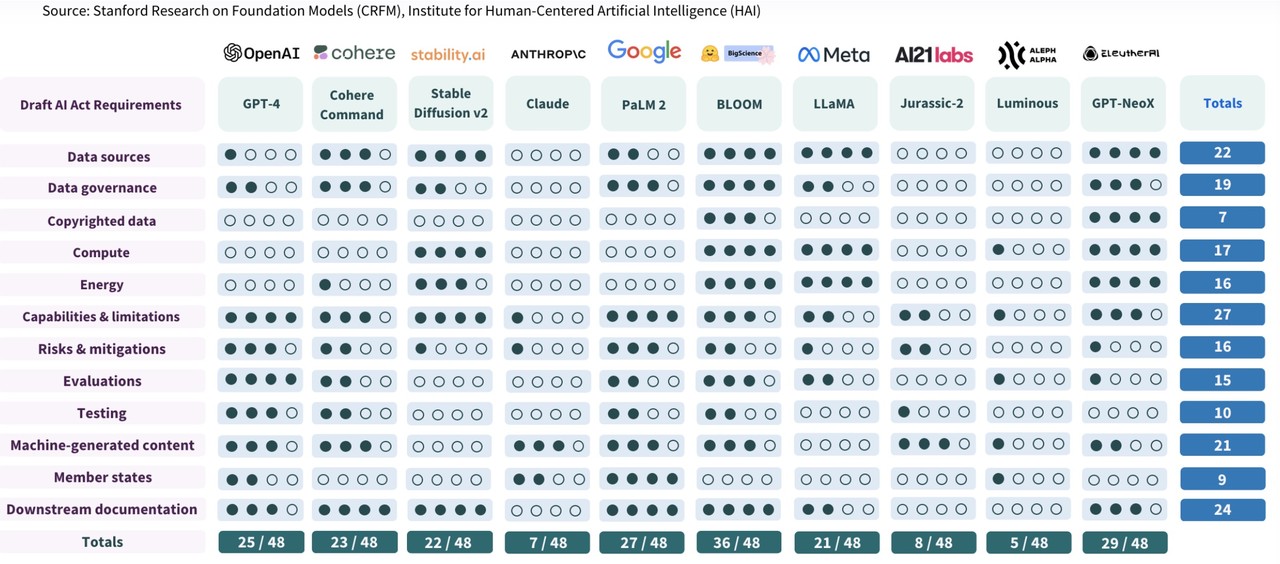

Of the 22 requirements in the AIA, each model was assessed on the 12 that can be evaluated using publicly available information. These requirements cover data transparency, copyright disclosure, disclosure and management of energy consumption, risk disclosure and mitigation measures, and labeling of AI-generated content.

The evaluation found that no model satisfies all the requirements of the AIA. ‘Bloom’, an open-source language model from Hugging Face, received the highest score: 36 points out of 48, equivalent to 75 out of 100.

‘PaLM 2’ and ‘GPT-4’, along with EleutherAI’s large language model ‘GPT-NeoX’, Cohere’s text generation model ‘Command’, Stability AI’s image generation model ‘Stable Diffusion’, and Meta’s language model ‘LLaMA’, scored around 50 points.

In addition, Anthropic’s AI chatbot ‘Claude’, Israeli startup AI21 Labs’ language model ‘Jurassic-2’, and German startup Aleph Alpha’s AI model ‘Luminous’ scored lower than 25 points.

Based on the evaluation, the research center pointed out that no models disclosed the copyright information of their training data other than the open-source ‘Bloom’ and ‘GPT-NeoX’. More than half do not disclose energy use, and although the law requires disclosure and explanation of model risks that cannot be mitigated, none of the models do so.

The research center concluded that the transparency of AI foundation models is generally poor, and predicted that the enactment and implementation of the AIA would bring about positive changes.

The final text of the AIA is currently being refined through trilateral negotiations between the European Commission, the European Parliament and the Council of Ministers. Some of the bill’s contents may change during this negotiation process, which is targeted for completion by the end of this year. In this regard, the research center advised that policymakers and AI model providers should work to secure transparency.

In particular, the center judged that the AI models could comply with up to 90% of the AIA’s requirements if their providers worked on improving transparency.

Reporter Jeong Byeong-il jbi@aitimes.com