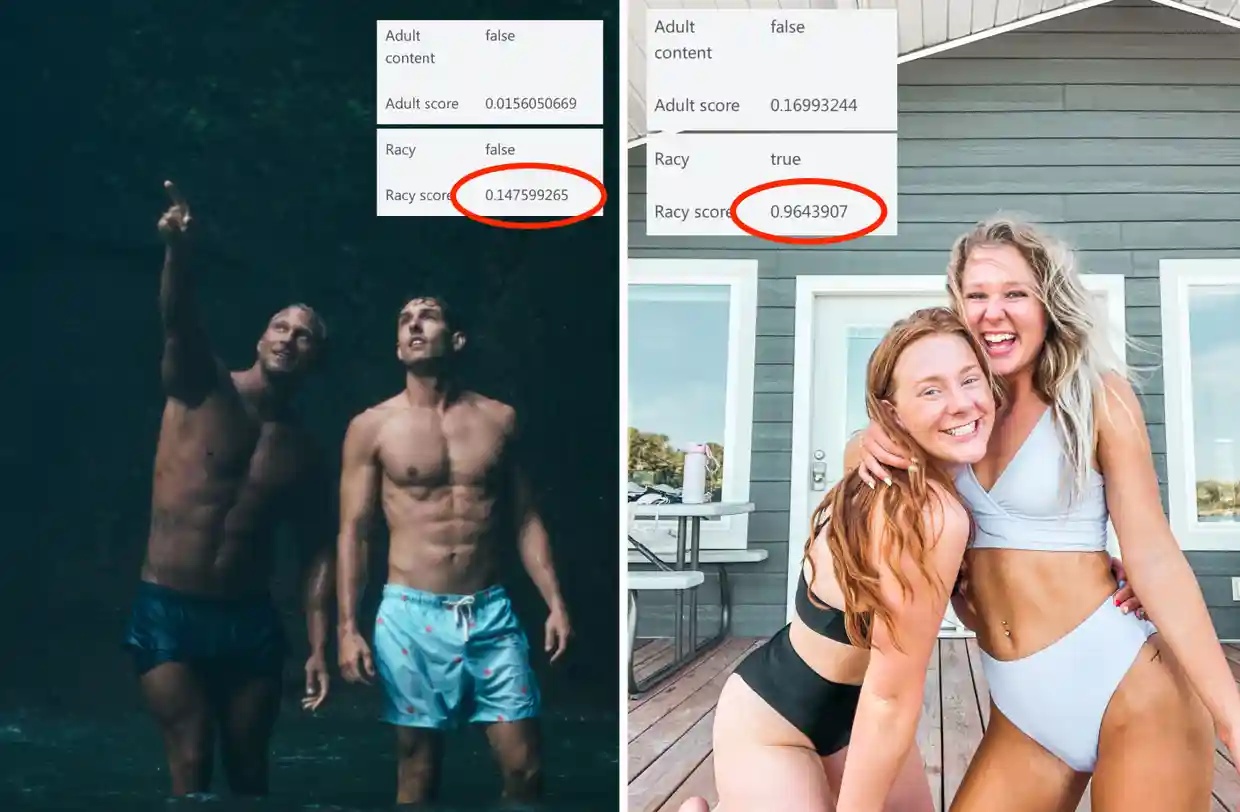

An investigation has found that nearly all of the artificial intelligence (AI) algorithms it tested have a strong tendency to rate images showing women's bodies as sexually suggestive ("racy"), regardless of context.

As a result, there are concerns that social media platforms are suppressing the exposure of images that show women's bodies. Some point out that this reflects prejudice against women.

The Guardian reported on the 8th (local time) that New York University professor Hilke Schellmann and AI entrepreneur Gianluca Mauro found this bias after testing how AI algorithms from companies such as Google and Microsoft (MS) judge whether an image is racy.

Professor Schellmann and her team used hundreds of photos as data, including photos of men and women exercising in underwear and medical images showing exposed bodies, such as the upper body of a woman diagnosed with breast cancer or the belly of a pregnant woman. In the experiment, the AI tools rated all of the pictures of women as suggestive.

For a photo of a woman stretching on the beach in long pants and a bra, Google's AI gave the highest rating, 5 on a 5-point scale, and Microsoft's AI rated it 98% racy.

By contrast, photos of shirtless men exercising indoors scored 3 points and 3%, respectively.
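The two companies report their judgments on different scales, so "5 points" and "98%" are not the same kind of number. The sketch below assumes the Google tool tested was Cloud Vision's SafeSearch detection, whose racy rating is a five-level likelihood (VERY_UNLIKELY to VERY_LIKELY) rather than a percentage; the photo labels are illustrative, and the scores are the ones reported above.

```python
from enum import IntEnum


class Likelihood(IntEnum):
    """Five-level likelihood scale, modelled on Google Cloud Vision SafeSearch.

    The real enum also has UNKNOWN = 0, omitted here for brevity.
    """
    VERY_UNLIKELY = 1
    UNLIKELY = 2
    POSSIBLE = 3
    LIKELY = 4
    VERY_LIKELY = 5


# Scores reported in the article; photo labels are illustrative.
google = {
    "woman stretching on the beach": Likelihood(5),
    "men exercising indoors": Likelihood(3),
}
# Microsoft's tool reports a score between 0 and 1, shown as a percentage.
microsoft = {
    "woman stretching on the beach": 0.98,
    "men exercising indoors": 0.03,
}

for photo in google:
    # An ordinal level and a probability-like score side by side.
    print(f"{photo}: Google={google[photo].name}, Microsoft={microsoft[photo]:.0%}")
```

On this reading, the woman's photo sits at the top of Google's scale (VERY_LIKELY), while the men's photo sits in the middle (POSSIBLE) even though Microsoft scored it at only 3%.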

In addition, when Mauro photographed himself shirtless in long pants, Microsoft's algorithm rated the image less than 22% racy, but when he put on a bra, the score jumped to 97%. A photo of him holding a bra next to himself was rated 99%.

The researchers also confirmed that social media platforms using these algorithms suppress the exposure of images showing women's bodies through "shadowbanning," which means limiting the reach of content without notifying the person who posted it.
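To make the mechanism concrete, here is a minimal, hypothetical sketch of how a feed could shadowban content using a classifier's racy score. The `RACY_THRESHOLD` value, the `Post` type, and `visible_in_feed` are all invented for illustration; platforms do not publish how they use these scores. The scores are the Microsoft figures reported in the article.

```python
from dataclasses import dataclass

# Hypothetical cut-off; real platforms do not disclose their thresholds.
RACY_THRESHOLD = 0.5


@dataclass
class Post:
    caption: str
    racy_score: float  # classifier output in [0, 1]; 0.98 means "98% racy"


def visible_in_feed(posts: list[Post], threshold: float = RACY_THRESHOLD) -> list[Post]:
    """Return only the posts scored below the threshold.

    Posts at or above the threshold are dropped silently: the author is
    not told, which is what separates a shadowban from a takedown.
    """
    return [p for p in posts if p.racy_score < threshold]


posts = [
    Post("woman stretching on the beach in long pants and a bra", 0.98),
    Post("shirtless men exercising indoors", 0.03),
    Post("Mauro in long pants wearing a bra", 0.97),
    Post("Mauro holding a bra next to himself", 0.99),
]

for p in visible_in_feed(posts):
    print(p.caption)
```

With any threshold between 0.03 and 0.97, only the men's photo would reach viewers, and none of the other authors would receive a notice.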

For example, when the researchers posted photos of men and of women wearing underwear on the social network LinkedIn, the photo of women drew only 8 views in an hour, while the photo of men drew 655, more than 80 times as many.

There are many examples of people harmed by shadowbanning. Australian photographer Bec Wood has posted work on Instagram since 2018 showing intimate moments between mother and child, such as breastfeeding, but was soon banned from posting. She said this led her to self-censor her photos.

Carolina Are, a pole dance instructor and university researcher in the UK, has recruited students for her dance classes through Instagram, but found that since 2019 some of her photos have been deleted or no longer appear in hashtag searches.

She stressed that shadowbanning harms not only her but also people with disabilities who earn a living by selling art on social media.

Margaret Mitchell, chief ethics scientist at AI developer Hugging Face, said the algorithms used by tech giants and social media platforms are reproducing societal prejudices. She pointed out the harm, saying, "It may make women feel that they should cover themselves more than men, which in turn puts social pressure on women."

Reporter Jeong Byeong-il jbi@aitimes.com