Since generative AI began to garner public interest, the computer vision research field has deepened its interest in developing AI models capable of understanding and replicating physical laws; however, the challenge of teaching machine learning systems to simulate phenomena such as gravity and liquid dynamics has been a significant focus of research efforts for at least the past five years.

Since latent diffusion models (LDMs) came to dominate the generative AI scene in 2022, researchers have increasingly focused on the LDM architecture’s limited capacity to understand and reproduce physical phenomena. Now, this issue has gained additional prominence with the landmark development of OpenAI’s generative video model Sora, and the (arguably) more consequential recent release of the open source models Hunyuan Video and Wan 2.1.

Reflecting Badly

Most research aimed at improving LDM understanding of physics has focused on areas such as gait simulation, particle physics, and other aspects of Newtonian motion. These areas have attracted attention because inaccuracies in basic physical behaviors would immediately undermine the authenticity of AI-generated video.

However, a small but growing strand of research concentrates on one of LDM’s biggest weaknesses – its relative inability to produce accurate reflections.

Source: https://arxiv.org/pdf/2409.14677

This issue was also a challenge during the CGI era and remains so in the field of video gaming, where ray-tracing algorithms simulate the path of light as it interacts with surfaces. Ray-tracing calculates how virtual light rays bounce off or pass through objects to create realistic reflections, refractions, and shadows.

However, because each additional bounce greatly increases computational cost, real-time applications must trade off latency against accuracy by limiting the number of allowed light-ray bounces.

![A representation of a virtually-calculated light-beam in a traditional 3D-based (i.e., CGI) scenario, using technologies and principles first developed in the 1960s, and which came to culmination between 1982-93 (the span between Tron [1982] and Jurassic Park [1993]). Source: https://www.unrealengine.com/en-US/explainers/ray-tracing/what-is-real-time-ray-tracing](https://www.unite.ai/wp-content/uploads/2025/04/ray-tracing.jpg)

Source: https://www.unrealengine.com/en-US/explainers/ray-tracing/what-is-real-time-ray-tracing

For instance, depicting a chrome teapot in front of a mirror could involve a ray-tracing process where light rays bounce repeatedly between reflective surfaces, creating an almost infinite loop with little practical benefit to the final image. In most cases, a reflection depth of two to three bounces already exceeds what the viewer can perceive. A single bounce would result in a black mirror, since the light must complete at least two journeys to form a visible reflection.

Each additional bounce sharply increases computational cost, often doubling render times, making faster handling of reflections one of the most significant opportunities for improving ray-traced rendering quality.
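The bounce limit described above can be sketched in a few lines of Python. This is a toy illustration, not code from any renderer: it uses the standard mirror-reflection formula r = d - 2(d·n)n, bounces a single ray between two facing mirrors until a depth cap stops it, and includes a hypothetical cost model (`relative_render_cost`) that simply assumes render time doubles with each bounce beyond the first, following the "often doubling" figure given above.

```python
def reflect(direction, normal):
    """Mirror a direction vector about a unit surface normal: r = d - 2(d.n)n."""
    dot = sum(d * n for d, n in zip(direction, normal))
    return tuple(d - 2.0 * dot * n for d, n in zip(direction, normal))


def bounce_between_mirrors(max_depth):
    """Bounce a ray between two facing mirrors until the depth cap stops it.

    Without the cap, this loop would never terminate, which is the
    chrome-teapot-in-front-of-a-mirror situation in miniature.
    """
    direction = (1.0, 0.0, 0.0)
    bounces = 0
    while bounces < max_depth:
        # The mirror the ray is heading toward has a normal facing back at the ray.
        normal = (-1.0, 0.0, 0.0) if direction[0] > 0 else (1.0, 0.0, 0.0)
        direction = reflect(direction, normal)
        bounces += 1
    return bounces, direction


def relative_render_cost(depth, growth=2.0):
    """Hypothetical cost model: cost relative to a single bounce, assuming
    each extra bounce multiplies render time by `growth`."""
    return growth ** (depth - 1)
```

Under this assumed model, raising the cap from one bounce to three makes a frame four times as expensive, which is why real-time renderers keep the cap low.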

Naturally, reflections occur, and are essential to photorealism, in far less obvious scenarios – such as the reflective surface of a city street or a battlefield after the rain; the reflection of the opposing street in a shop window or glass doorway; or in the glasses of depicted characters, where objects and environments may be required to appear.

Image Problems

For this reason, frameworks that were popular prior to the advent of diffusion models, such as Neural Radiance Fields (NeRF), and some more recent challengers such as Gaussian Splatting, have had their own struggles to enact reflections in a natural way.

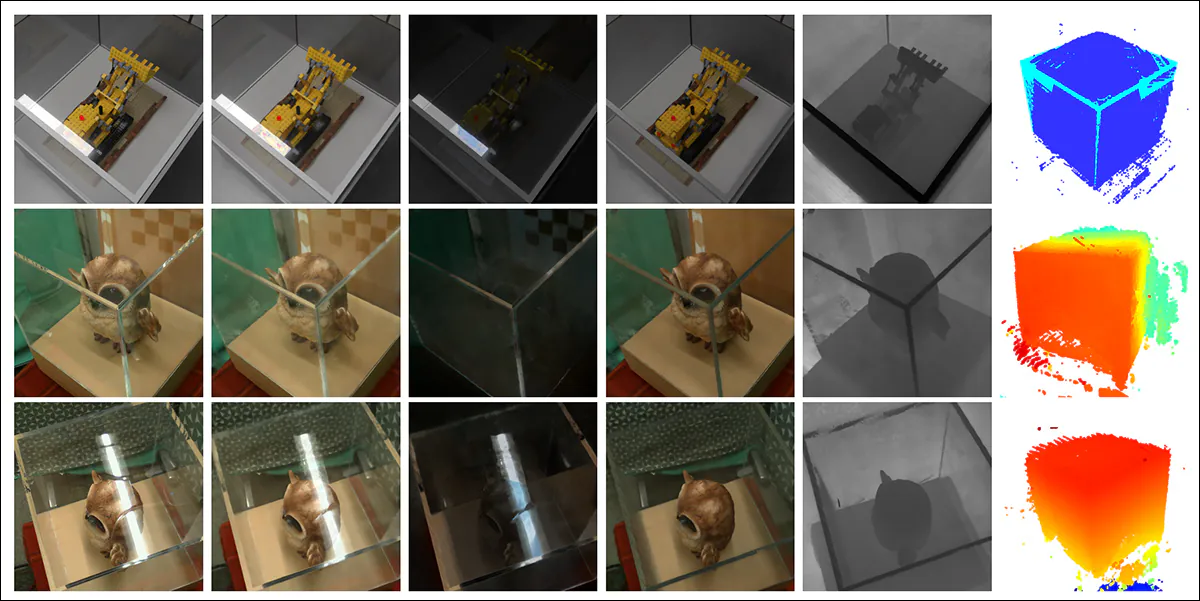

The REF2-NeRF project (pictured below) proposed a NeRF-based modeling method for scenes containing a glass case. In this method, refraction and reflection were modeled using elements that were dependent and independent of the viewer’s perspective. This approach allowed the researchers to estimate the surfaces where refraction occurred, specifically glass surfaces, and enabled the separation and modeling of both direct and reflected light components.

Source: https://arxiv.org/pdf/2311.17116

Other NeRF-facing reflection solutions of the last 4-5 years have included NeRFReN, Reflecting Reality, and Meta’s 2024 project.

For GSplat, papers such as Mirror-3DGS, Reflective Gaussian Splatting, and RefGaussian have offered solutions to the reflection problem, while the 2023 Nero project proposed a bespoke method of incorporating reflective qualities into neural representations.

MirrorVerse

Getting a diffusion model to respect reflection logic is arguably harder than with explicitly structural, non-semantic approaches such as Gaussian Splatting and NeRF. In diffusion models, a rule of this kind is only likely to become reliably embedded if the training data contains many varied examples across a wide range of scenarios, making it heavily dependent on the distribution and quality of the original dataset.

Traditionally, adding particular behaviors of this kind is the purview of a LoRA or the fine-tuning of the base model; but these are not ideal solutions, since a LoRA tends to skew output towards its own training data, even without prompting, while fine-tunes – besides being expensive – can fork a major model irrevocably away from the mainstream, and engender a host of related custom tools that will never work with any other strain of the model, including the original one.

In general, improving diffusion models requires that the training data pay greater attention to the physics of reflection. However, many other areas are also in need of similar special attention. In the context of hyperscale datasets, where custom curation is expensive and difficult, addressing every weakness in this way is impractical.

Nonetheless, solutions to the LDM reflection problem do crop up from time to time. One recent such effort, from India, is the MirrorVerse project, which offers an improved dataset and training method capable of improving on the state-of-the-art in this particular challenge in diffusion research.

Source: https://arxiv.org/pdf/2504.15397

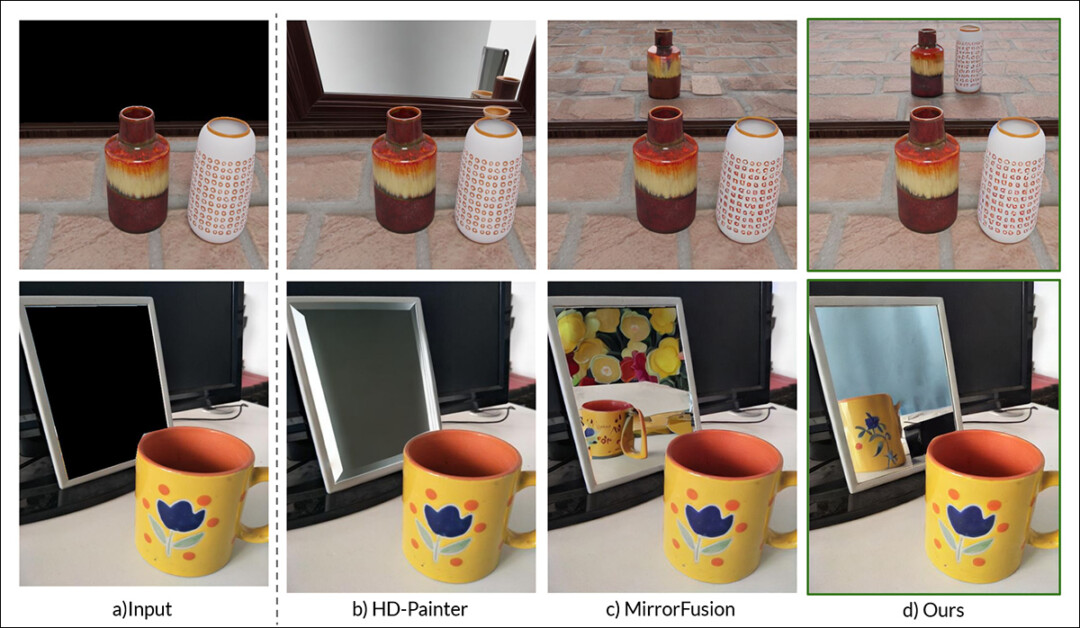

As we can see in the example above (the feature image in the PDF of the new study), MirrorVerse improves on recent offerings tackling the same problem, but is far from perfect.

In the upper right image, we see that the ceramic jars are somewhat to the right of where they should be, and in the image below, which should technically not feature a reflection of the cup at all, an inaccurate reflection has been shoehorned into the right-hand area, against the logic of natural reflective angles.

Therefore we’ll take a look at the new method not so much because it may represent the current state-of-the-art in diffusion-based reflection, but equally to illustrate the extent to which this may prove to be an intractable issue for latent diffusion models, static and video alike, since the requisite data examples of reflectivity are most likely to be entangled with particular actions and scenarios.

Therefore this particular function of LDMs may continue to fall short of structure-specific approaches such as NeRF, GSplat, and also traditional CGI.

The new paper comes from three researchers across Vision and AI Lab, IISc Bangalore, and the Samsung R&D Institute at Bangalore. The paper has an associated project page, as well as a dataset at Hugging Face, with source code released at GitHub.

Method

The researchers note from the outset the difficulty that models such as Stable Diffusion and Flux have in respecting reflection-based prompts, illustrating the issue adroitly:

The researchers have developed MirrorFusion 2.0, a diffusion-based generative model aimed at improving the photorealism and geometric accuracy of mirror reflections in synthetic imagery. Training for the model was based on the researchers’ own newly-curated dataset, titled SynMirrorV2, designed to address the generalization weaknesses observed in previous approaches.

MirrorGen2 expands on earlier methodologies by introducing additional scene augmentations, including randomized rotations and explicit object grounding, with the goal of ensuring that reflections remain plausible across a wider range of object poses and placements relative to the mirror surface.

To further strengthen the model’s ability to handle complex spatial arrangements, the MirrorGen2 pipeline incorporates multi-object scenes, enabling the system to better represent occlusions and interactions between multiple elements in reflective settings.

The paper states:

In regard to explicit object grounding, here the authors ensured that the generated objects were ‘anchored’ to the ground in the output synthetic data, rather than ‘hovering’ inappropriately, which can occur when synthetic data is generated at scale, or with highly automated methods.

Data and Tests

SynMirrorV2

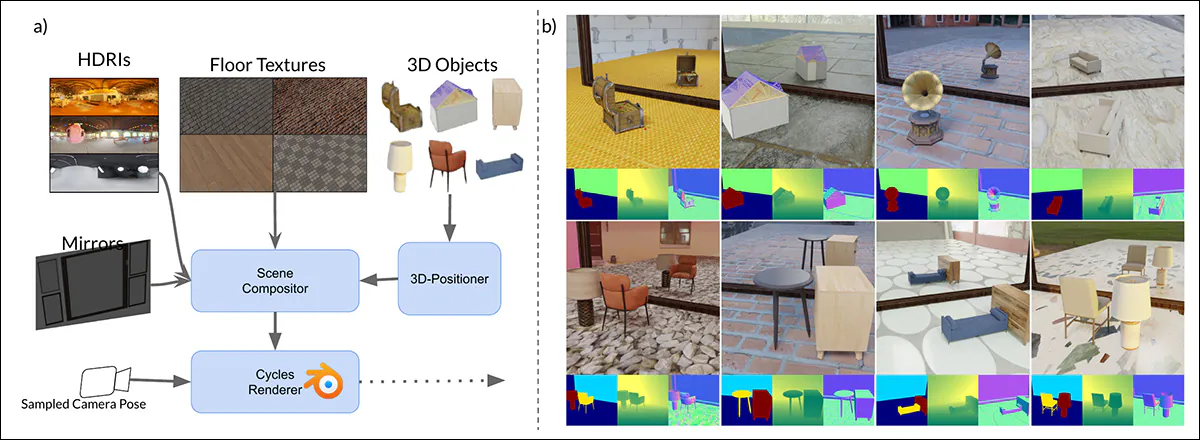

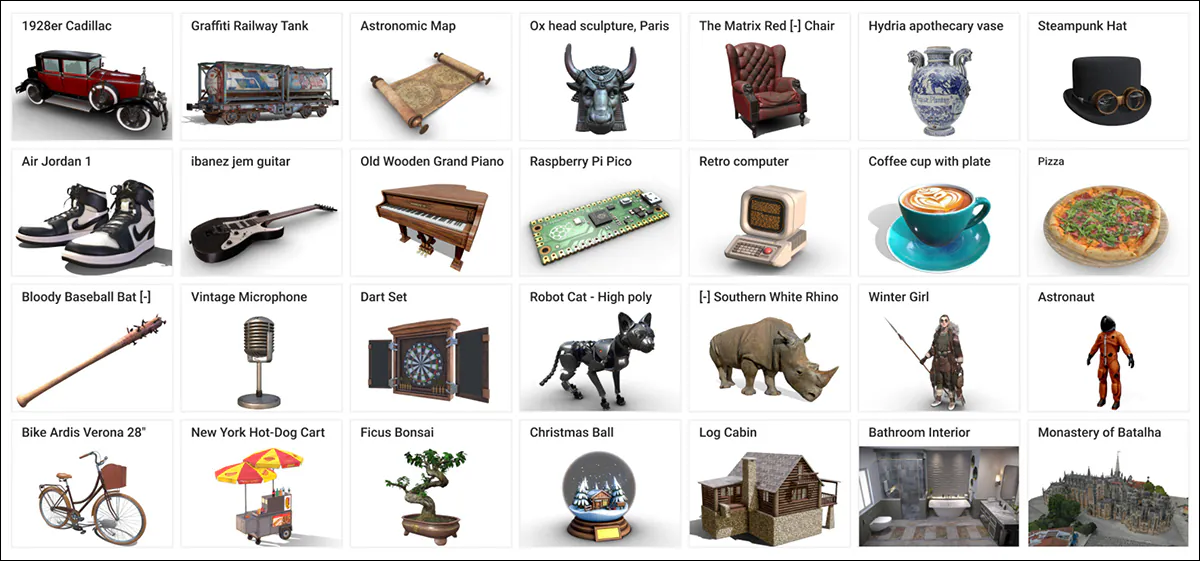

The researchers’ SynMirrorV2 dataset was conceived to improve the diversity and realism of mirror reflection training data, featuring 3D objects sourced from the Objaverse and Amazon Berkeley Objects (ABO) datasets, with these selections subsequently refined through OBJECT 3DIT, as well as the filtering process from the V1 MirrorFusion project, to eliminate low-quality assets. This resulted in a refined pool of 66,062 objects.

Source: https://arxiv.org/pdf/2212.08051

Scene construction involved placing these objects onto textured floors from CC-Textures and HDRI backgrounds from the PolyHaven CGI repository, using either full-wall or tall rectangular mirrors. Lighting was standardized with an area-light positioned above and behind the objects, at a 45-degree angle. Objects were scaled to fit inside a unit cube and positioned using a precomputed intersection of the mirror and camera viewing frustums, ensuring visibility.

Randomized rotations were applied around the y-axis, and a grounding technique was used to prevent ‘floating artifacts’.

To simulate more complex scenes, the dataset also incorporated multiple objects arranged according to semantically coherent pairings based on ABO categories. Secondary objects were placed to avoid overlap, creating 3,140 multi-object scenes designed to capture varied occlusions and depth relationships.
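The object preparation steps described here (unit-cube scaling, a random rotation about the y-axis, and grounding) can be sketched as below. This is a minimal illustration under stated assumptions rather than the authors' pipeline: it treats an object as a plain list of `(x, y, z)` vertices, assumes a y-up coordinate system with the floor at `y = 0`, and all function names are hypothetical. The rotation is applied before the scaling so that the rotated object is still guaranteed to fit the cube.

```python
import math
import random


def rotate_about_y(vertices, angle):
    """Rotate every vertex about the vertical (y) axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x + s * z, y, -s * x + c * z) for x, y, z in vertices]


def fit_to_unit_cube(vertices):
    """Center the object and scale it uniformly so its longest side is 1."""
    mins = [min(v[i] for v in vertices) for i in range(3)]
    maxs = [max(v[i] for v in vertices) for i in range(3)]
    extent = max(hi - lo for lo, hi in zip(mins, maxs))
    if extent == 0:
        raise ValueError("degenerate object: all vertices coincide")
    centre = [(lo + hi) / 2.0 for lo, hi in zip(mins, maxs)]
    return [tuple((v[i] - centre[i]) / extent for i in range(3)) for v in vertices]


def ground(vertices, floor_y=0.0):
    """Shift the object vertically so its lowest point rests on the floor,
    which prevents the 'floating' artifact."""
    lowest = min(y for _, y, _ in vertices)
    return [(x, y - lowest + floor_y, z) for x, y, z in vertices]


def prepare_object(vertices, rng):
    """Random y-rotation, then unit-cube fit, then grounding."""
    angle = rng.uniform(0.0, 2.0 * math.pi)
    return ground(fit_to_unit_cube(rotate_about_y(vertices, angle)))
```

Calling `prepare_object(vertices, random.Random(0))` returns vertices whose lowest point sits exactly on the floor, whatever rotation was drawn.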

Training Process

Acknowledging that synthetic realism alone was insufficient for robust generalization to real-world data, the researchers developed a three-stage curriculum learning process for training MirrorFusion 2.0.

In Stage 1, the authors initialized the weights of both the conditioning and generation branches with the Stable Diffusion v1.5 checkpoint, and fine-tuned the model on the single-object training split of the SynMirrorV2 dataset. Unlike the above-mentioned project, the researchers did not freeze the generation branch. They then trained the model for 40,000 iterations.

In Stage 2, the model was fine-tuned for a further 10,000 iterations, on the multiple-object training split of SynMirrorV2, in order to teach the system to handle occlusions, and the more complex spatial arrangements found in realistic scenes.

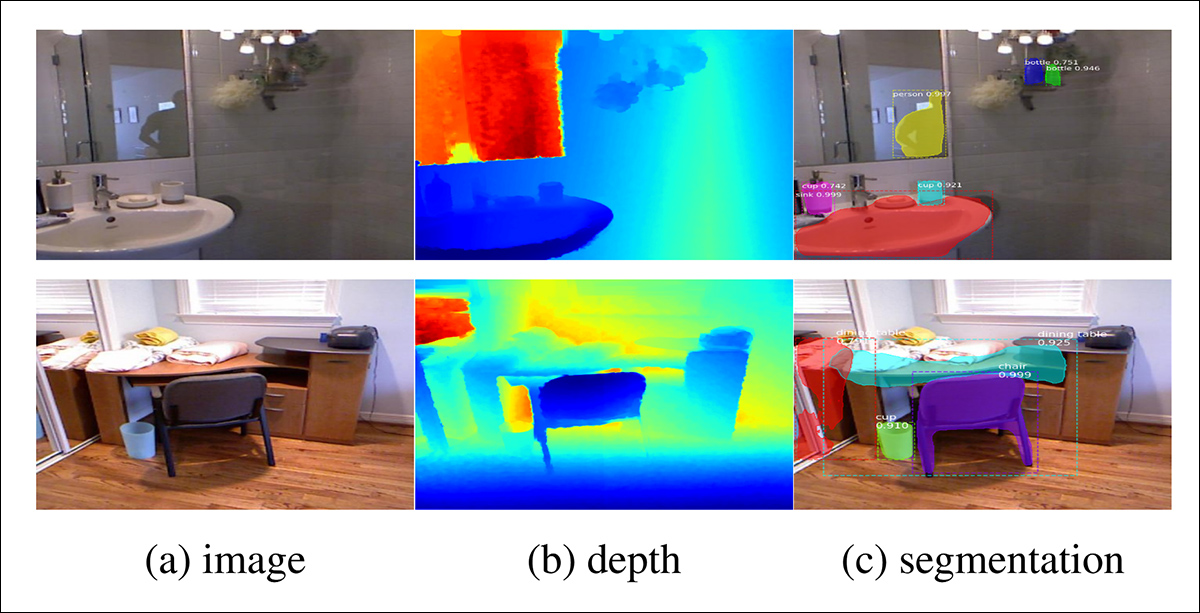

Finally, in Stage 3, a further 10,000 iterations of fine-tuning were conducted using real-world data from the MSD dataset, using depth maps generated by the Matterport3D monocular depth estimator.

Source: https://arxiv.org/pdf/1908.09101

During training, text prompts were omitted for 20 percent of the training time in order to encourage the model to make optimum use of the available depth information (i.e., a ‘masked’ approach).
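The prompt omission described above is commonly implemented as a per-sample random drop. The following is a minimal sketch of that idea, assuming that an omitted prompt is replaced with an empty string and that the decision is made independently per sample; the article does not specify either detail, and the function name is hypothetical.

```python
import random


def maybe_drop_prompt(prompt, rng, drop_prob=0.2):
    """Return an empty prompt with probability `drop_prob`, else the prompt.

    With the text removed, the model can only rely on its other
    conditioning inputs (here, depth) for that training sample.
    """
    return "" if rng.random() < drop_prob else prompt
```

Over a long run, roughly one sample in five is trained without text, which matches the 20 percent figure.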

Training took place on four NVIDIA A100 GPUs for all stages (the VRAM spec is not supplied, though it would have been 40GB or 80GB per card). A learning rate of 1e-5 was used with a batch size of 4 per GPU, under the AdamW optimizer.

This training scheme progressively increased the difficulty of the tasks presented to the model, starting with simpler synthetic scenes and advancing toward more challenging compositions, with the intention of developing robust real-world transferability.
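The three-stage schedule can be written down as a small configuration, which makes the overall budget easier to see. This is a sketch built only from the figures reported above; the data labels are descriptive strings rather than real dataset paths, and the global batch size assumes plain data parallelism with no gradient accumulation, which the article does not confirm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    data: str        # descriptive label only, not a real dataset path
    iterations: int


# Iteration counts and hyperparameters as reported in the article.
CURRICULUM = (
    Stage("stage_1", "SynMirrorV2 single-object split", 40_000),
    Stage("stage_2", "SynMirrorV2 multi-object split", 10_000),
    Stage("stage_3", "MSD real-world data", 10_000),
)
NUM_GPUS = 4
BATCH_PER_GPU = 4
LEARNING_RATE = 1e-5

# Assumption: plain data parallelism with no gradient accumulation.
GLOBAL_BATCH = NUM_GPUS * BATCH_PER_GPU


def total_iterations():
    """Total number of optimizer steps across the whole curriculum."""
    return sum(stage.iterations for stage in CURRICULUM)


def stage_for_iteration(step):
    """Return the curriculum stage that a zero-based global step falls in."""
    if step < 0:
        raise ValueError("step must be non-negative")
    boundary = 0
    for stage in CURRICULUM:
        boundary += stage.iterations
        if step < boundary:
            return stage
    raise ValueError("step is beyond the end of the schedule")
```

Under these assumptions the full curriculum runs for 60,000 iterations at a global batch of 16, with two thirds of the budget spent on the simplest, single-object stage.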

Testing

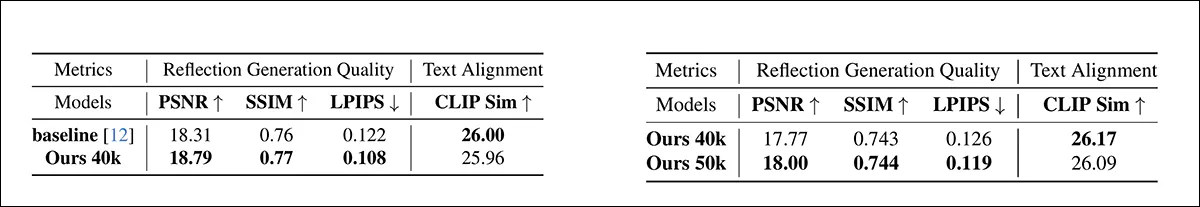

The authors evaluated MirrorFusion 2.0 against the previous state-of-the-art, MirrorFusion, which served as the baseline, and conducted experiments on the MirrorBenchV2 dataset, covering both single and multi-object scenes.

Additional qualitative tests were conducted on samples from the MSD dataset, and the Google Scanned Objects (GSO) dataset.

The evaluation used 2,991 single-object images from seen and unseen categories, and 300 two-object scenes from ABO. Performance was measured using Peak Signal-to-Noise Ratio (PSNR); Structural Similarity Index (SSIM); and Learned Perceptual Image Patch Similarity (LPIPS) scores, to assess reflection quality on the masked mirror region. CLIP similarity was used to evaluate textual alignment with the input prompts.

In quantitative tests, the authors generated images using four seeds for a specific prompt, and selected the resulting image with the best SSIM score. The two reported tables of results for the quantitative tests are shown below.
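Restricting a metric to the mirror region means that only pixels inside the mirror mask contribute to the score. A minimal sketch for PSNR follows, using the standard definition PSNR = 10·log10(MAX² / MSE) with the mean squared error taken over masked pixels only. It assumes single-channel images given as nested lists with values in the 0–255 range; this is an illustration of the idea, not the authors' evaluation code, and the function name is hypothetical.

```python
import math


def masked_psnr(pred, target, mask, max_value=255.0):
    """PSNR computed only over pixels where `mask` is truthy."""
    squared_error = 0.0
    count = 0
    for pred_row, target_row, mask_row in zip(pred, target, mask):
        for p, t, m in zip(pred_row, target_row, mask_row):
            if m:
                squared_error += (p - t) ** 2
                count += 1
    if count == 0:
        raise ValueError("mask selects no pixels")
    mse = squared_error / count
    if mse == 0:
        return math.inf  # identical inside the mask
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Errors outside the mask have no effect on the result, so a model is neither rewarded nor penalized for how it renders the rest of the scene.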
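The best-of-several-seeds protocol amounts to generating one candidate per seed and keeping the highest-scoring one. The sketch below shows that selection step in isolation. Both `generate` and `score` are hypothetical callables supplied by the caller: `generate(seed)` stands in for a call to the diffusion model, and `score(image)` stands in for SSIM, which needs a reference image and is therefore assumed here to be measured against the ground-truth reflection.

```python
def pick_best_seed(seeds, generate, score):
    """Generate one candidate per seed and keep the highest-scoring one.

    Returns a (seed, candidate) pair. On ties, the earliest seed wins.
    """
    candidates = [(seed, generate(seed)) for seed in seeds]
    return max(candidates, key=lambda pair: score(pair[1]))
```

Because the best of four samples is reported, the resulting numbers describe what each model can achieve with a small amount of sampling, rather than its average single-shot quality.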

The authors comment:

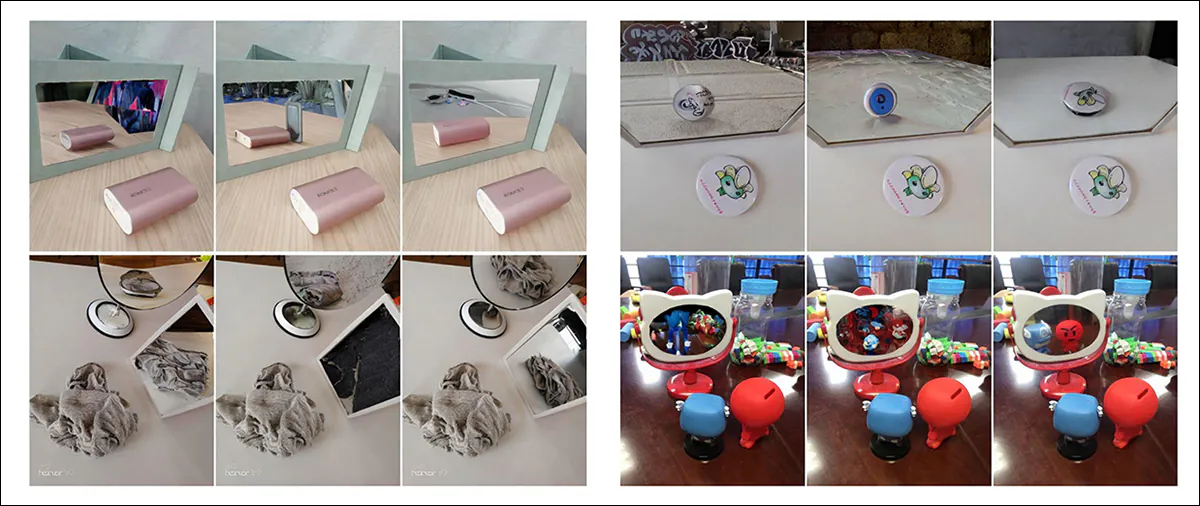

The majority of results, and those emphasized by the authors, concern qualitative testing. Due to the dimensions of these illustrations, we can only partially reproduce the paper’s examples.

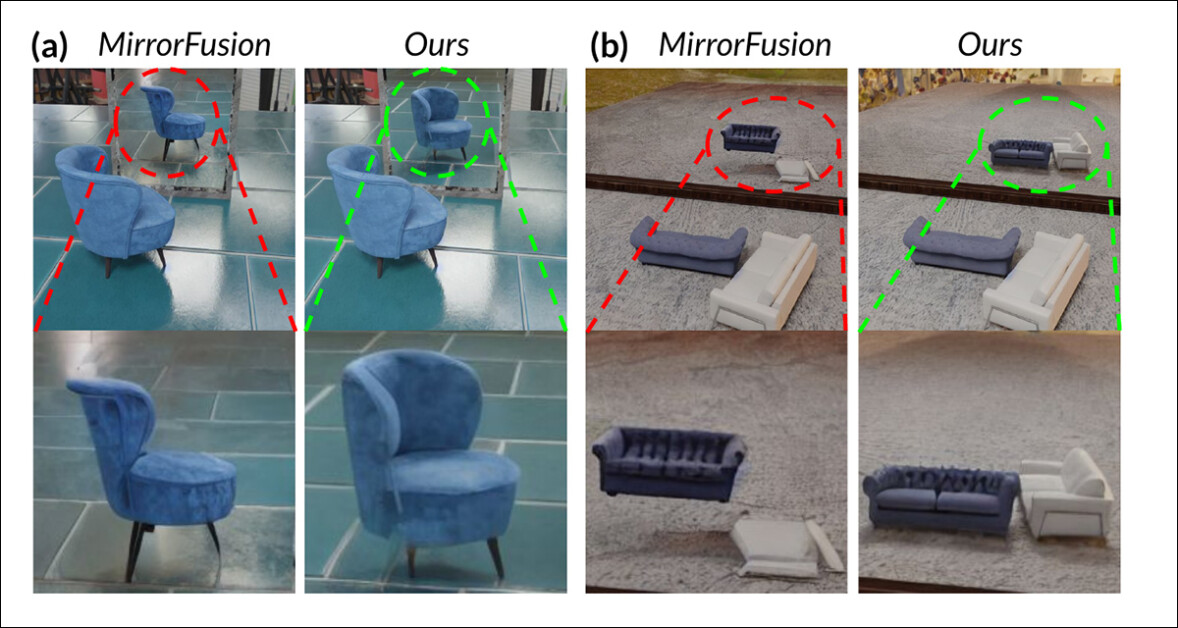

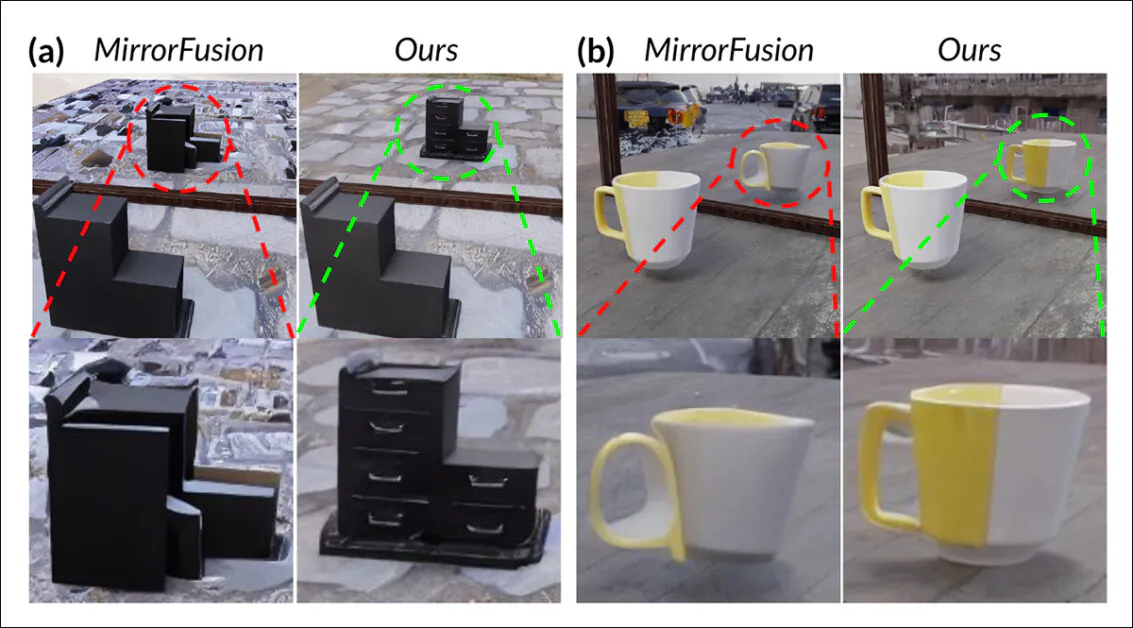

Of these subjective results, the researchers opine that the baseline model failed to accurately render object orientation and spatial relationships in reflections, often producing artifacts such as incorrect rotation and floating objects. MirrorFusion 2.0, trained on SynMirrorV2, the authors contend, preserves correct object orientation and positioning in both single-object and multi-object scenes, resulting in more realistic and coherent reflections.

Below we see qualitative results on the aforementioned GSO dataset:

Here the authors comment:

The final qualitative test was against the aforementioned real-world MSD dataset (partial results shown below):

Here the authors observe that while MirrorFusion 2.0 performed well on MirrorBenchV2 and GSO data, it initially struggled with complex real-world scenes in the MSD dataset. Fine-tuning the model on a subset of MSD improved its ability to handle cluttered environments and multiple mirrors, resulting in more coherent and detailed reflections on the held-out test split.

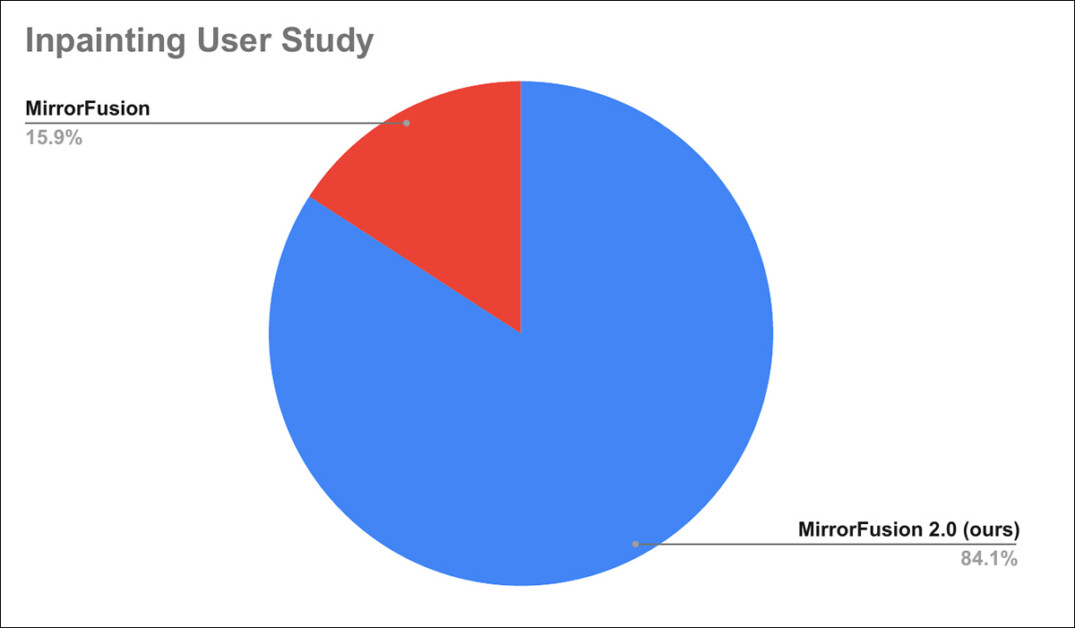

Additionally, a user study was conducted, in which 84% of users are reported to have preferred generations from MirrorFusion 2.0 over the baseline method.

Since details of the user study have been relegated to the appendix of the paper, we refer the reader to that for the specifics of the study.

Conclusion

Although several of the results shown in the paper are impressive improvements on the state-of-the-art, the state-of-the-art for this particular pursuit is so abysmal that even an unconvincing aggregate solution can win out with a modicum of effort. The fundamental architecture of a diffusion model is so inimical to the reliable learning and demonstration of consistent physics that the problem itself is a genuinely hard one, and apparently not disposed toward an elegant solution.

Further, adding data to existing models is already the standard method of remedying shortfalls in LDM performance, with all the disadvantages listed earlier. It is reasonable to presume that if future large-scale datasets were to pay more attention to the distribution (and annotation) of reflection-related data points, the resulting models would handle this scenario better.

Yet the same is true of multiple other bugbears in LDM output – who can say which of them most deserves the effort and money involved in the kind of solution that the authors of the new paper propose here?