Even small and medium-sized businesses are expected to gain access to hyperscale artificial intelligence (AI) and supercomputing infrastructure for research and development. OpenAI is introducing an enterprise developer platform service for this purpose.

With this service, even companies without their own infrastructure can conduct research using hyperscale AI on the level of OpenAI's, much as they would use a cloud service.

The service provides virtual server instances capable of handling large-scale workloads through the Microsoft (MS) cloud ‘Azure’, on three-month or one-year contracts.

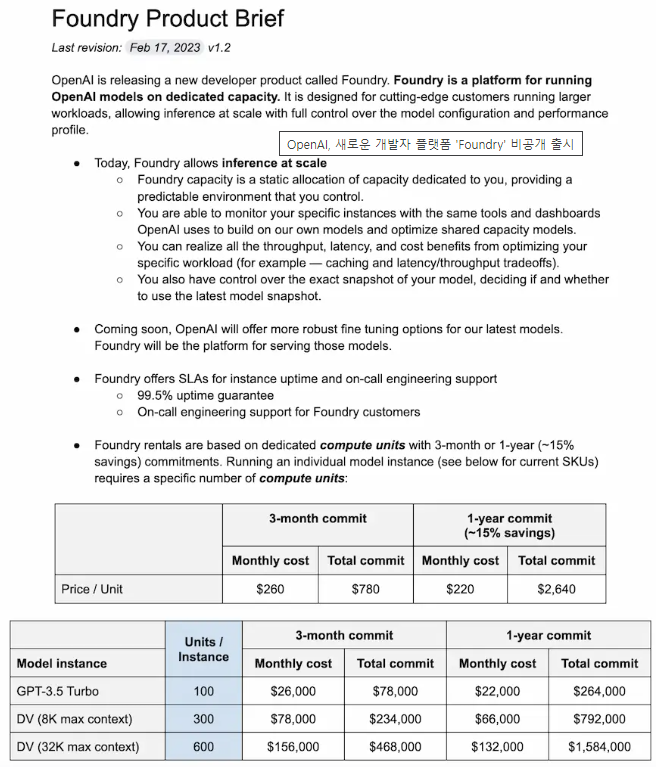

Usage costs vary depending on instance uptime and the support included. A lightweight version of a GPT-3.5 instance costs $78,000 (about 102 million won) for three months and $264,000 (about 340 million won) for one year.

TechCrunch reported on the 22nd (local time) that OpenAI plans to launch “Foundry”, a developer platform that allows enterprise customers to run its newest machine learning models, including GPT-3.5, on dedicated computing infrastructure.

‘Foundry’ is a platform designed for companies that need state-of-the-art technology to handle large-scale workloads. The service dedicates to a single customer a virtual server instance whose computing infrastructure is configured with virtual machine technology inside the Microsoft (MS) Azure cloud. The concept is similar to a customer having its own server systems in the cloud.

Customers can monitor their assigned cloud instances using the same tools and dashboards that OpenAI uses. OpenAI also supports upgrading to its newest model.

Foundry requires a service commitment based on instance uptime and scheduled engineering support. The dedicated compute is leased on a three-month or one-year contract.

The fees are not cheap. Running a lightweight version of a GPT-3.5 instance costs $78,000 on a three-month contract and $264,000 on a one-year contract. For reference, one of Nvidia’s newest supercomputers, the DGX Station, costs $149,000 per unit (roughly 190 million won).
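To put the two contract prices on a common footing, the short sketch below converts each to a per-month rate. It uses only the dollar figures quoted above; the function name `monthly_rate` is illustrative, and the calculation assumes the price is spread evenly over the contract term, which the report does not confirm.

```python
# Prices quoted in the article (USD) for a lightweight GPT-3.5 instance.
THREE_MONTH_PRICE = 78_000
ONE_YEAR_PRICE = 264_000


def monthly_rate(total_price: int, months: int) -> float:
    """Spread a contract price evenly over its term (an assumption)."""
    return total_price / months


quarterly_monthly = monthly_rate(THREE_MONTH_PRICE, 3)
annual_monthly = monthly_rate(ONE_YEAR_PRICE, 12)

# Relative saving per month when committing to the one-year contract.
annual_discount = 1 - annual_monthly / quarterly_monthly

print(f"3-month contract: ${quarterly_monthly:,.0f} per month")
print(f"1-year contract:  ${annual_monthly:,.0f} per month")
print(f"Saving on the 1-year contract: {annual_discount:.1%}")
```

On these figures the three-month contract works out to $26,000 per month and the one-year contract to $22,000 per month, a saving of roughly 15 percent for the longer commitment.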

Interestingly, among the text generation models listed in the instance pricing chart is one with a maximum context window of 32k. It is possible that this model is the long-awaited ‘GPT-4’.

The context window is the number of words (more precisely, tokens) the model references when predicting the next word. In essence, a longer context window allows the model to remember more text. The context window of GPT-3.5, OpenAI’s newest text generation model, is up to 4k.
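The effect of a context window can be illustrated with a toy sketch. It assumes, for simplicity, that text is split on whitespace, whereas real models count subword tokens; the function `fit_to_context` is a hypothetical helper and does not represent how OpenAI's models handle input.

```python
def fit_to_context(tokens: list[str], window: int) -> list[str]:
    """Keep only the most recent `window` tokens; older ones are dropped."""
    if window <= 0:
        return []
    return tokens[-window:]


# Whitespace splitting stands in for real tokenization (an assumption).
history = "the quick brown fox jumps over the lazy dog".split()

small = fit_to_context(history, 4)    # a narrow window forgets early text
large = fit_to_context(history, 32)   # a wide window keeps everything

print(small)
print(large == history)
```

With a window of 4, only the last four words remain visible to the model, while a window of 32 retains the whole sentence. The same trade-off, at a scale of thousands of tokens, separates a 4k window from a 32k one.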

Foundry is a revenue-generating offering, and training state-of-the-art AI models can cost millions of dollars or more.

Meanwhile, OpenAI has recently been moving toward monetization, including the launch of ChatGPT Plus, a professional version of ChatGPT that starts at $20 per month. It has also decided to launch a mobile ChatGPT app and to bring its AI language technology to MS apps such as Word, PowerPoint, and Outlook. In addition, it provides paid services through Microsoft Azure and maintains Copilot, a code generation service developed with GitHub.

Chan Park, cpark@aitimes.com