Meta has devised a new method to solve the 'reversal curse' problem of large language models (LLMs). The reversal curse is a problem in which a model that has learned 'A is B' cannot reverse the statement and answer 'B is A'.

Mark Tech Post reported on the 24th (local time) that researchers from FAIR, Meta's AI research division, have published a paper in an online archive on a method called 'Reverse Training' that addresses the reversal curse in LLMs.

The reversal curse is a kind of cognitive bias in which artificial intelligence (AI) models, especially LLMs, have difficulty recognizing that two sentences are equivalent when the order of the learned elements is reversed. For example, an LLM may correctly learn 'Tom Cruise's mother is Mary Lee Pfeiffer' but fail to recognize 'Mary Lee Pfeiffer's son is Tom Cruise'.

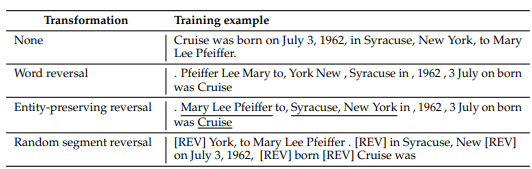

The alternative training method the researchers came up with as a solution, called reverse training, is surprisingly simple. Every word in a string is used twice, so the string is learned twice and the number of available training tokens doubles.

In other words, it is a method of training an LLM by literally 'reversing' the original string. For example, the sentence 'Cruise was born on July 3, 1962 in Syracuse, New York, to Mary Lee Pfeiffer' becomes 'Mary Lee Pfeiffer gave birth to Cruise on July 3, 1962 in Syracuse, New York.'

This approach lets the LLM be trained in both the forward and reverse directions by presenting the information in both its original and reversed forms at the same time, doubling the usefulness of the data.
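The data preparation step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the researchers' implementation: it reverses strings at the level of whitespace-separated words, and the function names `reverse_words` and `build_training_examples` are hypothetical. The paper may reverse text at a different granularity.

```python
def reverse_words(text: str) -> str:
    """Return the text with its whitespace-separated words in reverse order.

    Assumption: reversal happens at the word level; other granularities
    (tokens, entities, segments) are possible and not shown here.
    """
    return " ".join(reversed(text.split()))


def build_training_examples(corpus: list[str]) -> list[str]:
    """Keep every original string and add its word-reversed copy."""
    examples = []
    for text in corpus:
        examples.append(text)                 # forward direction
        examples.append(reverse_words(text))  # reverse direction
    return examples


corpus = ["Tom Cruise's mother is Mary Lee Pfeiffer"]
examples = build_training_examples(corpus)

for example in examples:
    print(example)
```

Each string appears once in its original order and once reversed, so the number of training words is exactly twice that of the original corpus. Note that this naive word-level reversal also scrambles multi-word names such as 'Mary Lee Pfeiffer'.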

The method produced notable results compared with existing models in tests that measure understanding of relationships. On the task of recognizing family relationships between celebrities and their descendants, the reverse-trained model achieved an accuracy of 10.4%, compared with 1.6% for a conventionally trained model.

“We were able to successfully avoid the reversal curse by applying a reverse training technique to develop a language model that can process information both forward and backward,” the researchers said. “Through this approach, the model not only strengthens its cognitive abilities but is also able to navigate the complex world more skillfully.”

Reporter Park Chan cpark@aitimes.com