Unlike OpenAI, it has unveiled a ‘Consistency Model’ that’s more efficient than the ‘Diffusion Model’ that’s the idea for image creation tools similar to Midjourney and Stable Diffusion.

TechCrunch published a paper on the archive (arXiv) on the twelfth (local time) discussing a latest form of image-generating artificial intelligence (AI) model called the ‘consistency model’ through which OpenAI outperforms the diffusion model.

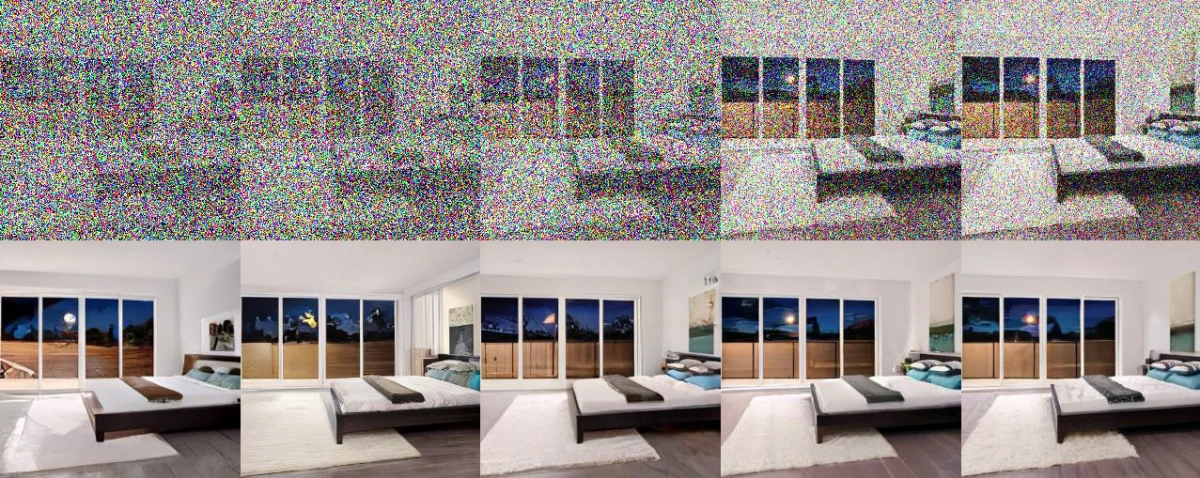

To know the coherence model, it is beneficial to match it to the diffusion model. The diffusion model learns to progressively remove noise from the unique image, which is entirely made up of noise, while generating a picture that’s step-by-step closer to the unique image.

This approach has been successful in generating impressive AI images, nevertheless it may take 1000’s of steps to get good results. This is pricey and impractical for real-time applications.

For instance, the diffusion model requires 1500 iterations over 1-2 minutes using a cluster of GPUs to get the specified result.

The goal of consistency models is to supply acceptable leads to a single computational step or at most two steps.

Just like the diffusion model, the coherence model observes the strategy of destroying a picture by noise, but learns to generate the unique image in only one step, whatever the noise level.

The resulting image may not come as a surprise, however the incontrovertible fact that it was created in a single step is critical.

The consistency model can be applied to a wide range of tasks similar to colorization, upscaling, inpainting and ultra-high resolution.

As well as, depending on the design, it may well support fast one-step generation while improving the standard of the resulting image through several-step sampling.

What sets the consistency model apart is its efficiency. Diffusion models require a major amount of computation to get good results, whereas coherence models can produce decent results with minimal computation.

This makes it ideal for applications that require real-time results, similar to a live chat interface or running a picture generator on a phone without draining the battery.

OpenAI’s give attention to developing latest technologies, similar to coherence models, indicates that they’re actively searching for next-generation use cases beyond diffusion models.

It remains to be too early to directly compare coherence models with diffusion models, however the potential of this latest technique is critical.

Chan Park, cpark@aitimes.com