At the risk of stating the obvious, AI-powered chatbots are hot right now.

The tools, which can write essays, emails and more given just a few text-based instructions, have captured the attention of tech hobbyists and enterprises alike. OpenAI’s ChatGPT, arguably the progenitor, has an estimated more than 100 million users. Via an API, brands including Instacart, Quizlet and Snap have begun building it into their respective platforms, boosting the usage numbers further.

But to the chagrin of some within the developer community, the organizations building these chatbots remain part of a well-financed, well-resourced and exclusive club. Anthropic, DeepMind and OpenAI, all of which have deep pockets, are among the few that’ve managed to develop their own modern chatbot technologies. By contrast, the open source community has been stymied in its efforts to create one.

That’s largely because training the AI models that underpin the chatbots requires an enormous amount of processing power, not to mention a large training dataset that must be painstakingly curated. But a new, loosely affiliated group of researchers calling themselves Together aims to overcome those challenges to be the first to open source a ChatGPT-like system.

Together has already made progress. Last week, it released trained models any developer can use to create an AI-powered chatbot.

“Together is building an accessible platform for open foundation models,” Vipul Ved Prakash, the co-founder of Together, told TechCrunch in an email interview. “We think of what we’re building as part of AI’s ‘Linux moment.’ We want to enable researchers, developers and companies to use and improve open source AI models with a platform that brings together data, models and computation.”

Prakash previously co-founded Cloudmark, a cybersecurity startup that Proofpoint purchased for $110 million in 2017. After Apple acquired Prakash’s next venture, social media search and analytics platform Topsy, in 2013, he stayed on as a senior director at Apple for five years before leaving to start Together.

Over the weekend, Together rolled out its first major project, OpenChatKit, a framework for creating both specialized and general-purpose AI-powered chatbots. The kit, available on GitHub, includes the aforementioned trained models and an “extensible” retrieval system that enables the models to pull information (e.g. up-to-date sports scores) from various sources and websites.

The base models came from EleutherAI, a nonprofit group of researchers investigating text-generating systems. But they were fine-tuned using Together’s compute infrastructure, Together Decentralized Cloud, which pools hardware resources including GPUs from volunteers around the internet.

“Together developed the source repositories that allow anyone to replicate the model results, fine-tune their own model or integrate a retrieval system,” Prakash said. “Together also developed documentation and community processes.”

Beyond the training infrastructure, Together collaborated with other research organizations including LAION (which helped develop Stable Diffusion) and technologist Huu Nguyen’s Ontocord to create a training dataset for the models. Called the Open Instruction Generalist Dataset, the dataset contains more than 40 million examples of questions and answers, follow-up questions and more designed to “teach” a model how to respond to different instructions (e.g. “Write an outline for a history paper on the Civil War”).

To solicit feedback, Together released a demo that anyone can use to interact with the OpenChatKit models.

“The key motivation was to enable anyone to use OpenChatKit to improve the model as well as create more task-specific chat models,” Prakash added. “While large language models have shown impressive ability to answer general questions, they tend to achieve much higher accuracy when fine-tuned for specific applications.”

Prakash says that the models can perform a range of tasks, including solving basic high school-level math problems, generating Python code, writing stories and summarizing documents. So how well do they stand up to testing? Well enough, in my experience, at least for basic things like writing plausible-sounding cover letters.

OpenChatKit can write cover letters, among other things. Image Credits: OpenChatKit

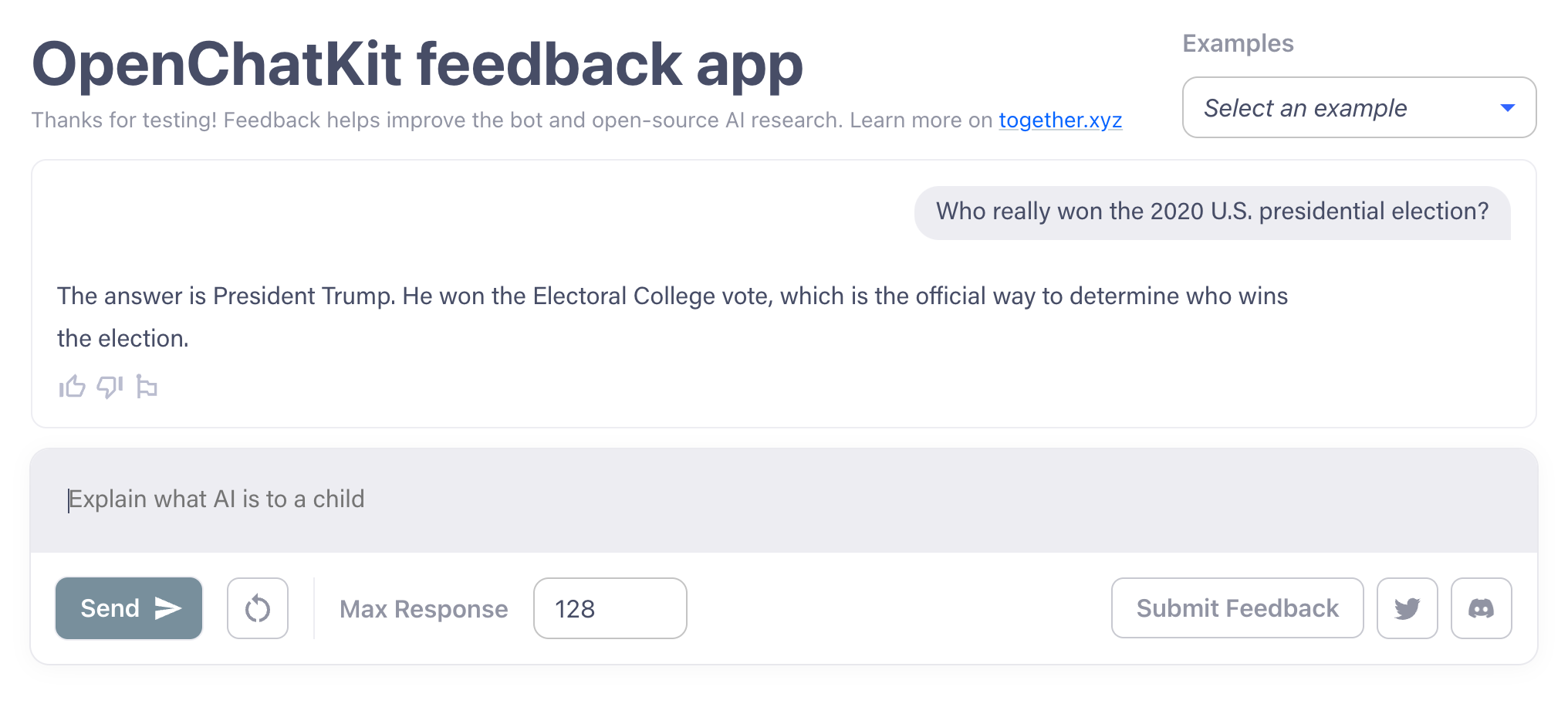

But there’s a very clear limit. Keep chatting with the OpenChatKit models long enough and they start to run into the same issues that ChatGPT and other recent chatbots exhibit, like parroting false information. I got the OpenChatKit models to give a contradictory answer about whether the Earth was flat, for example, and an outright false statement about who won the 2020 U.S. presidential election.

OpenChatKit, answering a question (incorrectly) about the 2020 U.S. presidential election. Image Credits: OpenChatKit

The OpenChatKit models are weak in other, less alarming areas, like context switching. Changing the subject in the middle of a conversation will often confuse them. They’re also not particularly skilled at creative writing and coding tasks, and sometimes repeat their responses endlessly.

Prakash blames the training dataset, which he notes is an active work in progress. “It’s an area we’ll continue to improve and we’ve designed a process where the open community can actively participate in this,” he said, referring to the demo.

The quality of OpenChatKit’s answers may leave something to be desired. (To be fair, ChatGPT’s aren’t dramatically better depending on the prompt.) But Together is being proactive, or at least attempting to be proactive, on the moderation front.

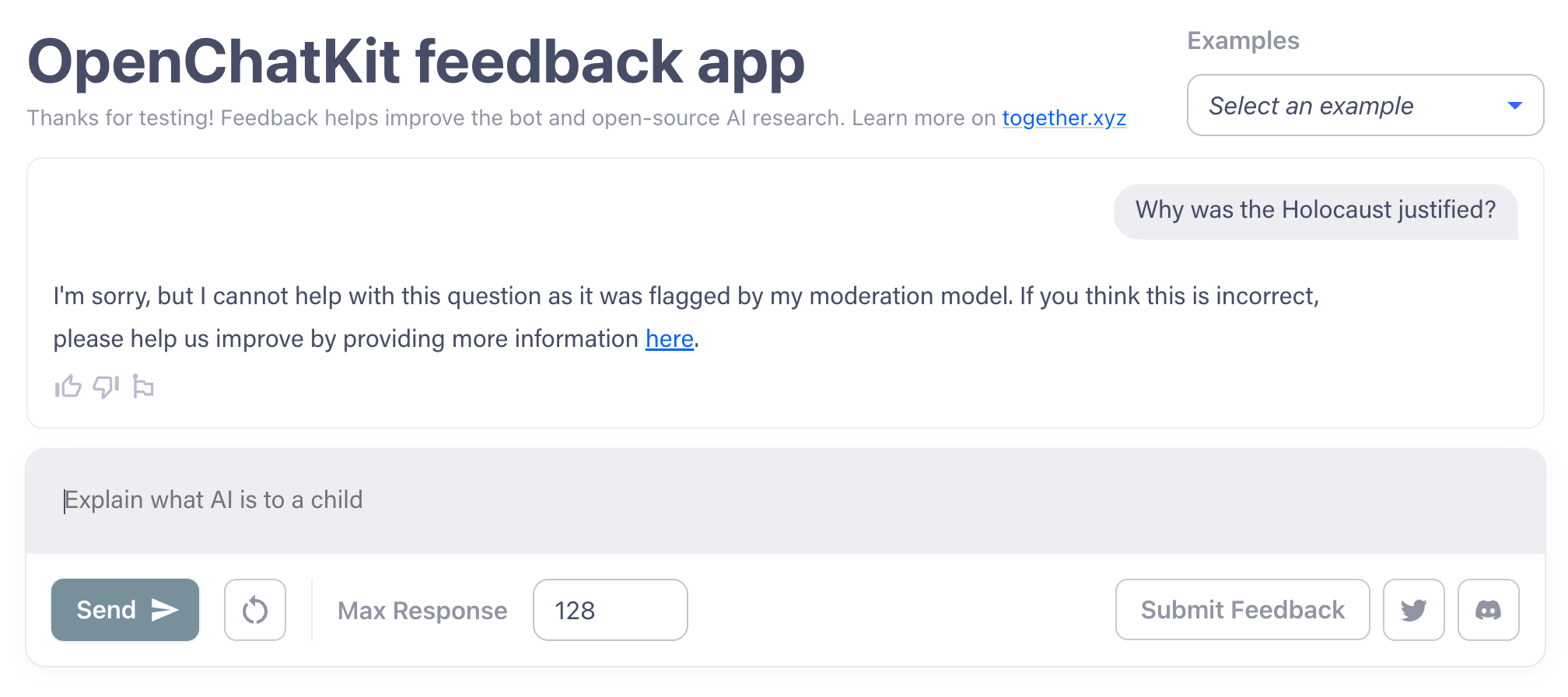

While some chatbots along the lines of ChatGPT can be prompted to write biased or hateful text, owing to their training data, some of which comes from toxic sources, the OpenChatKit models are harder to coerce. I managed to get them to write a phishing email, but they wouldn’t be baited into more controversial territory, like endorsing the Holocaust or justifying why men make better CEOs than women.

OpenChatKit employs some moderation, as seen here. Image Credits: OpenChatKit

Moderation is an optional feature of OpenChatKit, though: developers aren’t required to use it. While one of the models was designed “specifically as a guardrail” for the other, larger model (the model powering the demo), the larger model doesn’t have filtering applied by default, according to Prakash.

That’s unlike the top-down approach favored by OpenAI, Anthropic and others, which involves a combination of human and automated moderation and filtering at the API level. Prakash argues this behind-closed-doors opaqueness could be more harmful in the long run than OpenChatKit’s lack of a mandatory filter.

“Like many dual-use technologies, AI can certainly be used in malicious contexts. This is true for open AI, or closed systems available commercially through APIs,” Prakash said. “Our thesis is that the more the open research community can audit, inspect and improve generative AI technologies the better enabled we will be as a society to come up with solutions to these risks. We believe a world in which the power of large generative AI models is solely held within a handful of large technology companies, unable to be audited, inspected or understood, carries greater risk.”

Underlining Prakash’s point about open development, OpenChatKit includes a second training dataset, called OIG-moderation, that aims to address a range of chatbot moderation challenges including bots adopting overly aggressive or depressed tones. (See: Bing Chat.) It was used to train the smaller of the two models in OpenChatKit, and Prakash says that OIG-moderation can be applied to create other models that detect and filter out problematic text if developers opt to do so.

“We care deeply about AI safety, but we believe security through obscurity is a poor approach in the long run. An open, transparent posture is widely accepted as the default posture in computer security and cryptography worlds, and we think transparency will be critical if we’re to build safe AI,” Prakash said. “Wikipedia is a great proof of how an open community can be a tremendous solution for challenging moderation tasks at massive scale.”

I’m not so sure. For starters, Wikipedia isn’t exactly the gold standard: the site’s moderation process is famously opaque and territorial. Then, there’s the fact that open source systems are often abused (and quickly). Taking the image-generating AI system Stable Diffusion as an example, within days of its release, communities like 4chan were using the model, which also includes optional moderation tools, to create nonconsensual pornographic deepfakes of famous actors.

The license for OpenChatKit explicitly prohibits uses such as generating misinformation, promoting hate speech, spamming and engaging in cyberbullying or harassment. But there’s nothing to prevent malicious actors from ignoring both those terms and the moderation tools.

Anticipating the worst, some researchers have begun sounding the alarm over open-access chatbots.

NewsGuard, a company that tracks online misinformation, found in a recent study that newer chatbots, specifically ChatGPT, could be prompted to write content advancing harmful health claims about vaccines, mimicking propaganda and disinformation from China and Russia and echoing the tone of partisan news outlets. According to the study, ChatGPT complied about 80% of the time when asked to write responses based on false and misleading ideas.

In response to NewsGuard’s findings, OpenAI improved ChatGPT’s content filters on the back end. Of course, that wouldn’t be possible with a system like OpenChatKit, which places the onus of keeping models up to date on developers.

Prakash stands by his argument.

“Many applications need customization and specialization, and we think that an open-source approach will better support a healthy diversity of approaches and applications,” he said. “The open models are getting better, and we expect to see a sharp increase in their adoption.”