Transformer models have proven to be extremely effective on a wide range of machine learning tasks, such as natural language processing, audio processing, and computer vision. However, the prediction speed of these large models can make them impractical for latency-sensitive use cases like conversational applications or search. Furthermore, optimizing their performance in the real world requires considerable time, effort and skills that are beyond the reach of many companies and organizations.

Luckily, Hugging Face has introduced Optimum, an open source library which makes it much easier to reduce the prediction latency of Transformer models on a variety of hardware platforms. In this blog post, you will learn how to accelerate Transformer models for the Graphcore Intelligence Processing Unit (IPU), a highly flexible, easy-to-use parallel processor designed from the ground up for AI workloads.

Optimum Meets Graphcore IPU

Through this partnership between Graphcore and Hugging Face, we are now introducing BERT as the first IPU-optimized model. We will be introducing many more of these IPU-optimized models in the coming months, spanning applications such as vision, speech, translation and text generation.

Graphcore engineers have implemented and optimized BERT for our IPU systems using Hugging Face transformers to help developers easily train, fine-tune and accelerate their state-of-the-art models.

Getting started with IPUs and Optimum

Let’s use BERT as an example to help you get started with Optimum and IPUs.

In this guide, we will use an IPU-POD16 system in Graphcloud, Graphcore’s cloud-based machine learning platform, and follow the PyTorch setup instructions found in Getting Started with Graphcloud.

Graphcore’s Poplar SDK is already installed on the Graphcloud server. If you have a different setup, you can find the instructions that apply to your system in the PyTorch for the IPU: User Guide.

Set up the Poplar SDK Environment

You will need to run the following commands to set several environment variables that enable Graphcore tools and Poplar libraries. On the latest system running Poplar SDK version 2.3 on Ubuntu 18.04, you can find the SDK in the folder /opt/gc/poplar_sdk-ubuntu_18_04-2.3.0+774-b47c577c2a/.

You need to run both enable scripts, for Poplar and for PopART (Poplar Advanced Runtime), to use PyTorch:

$ cd /opt/gc/poplar_sdk-ubuntu_18_04-2.3.0+774-b47c577c2a/

$ source poplar-ubuntu_18_04-2.3.0+774-b47c577c2a/enable.sh

$ source popart-ubuntu_18_04-2.3.0+774-b47c577c2a/enable.sh

Set up PopTorch for the IPU

PopTorch is part of the Poplar SDK. It provides functions that allow PyTorch models to run on the IPU with minimal code changes. You can create and activate a PopTorch environment following the guide Setting up PyTorch for the IPU:

$ virtualenv -p python3 ~/workspace/poptorch_env

$ source ~/workspace/poptorch_env/bin/activate

$ pip3 install -U pip

$ pip3 install /opt/gc/poplar_sdk-ubuntu_18_04-2.3.0+774-b47c577c2a/poptorch-.whl

Install Optimum Graphcore

Now that your environment has all the Graphcore Poplar and PopTorch libraries available, you need to install the latest 🤗 Optimum Graphcore package in this environment. This will be the interface between the 🤗 Transformers library and Graphcore IPUs.

Please make sure that the PopTorch virtual environment you created in the previous step is activated. Your terminal should have a prefix showing the name of the poptorch environment, like below:

(poptorch_env) user@host:~/workspace/poptorch_env$ pip3 install optimum[graphcore] optuna
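Before moving on, it can help to confirm that the packages are visible from the active virtual environment. The following is a minimal sketch using only the Python standard library. The helper name missing_packages is hypothetical, and the import names poptorch and optimum are assumed to match the packages installed above.

```python
import importlib.util


def missing_packages(names):
    """Return the top-level module names that cannot be located."""
    # find_spec returns None for a top-level module that is not installed;
    # it does not import the module, so this check is cheap.
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Assumed top-level import names for PopTorch and Optimum.
    missing = missing_packages(["poptorch", "optimum"])
    if missing:
        print("Not importable in this environment:", ", ".join(missing))
    else:
        print("PopTorch and Optimum are importable.")
```

If either name is reported as missing, the most likely cause is that the poptorch_env environment is not activated in the current shell.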

Clone Optimum Graphcore Repository

The Optimum Graphcore repository contains the sample code for using Optimum models on the IPU. You should clone the repository and change directory to the examples/question-answering folder, which contains the IPU implementation of BERT.

$ git clone https://github.com/huggingface/optimum-graphcore.git

$ cd optimum-graphcore/examples/question-answering

Now, we will use run_qa.py to fine-tune the IPU implementation of BERT on the SQuAD1.1 dataset.

Run a sample to fine-tune BERT on SQuAD1.1

The run_qa.py script only works with models that have a fast tokenizer (backed by the 🤗 Tokenizers library), as it uses special features of those tokenizers. This is the case for our BERT model, and you should pass its name as the input argument to --model_name_or_path. In order to use the IPU, Optimum will look for the ipu_config.json file in the path passed to the argument --ipu_config_name.

$ python3 run_qa.py \
    --ipu_config_name=./ \
    --model_name_or_path bert-base-uncased \
    --dataset_name squad \
    --do_train \
    --do_eval \
    --output_dir output \
    --overwrite_output_dir \
    --per_device_train_batch_size 2 \
    --per_device_eval_batch_size 2 \
    --learning_rate 6e-5 \
    --num_train_epochs 3 \
    --max_seq_length 384 \
    --doc_stride 128 \
    --seed 1984 \
    --lr_scheduler_type linear \
    --loss_scaling 64 \
    --weight_decay 0.01 \
    --warmup_ratio 0.1 \
    --output_dir /tmp/debug_squad/
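If you prefer to launch the script from Python, for example to sweep over a few learning rates, the same invocation can be assembled as an argument list. This is only a sketch: the helper build_run_qa_command is hypothetical, it keeps a single --output_dir, and it checks up front for the ipu_config.json file that Optimum is described as looking for.

```python
import os
import sys


def build_run_qa_command(ipu_config_dir, output_dir="/tmp/debug_squad/"):
    """Build the run_qa.py argument list used in this guide."""
    # Fail early if the directory does not hold an ipu_config.json file.
    config_path = os.path.join(ipu_config_dir, "ipu_config.json")
    if not os.path.isfile(config_path):
        raise FileNotFoundError(f"No ipu_config.json found in {ipu_config_dir!r}")
    return [
        sys.executable, "run_qa.py",  # same interpreter as the active environment
        "--ipu_config_name", ipu_config_dir,
        "--model_name_or_path", "bert-base-uncased",
        "--dataset_name", "squad",
        "--do_train",
        "--do_eval",
        "--overwrite_output_dir",
        "--per_device_train_batch_size", "2",
        "--per_device_eval_batch_size", "2",
        "--learning_rate", "6e-5",
        "--num_train_epochs", "3",
        "--max_seq_length", "384",
        "--doc_stride", "128",
        "--seed", "1984",
        "--lr_scheduler_type", "linear",
        "--loss_scaling", "64",
        "--weight_decay", "0.01",
        "--warmup_ratio", "0.1",
        "--output_dir", output_dir,
    ]
```

From the examples/question-answering folder, the resulting list can be passed to subprocess.run(build_run_qa_command("./"), check=True).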

A closer look at Optimum-Graphcore

Getting the data

A very simple way to get datasets is to use the Hugging Face Datasets library, which makes it easy for developers to download and share datasets on the Hugging Face Hub. It also has pre-built data versioning based on git and git-lfs, so you can iterate on updated versions of the data by just pointing to the same repo.

Here, the dataset comes with the training and validation files, and with dataset configs that determine which inputs to use in each model execution phase. The argument --dataset_name squad points to SQuAD v1.1 on the Hugging Face Hub. You could also provide your own CSV/JSON/TXT training and evaluation files, as long as they follow the same format as the SQuAD dataset or another question-answering dataset in the Datasets library.
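If you do bring your own files, a quick sanity check of the record layout can save a failed run. The sketch below assumes the field names used by the squad dataset on the Hub (id, title, context, question, and answers with parallel text and answer_start lists). The helper check_squad_record and the sample record are made up for illustration.

```python
def check_squad_record(record):
    """Return a list of problems found in one SQuAD-style record (empty if none)."""
    problems = []
    for field in ("id", "title", "context", "question", "answers"):
        if field not in record:
            problems.append(f"missing field: {field}")
    if problems:
        return problems

    texts = record["answers"].get("text", [])
    starts = record["answers"].get("answer_start", [])
    if len(texts) != len(starts):
        problems.append("answers.text and answers.answer_start differ in length")
    for text, start in zip(texts, starts):
        # answer_start is a character offset into the context string.
        if record["context"][start:start + len(text)] != text:
            problems.append(f"answer {text!r} not found at offset {start}")
    return problems


# A made-up record in the assumed layout.
context = "The IPU is a parallel processor designed for AI workloads."
example = {
    "id": "example-0",
    "title": "Graphcore",
    "context": context,
    "question": "What is the IPU designed for?",
    "answers": {
        "text": ["AI workloads"],
        "answer_start": [context.index("AI workloads")],
    },
}

if __name__ == "__main__":
    print(check_squad_record(example) or "record looks fine")
```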

Loading the pretrained model and tokenizer

To turn words into tokens, this script requires a fast tokenizer. It will raise an error if you do not pass one. For reference, here’s the list of supported tokenizers.

from transformers import PreTrainedTokenizerFast

# Tokenizer check: this script requires a fast tokenizer.
# `tokenizer` is the tokenizer object loaded earlier in run_qa.py.
if not isinstance(tokenizer, PreTrainedTokenizerFast):
    raise ValueError(
        "This example script only works for models that have a fast tokenizer. Checkout the big table of models "
        "at https://huggingface.co/transformers/index.html#supported-frameworks to find the model types that meet this "
        "requirement"
    )

The argument --model_name_or_path bert-base-uncased loads the bert-base-uncased model implementation available on the Hugging Face Hub.

From the Hugging Face Hub description:

“BERT base model (uncased): Pretrained model on English language using a masked language modeling (MLM) objective. It was introduced in this paper and first released in this repository. This model is uncased: it does not make a difference between english and English.”
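To make “uncased” concrete, here is a simplified illustration of the kind of normalization an uncased model applies before tokenization: lowercasing and stripping accent marks. This is not the tokenizer’s actual code, and the function name uncased is hypothetical.

```python
import unicodedata


def uncased(text):
    """Lowercase the text and strip accent marks (simplified illustration)."""
    # NFD splits accented characters into a base character plus combining marks.
    decomposed = unicodedata.normalize("NFD", text.lower())
    # Category "Mn" (nonspacing mark) covers the combining accents to drop.
    return "".join(ch for ch in decomposed if unicodedata.category(ch) != "Mn")


if __name__ == "__main__":
    print(uncased("English") == uncased("english"))
```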

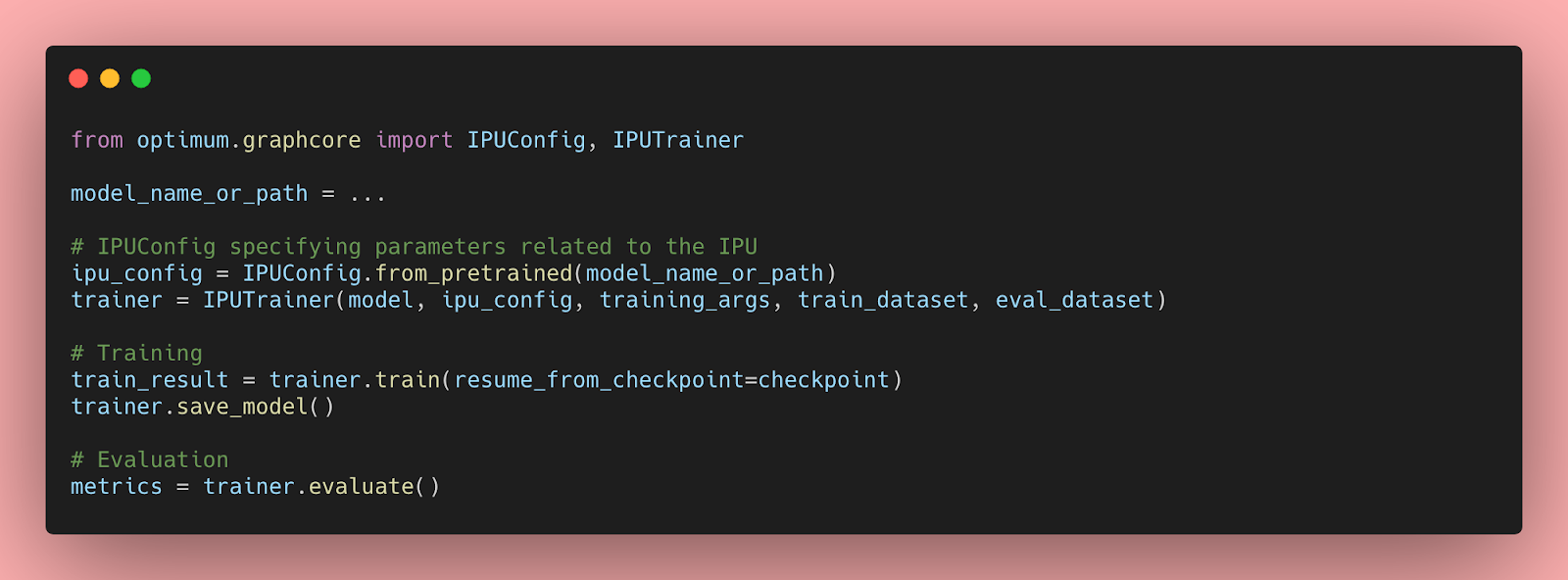

Training and Validation

You can now use the IPUTrainer class available in Optimum to leverage the entire Graphcore software and hardware stack, and train your models on IPUs with minimal code changes. Thanks to Optimum, you can plug-and-play state-of-the-art hardware to train your state-of-the-art models.

In order to train and validate the BERT model, you can pass the arguments --do_train and --do_eval to the run_qa.py script. After executing the script with the hyper-parameters above, you should see the following training and validation results:

"epoch": 3.0,

"train_loss": 0.9465060763888888,

"train_runtime": 368.4015,

"train_samples": 88524,

"train_samples_per_second": 720.877,

"train_steps_per_second": 2.809
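The reported throughput can be cross-checked from the other logged values: samples processed (train_samples times epochs) divided by train_runtime. The short calculation below does that, and also estimates the number of optimizer steps and the samples consumed per step. Those last two figures are inferred from the log, not reported by it, so treat them as approximate.

```python
# Values copied from the training log above.
train_samples = 88524
num_epochs = 3.0
train_runtime_s = 368.4015
reported_samples_per_s = 720.877
reported_steps_per_s = 2.809

# Throughput recomputed from samples, epochs and runtime.
samples_processed = train_samples * num_epochs
samples_per_s = samples_processed / train_runtime_s

# Inferred, not logged: total optimizer steps and samples per step.
approx_steps = round(reported_steps_per_s * train_runtime_s)
approx_samples_per_step = samples_processed / approx_steps

print(f"recomputed samples/s: {samples_per_s:.3f} (reported {reported_samples_per_s})")
print(f"approx. optimizer steps: {approx_steps}")
print(f"approx. samples per step: {approx_samples_per_step:.1f}")
```

The samples-per-step estimate is far larger than the per-device batch size of 2 passed on the command line, which suggests that the settings in ipu_config.json multiply the effective batch size. The exact factors are not shown in this post.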

The validation step yields the following results:

***** eval metrics *****

epoch = 3.0

eval_exact_match = 80.6623

eval_f1 = 88.2757

eval_samples = 10784

You can see the rest of the IPU BERT implementation in the Optimum-Graphcore: SQuAD Examples.