A few weeks ago, we had the pleasure of announcing that Habana Labs and Hugging Face would partner to accelerate Transformer model training.

Habana Gaudi accelerators deliver up to 40% better price performance for training machine learning models compared to the latest GPU-based Amazon EC2 instances. We’re super excited to bring these price performance benefits to Transformers 🚀

In this hands-on post, I’ll show you how to quickly set up a Habana Gaudi instance on Amazon Web Services, and then fine-tune a BERT model for text classification. As usual, all code is provided so that you can reuse it in your projects.

Let’s start!

Setting up a Habana Gaudi instance on AWS

The simplest way to work with Habana Gaudi accelerators is to launch an Amazon EC2 DL1 instance. These instances are equipped with 8 Habana Gaudi processors that can easily be put to work thanks to the Habana Deep Learning Amazon Machine Image (AMI). This AMI comes preinstalled with the Habana SynapseAI® SDK and the tools required to run Gaudi-accelerated Docker containers. If you’d like to use other AMIs or containers, instructions are available in the Habana documentation.

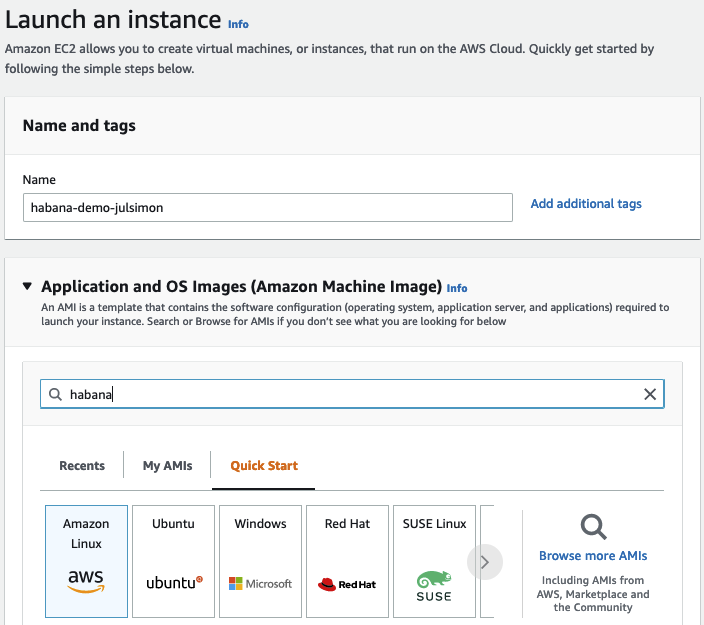

Starting from the EC2 console in the us-east-1 region, I first click on Launch an instance and define a name for the instance (“habana-demo-julsimon”).

Then, I search the Amazon Marketplace for Habana AMIs.

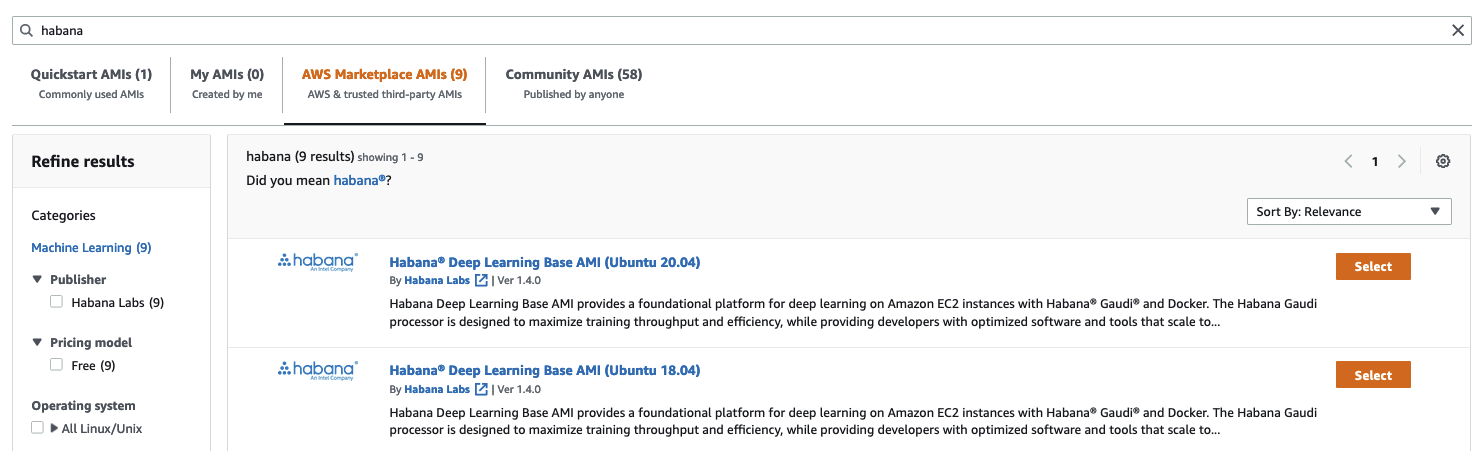

I pick the Habana Deep Learning Base AMI (Ubuntu 20.04).

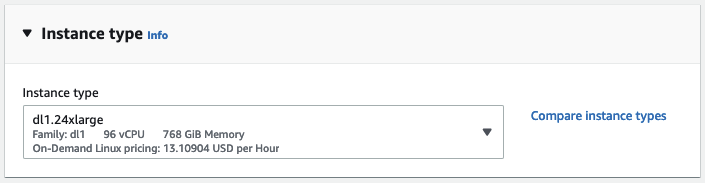

Next, I pick the dl1.24xlarge instance size (the only size available).

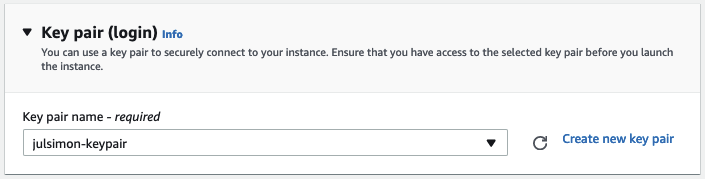

Then, I select the key pair that I’ll use to connect to the instance with ssh. If you don’t have a key pair, you can create one in place.

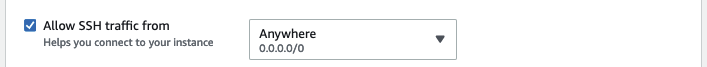

As a next step, I make sure that the instance allows incoming ssh traffic. I don’t restrict the source address for simplicity, but you should definitely do it in your account.
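To illustrate what restricting the source address could look like, here is a minimal sketch that builds an ingress rule allowing ssh from a single IPv4 address. The dictionary follows the shape of one `IpPermissions` entry as accepted by the EC2 `AuthorizeSecurityGroupIngress` API; the helper name `ssh_ingress_rule` and the address are hypothetical placeholders, not something used in this walkthrough.

```python
import ipaddress


def ssh_ingress_rule(source_ip: str) -> dict:
    """Build an ingress rule that allows ssh (TCP port 22) from one address only."""
    # Validate the address, then express it as a single-host CIDR block.
    address = ipaddress.ip_address(source_ip)
    if address.version != 4:
        raise ValueError("this sketch only handles IPv4 addresses")
    return {
        "IpProtocol": "tcp",
        "FromPort": 22,
        "ToPort": 22,
        "IpRanges": [
            {"CidrIp": f"{address}/32", "Description": "ssh from my workstation"}
        ],
    }


# 203.0.113.10 is a documentation-only placeholder; use your own public address.
rule = ssh_ingress_rule("203.0.113.10")
print(rule["IpRanges"][0]["CidrIp"])
```

The `/32` suffix limits the rule to exactly one host, which is the narrowest source range you can give a security group.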

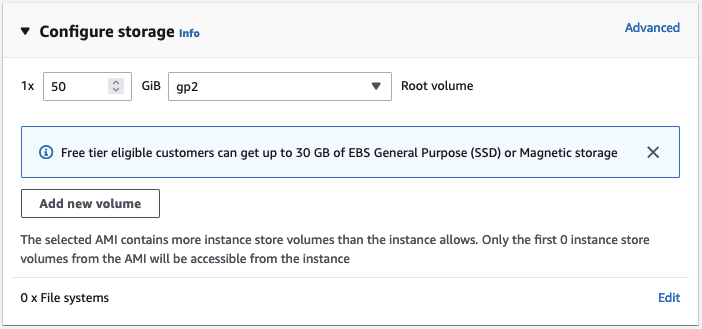

By default, this AMI will start an instance with 8GB of Amazon EBS storage, which won’t be enough here. I bump storage to 50GB.

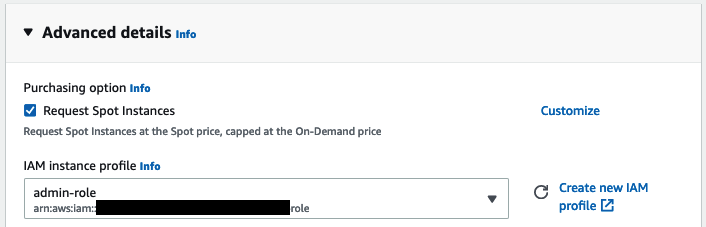

Next, I assign an Amazon IAM role to the instance. In real life, this role should have the minimum set of permissions required to run your training job, such as the ability to read data from one of your Amazon S3 buckets. This role is not needed here as the dataset will be downloaded from the Hugging Face hub. If you’re not familiar with IAM, I highly recommend reading the Getting Started documentation.

Then, I ask EC2 to provision my instance as a Spot Instance, a great way to reduce the $13.11 per hour cost.

Finally, I launch the instance. A couple of minutes later, the instance is ready and I can connect to it with ssh. Windows users can do the same with PuTTY by following the documentation.
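As an illustration of a minimum set of permissions, here is a sketch of a policy document that only allows listing and reading a single S3 bucket. The action names and ARN formats are standard IAM ones; the bucket name and the helper `s3_read_only_policy` are hypothetical, and your own job may need different permissions.

```python
import json


def s3_read_only_policy(bucket_name: str) -> dict:
    """Build an IAM policy document that only allows reading one S3 bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is granted on the bucket itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket_name}"],
            },
            {
                # Reading is granted on the objects inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket_name}/*"],
            },
        ],
    }


# "my-training-data" is a hypothetical bucket name.
policy = s3_read_only_policy("my-training-data")
print(json.dumps(policy, indent=2))
```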
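If you prefer scripting to clicking, the console choices made so far could be collected as keyword arguments for the EC2 `RunInstances` API. This is only a sketch: the AMI ID and key pair name are placeholders, `/dev/sda1` is assumed to be the root device name of the AMI, and the security group and IAM role are left out. The function itself only builds a dictionary and doesn’t call AWS.

```python
def dl1_launch_parameters(ami_id: str, key_name: str, instance_name: str) -> dict:
    """Collect the console choices as keyword arguments for EC2 RunInstances."""
    return {
        "ImageId": ami_id,
        "InstanceType": "dl1.24xlarge",
        "KeyName": key_name,
        "MinCount": 1,
        "MaxCount": 1,
        # 50 GB of EBS storage instead of the 8 GB default.
        # "/dev/sda1" is an assumed root device name; check it for your AMI.
        "BlockDeviceMappings": [
            {"DeviceName": "/dev/sda1", "Ebs": {"VolumeSize": 50}}
        ],
        # Request Spot capacity rather than On-Demand.
        "InstanceMarketOptions": {"MarketType": "spot"},
        "TagSpecifications": [
            {
                "ResourceType": "instance",
                "Tags": [{"Key": "Name", "Value": instance_name}],
            }
        ],
    }


# The AMI ID and key pair name below are placeholders, not real values.
params = dl1_launch_parameters("ami-PLACEHOLDER", "my-keypair", "habana-demo")
print(params["InstanceType"], params["InstanceMarketOptions"]["MarketType"])

# With boto3 installed and credentials configured, the launch itself would be:
#   import boto3
#   boto3.client("ec2", region_name="us-east-1").run_instances(**params)
```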

ssh -i ~/.ssh/julsimon-keypair.pem ubuntu@ec2-18-207-189-109.compute-1.amazonaws.com

On this instance, the last setup step is to pull the Habana container for PyTorch, which is the framework I’ll use to fine-tune my model. You can find information on other prebuilt containers and on how to build your own in the Habana documentation.

docker pull vault.habana.ai/gaudi-docker/1.5.0/ubuntu20.04/habanalabs/pytorch-installer-1.11.0:1.5.0-610
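The image name is long, so it may help to see how it appears to be put together. The sketch below splits it into parts; reading the path segments as SynapseAI version, operating system, and framework version is my assumption based on the name itself, and `describe_image` is a hypothetical helper, not a Habana tool.

```python
IMAGE = (
    "vault.habana.ai/gaudi-docker/1.5.0/ubuntu20.04/"
    "habanalabs/pytorch-installer-1.11.0:1.5.0-610"
)


def describe_image(reference: str) -> dict:
    """Split a Habana image reference into its apparent components."""
    # The tag follows the last colon; everything before it is the image path.
    path, tag = reference.rsplit(":", 1)
    registry, _repository, sdk_version, os_name, _vendor, name = path.split("/")
    # Assumption: the framework version is the last dash-separated part of the name.
    framework, framework_version = name.rsplit("-", 1)
    return {
        "registry": registry,
        "sdk_version": sdk_version,
        "os": os_name,
        "framework": framework,
        "framework_version": framework_version,
        "tag": tag,
    }


info = describe_image(IMAGE)
print(info)
```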

Once the image has been pulled to the instance, I run it in interactive mode.

docker run -it \
  --runtime=habana \
  -e HABANA_VISIBLE_DEVICES=all \
  -e OMPI_MCA_btl_vader_single_copy_mechanism=none \
  --cap-add=sys_nice \
  --net=host \
  --ipc=host vault.habana.ai/gaudi-docker/1.5.0/ubuntu20.04/habanalabs/pytorch-installer-1.11.0:1.5.0-610

I’m now ready to fine-tune my model.

Fine-tuning a text classification model on Habana Gaudi

I first clone the Optimum Habana repository inside the container I’ve just started.

git clone https://github.com/huggingface/optimum-habana.git

Then, I install the Optimum Habana package from source.

cd optimum-habana

pip install .

Then, I move to the subdirectory containing the text classification example and install the required Python packages.

cd examples/text-classification

pip install -r requirements.txt

I can now launch the training job, which downloads the bert-large-uncased-whole-word-masking model from the Hugging Face hub, and fine-tunes it on the MRPC task of the GLUE benchmark.

Please note that I’m fetching the Habana Gaudi configuration for BERT from the Hugging Face hub, and you could also use your own. In addition, other popular models are supported, and you can find their configuration files in the Habana organization.

python run_glue.py \
  --model_name_or_path bert-large-uncased-whole-word-masking \
  --gaudi_config_name Habana/bert-large-uncased-whole-word-masking \
  --task_name mrpc \
  --do_train \
  --do_eval \
  --per_device_train_batch_size 32 \
  --learning_rate 3e-5 \
  --num_train_epochs 3 \
  --max_seq_length 128 \
  --use_habana \
  --use_lazy_mode \
  --output_dir ./output/mrpc/
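If you plan to run several experiments, you may prefer to keep the hyperparameters in one place and generate the command from them. Here is a small sketch of that idea; the flags and values are copied from the command above, while `build_command` is a hypothetical helper of my own.

```python
import shlex

# Options that take a value, copied from the command above.
options = {
    "model_name_or_path": "bert-large-uncased-whole-word-masking",
    "gaudi_config_name": "Habana/bert-large-uncased-whole-word-masking",
    "task_name": "mrpc",
    "per_device_train_batch_size": 32,
    "learning_rate": "3e-5",
    "num_train_epochs": 3,
    "max_seq_length": 128,
    "output_dir": "./output/mrpc/",
}
# Boolean switches take no value.
switches = ["do_train", "do_eval", "use_habana", "use_lazy_mode"]


def build_command(script: str, options: dict, switches: list) -> list:
    """Turn a dict of options and a list of switches into an argument list."""
    command = ["python", script]
    for name, value in options.items():
        command += [f"--{name}", str(value)]
    command += [f"--{name}" for name in switches]
    return command


command = build_command("run_glue.py", options, switches)
print(shlex.join(command))

# Inside the container, from examples/text-classification, this could be run with:
#   import subprocess
#   subprocess.run(command, check=True)
```

The flags come out in a different order than in the command above, which should not matter since they are all named arguments.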

After 2 minutes and 12 seconds, the job is complete and has achieved an excellent F1 score of 0.9181, which could certainly improve with more epochs.

***** train metrics *****
epoch = 3.0
train_loss = 0.371
train_runtime = 0:02:12.85
train_samples = 3668
train_samples_per_second = 82.824
train_steps_per_second = 2.597

***** eval metrics *****
epoch = 3.0
eval_accuracy = 0.8505
eval_combined_score = 0.8736
eval_f1 = 0.8968
eval_loss = 0.385
eval_runtime = 0:00:06.45
eval_samples = 408
eval_samples_per_second = 63.206
eval_steps_per_second = 7.901
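To see how these numbers relate to each other, here is a quick sanity check in Python. It assumes a single device with a batch size of 32 (the command above doesn’t use a distributed launcher) and that the combined score is the mean of accuracy and F1; both assumptions are consistent with the figures reported.

```python
import math

train_samples = 3668
epochs = 3
batch_size = 32                    # --per_device_train_batch_size, one device assumed
train_runtime = 2 * 60 + 12.85     # 0:02:12.85 expressed in seconds

# One optimizer step per batch; the last batch of each epoch is partial.
steps_per_epoch = math.ceil(train_samples / batch_size)
total_steps = steps_per_epoch * epochs

samples_per_second = train_samples * epochs / train_runtime
steps_per_second = total_steps / train_runtime

# Assumption: the combined score is the mean of accuracy and F1.
eval_accuracy, eval_f1 = 0.8505, 0.8968
combined_score = (eval_accuracy + eval_f1) / 2

print(f"total steps: {total_steps}")
print(f"samples/s: {samples_per_second:.3f}, steps/s: {steps_per_second:.3f}")
print(f"combined score: {combined_score:.4f}")
```

The recomputed throughput and combined score land within rounding distance of the reported values.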

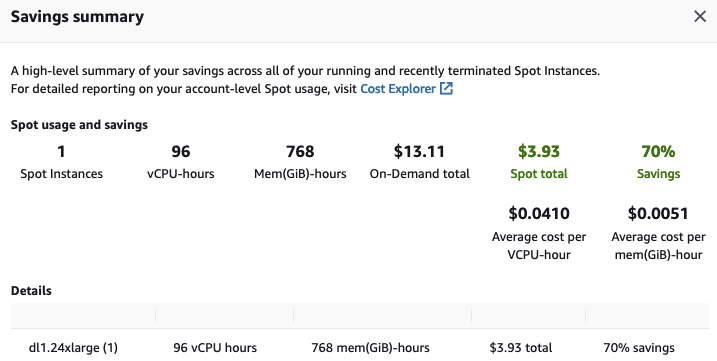

Last but not least, I terminate the EC2 instance to avoid unnecessary charges. Looking at the Savings Summary in the EC2 console, I see that I saved 70% thanks to Spot Instances, paying only $3.93 per hour instead of $13.11.

As you can see, the combination of Transformers, Habana Gaudi, and AWS instances is powerful, simple, and cost-effective. Give it a try and let us know what you think. We definitely welcome your questions and feedback on the Hugging Face Forum.
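The arithmetic behind that figure is easy to reproduce. The sketch below uses the two hourly rates quoted above, plus the training runtime from the metrics, to estimate what the fine-tuning job alone costs. It ignores setup time, storage, and data transfer, so treat it as a rough lower bound rather than a bill.

```python
on_demand_rate = 13.11   # USD per hour, On-Demand price quoted above
spot_rate = 3.93         # USD per hour, Spot price from the Savings Summary

# Fraction of the On-Demand price that Spot saves.
savings = 1 - spot_rate / on_demand_rate
print(f"Spot discount: {savings:.0%}")

# Cost of the training run alone (0:02:12.85 of training time).
train_hours = (2 * 60 + 12.85) / 3600
spot_cost = spot_rate * train_hours
on_demand_cost = on_demand_rate * train_hours
print(f"Training cost on Spot: ${spot_cost:.2f}")
print(f"Training cost On-Demand: ${on_demand_cost:.2f}")
```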

Please reach out to Habana to learn more about training Hugging Face models on Gaudi processors.