Understanding your users’ needs is crucial in any user-related business. But it also requires a lot of manual work and analysis, which is quite expensive. Why not leverage Machine Learning then, with far less coding thanks to AutoML?

In this article, we will leverage HuggingFace AutoTrain and Kili to build an active learning pipeline for text classification. Kili is a platform that empowers a data-centric approach to Machine Learning through quality training data creation. It provides collaborative data annotation tools and APIs that enable quick iterations between reliable dataset building and model training. Active learning is a process in which you add labeled data to the dataset and then retrain a model iteratively. It is therefore open-ended and requires humans to label the data.

As a concrete example use case for this article, we will build our pipeline using user reviews of Medium from the Google Play Store. After that, we will categorize the reviews with the pipeline we built. Finally, we will apply sentiment analysis to the classified reviews. Once we analyze the results, understanding the users’ needs and satisfaction will be much easier.

AutoTrain with HuggingFace

Automated Machine Learning is a term for automating a Machine Learning pipeline. It includes data cleaning, model selection, and hyper-parameter optimization. We can use 🤗 transformers for automated hyper-parameter search. Hyper-parameter optimization is a difficult and time-consuming process.

While we can build our pipeline ourselves using transformers and other powerful APIs, it is also possible to fully automate this with AutoTrain. AutoTrain is built on many powerful APIs like transformers, datasets and inference-api.

Data cleaning, model selection, and hyper-parameter optimization are all fully automated in AutoTrain. One can fully utilize this framework to build production-ready SOTA transformer models for a specific task. Currently, AutoTrain supports binary and multi-label text classification, token classification, extractive question answering, text summarization, and text scoring. It also supports many languages like English, German, French, Spanish, Finnish, Swedish, Hindi, Dutch, and more. If your language is not supported by AutoTrain, it is also possible to use custom models with custom tokenizers.

Kili

Kili is an end-to-end AI training platform for data-centric businesses. Kili provides optimized labeling features and quality management tools to manage your data. You can quickly annotate image, video, text, pdf, and voice data while controlling the quality of the dataset. It also has powerful GraphQL and Python APIs, which ease data management a lot.

It is available either online or on-premise, and it enables modern Machine Learning techniques for computer vision as well as NLP and OCR. It supports text classification, named entity recognition (NER), relation extraction, and more NLP/OCR tasks. It also supports computer vision tasks like object detection, image transcription, video classification, semantic segmentation, and many more!

Kili is a commercial tool, but you can also create a free developer account to try Kili’s tools. You can learn more from the pricing page.

Project

We will work on an example of review classification, along with sentiment analysis, to get insights about a mobile application.

We have extracted around 40 thousand reviews of Medium from the Google Play Store. We will annotate the review texts in this dataset step by step. Then we will build a pipeline for review classification. In the modeling, the first model will be prepared with AutoTrain. Then we will also build a model without using AutoTrain.

All the code and the dataset can be found in the GitHub repository of the project.

Dataset

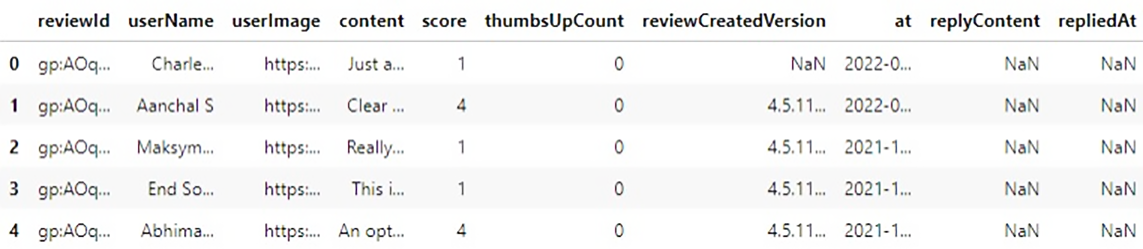

Let’s start by taking a look at the raw dataset.

There are 10 columns and 40130 samples in this dataset. The only column we need is content, which is the review of the user. Before starting, we need to define some categories.

We have defined 4 categories:

- Subscription: Since Medium has a subscription option, anything related to users’ opinions about subscription features should belong here.

- Content: Medium is a sharing platform with lots of writing, from poetry to advanced artificial intelligence research. Users’ opinions about the variety of topics and the quality of the content should belong here.

- Interface: Thoughts about the UI, searching articles, the recommendation engine, and anything related to the interface should belong here. This also includes payment-related issues.

- User Experience: The user’s general thoughts and opinions about the application, which should be generally abstract without pointing to another category.

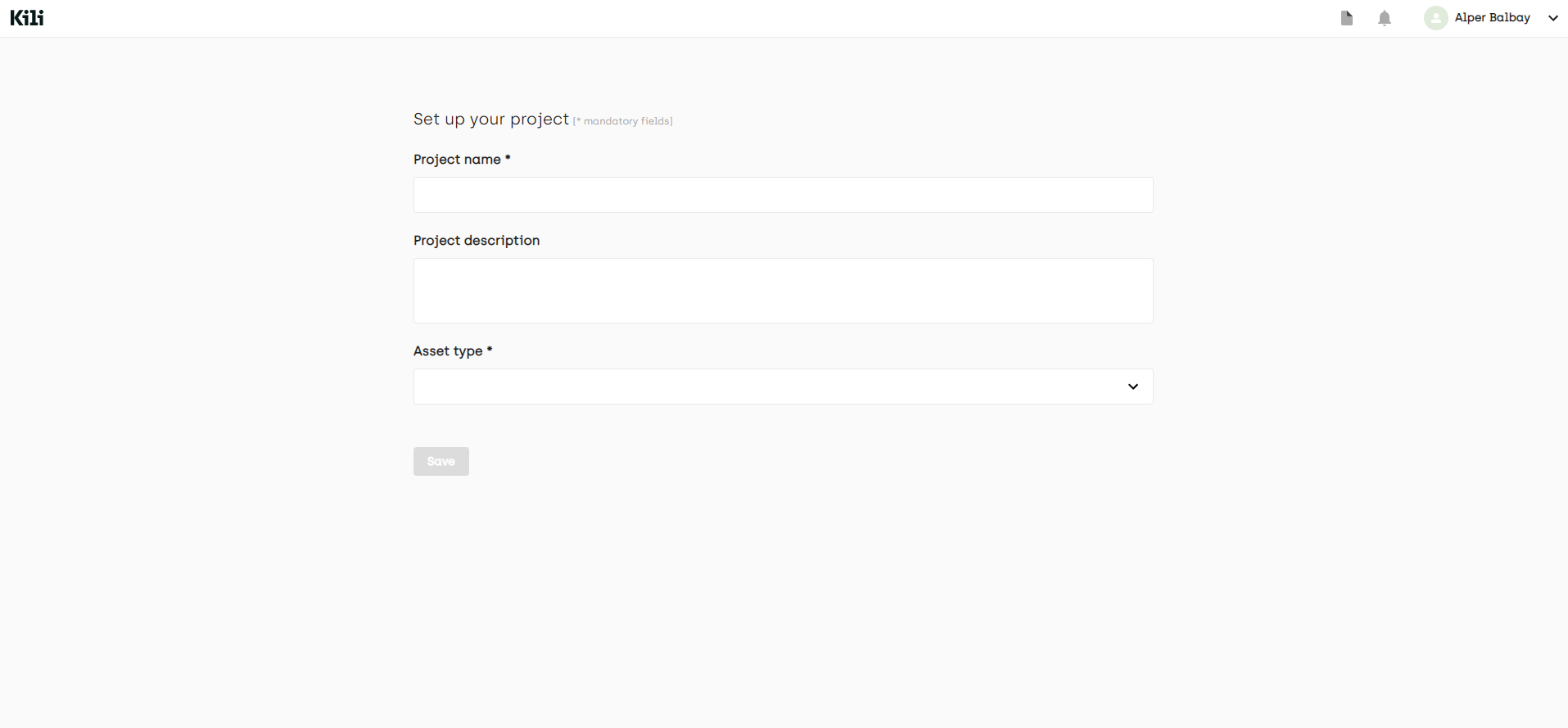

For the labeling part, we first need to create a project on Kili’s platform. We can use either the web interface of the platform or the APIs. Let’s see both.

From the web interface:

From the project list page, we create a multi-class text classification project.

After that, on the project’s page, you can add your data by clicking the Add assets button. Currently, you can add at most 25000 samples, but you can extend this limit if you contact the Kili sales team.

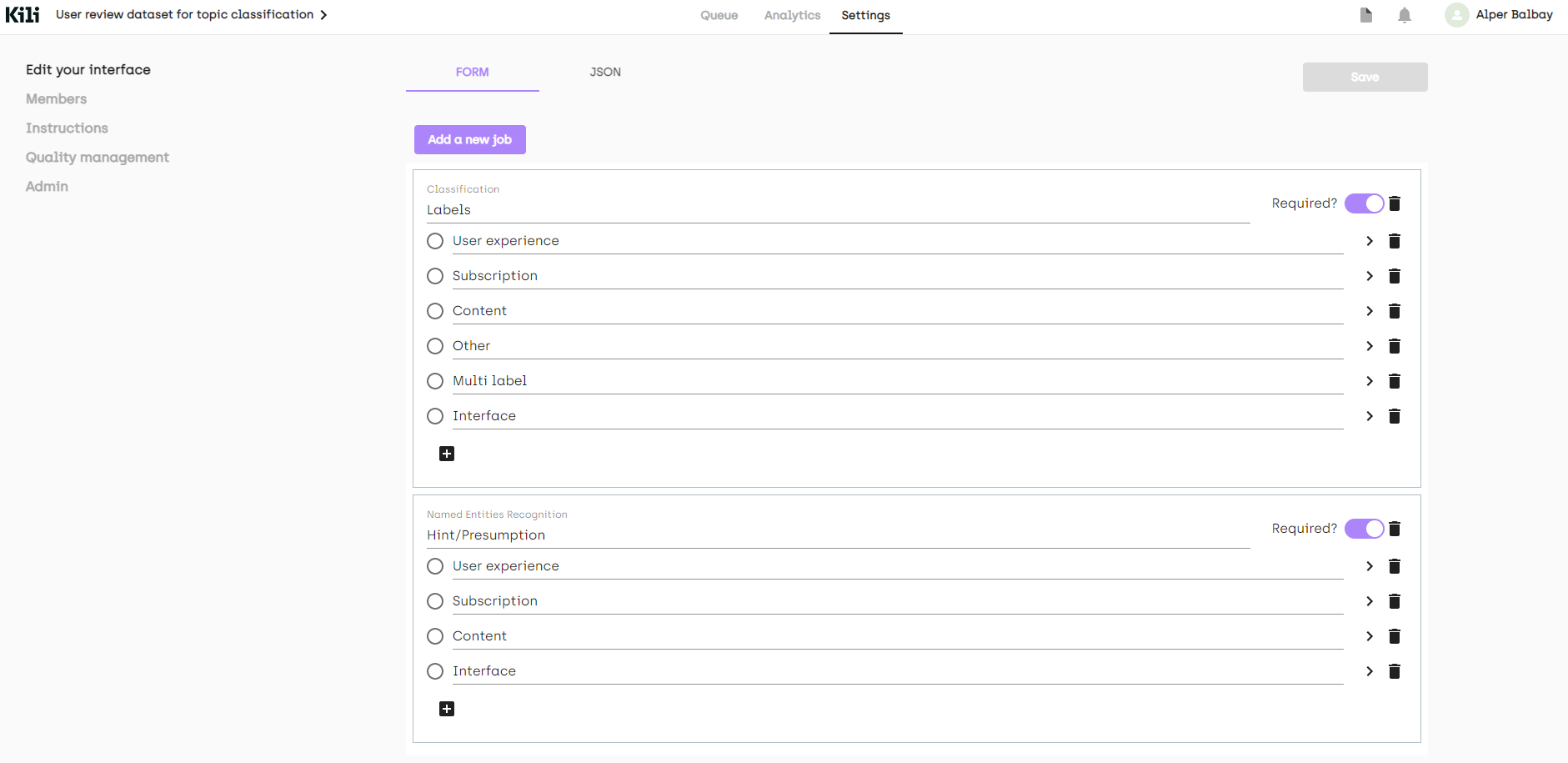

After we create our project, we need to add jobs. We can prepare a labeling interface from the Settings page.

Although we have defined 4 categories, it is inevitable to come across reviews that should have multiple categories or are completely weird. I will add two more labels (which are not used in modeling) to catch these cases too.

In our example, we added two more labels (Other, Multi-label). We also added a named entity recognition (NER) job just to specify how we decided on a label while labeling. The final interface is shown below.

As you can see from the menu on the left, it is also possible to drop a link that describes your labels on the Instructions page. We can also add other members to our project from Members or add quality measures from the Quality management pages. More information can be found in the documentation.

Now, let’s create our project with the Python API:

First, we need to import the necessary libraries.

(notebooks/kili_project_management.ipynb)

import os

import pandas as pd

from kili.client import Kili

from tqdm import tqdm

from dotenv import load_dotenv

load_dotenv()

In order to access the platform, we need to authenticate our client.

API_KEY = os.getenv('KILI_API_KEY')

kili = Kili(api_key = API_KEY)

Now we can start preparing our interface; the interface is just a dictionary in Python. We will define our jobs, then fill in the labels. Since every label can also have child labels, we pass the labels as dictionaries too.

labels = ['User experience', 'Subscription', 'Content', 'Interface', 'Other', 'Multi label']

entity_dict = {

'User experience': '#cc4125',

'Subscription': '#4543e6',

'Content': '#3edeb6',

}

project_name = 'User review dataset for topic classification'

project_description = "Medium's app reviews fetched from google play store for topic classification"

interface = {

'jobs': {

'JOB_0': {

'mlTask': 'CLASSIFICATION',

'instruction': 'Labels',

'required': 1,

'content': {

"categories": {},

"input": "radio",

},

},

'JOB_1': {

'mlTask': "NAMED_ENTITIES_RECOGNITION",

'instruction': 'Entities',

'required': 1,

'content': {

'categories': {},

"input": "radio"

},

},

}

}

for label in labels:
    label_upper = label.strip().upper().replace(' ', '_')
    content_dict_0 = interface['jobs']['JOB_0']['content']
    categories_0 = content_dict_0['categories']
    category = {'name': label, 'children': []}
    categories_0[label_upper] = category

for label, color in entity_dict.items():
    label_upper = label.strip().upper().replace(' ', '_')
    content_dict_1 = interface['jobs']['JOB_1']['content']
    categories_1 = content_dict_1['categories']
    category = {'name': label, 'children': [], 'color': color}
    categories_1[label_upper] = category

project_id = kili.create_project(json_interface=interface,

input_type='TEXT',

title=project_name,

description=project_description)['id']
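The two loops above do the same thing for each job: they normalize the label into an upper-case key and attach a category entry. As a rough sketch, this can be factored into a small helper. Note that `build_categories` is a hypothetical name, not part of the Kili API, and the dictionary layout simply mirrors the interface shown above.

```python
def build_categories(labels, colors=None):
    """Build the `categories` mapping of a job from human-readable labels.

    `colors` is an optional {label: hex_color} mapping, as used for the NER job.
    """
    colors = colors or {}
    categories = {}
    for label in labels:
        # Same key normalization as in the loops above.
        key = label.strip().upper().replace(' ', '_')
        category = {'name': label, 'children': []}
        if label in colors:
            category['color'] = colors[label]
        categories[key] = category
    return categories


classification_categories = build_categories(['User experience', 'Multi label'])
entity_categories = build_categories(['Content'], colors={'Content': '#3edeb6'})
print(sorted(classification_categories))
```

The returned dictionaries could then be assigned to the `categories` entry of each job before creating the project.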

We are ready to upload our data to the project. The append_many_to_dataset method can be used to import the data into the platform. With the Python API, we can import the data in batches of at most 100. Here is a simple function to upload the data:

def import_dataframe(project_id:str, dataset:pd.DataFrame, text_data_column:str, external_id_column:str, subset_size:int=100) -> bool:
    """
    Arguments:
    Inputs
        - project_id (str): specifies the project to load the data into; this is returned when we create our project
        - dataset (pandas DataFrame): dataset that has proper columns for id and text inputs
        - text_data_column (str): specifies which column has the text input data
        - external_id_column (str): specifies which column has the ids
        - subset_size (int): specifies the number of samples to import at a time. Cannot be higher than 100

    Outputs:
        None

    Returns:
        True once the import loop has finished
    """
    assert subset_size <= 100, "Kili only allows to upload 100 assets at most at a time onto the app"

    L = len(dataset)
    if L > 25000:
        print('Kili Projects currently supports maximum 25000 samples as default. Importing first 25000 samples...')
        L = 25000

    i = 0
    # Loop while i < L (not i + subset_size < L) so the final, possibly partial, batch is uploaded too
    while i < L:
        subset = dataset.iloc[i:min(i + subset_size, L)]
        externalIds = subset[external_id_column].astype(str).to_list()
        contents = subset[text_data_column].astype(str).to_list()
        kili.append_many_to_dataset(project_id=project_id,
                                    content_array=contents,
                                    external_id_array=externalIds)
        i += subset_size

    return True

It simply imports the given dataset DataFrame into the project specified by project_id.

We can see the arguments in the docstring; we just need to pass our dataset along with the corresponding column names. We’ll just use the sample indices we get when we load the data. And then voila, uploading the data is done!

dataset_path = '../data/processed/lowercase_cleaned_dataset.csv'

df = pd.read_csv(dataset_path).reset_index()

import_dataframe(project_id, df, 'content', 'index')
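Because uploads are capped at 100 assets per call, the core of the helper is just a batching loop. The sketch below isolates that logic without calling Kili at all. Here `iter_batches` is a hypothetical helper name, and it makes sure the final, possibly smaller, batch is not dropped.

```python
def iter_batches(items, batch_size=100, limit=25000):
    """Yield consecutive slices of `items`, each at most `batch_size` long.

    Only the first `limit` items are considered, mirroring the default
    project size cap mentioned above.
    """
    if batch_size < 1:
        raise ValueError('batch_size must be at least 1')
    total = min(len(items), limit)
    for start in range(0, total, batch_size):
        yield items[start:min(start + batch_size, total)]


batch_sizes = [len(batch) for batch in iter_batches(list(range(250)))]
print(batch_sizes)
```

With 250 items and the default batch size, the last batch holds the remaining 50 items instead of being skipped.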

It wasn’t difficult to use the Python API; the helper methods we used covered many difficulties. We also used another script to check the new samples when we updated the dataset. Sometimes the model performance drops after a dataset update. This is due to simple mistakes like mislabeling and introducing bias to the dataset. The script simply authenticates and then moves the samples that differ between two given dataset versions to To Review. We can change the properties of a sample through the update_properties_in_assets method:

(scripts/move_diff_to_review.py)

from kili.client import Kili

from dotenv import load_dotenv

import os

import argparse

import pandas as pd

load_dotenv()

parser = argparse.ArgumentParser()

parser.add_argument('--first',

required=True,

type=str,

help='Path to first dataframe')

parser.add_argument('--second',

required=True,

type=str,

help='Path to second dataframe')

args = vars(parser.parse_args())

API_KEY = os.getenv('KILI_API_KEY')

kili = Kili(API_KEY)

df1 = pd.read_csv(args['first'])

df2 = pd.read_csv(args['second'])

diff_df = pd.concat((df1, df2)).drop_duplicates(keep=False)

diff_ids = diff_df['id'].to_list()

kili.update_properties_in_assets(diff_ids,

status_array=['TO_REVIEW'] * len(diff_ids))

print('SET %d ENTRIES TO BE REVIEWED!' % len(diff_df))
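The key step in this script is `pd.concat(...).drop_duplicates(keep=False)`, which keeps only the rows that occur exactly once across both files, that is, the rows that were added, removed, or changed between versions. To make that behavior concrete without pandas, here is a small standard-library sketch of the same idea. The rows and the `changed_rows` name are made up for illustration.

```python
from collections import Counter


def changed_rows(first, second):
    """Return rows that occur exactly once across both dataset versions.

    Each row is a tuple such as (id, content, label). Rows present unchanged
    in both versions occur twice and are therefore dropped.
    """
    combined = list(first) + list(second)
    counts = Counter(combined)
    return [row for row in combined if counts[row] == 1]


version_1 = [(1, 'great app', 'User experience'), (2, 'too expensive', 'Content')]
version_2 = [(1, 'great app', 'User experience'), (2, 'too expensive', 'Subscription')]

diff = changed_rows(version_1, version_2)
diff_ids = sorted({row[0] for row in diff})
print(diff_ids)
```

Note that a relabeled sample shows up twice in the difference, once per version, so the ids may need de-duplicating before they are counted or sent for review.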

Labeling

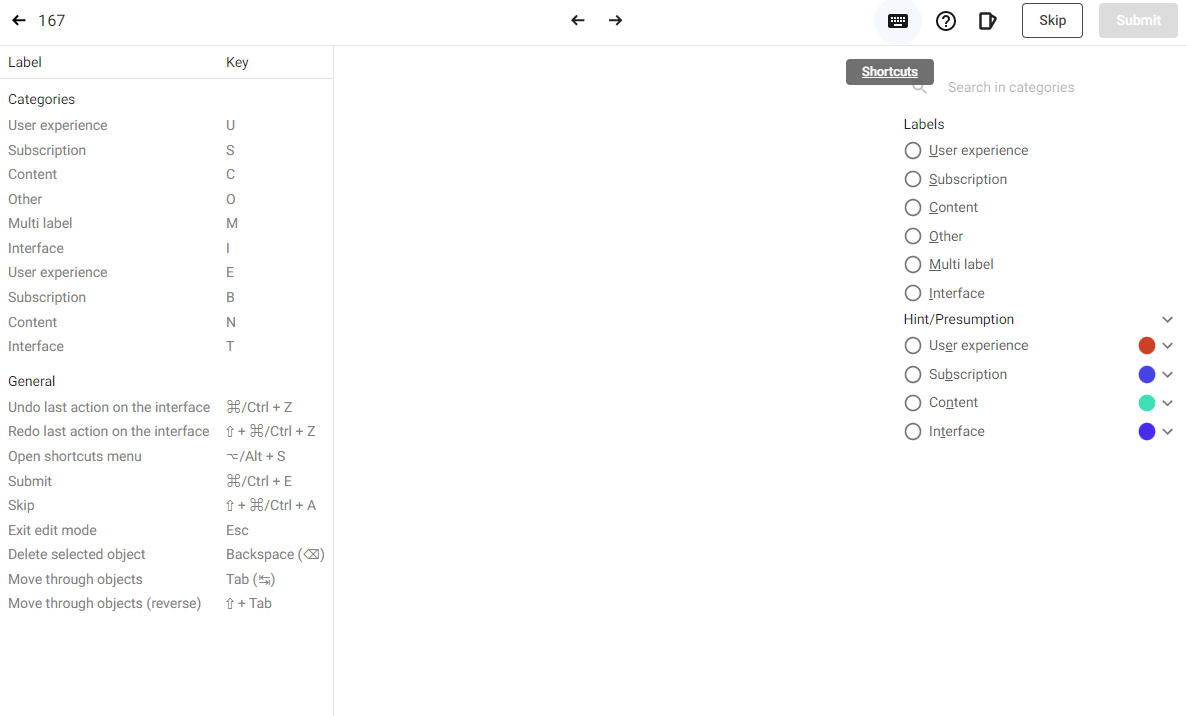

Now that we have the source data uploaded, we can start labeling. The platform has a built-in labeling interface that is pretty easy to use. The available keyboard shortcuts helped while annotating the data: shortcuts are defined automatically, which simplifies the labeling. We can see the shortcuts by clicking the keyboard icon at the upper right of the interface; they are also shown as underlined characters in the labeling interface on the right.

Some samples were very weird, so we decided to skip them while labeling. Overall, the process was much easier thanks to Kili’s built-in platform.

Exporting the Labeled Data

The labeled data is exported with ease using the Python API. The script below exports the labeled and reviewed samples into a dataframe, then saves it with a given name as a CSV file.

import argparse

import os

import pandas as pd

from dotenv import load_dotenv

from kili.client import Kili

load_dotenv()

parser = argparse.ArgumentParser()

parser.add_argument('--output_name',

required=True,

type=str,

default='dataset.csv')

parser.add_argument('--remove', required=False, type=str)

args = vars(parser.parse_args())

API_KEY = os.getenv('KILI_API_KEY')

dataset_path = '../data/processed/lowercase_cleaned_dataset.csv'

output_path = os.path.join('../data/processed', args['output_name'])

def extract_labels(labels_dict):
    response = labels_dict[-1]
    label_job_dict = response['jsonResponse']['JOB_0']
    categories = label_job_dict['categories']
    label = categories[0]['name']
    return label

kili = Kili(API_KEY)

print('Authenticated!')

project = kili.projects(

search_query='User review dataset for topic classification')[0]

project_id = project['id']

returned_fields = [

'id', 'externalId', 'labels.jsonResponse', 'skipped', 'status'

]

dataset = pd.read_csv(dataset_path)

df = kili.assets(project_id=project_id,

status_in=['LABELED', 'REVIEWED'],

fields=returned_fields,

format='pandas')

print('Got the samples!')

df_ns = df[~df['skipped']].copy()

df_ns.loc[:, 'label'] = df_ns['labels'].apply(extract_labels)

df_ns.loc[:, 'content'] = dataset.loc[df_ns.externalId.astype(int), 'content']

df_ns = df_ns.drop(columns=['labels'])

df_ns = df_ns[df_ns['label'] != 'MULTI_LABEL'].copy()

if args['remove']:
    df_ns = df_ns.drop(index=df_ns[df_ns['label'] == args['remove']].index)

print('DATA FETCHING DONE')

print('DATASET HAS %d SAMPLES' % (len(df_ns)))

print('SAVING THE PROCESSED DATASET TO: %s' % os.path.abspath(output_path))

df_ns.to_csv(output_path, index=False)

print('DONE!')
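To see what `extract_labels` is doing, it helps to look at the shape of the data it receives. The example below runs the same lookups on a hand-written list of labels. The payload is a simplified, made-up stand-in for what the API returns, containing only the keys the script reads, and it assumes the list is ordered from oldest to newest label.

```python
def extract_labels(labels):
    """Return the category name chosen in the last label of an asset."""
    latest = labels[-1]
    categories = latest['jsonResponse']['JOB_0']['categories']
    return categories[0]['name']


# Hypothetical label history for one asset: first labeled, then corrected.
asset_labels = [
    {'jsonResponse': {'JOB_0': {'categories': [{'name': 'CONTENT'}]}}},
    {'jsonResponse': {'JOB_0': {'categories': [{'name': 'SUBSCRIPTION'}]}}},
]

print(extract_labels(asset_labels))
```

Taking the last element means that a corrected label replaces an earlier one in the exported dataset.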

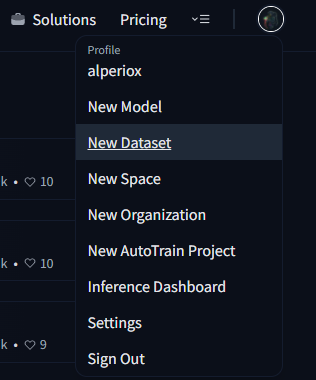

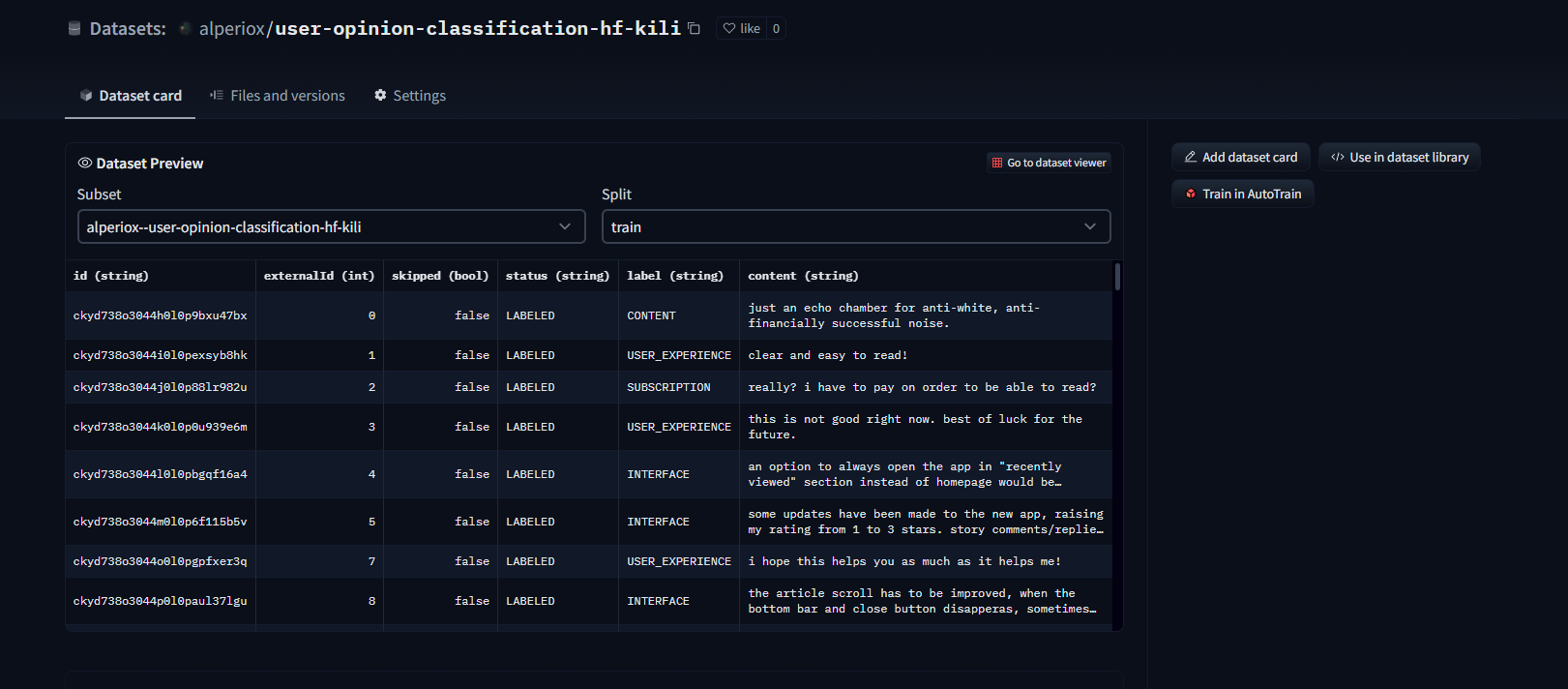

Nice! We now have the labeled data as a csv file. Let’s create a dataset repository in HuggingFace and upload the information there!

It’s really easy, just click your profile picture and choose Latest Dataset option.

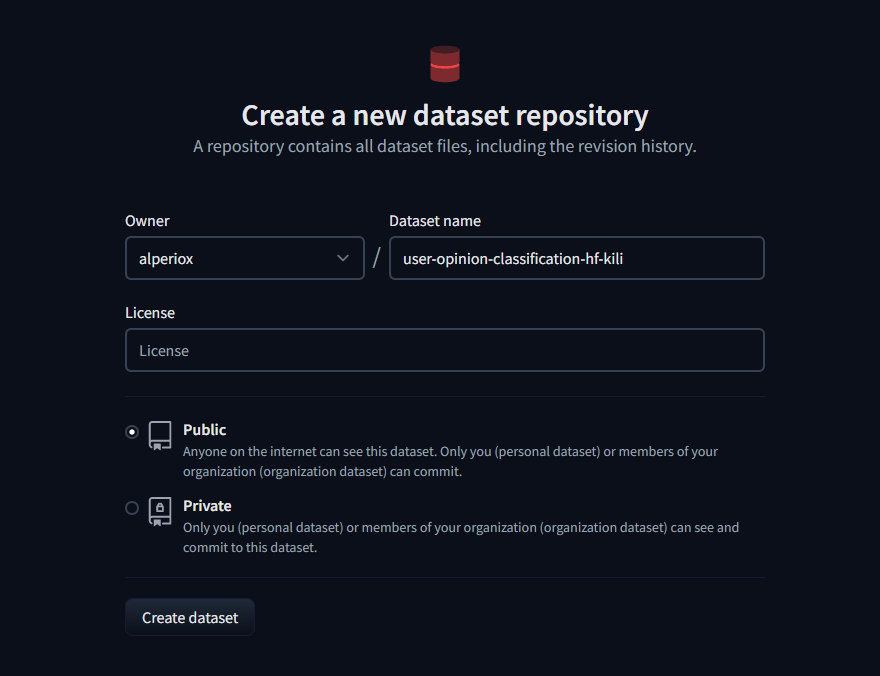

Then enter the repository name, pick a license in case you want and it’s done!

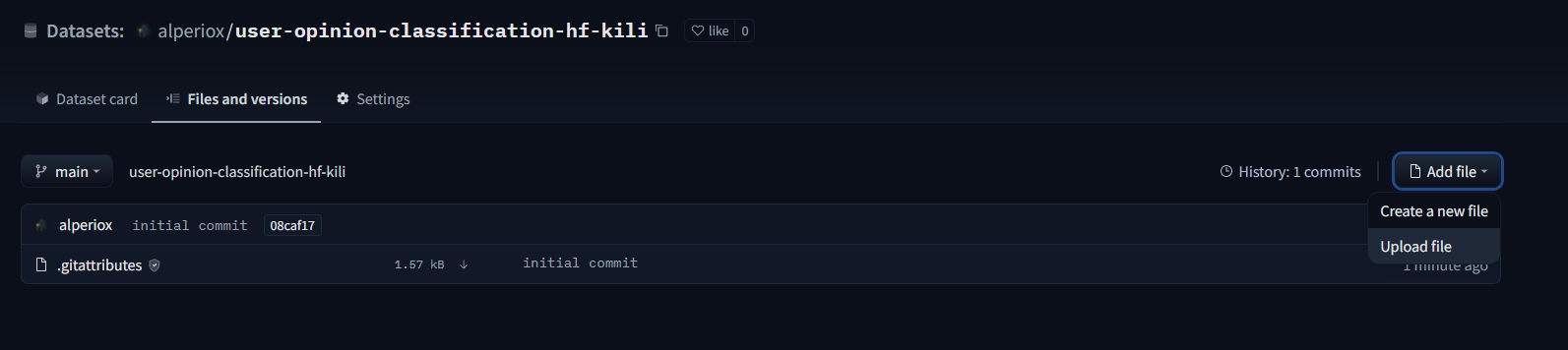

Now we are able to upload the dataset from Add file within the Files and versions tab.

Dataset viewer is routinely available after you upload the information, we are able to easily check the samples!

It is usually possible to upload the dataset to Hugging Face’s dataset hub through the use of datasets package.

Modeling

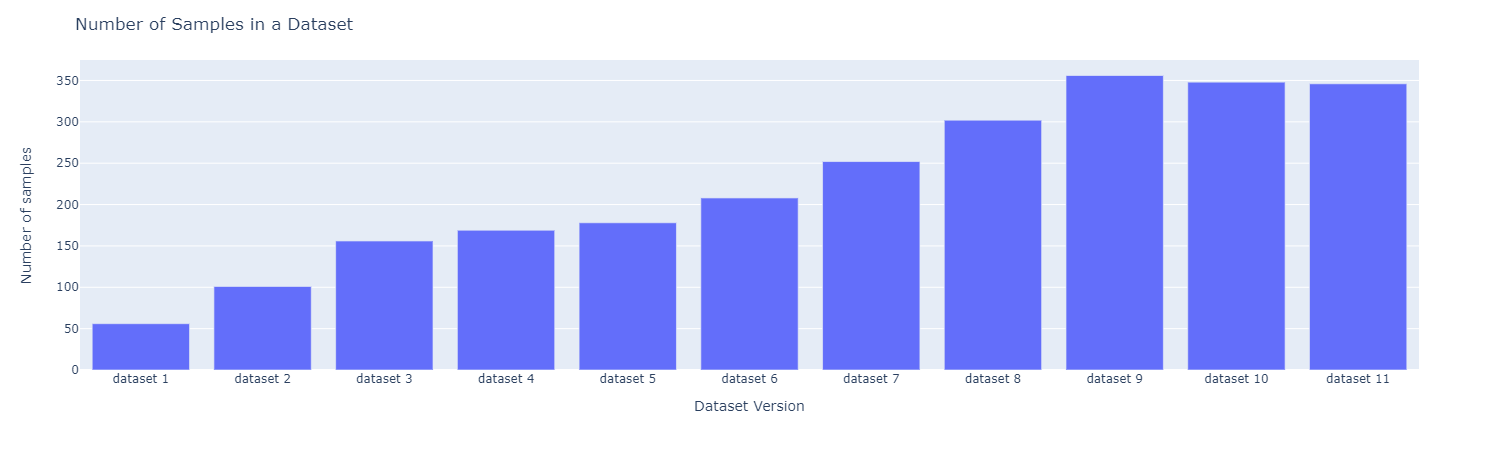

Let’s use active learning. We iteratively label and fine-tune the model. In each iteration, we label 50 samples in the dataset. The number of samples is shown below:

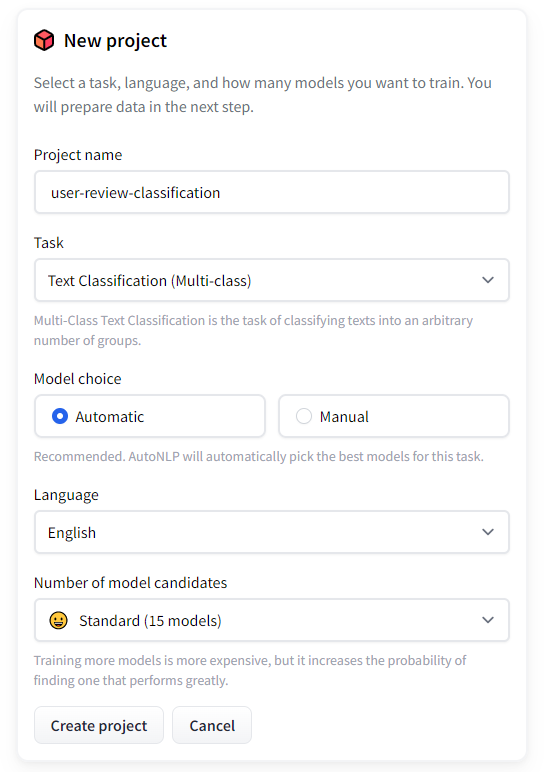
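The iterative process described above can be sketched in a few lines. This is only an outline under simplifying assumptions: `label_fn` and `train_fn` are hypothetical callables standing in for the labeling step in Kili and the fine-tuning step, and samples are taken in order rather than chosen by any particular selection strategy.

```python
def active_learning_loop(pool, label_fn, train_fn, batch_size=50, rounds=3):
    """Alternate between labeling a batch and retraining on all labels so far."""
    labeled = []
    history = []
    for round_idx in range(rounds):
        batch = pool[round_idx * batch_size:(round_idx + 1) * batch_size]
        if not batch:
            break  # nothing left to label
        labeled.extend((text, label_fn(text)) for text in batch)
        model = train_fn(labeled)
        history.append((len(labeled), model))
    return history


# Toy stand-ins: "label" by a keyword, and let the "model" be the label counts.
def toy_label(text):
    return 'Subscription' if 'price' in text else 'User experience'


def toy_train(labeled):
    counts = {}
    for _, label in labeled:
        counts[label] = counts.get(label, 0) + 1
    return counts


reviews = ['price is high' if i % 5 == 0 else 'nice app' for i in range(120)]
history = active_learning_loop(reviews, toy_label, toy_train)
print([size for size, _ in history])
```

Each entry in `history` pairs the size of the labeled set with the model trained on it, which is the kind of record that lets you compare dataset versions afterward.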

Let’s check out AutoTrain first:

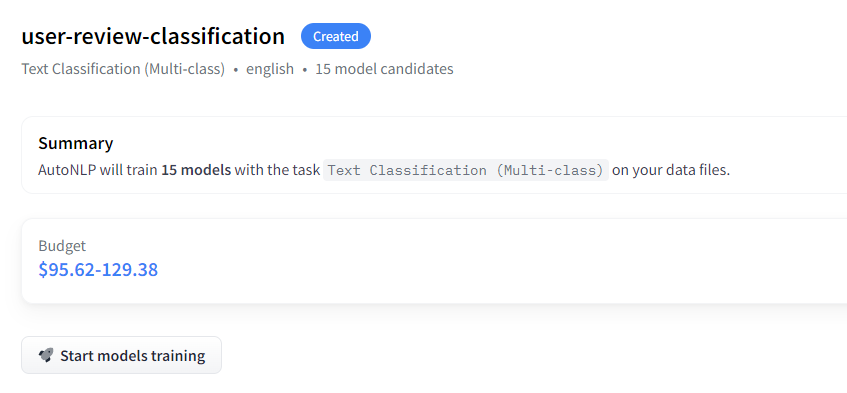

First, open the AutoTrain

- Create a project

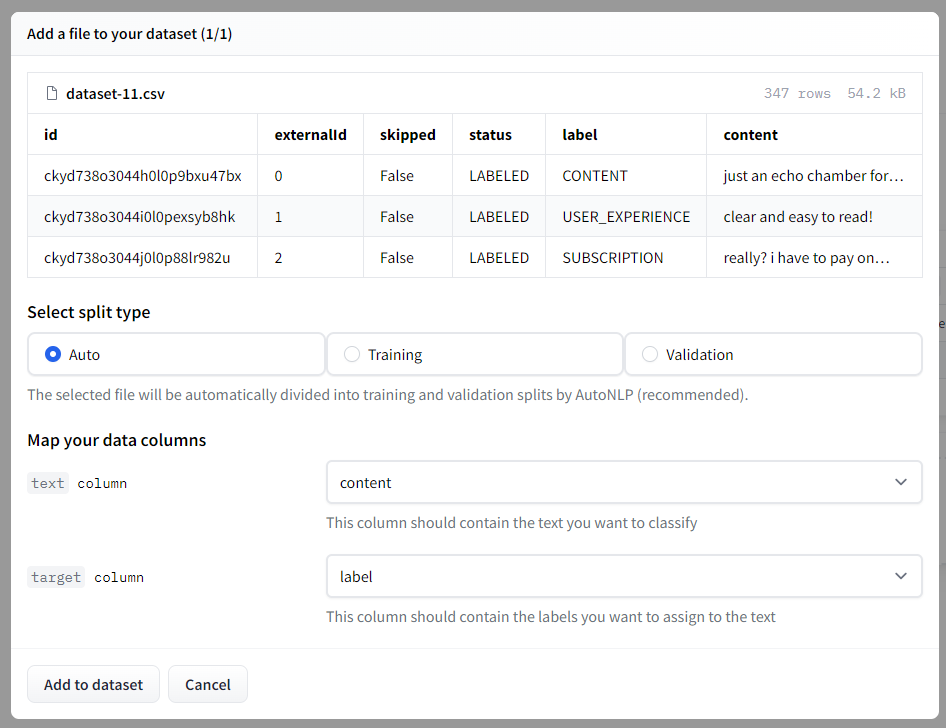

- We are able to select the dataset repository we created before or upload the dataset again. Then we want to decide on the split type, I’ll leave it as Auto.

- Train the models

AutoTrain will try different models and choose the very best models. Then performs hyper-parameter optimization routinely. The dataset can be processed routinely.

The value totally relies on your use case. It may be as little as $10 or it may well be costlier than the present value.

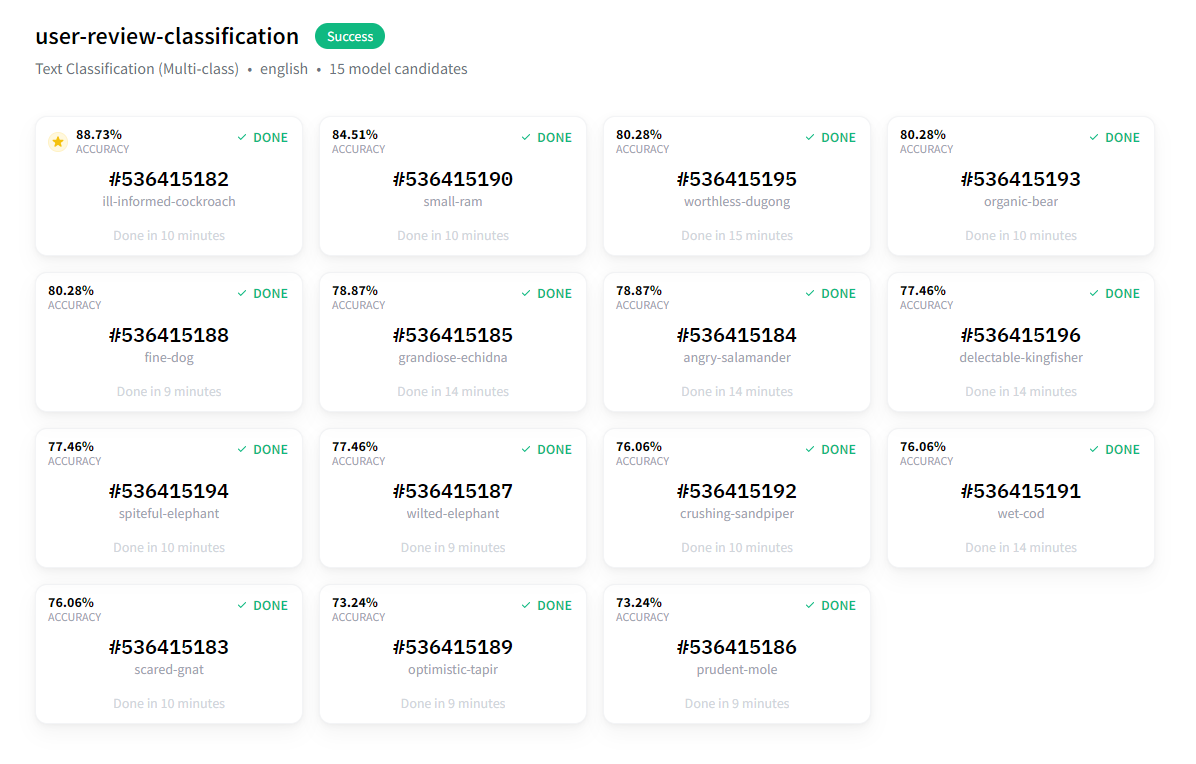

The training is completed after around 20 minutes, the outcomes are pretty good!

The perfect model’s accuracy is sort of %89.

Now we are able to use this model to perform the evaluation, it only took about half-hour to establish the entire thing.

Modeling without AutoTrain

We will use Ray Tune and Hugging Face’s Trainer API to search hyper-parameters and fine-tune a pre-trained deep learning model. We have chosen the roBERTa base sentiment classification model, which is trained on tweets, for fine-tuning. We fine-tuned the model on Google Colaboratory, and it can be found in the notebooks folder of the GitHub repository.

Ray Tune is a popular library for hyper-parameter optimization which comes with many SOTA algorithms out of the box. It is also possible to use Optuna and SigOpt.

We also used the Async Successive Halving Algorithm (ASHA) as the scheduler and HyperOpt as the search algorithm, which is pretty much a starting point. You can use different schedulers and search algorithms.
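To give an intuition for what such a scheduler does, here is a simplified, synchronous successive-halving sketch. It is not ASHA itself (which promotes trials asynchronously) and not Ray Tune’s implementation; `evaluate` is a hypothetical function that scores a configuration after a given training budget.

```python
def successive_halving(configs, evaluate, min_budget=1, reduction_factor=2):
    """Repeatedly keep the best 1/reduction_factor of configs, growing the budget."""
    survivors = list(configs)
    if not survivors:
        raise ValueError('at least one configuration is required')
    budget = min_budget
    while len(survivors) > 1:
        # Score every surviving config at the current budget, best first.
        ranked = sorted(survivors, key=lambda cfg: evaluate(cfg, budget), reverse=True)
        keep = max(1, len(ranked) // reduction_factor)
        survivors = ranked[:keep]
        budget *= reduction_factor
    return survivors[0]


# Toy objective: the score peaks at a learning rate of 3e-5 and improves with budget.
def toy_evaluate(config, budget):
    return -abs(config['learning_rate'] - 3e-5) * 1e5 + 0.01 * budget


candidates = [{'learning_rate': lr} for lr in (1e-5, 2e-5, 3e-5, 5e-5)]
best = successive_halving(candidates, toy_evaluate)
print(best)
```

The point of the technique is that weak configurations are stopped early, so most of the compute goes to the promising ones.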

What will we do?

- Import the necessary libraries (a dozen of them) and prepare a dataset class

- Define needed functions and methods to process the data

- Load the pre-trained model and tokenizer

- Run hyper-parameter search

- Use the best results for evaluation

Let’s start with importing necessary libraries!

(all the code is in notebooks/modeling.ipynb and the Google Colaboratory notebook)

import json

import os

import random

from tqdm import tqdm

import numpy as np

import pandas as pd

import plotly.express as px

import matplotlib.pyplot as plt

from sklearn.metrics import (accuracy_score, f1_score,

precision_score, recall_score)

from sklearn.model_selection import train_test_split

import torch

import torch.nn as nn

from torch.utils.data import DataLoader, Dataset, random_split

import transformers

from datasets import load_metric

from transformers import (AutoModelForSequenceClassification, AutoTokenizer,

Trainer, TrainingArguments)

from ray.tune.schedulers import ASHAScheduler, PopulationBasedTraining

from ray.tune.suggest.hyperopt import HyperOptSearch

We will set a seed for the libraries we use for reproducibility

def seed_all(seed):
    torch.manual_seed(seed)
    random.seed(seed)
    np.random.seed(seed)

SEED=42
seed_all(SEED)

Now let’s define our dataset class!

class TextClassificationDataset(Dataset):
    def __init__(self, dataframe):
        self.labels = dataframe.label.to_list()
        self.inputs = dataframe.content.to_list()
        self.labels_to_idx = {k:v for k,v in labels_dict.items()}

    def __len__(self):
        return len(self.inputs)

    def __getitem__(self, idx):
        if type(idx)==torch.Tensor:
            idx = list(idx)
        input_data = self.inputs[idx]
        target = self.labels[idx]
        target = self.labels_to_idx[target]
        return {'text': input_data, 'label':target}

We can download the model easily by specifying the HuggingFace hub repository. It is also necessary to import the tokenizer for the chosen model. We have to provide a function to initialize the model during hyper-parameter optimization. The model will be defined there.

The metric to optimize is accuracy; we want this value to be as high as possible. Because of that, we need to load the metric, then define a function to get the predictions and calculate the chosen metric.

model_name = 'cardiffnlp/twitter-roberta-base-sentiment'

metric = load_metric("accuracy")

tokenizer = AutoTokenizer.from_pretrained(model_name)

def model_init():
    """
    Hyperparameter optimization is performed by newly initialized models,
    therefore we need to initialize the model again for every single search run.
    This function initializes and returns the pre-trained model selected with `model_name`
    """
    return AutoModelForSequenceClassification.from_pretrained(model_name, num_labels=4, return_dict=True, ignore_mismatched_sizes=True)

def compute_metrics(eval_pred):
    logits, labels = eval_pred
    predictions = np.argmax(logits, axis=-1)
    return metric.compute(predictions=predictions, references=labels)
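In other words, `compute_metrics` picks the highest-scoring class for each sample and compares it with the reference label. The dependency-free sketch below spells that out on made-up logits; it illustrates the computation and is not a replacement for the metric loaded above.

```python
def argmax(values):
    """Index of the largest value in a sequence."""
    return max(range(len(values)), key=lambda i: values[i])


def accuracy_from_logits(logits, labels):
    """Fraction of samples whose highest-scoring class matches the label."""
    predictions = [argmax(row) for row in logits]
    correct = sum(int(p == y) for p, y in zip(predictions, labels))
    return {'accuracy': correct / len(labels)}


# Made-up logits for three samples over four classes.
logits = [
    [2.1, 0.3, -1.0, 0.5],
    [0.1, 0.2, 3.3, -0.7],
    [1.5, 1.9, 0.0, 0.4],
]
labels = [0, 2, 0]

result = accuracy_from_logits(logits, labels)
print(result)
```

Here the third sample is predicted as class 1 while its label is 0, so two of the three predictions are correct.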

After defining the metric calculation and model initialization functions, we can load the data:

file_name = "dataset-11.csv"

dataset_path = os.path.join('data/processed', file_name)

dataset = pd.read_csv(dataset_path)

I also defined two dictionaries for mapping labels to indices and indices to labels.

idx_to_label = dict(enumerate(dataset.label.unique()))

labels_dict = {v:k for k,v in idx_to_label.items()}
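As a quick illustration of these two mappings, the snippet below builds them from a small, made-up label column using only the standard library. `dict.fromkeys` is used here as a stand-in for `unique()`, since both keep the labels in first-seen order.

```python
# Hypothetical label column, in the order the labels first appear.
labels_column = ['Content', 'Interface', 'Content', 'Subscription',
                 'User experience', 'Interface']

# dict.fromkeys keeps first-seen order, like pandas' Series.unique().
unique_labels = list(dict.fromkeys(labels_column))

idx_to_label = dict(enumerate(unique_labels))
labels_dict = {label: idx for idx, label in idx_to_label.items()}

encoded = [labels_dict[label] for label in labels_column]
print(encoded)
```

Because the indices depend on the order in which labels appear in the file, it is worth keeping the mapping next to the trained model so that predictions can be decoded consistently later.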

Now we can define the search algorithm and the scheduler for the hyper-parameter search.

scheduler = ASHAScheduler(metric='objective', mode='max')

search_algorithm = HyperOptSearch(metric='objective', mode='max', random_state_seed=SEED)

n_trials = 40

We also need to tokenize the text data before passing it to the model, which we can easily do using the loaded tokenizer. Ray Tune works in a black-box setting, so I passed the tokenizer as a default argument as a workaround. Otherwise, an error about the tokenizer definition would arise.

def tokenize(sample, tokenizer=tokenizer):
    tokenized_sample = tokenizer(sample['text'], padding=True, truncation=True)
    tokenized_sample['label'] = sample['label']
    return tokenized_sample

Another utility function that returns stratified and tokenized Torch dataset splits:

def prepare_datasets(dataset_df, test_size=.2, val_size=.2):
    # Hold out the test set first, then carve the validation set out of the remainder
    train_set, test_set = train_test_split(dataset_df, test_size=test_size,
                                           stratify=dataset_df.label, random_state=SEED)
    train_set, val_set = train_test_split(train_set, test_size=val_size,
                                          stratify=train_set.label, random_state=SEED)

    # Shuffle each split
    train_set = train_set.sample(frac=1, random_state=SEED)
    val_set = val_set.sample(frac=1, random_state=SEED)
    test_set = test_set.sample(frac=1, random_state=SEED)

    train_dataset = TextClassificationDataset(train_set)
    val_dataset = TextClassificationDataset(val_set)
    test_dataset = TextClassificationDataset(test_set)

    # Note: .map() is a method of 🤗 datasets.Dataset, not of torch.utils.data.Dataset,
    # so the dataset objects must provide it for the calls below to work.
    tokenized_train_set = train_dataset.map(tokenize)
    tokenized_val_set = val_dataset.map(tokenize)
    tokenized_test_set = test_dataset.map(tokenize)

    return tokenized_train_set, tokenized_val_set, tokenized_test_set
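Since the validation set is carved out of what remains after the test split, `val_size=.2` does not mean 20% of the whole dataset. The short calculation below makes the resulting proportions explicit. `split_fractions` is a hypothetical helper written for this illustration, and the actual sample counts may differ slightly because of rounding.

```python
from fractions import Fraction


def split_fractions(test_size, val_size):
    """Share of the full dataset that ends up in train, validation and test."""
    test = Fraction(test_size)
    remaining = 1 - test
    val = remaining * Fraction(val_size)
    train = remaining - val
    return train, val, test


train, val, test = split_fractions('0.2', '0.2')
print(float(train), float(val), float(test))
```

With the default arguments, the split is therefore 64% train, 16% validation, and 20% test.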

Now we can perform the search! Let’s start by processing the data:

tokenized_train_set, tokenized_val_set, tokenized_test_set = prepare_datasets(dataset)

training_args = TrainingArguments(

'trial_results',

evaluation_strategy="steps",

disable_tqdm=True,

skip_memory_metrics=True,

)

trainer = Trainer(

args=training_args,

tokenizer=tokenizer,

train_dataset=tokenized_train_set,

eval_dataset=tokenized_val_set,

model_init=model_init,

compute_metrics=compute_metrics

)

best_run = trainer.hyperparameter_search(

direction="maximize",

n_trials=n_trials,

backend="ray",

search_alg=search_algorithm,

scheduler=scheduler

)

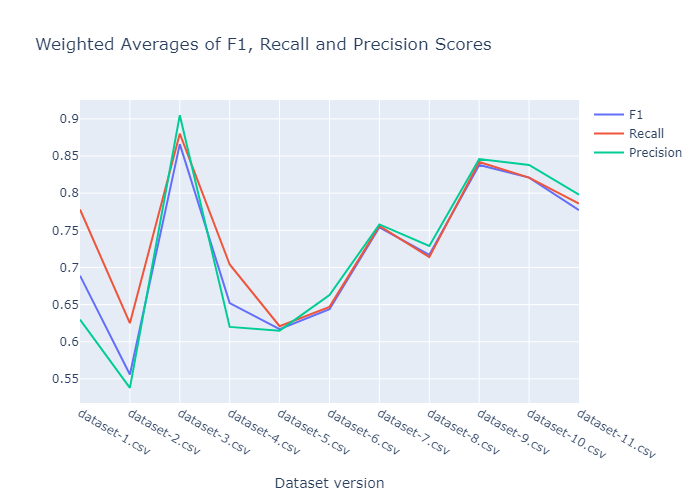

We performed the search with 20 and 40 trials respectively, the outcomes are shown below. The weighted average of F1, Recall, and Precision scores for 20 runs.

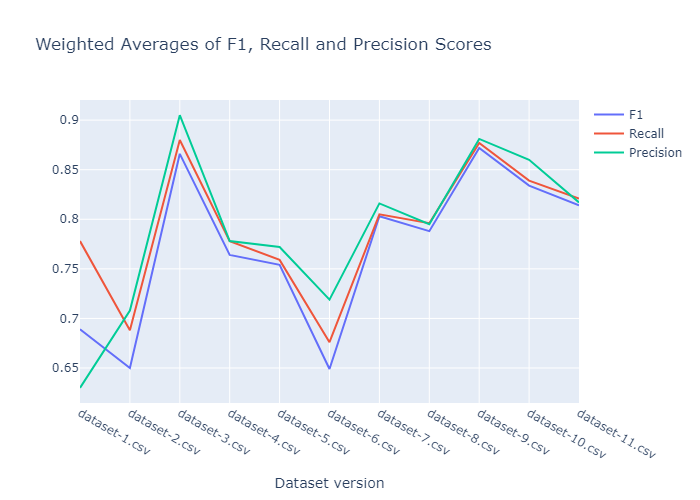

The weighted average of F1, Recall, and Precision scores for 40 runs.

The performance spiked up on the third dataset version. In some unspecified time in the future in data labeling, I’ve introduced an excessive amount of bias to the dataset mistakingly. As we are able to see its performance becomes more reasonable because the sample variance increased afterward. The ultimate model is saved at Google Drive and will be downloaded from here, it is usually possible to download via the download_models.py script.
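For readers unfamiliar with weighted averages of per-class scores, the sketch below computes a support-weighted F1 by hand on made-up labels. It is meant to show what the number represents, not how the scores in the plots were produced; the imports earlier suggest scikit-learn’s metric functions were available for that.

```python
def weighted_f1(y_true, y_pred):
    """Support-weighted average of the per-class F1 scores."""
    total = len(y_true)
    score = 0.0
    for cls in sorted(set(y_true)):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != cls and p == cls)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p != cls)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        support = tp + fn  # number of true samples of this class
        score += f1 * support / total
    return score


# Made-up labels: class 0 has four samples, class 1 has two.
y_true = [0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 1, 1, 0]
print(round(weighted_f1(y_true, y_pred), 4))
```

Weighting by support means that frequent categories dominate the score, which matters for an imbalanced review dataset like this one.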

Final Analysis

We can now use the fine-tuned model to conduct the final analysis. All we have to do is load the data, process it, and get the prediction results from the model. Then we can use a pre-trained model for sentiment analysis and hopefully get insights.

We used Google Colab for the inference (here) and then exported the results to result.csv. It can be found in results in the GitHub repository. We then analyzed the results in another Google Colaboratory notebook for an interactive experience, so you can also use it easily and interactively.

Let’s check the outcomes now!

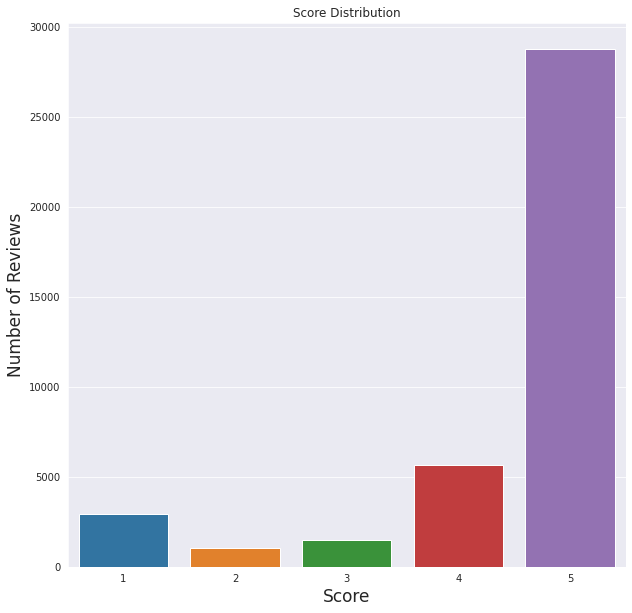

We are able to see that the given scores are highly positive. On the whole, the appliance is liked by the users.

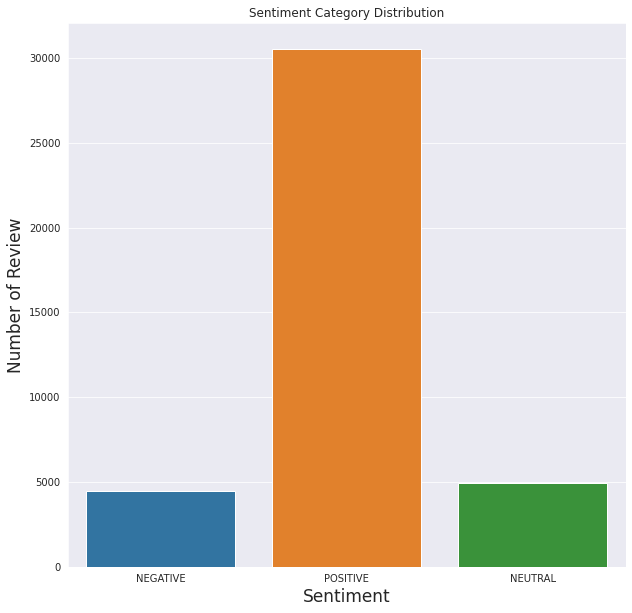

This also matches with the sentiment evaluation, a lot of the reviews are positive and the smallest amount of reviews are classified as negative.

As we are able to see from above, the model’s performance is type of comprehensible. Positive scores are dominantly higher than the others, similar to the sentimental evaluation graph shows.

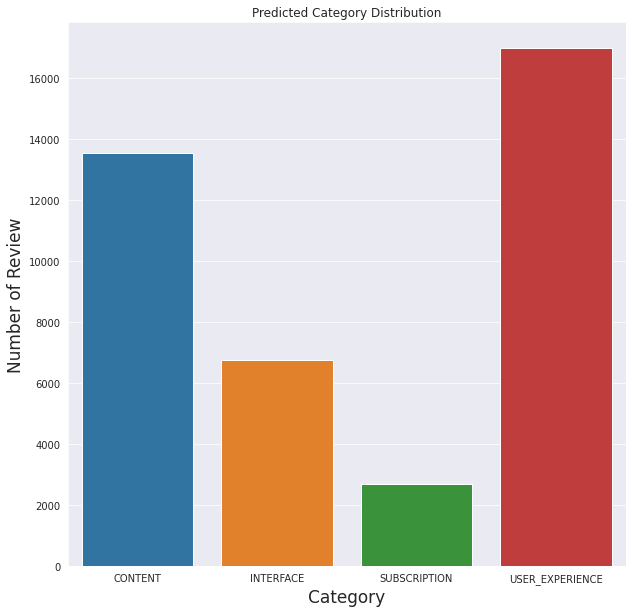

Because it involves the categories defined before, evidently the model predicts a lot of the reviews are about users’ experiences (excluding experiences related to other categories):

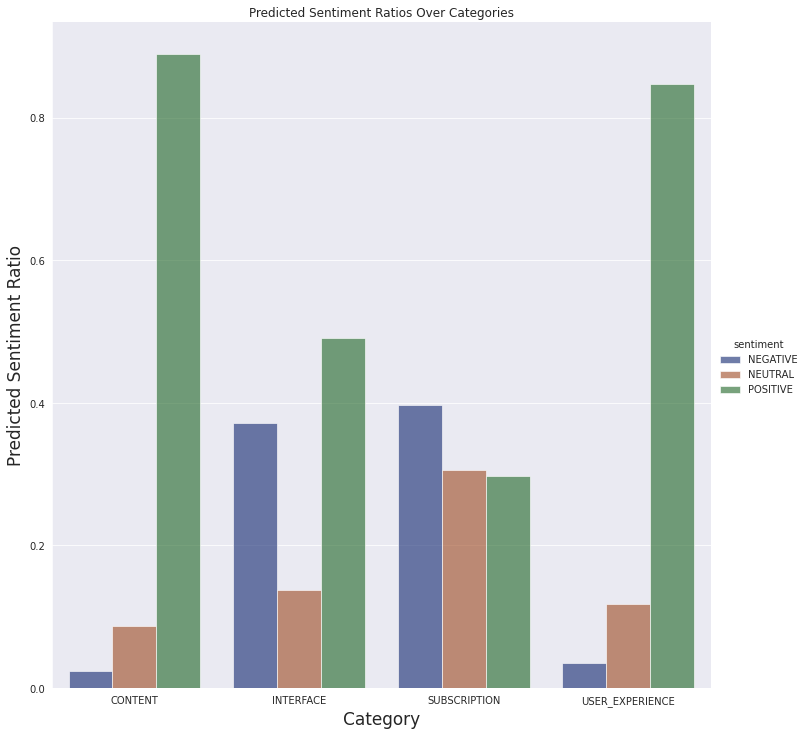

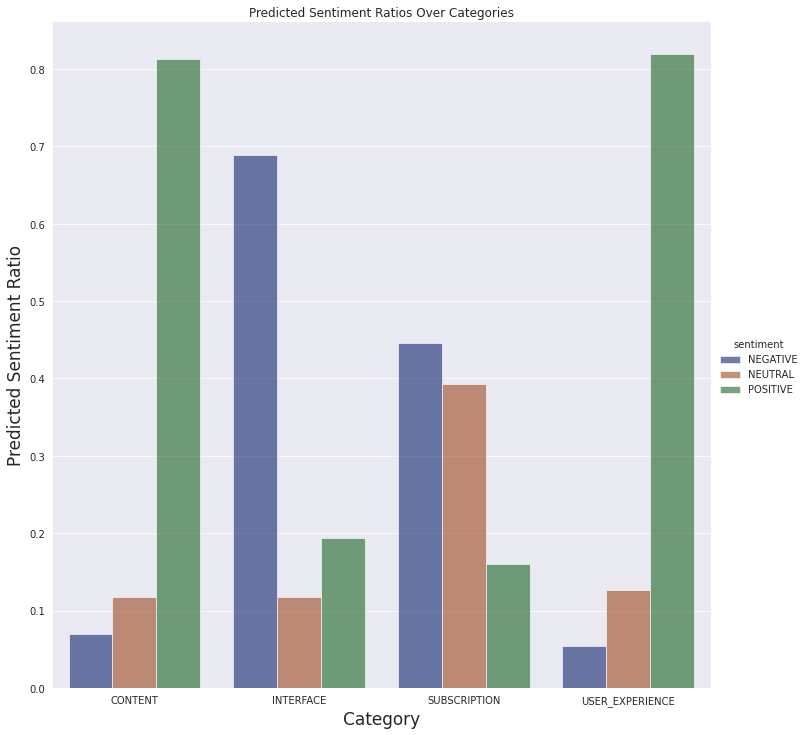

We can also see the sentiment predictions over the defined categories below:

We won’t do a detailed analysis of the reviews; a basic understanding of potential problems will suffice. Therefore, it is enough to draw simple conclusions from the final data:

- It is understandable that most of the reviews about the subscription are negative. Paid content is generally not welcomed in mobile applications.

- There are many negative reviews about the interface. This may be a clue for further analysis. Perhaps there is a misconception about features, or a feature doesn’t work as users expected.

- People have generally liked the articles, and most of them had good experiences.

Important note about the plot: we haven’t filtered the reviews by application version. When we look at the results of the latest current version (4.5), it seems that the interface of the application confuses the users or has annoying bugs.
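The per-category sentiment breakdown discussed above can be tabulated along these lines. The predictions below are invented for illustration and do not come from the results file; in practice each pair would be read from a row of the exported predictions.

```python
from collections import Counter

# Made-up (category, sentiment) predictions standing in for rows of the results file.
predictions = [
    ('Subscription', 'negative'),
    ('Subscription', 'negative'),
    ('Subscription', 'positive'),
    ('Interface', 'negative'),
    ('Content', 'positive'),
    ('Content', 'positive'),
    ('User experience', 'positive'),
    ('User experience', 'neutral'),
]

pair_counts = Counter(predictions)
category_totals = Counter(category for category, _ in predictions)

# Share of each sentiment within its category.
shares = {
    (category, sentiment): count / category_totals[category]
    for (category, sentiment), count in pair_counts.items()
}

for (category, sentiment), share in sorted(shares.items()):
    print(f'{category:16s} {sentiment:9s} {share:.2f}')
```

Normalizing within each category keeps a large category such as User Experience from hiding the sentiment pattern of a smaller one.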

Conclusion

Now we can use the pre-trained model to try to understand the potential shortcomings of the mobile application. Then it would be easier to analyze a specific feature.

We used HuggingFace’s powerful APIs and AutoTrain along with Kili’s easy-to-use interface in this example. The modeling with AutoTrain took just 30 minutes; it chose the models and trained them for us. AutoTrain is definitely much more efficient, since I spent far more time developing the model by myself.

All the code, datasets, and scripts can be found on GitHub. You can also try the AutoTrain model.

While we can consider this a valid starting point, we should collect more data and try to build better pipelines. Better pipelines would result in more efficient improvements.