Open and reproducible datasets are essential for advancing good machine learning. At the same time, datasets have grown tremendously in size as rocket fuel for large language models. In 2020, Hugging Face launched 🤗 Datasets, a library dedicated to:

- Providing access to standardized datasets with a single line of code.

- Providing tools to rapidly and efficiently process large-scale datasets.

Thanks to the community, we added hundreds of NLP datasets in many languages and dialects during the Datasets Sprint! 🤗 ❤️

But text datasets are just the beginning. Data is represented in richer formats like 🎵 audio, 📸 images, and even a combination of audio and text or image and text. Models trained on these datasets enable awesome applications like describing what's in an image or answering questions about an image.

The 🤗 Datasets team has been building tools and features to make working with these dataset types as simple as possible for the best developer experience. We added new documentation along the way to help you learn more about loading and processing audio and image datasets.

Quickstart

The Quickstart is one of the first places new users visit for a TL;DR of a library's features. That's why we updated the Quickstart to include how you can use 🤗 Datasets to work with audio and image datasets. Choose the dataset modality you want to work with and see an end-to-end example of how to load and process the dataset to get it ready for training with either PyTorch or TensorFlow.

Also new in the Quickstart is the to_tf_dataset function, which takes care of converting a dataset into a tf.data.Dataset like a mama bear taking care of her cubs. This means you don't have to write any code to shuffle and load batches from your dataset to get it to play nicely with TensorFlow. Once you've converted your dataset into a tf.data.Dataset, you can train your model with the usual TensorFlow or Keras methods.

Check out the Quickstart today to learn how to work with different dataset modalities and try out the new to_tf_dataset function!

Dedicated guides

Each dataset modality has specific nuances in how it is loaded and processed. For example, when you load an audio dataset, the audio signal is automatically decoded and resampled on-the-fly by the Audio feature. This is quite different from loading a text dataset!

To make all the modality-specific documentation more discoverable, there are new dedicated sections with guides focused on showing you how to load and process each modality. If you're looking for specific information about working with a dataset modality, take a look at these dedicated sections first. Meanwhile, functions that are not modality-specific and can be used broadly are documented in the General Usage section. Reorganizing the documentation this way will allow us to better scale to other dataset types we plan to support in the future.

Check out the dedicated guides to learn more about loading and processing datasets for different modalities.

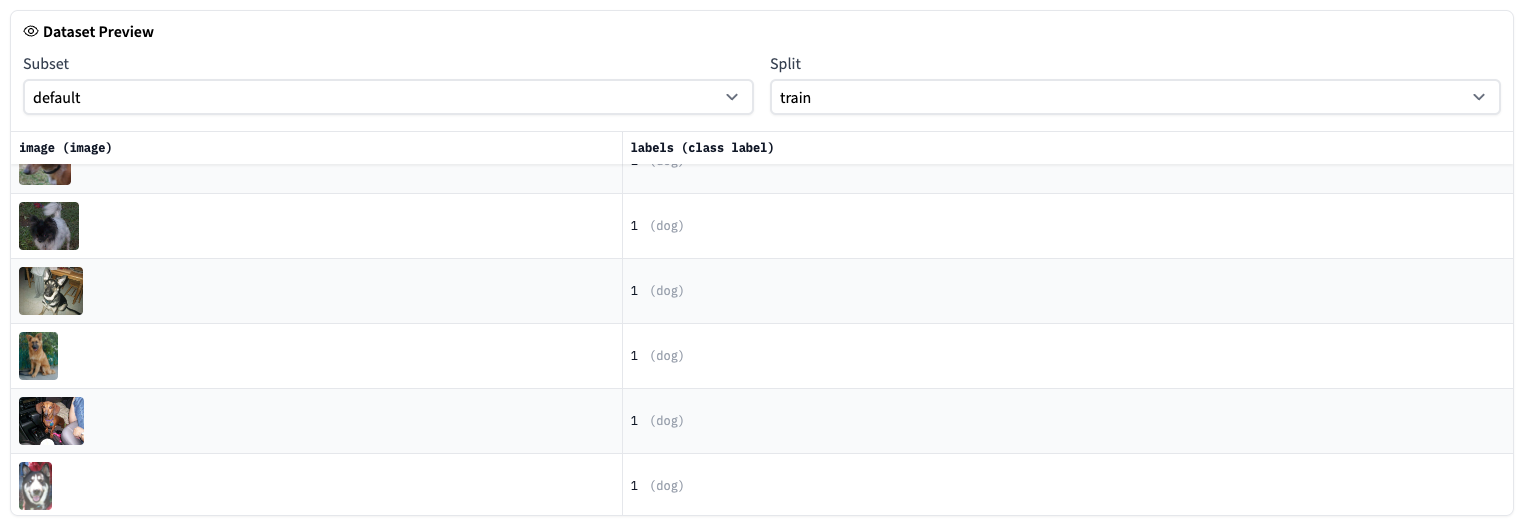

ImageFolder

Typically, 🤗 Datasets users write a dataset loading script to download and generate a dataset with the appropriate train and test splits. With the ImageFolder dataset builder, you don't need to write any code to download and generate an image dataset. Loading an image dataset for image classification is as simple as making sure your dataset is organized in a folder like:

```
folder/train/dog/golden_retriever.png
folder/train/dog/german_shepherd.png
folder/train/dog/chihuahua.png
folder/train/cat/maine_coon.png
folder/train/cat/bengal.png
folder/train/cat/birman.png
```

Image labels are generated in a label column based on the directory name. ImageFolder lets you get started instantly with an image dataset, eliminating the time and effort required to write a dataset loading script.

But wait, it gets even better! If you have a file containing some metadata about your image dataset (for example, a metadata.jsonl file in the same folder as the images), ImageFolder can be used for other image tasks like image captioning and object detection. For example, object detection datasets commonly have bounding boxes, coordinates in an image that identify where an object is. ImageFolder can use this file to link the bounding box and category metadata for each image to the corresponding image in the folder:

```jsonl
{"file_name": "0001.png", "objects": {"bbox": [[302.0, 109.0, 73.0, 52.0]], "categories": [0]}}
{"file_name": "0002.png", "objects": {"bbox": [[810.0, 100.0, 57.0, 28.0]], "categories": [1]}}
{"file_name": "0003.png", "objects": {"bbox": [[160.0, 31.0, 248.0, 616.0], [741.0, 68.0, 202.0, 401.0]], "categories": [2, 2]}}
```

```python
>>> from datasets import load_dataset
>>> dataset = load_dataset("imagefolder", data_dir="/path/to/folder", split="train")
>>> dataset[0]["objects"]
{'bbox': [[302.0, 109.0, 73.0, 52.0]], 'categories': [0]}
```

You can use ImageFolder to load an image dataset for nearly any type of image task as long as you have a metadata file with the required information. Check out the ImageFolder guide to learn more.

What’s next?

Just as the first iteration of the 🤗 Datasets library standardized text datasets and made them super easy to download and process, we're very excited to bring the same level of user-friendliness to audio and image datasets. In doing so, we hope it'll be easier for users to train, build, and evaluate models and applications across all modalities.

In the coming months, we'll continue to add new features and tools to support working with audio and image datasets. Word on the 🤗 Hugging Face street is that there'll be something called AudioFolder coming soon! 🤫 While you wait, feel free to take a look at the audio processing guide and then get hands-on with an audio dataset like GigaSpeech.

Join the forum for any questions and feedback about working with audio and image datasets. If you discover any bugs, please open a GitHub Issue so we can take care of it.

Feeling a little more adventurous? Contribute to the growing community-driven collection of audio and image datasets on the Hub! Create a dataset repository on the Hub and upload your dataset. If you need a hand, open a discussion in your repository's Community tab and ping one of the 🤗 Datasets team members to help you cross the finish line!