This article has been translated into Simplified Chinese (简体中文) and Vietnamese (đọc tiếng việt).

Language models have shown impressive capabilities in the past few years by generating diverse and compelling text from human input prompts. However, what makes a “good” text is inherently hard to define because it is subjective and context dependent. There are many applications, such as writing stories where you want creativity, pieces of informative text which should be truthful, or code snippets that we want to be executable.

Writing a loss function to capture these attributes seems intractable, and most language models are still trained with a simple next-token prediction loss (e.g. cross entropy). To compensate for the shortcomings of the loss itself, people define metrics that are designed to better capture human preferences, such as BLEU or ROUGE. While better suited than the loss function itself at measuring performance, these metrics simply compare generated text to references with simple rules and are thus also limited. Wouldn’t it be great if we used human feedback for generated text as a measure of performance, or went even one step further and used that feedback as a loss to optimize the model? That’s the idea of Reinforcement Learning from Human Feedback (RLHF): use methods from reinforcement learning to directly optimize a language model with human feedback. RLHF has enabled language models trained on a general corpus of text data to begin aligning with complex human values.
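To make the limitation of rule-based metrics concrete, here is a minimal sketch of a unigram-overlap recall score in the spirit of ROUGE-1. It is a deliberate simplification (whitespace tokenization, no stemming, recall only), not the official ROUGE implementation, and the function name `unigram_recall` is our own.

```python
from collections import Counter


def unigram_recall(candidate: str, reference: str) -> float:
    """Share of reference unigrams also found in the candidate (counts clipped), ROUGE-1-recall style."""
    cand_counts = Counter(candidate.lower().split())
    ref_counts = Counter(reference.lower().split())
    # Each reference token can be matched at most as many times as it appears in the candidate.
    overlap = sum(min(count, cand_counts[token]) for token, count in ref_counts.items())
    return overlap / max(sum(ref_counts.values()), 1)


reference = "the cat sat on the mat"
print(unigram_recall("the cat sat on the mat", reference))      # 1.0 (exact copy)
print(unigram_recall("a feline rested upon a rug", reference))  # 0.0 (faithful paraphrase)
print(unigram_recall("the mat sat on the cat", reference))      # 1.0 (meaning changed)
```

A faithful paraphrase scores zero while a sentence with the roles swapped scores perfectly, which is the kind of gap that human judgments can catch and simple overlap rules cannot.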

RLHF’s most recent success was its use in ChatGPT. Given ChatGPT’s impressive abilities, we asked it to explain RLHF for us:

It does surprisingly well, but doesn’t quite cover everything. We’ll fill in those gaps!

RLHF: Let’s take it step-by-step

Reinforcement learning from Human Feedback (also referred to as RL from human preferences) is a challenging concept because it involves a multiple-model training process and different stages of deployment. In this blog post, we’ll break down the training process into three core steps:

- Pretraining a language model (LM),

- gathering data and training a reward model, and

- fine-tuning the LM with reinforcement learning.

To start out, we’ll take a look at how language models are pretrained.

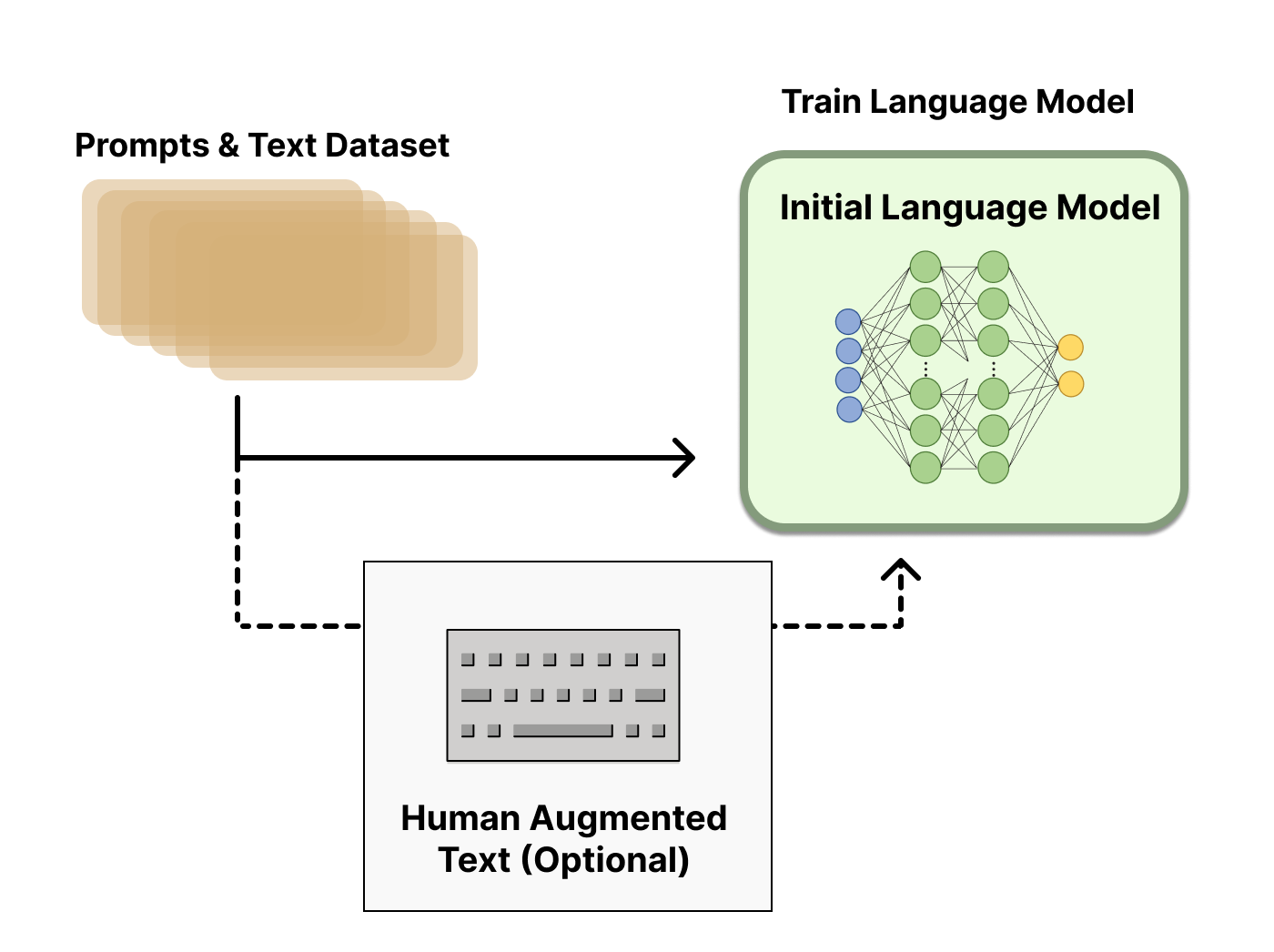

Pretraining language models

As a starting point, RLHF uses a language model that has already been pretrained with the classical pretraining objectives (see this blog post for more details). OpenAI used a smaller version of GPT-3 for its first popular RLHF model, InstructGPT. In their shared papers, Anthropic used transformer models from 10 million to 52 billion parameters trained for this task. DeepMind has documented using up to their 280 billion parameter model Gopher. It is likely that all these companies use much larger models in their RLHF-powered products.

This initial model can also be fine-tuned on additional text or conditions, but does not necessarily need to be. For example, OpenAI fine-tuned on human-generated text that was “preferable”, and Anthropic generated their initial LM for RLHF by distilling an original LM on context clues for their “helpful, honest, and harmless” criteria. These are both sources of what we refer to as expensive, augmented data, but it is not a required technique to understand RLHF. Core to starting the RLHF process is having a model that responds well to diverse instructions.

In general, there is not a clear answer on “which model” is the best starting point for RLHF. This will be a common theme in this blog: the design space of options in RLHF training is not thoroughly explored.

Next, with a language model, one needs to generate data to train a reward model, which is how human preferences are integrated into the system.

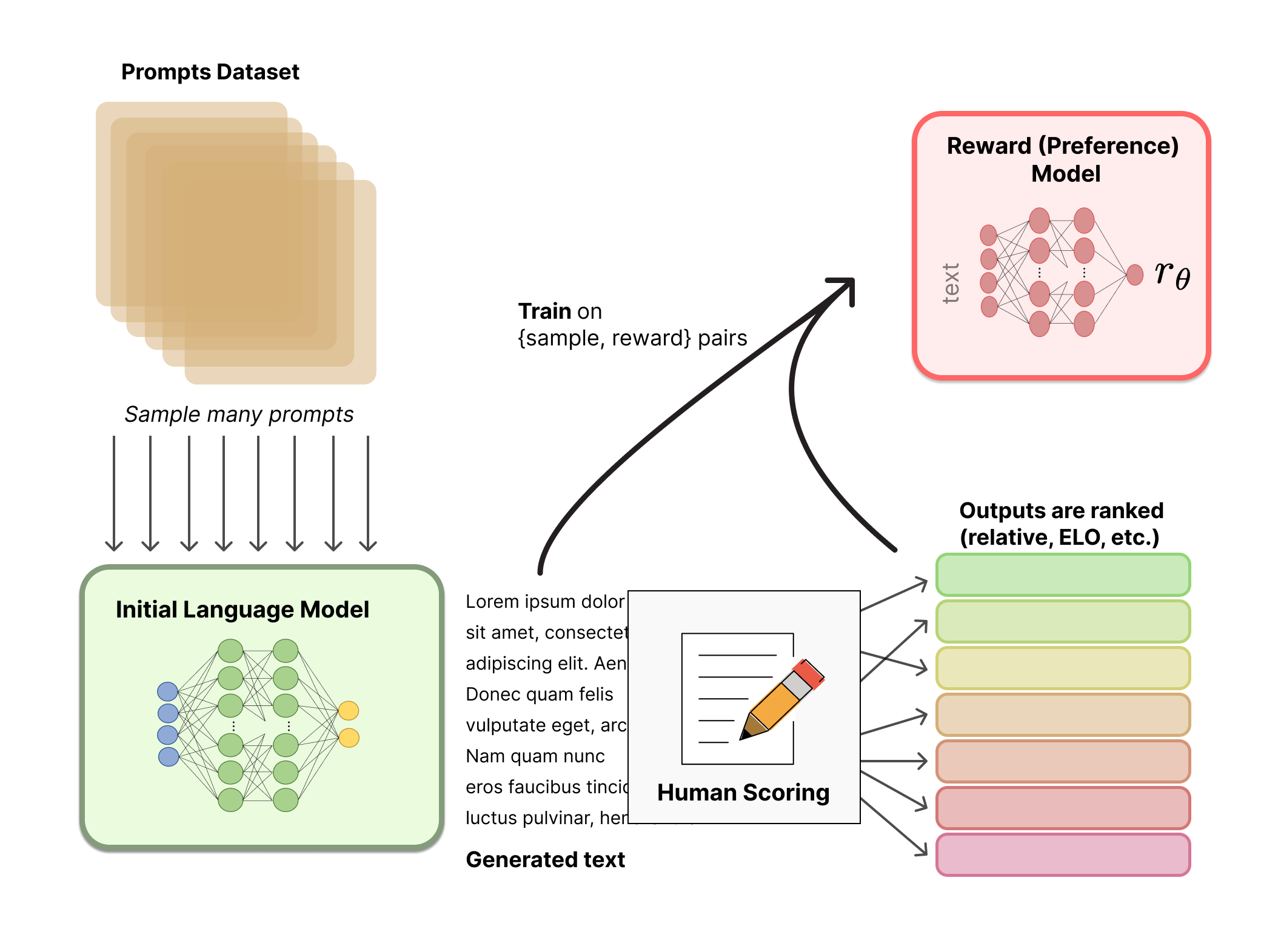

Reward model training

Generating a reward model (RM, also referred to as a preference model) calibrated with human preferences is where the relatively new research in RLHF begins. The underlying goal is to get a model or system that takes in a sequence of text and returns a scalar reward which should numerically represent the human preference. The system can be an end-to-end LM, or a modular system outputting a reward (e.g. a model ranks outputs, and the ranking is converted to reward). The output being a scalar reward is crucial for existing RL algorithms to be integrated seamlessly later in the RLHF process.

These LMs for reward modeling can be either another fine-tuned LM or an LM trained from scratch on the preference data. For example, Anthropic has used a specialized method of fine-tuning to initialize these models after pretraining (preference model pretraining, PMP) because they found it to be more sample efficient than fine-tuning, but no one base model is considered the clear best choice for reward models.

The training dataset of prompt-generation pairs for the RM is generated by sampling a set of prompts from a predefined dataset (Anthropic’s data generated primarily with a chat tool on Amazon Mechanical Turk is available on the Hub, and OpenAI used prompts submitted by users to the GPT API). The prompts are passed through the initial language model to generate new text.

Human annotators are used to rank the generated text outputs from the LM. One may initially think that humans should apply a scalar score directly to each piece of text in order to generate a reward model, but this is difficult to do in practice. The differing values of humans cause these scores to be uncalibrated and noisy. Instead, rankings are used to compare the outputs of multiple models and create a much better regularized dataset.
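The text above does not spell out how rankings become a training signal. One common choice (used, for example, in InstructGPT) is to split each ranking into pairs and train the RM with a pairwise Bradley-Terry style loss, $-\log \sigma(r_\text{chosen} - r_\text{rejected})$. The sketch below assumes the RM’s scalar scores are already computed; the function names and scores are hypothetical, and a real RM would backpropagate this loss through the network.

```python
import math
from itertools import combinations


def pairwise_ranking_loss(r_chosen: float, r_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)), written as a numerically stable softplus."""
    diff = r_chosen - r_rejected
    if diff > 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))


def ranking_to_pairs(ranked_outputs):
    """Turn a best-first ranking into every (preferred, dispreferred) pair."""
    return list(combinations(ranked_outputs, 2))


def ranking_loss(ranked_outputs, scores):
    """Mean pairwise loss for one prompt; `scores` maps each output to its RM scalar."""
    pairs = ranking_to_pairs(ranked_outputs)
    return sum(pairwise_ranking_loss(scores[w], scores[l]) for w, l in pairs) / len(pairs)


# Hypothetical RM scores for three completions an annotator ranked A > B > C.
scores = {"A": 1.5, "B": 0.3, "C": -0.8}
print(ranking_to_pairs(["A", "B", "C"]))  # [('A', 'B'), ('A', 'C'), ('B', 'C')]
print(ranking_loss(["A", "B", "C"], scores))
```

The loss is small when the RM already scores outputs in the annotator’s order and large when it disagrees, so only relative judgments are needed, never calibrated absolute scores.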

There are multiple methods for ranking the text. One method that has been successful is to have users compare generated text from two language models conditioned on the same prompt. By comparing model outputs in head-to-head matchups, an Elo system can be used to generate a ranking of the models and outputs relative to each other. These different methods of ranking are normalized into a scalar reward signal for training.
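As an illustration of the head-to-head approach, here is a minimal sketch using the textbook Elo formulas. The K-factor of 32, the 400-point scale, the starting rating of 1000, and the model names are conventional or hypothetical choices, not values reported by any of the labs above; normalizing ratings into a reward is a separate step not shown.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_ratings(ratings, matchups, k=32.0, initial=1000.0):
    """Apply Elo updates for a sequence of (winner, loser) head-to-head comparisons."""
    for winner, loser in matchups:
        r_w = ratings.setdefault(winner, initial)
        r_l = ratings.setdefault(loser, initial)
        e_w = expected_score(r_w, r_l)
        # Winner gains what it "under-expected"; loser drops by the same amount (zero-sum).
        ratings[winner] = r_w + k * (1.0 - e_w)
        ratings[loser] = r_l - k * (1.0 - e_w)
    return ratings


# Hypothetical annotator judgments: each tuple is (preferred model, other model).
ratings = update_ratings({}, [("model_a", "model_b"), ("model_a", "model_c"), ("model_b", "model_c")])
print(sorted(ratings, key=ratings.get, reverse=True))  # ['model_a', 'model_b', 'model_c']
```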

An interesting artifact of this process is that the successful RLHF systems to date have used reward language models with varying sizes relative to the text generation (e.g. OpenAI 175B LM, 6B reward model, Anthropic used LM and reward models from 10B to 52B, DeepMind uses 70B Chinchilla models for both LM and reward). An intuition would be that these preference models need to have similar capacity to understand the text given to them as a model would need in order to generate said text.

At this point in the RLHF system, we have an initial language model that can be used to generate text and a preference model that takes in any text and assigns it a score of how well humans perceive it. Next, we use reinforcement learning (RL) to optimize the original language model with respect to the reward model.

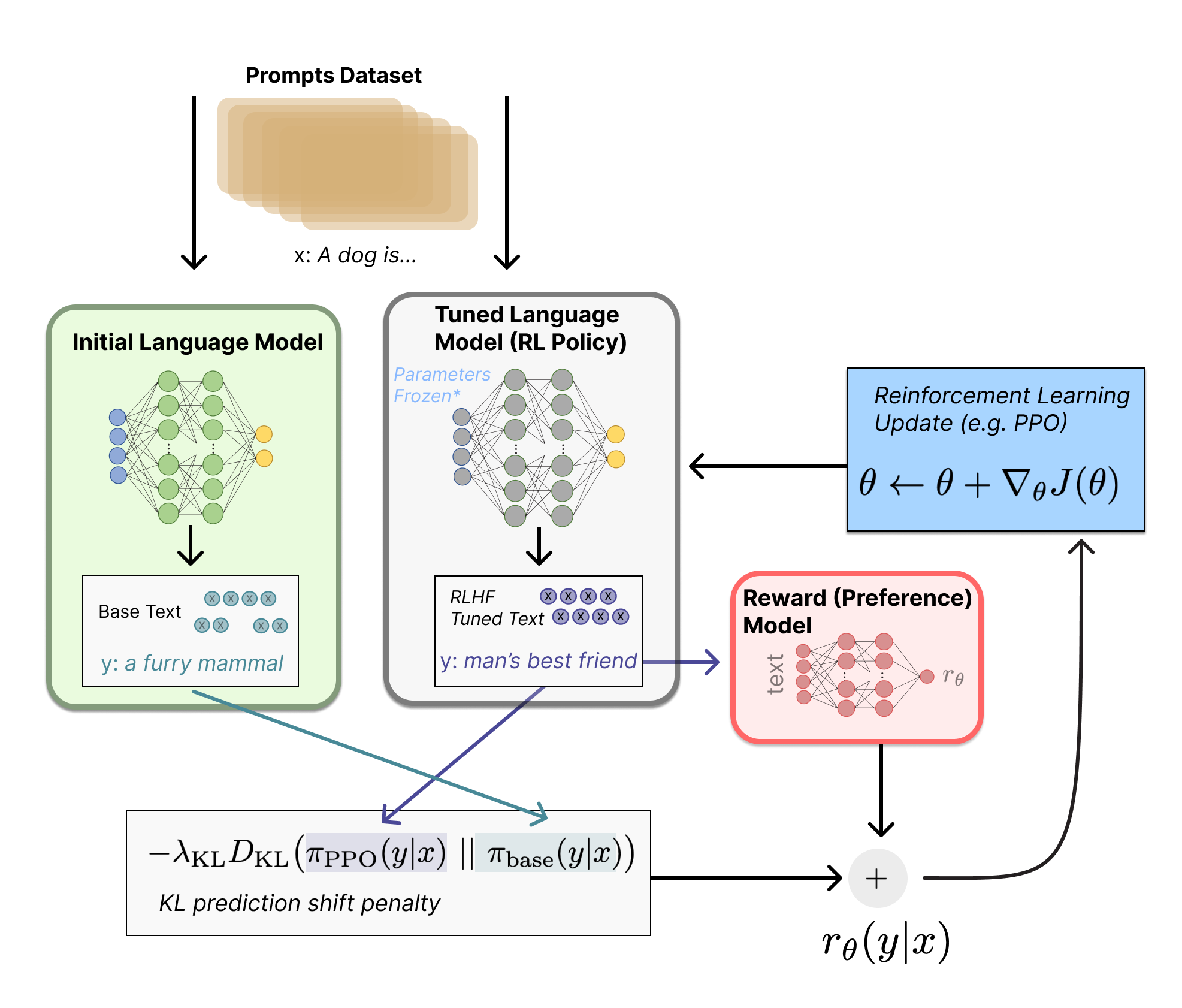

Fine-tuning with RL

Training a language model with reinforcement learning was, for a long time, something that people would have thought of as impossible for both engineering and algorithmic reasons.

What multiple organizations seem to have gotten to work is fine-tuning some or all of the parameters of a copy of the initial LM with a policy-gradient RL algorithm, Proximal Policy Optimization (PPO).

Some parameters of the LM are frozen because, depending on the scale of the model and the infrastructure being used, fine-tuning an entire 10B or 100B+ parameter model is prohibitively expensive (for more, see Low-Rank Adaptation (LoRA) for LMs or the Sparrow LM from DeepMind). Exactly how many parameters to freeze, or not, is considered an open research problem.
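To get a feel for why freezing most weights helps, here is a back-of-the-envelope parameter count for a single weight matrix under LoRA, where the pretrained matrix stays frozen and only a low-rank update `B @ A` is trained. The layer size `d = 4096` and rank `r = 8` are hypothetical values chosen for illustration, not settings from any of the papers above.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when updating a dense d_out x d_in weight matrix directly."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a LoRA update B @ A, with B: d_out x rank and A: rank x d_in."""
    return rank * (d_out + d_in)


# Hypothetical layer size and rank, chosen only for illustration.
d, r = 4096, 8
full = full_finetune_params(d, d)
lora = lora_params(d, d, r)
print(full, lora, f"{100 * lora / full:.2f}%")  # 16777216 65536 0.39%
```

Because the count scales with `rank * (d_out + d_in)` instead of `d_out * d_in`, the savings grow with layer width, which is why low-rank or partially frozen updates are attractive at the 10B+ scale.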

PPO has been around for a relatively long time; there are tons of guides on how it works. The relative maturity of this method made it a favorable choice for scaling up to the new application of distributed training for RLHF. It turns out that many of the core RL advancements to do RLHF have been figuring out how to update such a large model with a familiar algorithm (more on that later).

Let’s first formulate this fine-tuning task as an RL problem. First, the policy is a language model that takes in a prompt and returns a sequence of text (or just probability distributions over text). The action space of this policy is all the tokens corresponding to the vocabulary of the language model (often on the order of 50k tokens), and the observation space is the distribution of possible input token sequences, which is also quite large compared with previous uses of RL (the dimension is approximately the size of the vocabulary raised to the power of the length of the input token sequence). The reward function is a combination of the preference model and a constraint on policy shift.
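A quick calculation shows how large the observation space gets. Working in log space avoids materializing the enormous integer; the 50,000-token vocabulary follows the estimate above, while the 1,024-token sequence length is a hypothetical example.

```python
import math


def log10_num_sequences(vocab_size: int, seq_len: int) -> float:
    """log10 of vocab_size ** seq_len, the number of distinct token sequences of length seq_len."""
    return seq_len * math.log10(vocab_size)


vocab_size = 50_000  # "on the order of 50k tokens": the per-step action space
seq_len = 1_024      # hypothetical input length
print(f"actions per step: {vocab_size}")
print(f"possible input sequences: about 10^{log10_num_sequences(vocab_size, seq_len):.1f}")  # about 10^4811.7
```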

The reward function is where the system combines all of the models we have discussed into one RLHF process. Given a prompt, x, from the dataset, the text y is generated by the current iteration of the fine-tuned policy. Concatenated with the original prompt, that text is passed to the preference model, which returns a scalar notion of “preferability”, $r_\theta$. In addition, per-token probability distributions from the RL policy are compared to the ones from the initial model to compute a penalty on the difference between them. In multiple papers from OpenAI, Anthropic, and DeepMind, this penalty has been designed as a scaled version of the Kullback–Leibler (KL) divergence between these sequences of distributions over tokens, $r_\text{KL}$. The KL divergence term penalizes the RL policy from moving substantially away from the initial pretrained model with each training batch, which can be useful to make sure the model outputs reasonably coherent text snippets. Without this penalty, the optimization can start to generate text that is gibberish but fools the reward model into giving a high reward. In practice, the KL divergence is approximated via sampling from both distributions (explained by John Schulman here). The final reward sent to the RL update rule is $r = r_\theta - \lambda r_\text{KL}$, where $\lambda$ scales the KL penalty.
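Here is a minimal sketch of the combined reward for one prompt-completion pair. It assumes we already have the preference model’s scalar score $r_\theta$ and the log-probabilities that the RL policy and the frozen initial model each assign to the tokens the policy generated. The function names and the coefficient `lam = 0.1` are hypothetical choices, and the KL term uses the simple single-sample estimator (the log-probability ratio of the sampled tokens).

```python
from typing import Sequence


def kl_estimate(policy_logprobs: Sequence[float], ref_logprobs: Sequence[float]) -> float:
    """Single-sample KL estimate: sum over generated tokens of log pi_RL(y_t) - log pi_ref(y_t).

    Both sequences score the *same* tokens, sampled from the RL policy; the reference
    values come from the frozen initial model, which receives no gradient updates.
    """
    if len(policy_logprobs) != len(ref_logprobs):
        raise ValueError("log-prob sequences must cover the same tokens")
    return sum(p - q for p, q in zip(policy_logprobs, ref_logprobs))


def rlhf_reward(r_theta: float, policy_logprobs: Sequence[float],
                ref_logprobs: Sequence[float], lam: float = 0.1) -> float:
    """Final reward r = r_theta - lam * r_KL for one completion."""
    return r_theta - lam * kl_estimate(policy_logprobs, ref_logprobs)


# Hypothetical log-probabilities for a three-token completion.
policy_lp = [-0.5, -1.0, -0.2]
ref_lp = [-0.7, -1.5, -0.9]
print(rlhf_reward(2.0, policy_lp, ref_lp))  # ~1.86
```

For a single completion this estimate can even be negative; it only matches the KL divergence on average over samples. Some implementations also apply the penalty per token rather than once per sequence; this sketch uses the simpler sequence-level form.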

Some RLHF systems have added additional terms to the reward function. For example, OpenAI experimented successfully on InstructGPT by mixing in additional pre-training gradients (from the human annotation set) into the update rule for PPO. It is likely that, as RLHF is further investigated, the formulation of this reward function will continue to evolve.

Finally, the update rule is the parameter update from PPO that maximizes the reward metrics in the current batch of data (PPO is on-policy, which means the parameters are only updated with the current batch of prompt-generation pairs). PPO is a trust region optimization algorithm that constrains how far each update can move the policy, so that the update step does not destabilize the learning process. DeepMind used a similar reward setup for Gopher but used synchronous advantage actor-critic (A2C) to optimize the gradients, which is notably different but has not been reproduced externally.
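For reference, here is the clipped surrogate objective from the original PPO paper, written as a minimal sketch over per-token log-probabilities. It assumes the advantages have already been computed (e.g. from the KL-penalized reward and a value function, neither shown here), and `clip_eps = 0.2` is a common choice rather than a value reported by the labs above.

```python
import math
from typing import Sequence


def ppo_clip_objective(new_logprobs: Sequence[float], old_logprobs: Sequence[float],
                       advantages: Sequence[float], clip_eps: float = 0.2) -> float:
    """Mean clipped surrogate objective from PPO (to be maximized)."""
    total = 0.0
    for new_lp, old_lp, adv in zip(new_logprobs, old_logprobs, advantages):
        ratio = math.exp(new_lp - old_lp)  # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Take the more pessimistic of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


# A token whose probability doubled under the new policy, with a positive advantage:
print(ppo_clip_objective([math.log(2.0)], [0.0], [1.0]))  # capped near 1.2 instead of 2.0
```

Taking the minimum means the policy gains nothing from pushing the probability ratio beyond the clip range in the direction the advantage favors, which keeps each update close to the policy that generated the batch.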

Technical detail note: The above diagram makes it look like both models generate different responses for the same prompt, but what really happens is that the RL policy generates text, and that text is fed into the initial model to produce its relative probabilities for the KL penalty. This initial model is untouched by gradient updates during training.

Optionally, RLHF can continue from this point by iteratively updating the reward model and the policy together. As the RL policy updates, users can continue ranking these outputs versus the model’s earlier versions. Most papers have yet to discuss implementing this operation, as the deployment mode needed to collect this type of data only works for dialogue agents with access to an engaged user base. Anthropic discusses this option as Iterated Online RLHF (see the original paper), where iterations of the policy are included in the Elo ranking system across models. This introduces complex dynamics of the policy and reward model evolving together, which remains an open research question.

Open-source tools for RLHF

The first code released to perform RLHF on LMs was from OpenAI in TensorFlow in 2019.

Today, there are already a few active repositories for RLHF in PyTorch that grew out of this. The primary repositories are Transformers Reinforcement Learning (TRL), TRLX which originated as a fork of TRL, and Reinforcement Learning for Language models (RL4LMs).

TRL is designed to fine-tune pretrained LMs in the Hugging Face ecosystem with PPO. TRLX is an expanded fork of TRL built by CarperAI to handle larger models for online and offline training. At the moment, TRLX has an API capable of production-ready RLHF with PPO and Implicit Language Q-Learning (ILQL) at the scales required for LLM deployment (e.g. 33 billion parameters). Future versions of TRLX will allow for language models up to 200B parameters. As such, interfacing with TRLX is optimized for machine learning engineers with experience at this scale.

RL4LMs offers building blocks for fine-tuning and evaluating LLMs with a wide variety of RL algorithms (PPO, NLPO, A2C and TRPO), reward functions and metrics. Moreover, the library is easily customizable, which allows training of any encoder-decoder or encoder transformer-based LM on any arbitrary user-specified reward function. Notably, it is well-tested and benchmarked on a broad range of tasks in recent work amounting to up to 2000 experiments, highlighting several practical insights on data budget comparison (expert demonstrations vs. reward modeling), handling reward hacking and training instabilities, etc.

RL4LMs’ current plans include distributed training of larger models and new RL algorithms.

Both TRLX and RL4LMs are under heavy further development, so expect more features beyond these soon.

There’s a big dataset created by Anthropic available on the Hub.

What’s next for RLHF?

While these techniques are extremely promising and impactful and have caught the attention of the biggest research labs in AI, there are still clear limitations. The models, while better, can still output harmful or factually inaccurate text without any uncertainty. This imperfection represents a long-term challenge and motivation for RLHF: operating in an inherently human problem domain means there will never be a clear final line to cross for the model to be labeled as complete.

When deploying a system using RLHF, gathering the human preference data is quite expensive due to the direct integration of other human workers outside the training loop. RLHF performance is only as good as the quality of its human annotations, which come in two varieties: human-generated text, such as that used for fine-tuning the initial LM in InstructGPT, and labels of human preferences between model outputs.

Generating well-written human text answering specific prompts is very costly, as it often requires hiring part-time staff (rather than being able to rely on product users or crowdsourcing). Thankfully, the scale of data used in training the reward model for most applications of RLHF (~50k labeled preference samples) is not as expensive. However, it is still a higher cost than academic labs would likely be able to afford. Currently, there only exists one large-scale dataset for RLHF on a general language model (from Anthropic) and a couple of smaller-scale task-specific datasets (such as summarization data from OpenAI). The second challenge of data for RLHF is that human annotators can often disagree, adding substantial potential variance to the training data without ground truth.

With these limitations, huge swaths of unexplored design options could still enable RLHF to take substantial strides. Many of these fall within the domain of improving the RL optimizer. PPO is a relatively old algorithm, but there are no structural reasons that other algorithms could not offer benefits and permutations on the existing RLHF workflow. One large cost of the feedback portion of fine-tuning the LM policy is that every generated piece of text from the policy needs to be evaluated on the reward model (as it acts like part of the environment in the standard RL framework). To avoid these costly forward passes of a large model, offline RL could be used as a policy optimizer. Recently, new algorithms have emerged, such as implicit language Q-learning (ILQL) [Talk on ILQL at CarperAI], that fit particularly well with this type of optimization. Other core trade-offs in the RL process, like the exploration-exploitation balance, have also not been documented. Exploring these directions would at least build a substantial understanding of how RLHF functions and could also provide improved performance.

We hosted a lecture on Tuesday 13 December 2022 that expanded on this post; you’ll be able to watch it here!

Further reading

Here is a list of the most prevalent papers on RLHF to date. The field was recently popularized with the emergence of DeepRL (around 2017) and has grown into a broader study of the applications of LLMs from many large technology companies.

Here are some papers on RLHF that pre-date the LM focus:

And here’s a snapshot of the growing set of “key” papers that show RLHF’s performance for LMs:

- Fine-Tuning Language Models from Human Preferences (Ziegler et al. 2019): An early paper that studies the impact of reward learning on four specific tasks.

- Learning to summarize with human feedback (Stiennon et al., 2020): RLHF applied to the task of summarizing text. Also, Recursively Summarizing Books with Human Feedback (OpenAI Alignment Team 2021), follow-on work summarizing books.

- WebGPT: Browser-assisted question-answering with human feedback (OpenAI, 2021): Using RLHF to train an agent to navigate the web.

- InstructGPT: Training language models to follow instructions with human feedback (OpenAI Alignment Team 2022): RLHF applied to a general language model [Blog post on InstructGPT].

- GopherCite: Teaching language models to support answers with verified quotes (Menick et al. 2022): Train an LM with RLHF to return answers with specific citations.

- Sparrow: Improving alignment of dialogue agents via targeted human judgements (Glaese et al. 2022): Fine-tuning a dialogue agent with RLHF.

- ChatGPT: Optimizing Language Models for Dialogue (OpenAI 2022): Training an LM with RLHF for suitable use as an all-purpose chat bot.

- Scaling Laws for Reward Model Overoptimization (Gao et al. 2022): studies the scaling properties of the learned preference model in RLHF.

- Training a Helpful and Harmless Assistant with Reinforcement Learning from Human Feedback (Anthropic, 2022): A detailed account of training an LM assistant with RLHF.

- Red Teaming Language Models to Reduce Harms: Methods, Scaling Behaviors, and Lessons Learned (Ganguli et al. 2022): A detailed account of efforts to “discover, measure, and attempt to reduce [language models] potentially harmful outputs.”

- Dynamic Planning in Open-Ended Dialogue using Reinforcement Learning (Cohen et al. 2022): Using RL to enhance the conversational skill of an open-ended dialogue agent.

- Is Reinforcement Learning (Not) for Natural Language Processing?: Benchmarks, Baselines, and Building Blocks for Natural Language Policy Optimization (Ramamurthy and Ammanabrolu et al. 2022): Discusses the design space of open-source tools in RLHF and proposes a new algorithm, NLPO (Natural Language Policy Optimization), as an alternative to PPO.

- Llama 2 (Touvron et al. 2023): Impactful open-access model with substantial RLHF details.

The field is the convergence of multiple fields, so you can also find resources in other areas:

Citation:

If you found this useful for your academic work, please consider citing our work, in text:

Lambert, et al., "Illustrating Reinforcement Learning from Human Feedback (RLHF)", Hugging Face Blog, 2022.

BibTeX citation:

@article{lambert2022illustrating,
  author = {Lambert, Nathan and Castricato, Louis and von Werra, Leandro and Havrilla, Alex},
  title = {Illustrating Reinforcement Learning from Human Feedback (RLHF)},
  journal = {Hugging Face Blog},
  year = {2022},
  note = {https://huggingface.co/blog/rlhf},
}

Thanks to Robert Kirk for fixing some factual errors regarding specific implementations of RLHF. Thanks to Stas Bekman for fixing some typos and confusing phrases. Thanks to Peter Stone, Khanh X. Nguyen and Yoav Artzi for helping expand the related works further into history. Thanks to Igor Kotenkov for pointing out a technical error in the KL-penalty term of the RLHF procedure, its diagram, and textual description.