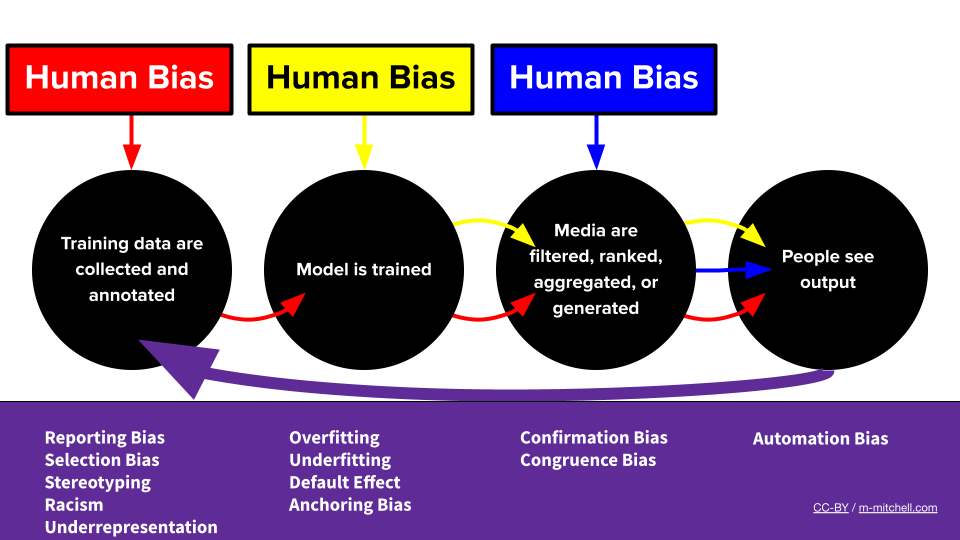

Bias in ML is ubiquitous, and Bias in ML is complex; so complex in fact that no single technical intervention is likely to meaningfully address the problems it engenders. ML models, as sociotechnical systems, amplify social trends that may exacerbate inequities and harmful biases in ways that depend on their deployment context and are constantly evolving.

This means that developing ML systems with care requires vigilance and responding to feedback from those deployment contexts, which in turn we can facilitate by sharing lessons across contexts and developing tools to analyze signs of bias at every level of ML development.

This blog post from the Ethics and Society regulars @🤗 shares some of the lessons we have learned along with tools we have developed to support ourselves and others in our community’s efforts to better address bias in Machine Learning. The first part is a broader reflection on bias and its context. If you’ve already read it and are coming back specifically for the tools, feel free to jump to the datasets or models section!

Selection of tools developed by 🤗 team members to address bias in ML

Table of contents:

- On Machine Biases

- Tools and Recommendations

Machine Bias: from ML Systems to Personal and Social Risks

ML systems allow us to automate complex tasks at a scale never seen before as they are deployed in more sectors and use cases. When the technology works at its best, it can help smooth interactions between people and technical systems, remove the need for highly repetitive work, or unlock new ways of processing information to support research.

These same systems are also likely to reproduce discriminatory and abusive behaviors represented in their training data, especially when the data encodes human behaviors.

The technology then has the potential to make these issues significantly worse. Automation and deployment at scale can indeed:

- lock in behaviors in time and hinder social progress from being reflected in technology,

- spread harmful behaviors beyond the context of the original training data,

- amplify inequities by overfocusing on stereotypical associations when making predictions,

- remove possibilities for recourse by hiding biases inside “black-box” systems.

In order to better understand and address these risks, ML researchers and developers have started studying machine bias or algorithmic bias, mechanisms that might lead systems to, for example, encode negative stereotypes or associations or to have disparate performance for different population groups in their deployment context.

These issues are deeply personal for many of us ML researchers and developers at Hugging Face and in the broader ML community. Hugging Face is a global company, with many of us existing between countries and cultures. It is hard to fully express our sense of urgency when we see the technology we work on developed without sufficient concern for protecting people like us; especially when these systems lead to discriminatory wrongful arrests or undue financial distress and are being increasingly sold to immigration and law enforcement services around the world. Similarly, seeing our identities routinely suppressed in training datasets or underrepresented in the outputs of “generative AI” systems connects these concerns to our daily lived experiences in ways that are simultaneously enlightening and taxing.

While our own experiences do not come close to covering the myriad ways in which ML-mediated discrimination can disproportionately harm people whose experiences differ from ours, they provide an entry point into considerations of the trade-offs inherent in the technology. We work on these systems because we strongly believe in ML’s potential: we think it can shine as a valuable tool as long as it is developed with care and input from people in its deployment context, rather than as a one-size-fits-all panacea. In particular, enabling this care requires developing a better understanding of the mechanisms of machine bias across the ML development process, and developing tools that support people with all levels of technical knowledge of these systems in participating in the necessary conversations about how their benefits and harms are distributed.

The present blog post from the Hugging Face Ethics and Society regulars provides an overview of how we have worked, are working, or recommend users of the HF ecosystem of libraries may work to address bias at the various stages of the ML development process, and the tools we develop to support this process. We hope you will find it a useful resource to guide concrete considerations of the social impact of your work and can leverage the tools referenced here to help mitigate these issues when they arise.

Putting Bias in Context

The first and maybe most important concept to consider when dealing with machine bias is context. In their foundational work on bias in NLP, Su Lin Blodgett et al. point out that: “[T]he majority of [academic works on machine bias] fail to engage critically with what constitutes “bias” in the first place”, including by building their work on top of “unstated assumptions about what kinds of system behaviors are harmful, in what ways, to whom, and why”.

This may not come as much of a surprise given the ML research community’s focus on the value of “generalization”, the most cited motivation for work in the field after “performance”. However, while tools for bias assessment that apply to a wide range of settings are valuable to enable a broader analysis of common trends in model behaviors, their ability to target the mechanisms that lead to discrimination in concrete use cases is inherently limited. Using them to guide specific decisions within the ML development cycle usually requires an extra step or two to take the system’s specific use context and affected people into consideration.

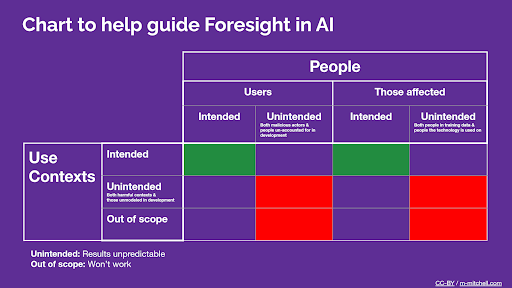

Excerpt on considerations of ML uses context and people from the Model Card Guidebook

Now let’s dive deeper into the issue of linking biases in stand-alone/context-less ML artifacts to specific harms. It can be useful to think of machine biases as risk factors for discrimination-based harms. Take the example of a text-to-image model that over-represents light skin tones when prompted to create a picture of a person in a professional setting, but produces darker skin tones when the prompts mention criminality. These tendencies would be what we call machine biases at the model level. Now let’s think about a few systems that use such a text-to-image model:

- The model is integrated into a website creation service (e.g. SquareSpace, Wix) to help users generate backgrounds for their pages. The model explicitly disables images of people in the generated background.

  - In this case, the machine bias “risk factor” does not lead to discrimination harm because the focus of the bias (images of people) is absent from the use case.

  - Further risk mitigation is not required for machine biases, although developers should be aware of ongoing discussions about the legality of integrating systems trained on scraped data in commercial systems.

- The model is integrated into a stock images website to provide users with synthetic images of people (e.g. in professional settings) that they can use with fewer privacy concerns, for example, to serve as illustrations for Wikipedia articles.

  - In this case, machine bias acts to lock in and amplify existing social biases. It reinforces stereotypes about people (“CEOs are all white men”) that then feed back into complex social systems where increased bias leads to increased discrimination in many different ways (such as reinforcing implicit bias in the workplace).

  - Mitigation strategies may include educating the stock image users about these biases, or the stock image website may curate generated images to intentionally propose a more diverse set of representations.

- The model is integrated into a “virtual sketch artist” software marketed to police departments that will use it to generate pictures of suspects based on verbal testimony.

  - In this case, the machine biases directly cause discrimination by systematically directing police departments to darker-skinned people, putting them at increased risk of harm including physical injury and unlawful imprisonment.

  - In cases like this one, there may be no level of bias mitigation that makes the risk acceptable. In particular, such a use case would be closely related to face recognition in the context of law enforcement, where similar bias issues have led several commercial entities and legislatures to adopt moratoria pausing or banning its use across the board.

So, who’s on the hook for machine biases in ML? These three cases illustrate one of the reasons why discussions about the responsibility of ML developers in addressing bias can get so complicated: depending on decisions made at other points in the ML system development process by other people, the biases in an ML dataset or model may land anywhere between being irrelevant to the application settings and directly leading to grievous harm. However, in all of these cases, stronger biases in the model/dataset increase the risk of negative outcomes. The European Union has started to develop frameworks that address this phenomenon in recent regulatory efforts: in short, a company that deploys an AI system based on a measurably biased model is liable for harm caused by the system.

Conceptualizing bias as a risk factor then allows us to better understand the shared responsibility for machine biases between developers at all stages. Bias can never be fully removed, not least because the definitions of social biases and the power dynamics that tie them to discrimination vary vastly across social contexts. However:

- Each stage of the development process, from task specification, dataset curation, and model training, to model integration and system deployment, can take steps to minimize the aspects of machine bias that most directly depend on its choices and technical decisions, and

- Clear communication and information flow between the various ML development stages can make the difference between making choices that build on top of each other to attenuate the negative potential of bias (multipronged approach to bias mitigation, as in deployment scenario 1 above) versus making choices that compound this negative potential to exacerbate the risk of harm (as in deployment scenario 3).

In the next section, we review these various stages along with some of the tools that can help us address machine bias at each of them.

Addressing Bias throughout the ML Development Cycle

Ready for some practical advice yet? Here we go 🤗

There is no one single way to develop ML systems; which steps happen in what order depends on a number of factors including the development setting (university, large company, startup, grassroots organization, etc…), the modality (text, tabular data, images, etc…), and the preeminence or scarcity of publicly available ML resources. However, we can identify three common stages of particular interest in addressing bias. These are the task definition, the data curation, and the model training. Let’s have a look at how bias handling may differ across these various stages.

The Bias ML Pipeline by Meg

I’m defining the task of my ML system, how can I address bias?

Whether and to what extent bias in the system concretely affects people ultimately depends on what the system is used for. As such, the first place developers can work to mitigate bias is when deciding how ML fits in their system, e.g., by deciding what optimization objective it will use.

For example, let’s go back to one of the first highly-publicized cases of a Machine Learning system used in production for algorithmic content recommendation. From 2006 to 2009, Netflix ran the Netflix Prize, a competition with a 1M$ cash prize challenging teams around the world to develop ML systems to accurately predict a user’s rating for a new movie based on their past ratings. The winning submission improved the RMSE (Root-mean-square-error) of predictions on unseen user-movie pairs by over 10% over Netflix’s own CineMatch algorithm, meaning it got much better at predicting how users would rate a new movie based on their history. This approach opened the door for much of modern algorithmic content recommendation by bringing the role of ML in modeling user preferences in recommender systems to public awareness.
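To make the metric concrete, here is a minimal sketch of how RMSE and a relative improvement over a baseline can be computed. The ratings and predictions are made-up toy values chosen for easy arithmetic, not Netflix Prize data, and the function name `rmse` is ours.

```python
import math

def rmse(predicted, actual):
    """Root-mean-square error between two equal-length rating sequences."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("inputs must be non-empty and the same length")
    squared_errors = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

# Hypothetical 1-5 star ratings for four unseen user-movie pairs.
actual = [4.0, 2.0, 5.0, 3.0]
baseline_predictions = [3.0, 3.0, 4.0, 4.0]  # off by one star each time
improved_predictions = [3.5, 2.5, 4.5, 3.5]  # off by half a star each time

baseline_rmse = rmse(baseline_predictions, actual)  # 1.0
improved_rmse = rmse(improved_predictions, actual)  # 0.5
relative_improvement = (baseline_rmse - improved_rmse) / baseline_rmse  # 0.5

print(f"baseline={baseline_rmse:.2f} improved={improved_rmse:.2f} "
      f"gain={relative_improvement:.0%}")
```

Note that the metric only rewards getting closer to the ratings users would give; nothing in it accounts for how varied the resulting recommendations are.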

So what does this have to do with bias? Doesn’t showing people content that they’re likely to enjoy sound like a good service from a content platform? Well, it turns out that showing people more examples of what they’ve liked in the past ends up reducing the diversity of the media they consume. Not only does it lead users to be less satisfied in the long term, but it also means that any biases or stereotypes captured by the initial models (such as when modeling the preferences of Black American users or dynamics that systematically disadvantage some artists) are likely to be reinforced if the model is further trained on ongoing ML-mediated user interactions. This reflects two of the types of bias-related concerns we’ve mentioned above: the training objective acts as a risk factor for bias-related harms as it makes pre-existing biases much more likely to show up in predictions, and the task framing has the effect of locking in and exacerbating past biases.

A promising bias mitigation strategy at this stage has been to reframe the task to explicitly model both engagement and diversity when applying ML to algorithmic content recommendation. Users are likely to get more long-term satisfaction and the risk of exacerbating biases as outlined above is reduced!
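As a rough illustration of what modeling both engagement and diversity can look like, the sketch below greedily re-ranks candidates with a score that mixes predicted engagement with a bonus for categories not yet shown. This is one simple way to do it that we picked for illustration, not the method of any particular platform; the catalog, the `diversity_weight` parameter, and the function name are all hypothetical.

```python
def rerank_with_diversity(candidates, k, diversity_weight=0.5):
    """Greedily pick k items, trading predicted engagement against category novelty.

    candidates: list of (item_id, predicted_engagement in [0, 1], category).
    diversity_weight: 0.0 ranks purely by engagement; higher values favor
    categories that have not been selected yet.
    """
    remaining = list(candidates)
    selected = []
    seen_categories = set()

    def combined_score(item):
        _, engagement, category = item
        novelty = 0.0 if category in seen_categories else 1.0
        return (1 - diversity_weight) * engagement + diversity_weight * novelty

    while remaining and len(selected) < k:
        best = max(remaining, key=combined_score)
        remaining.remove(best)
        selected.append(best)
        seen_categories.add(best[2])
    return [item_id for item_id, _, _ in selected]

# Hypothetical catalog: the model predicts the user mostly engages with action.
catalog = [
    ("a", 0.95, "action"),
    ("b", 0.90, "action"),
    ("c", 0.85, "action"),
    ("d", 0.60, "documentary"),
    ("e", 0.55, "comedy"),
]

engagement_only = rerank_with_diversity(catalog, k=3, diversity_weight=0.0)
balanced = rerank_with_diversity(catalog, k=3, diversity_weight=0.5)
print(engagement_only)  # ['a', 'b', 'c']
print(balanced)         # ['a', 'd', 'e']
```

With the weight at zero the user only ever sees the category they already prefer; with a non-zero weight the top pick stays the same but the rest of the slate opens up.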

This example serves to illustrate that the impact of machine biases in an ML-supported product depends not just on where we decide to leverage ML, but also on how ML techniques are integrated into the broader technical system, and with what objective. When first investigating how ML can fit into a product or a use case you are interested in, we first recommend looking for the failure modes of the system through the lens of bias before even diving into the available models or datasets: which behaviors of existing systems in the space will be particularly harmful or more likely to occur if bias is exacerbated by ML predictions?

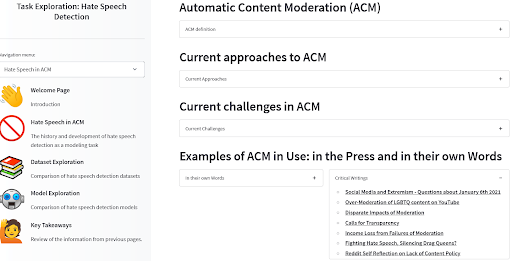

We built a tool to take users through these questions in another case of algorithmic content management: hate speech detection in automatic content moderation. We found for example that looking through news and scientific articles that didn’t particularly focus on the ML part of the technology was already a great way to get a sense of where bias is already at play. Definitely go have a look for an example of how the models and datasets fit with the deployment context and how they can relate to known bias-related harms!

ACM Task Exploration tool by Angie, Amandalynne, and Yacine

Task definition: recommendations

There are as many ways for the ML task definition and deployment to affect the risk of bias-related harms as there are applications for ML systems. As in the examples above, some common steps that may help decide whether and how to apply ML in a way that minimizes bias-related risk include:

- Investigate:

  - Reports of bias in the field pre-ML

  - At-risk demographic categories for your specific use case

- Examine:

  - The impact of your optimization objective on reinforcing biases

  - Alternative objectives that favor diversity and positive long-term impacts

I’m curating/picking a dataset for my ML system, how can I address bias?

While training datasets are not the sole source of bias in the ML development cycle, they do play a significant role. Does your dataset disproportionately associate biographies of women with life events but those of men with achievements? Those stereotypes are probably going to show up in your full ML system! Does your voice recognition dataset only feature specific accents? Not a good sign for the inclusivity of technology you build with it in terms of disparate performance! Whether you’re curating a dataset for ML applications or selecting a dataset to train an ML model, finding out, mitigating, and communicating to what extent the data exhibits these phenomena are all necessary steps to reducing bias-related risks.

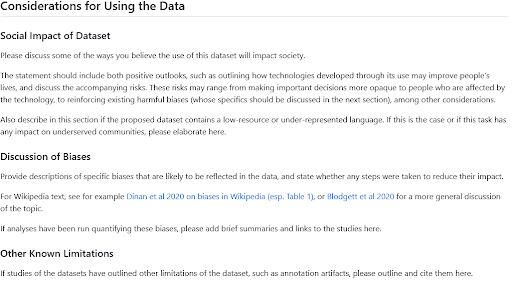

You can usually get a pretty good sense of likely biases in a dataset by reflecting on where it comes from, who the people represented in the data are, and what the curation process was. Several frameworks for this reflection and documentation have been proposed such as Data Statements for NLP or Datasheets for Datasets. The Hugging Face Hub includes a Dataset Card template and guide inspired by these works; the section on considerations for using the data is usually a good place to look for information about notable biases if you’re browsing datasets, or to write a paragraph sharing your insights on the topic if you’re sharing a new one. And if you’re looking for some more inspiration on what to put there, check out these sections written by Hub users in the BigLAM organization for historical datasets of legal proceedings, image classification, and newspapers.

HF Dataset Card guide for the Social Impact and Bias Sections

While describing the origin and context of a dataset is always a good starting point to understand the biases at play, quantitatively measuring phenomena that encode those biases can be just as helpful. If you’re choosing between two different datasets for a given task or choosing between two ML models trained on different datasets, knowing which one better represents the demographic makeup of your ML system’s user base can help you make an informed decision to minimize bias-related risks. If you’re curating a dataset iteratively by filtering data points from a source or selecting new sources of data to add, measuring how these choices affect the diversity and biases present in your overall dataset can make it safer to use in general.
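As a small sketch of this kind of comparison, the snippet below computes the share of each group in two candidate datasets and measures how far each is from an expected user base, using total variation distance. The accent labels, the user-base proportions, and the choice of distance are all assumptions made for illustration; in practice the group annotations are often the hard part to obtain.

```python
from collections import Counter

def composition(labels):
    """Share of each group label in a dataset, as a dict of fractions."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}

def total_variation_distance(dist_a, dist_b):
    """Half the L1 distance between two distributions; 0 means identical."""
    groups = set(dist_a) | set(dist_b)
    return 0.5 * sum(abs(dist_a.get(g, 0.0) - dist_b.get(g, 0.0)) for g in groups)

# Hypothetical accent annotations for two candidate speech datasets.
dataset_a = ["us"] * 80 + ["uk"] * 15 + ["in"] * 5
dataset_b = ["us"] * 40 + ["uk"] * 30 + ["in"] * 30

# Hypothetical makeup of the expected user base.
user_base = {"us": 0.4, "uk": 0.2, "in": 0.4}

gap_a = total_variation_distance(composition(dataset_a), user_base)
gap_b = total_variation_distance(composition(dataset_b), user_base)
print(f"dataset_a gap: {gap_a:.2f}")  # 0.40
print(f"dataset_b gap: {gap_b:.2f}")  # 0.10
```

A smaller gap only says the dataset's makeup is closer to the target population along the one variable you measured; it does not say anything about the quality of the examples within each group.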

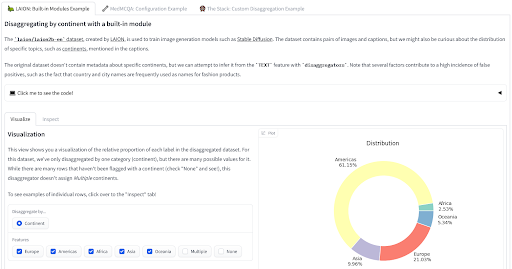

We’ve recently released two tools you can leverage to measure your data through a bias-informed lens. The disaggregators🤗 library provides utilities to quantify the composition of your dataset, using either metadata or leveraging models to infer properties of data points. This can be particularly useful to minimize risks of bias-related representation harms or disparate performances of trained models. Look at the demo to see it applied to the LAION, MedMCQA, and The Stack datasets!

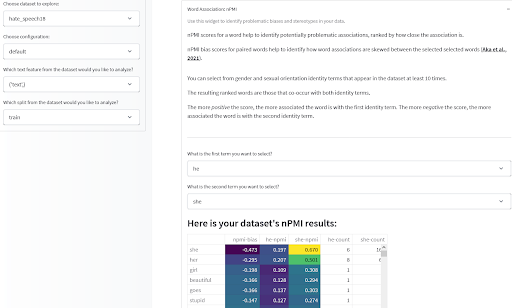

Once you have some helpful statistics about the composition of your dataset, you’ll also want to look at associations between features in your data items, particularly at associations that may encode derogatory or otherwise negative stereotypes. The Data Measurements Tool we originally introduced last year allows you to do this by looking at the normalized Pointwise Mutual Information (nPMI) between terms in your text-based dataset; particularly associations between gendered pronouns that may denote gendered stereotypes. Run it yourself or try it here on a few pre-computed datasets!
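For readers who have not met nPMI before, here is a minimal sketch of the quantity itself, computed from sentence-level co-occurrence on a tiny made-up corpus. It is not the Data Measurements Tool's implementation: the whitespace tokenization, the sentence-level co-occurrence window, and the function name `npmi` are simplifying assumptions. The definition used is nPMI(a, b) = PMI(a, b) / -log p(a, b), with PMI(a, b) = log(p(a, b) / (p(a) p(b))).

```python
import math

def npmi(sentences, term_a, term_b):
    """Normalized PMI of two terms co-occurring in the same sentence.

    Returns a value in [-1, 1], or None when it is undefined.
    """
    token_sets = [set(sentence.lower().split()) for sentence in sentences]
    n = len(token_sets)
    count_a = sum(term_a in tokens for tokens in token_sets)
    count_b = sum(term_b in tokens for tokens in token_sets)
    count_ab = sum(term_a in tokens and term_b in tokens for tokens in token_sets)
    # Undefined when the pair never co-occurs or co-occurs in every sentence.
    if count_ab == 0 or count_ab == n:
        return None
    p_a, p_b, p_ab = count_a / n, count_b / n, count_ab / n
    pmi = math.log(p_ab / (p_a * p_b))
    return pmi / -math.log(p_ab)

# Toy corpus, invented for illustration only.
corpus = [
    "she is a nurse",
    "she is a nurse today",
    "he is a nurse",
    "he is an engineer",
    "he is an engineer too",
    "she is an engineer",
]

she_nurse = npmi(corpus, "she", "nurse")
he_nurse = npmi(corpus, "he", "nurse")
print(f"nPMI(she, nurse) = {she_nurse:.3f}")
print(f"nPMI(he, nurse)  = {he_nurse:.3f}")
print(f"difference       = {she_nurse - he_nurse:.3f}")
```

A positive value means the two terms appear together more often than chance would predict, a negative value less often. Comparing the scores of two pronouns against the same term, as in the last line, is one way to spot a skewed association.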

Data Measurements tool by Meg, Sasha, Bibi, and the Gradio team

Dataset selection/curation: recommendations

These tools aren’t full solutions by themselves; rather, they are designed to support critical examination and improvement of datasets through several lenses, including the lens of bias and bias-related risks. In general, we encourage you to keep the following steps in mind when leveraging these and other tools to mitigate bias risks at the dataset curation/selection stage:

- Identify:

  - Aspects of the dataset creation that may exacerbate specific biases

  - Demographic categories and social variables that are particularly important to the dataset’s task and domain

- Measure:

  - The demographic distribution in your dataset

  - Pre-identified negative stereotypes represented

- Document:

  - Share what you’ve Identified and Measured in your Dataset Card so it can benefit other users, developers, and otherwise affected people

- Adapt:

  - By choosing the dataset least likely to cause bias-related harms

  - By iteratively improving your dataset in ways that reduce bias risks

I’m training/choosing a model for my ML system, how can I address bias?

Similar to the dataset curation/selection step, documenting and measuring bias-related phenomena in models can help both ML developers who are selecting a model to use as-is or to finetune and ML developers who want to train their own models. For the latter, measures of bias-related phenomena in the model can help them learn from what has worked or what hasn’t for other models and serve as a signal to guide their own development choices.

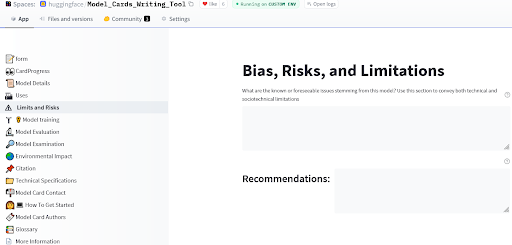

Model cards were originally proposed by (Mitchell et al., 2019) and provide a framework for model reporting that showcases information relevant to bias risks, including broad ethical considerations, disaggregated evaluation, and use case recommendation. The Hugging Face Hub provides even more tools for model documentation, with a model card guidebook in the Hub documentation, and an app that lets you create extensive model cards easily for your new model.

Model Card writing tool by Ezi, Marissa, and Meg

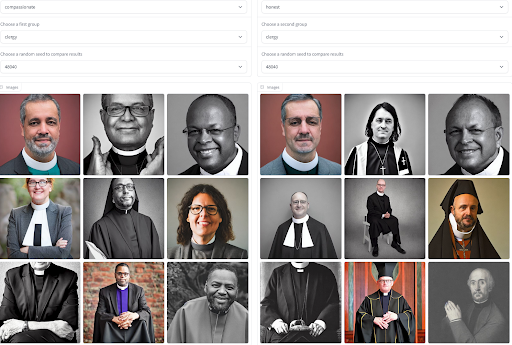

Documentation is a great first step for sharing general insights about a model’s behavior, but it is usually static and presents the same information to all users. In many cases, especially for generative models that can generate outputs to approximate the distribution of their training data, we can gain a more contextual understanding of bias-related phenomena and negative stereotypes by visualizing and contrasting model outputs. Access to model generations can help users surface intersectional issues in the model behavior that correspond to their lived experience, and evaluate to what extent a model reproduces gendered stereotypes for different adjectives. To facilitate this process, we built a tool that lets you compare generations not just across a set of adjectives and professions, but also across different models! Go try it out to get a sense of which model might carry the least bias risks in your use case.

Visualize Adjective and Occupation Biases in Image Generation by Sasha

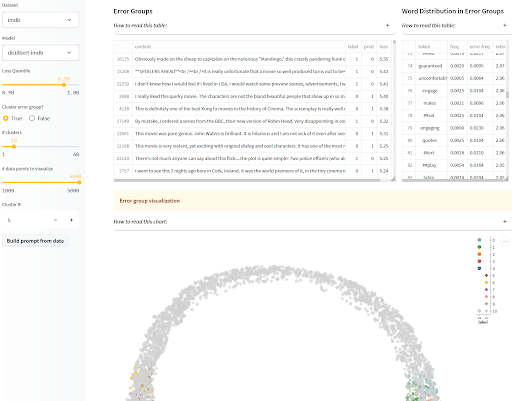

Visualization of model outputs isn’t just for generative models though! For classification models, we also want to look out for bias-related harms caused by a model’s disparate performance on different demographics. If you know what protected classes are most at risk of discrimination and have those annotated in an evaluation set, then you can report disaggregated performance over the different categories in your model card as mentioned above, so users can make informed decisions. If, however, you are worried that you haven’t identified all populations at risk of bias-related harms, or if you do not have access to annotated test examples to measure the biases you suspect, that’s where interactive visualizations of where and how the model fails come in handy! To help you with this, the SEAL app groups similar mistakes by your model and shows you some common features in each cluster. If you want to go further, you can even combine it with the disaggregators library we introduced in the datasets section to find clusters that are indicative of bias-related failure modes!
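When group annotations are available, reporting disaggregated performance can be as simple as the sketch below, which computes accuracy per group alongside the overall number. The labels, predictions, and group names are invented for illustration, and accuracy is just one of the metrics you might want to break down this way.

```python
from collections import defaultdict

def disaggregated_accuracy(y_true, y_pred, groups):
    """Accuracy computed separately for each annotated group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, prediction, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == prediction)
    return {group: correct[group] / total[group] for group in total}

# Hypothetical evaluation set with a group annotation per example.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 1, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

per_group = disaggregated_accuracy(y_true, y_pred, groups)
overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
largest_gap = max(per_group.values()) - min(per_group.values())

print(f"overall accuracy: {overall:.2f}")    # 0.75
print(f"per group: {per_group}")             # {'a': 1.0, 'b': 0.5}
print(f"largest gap: {largest_gap:.2f}")     # 0.50
```

The overall score of 0.75 hides the fact that every error falls on one group, which is exactly the kind of information a model card reader needs.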

Systematic Error Evaluation and Labeling (SEAL) by Nazneen

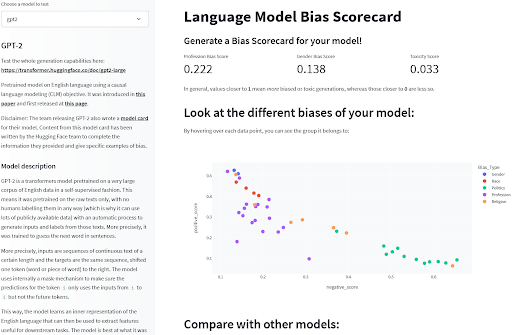

Finally, a few benchmarks exist that can measure bias-related phenomena in models. For language models, benchmarks such as BOLD, HONEST, or WinoBias provide quantitative evaluations of targeted behaviors that are indicative of biases in the models. While the benchmarks have their limitations, they do provide a limited view into some pre-identified bias risks that can help describe how the models function or choose between different models. You can find these evaluations pre-computed on a range of common language models in this exploration Space to get a first sense of how they compare!

Language Model Bias Detection by Sasha

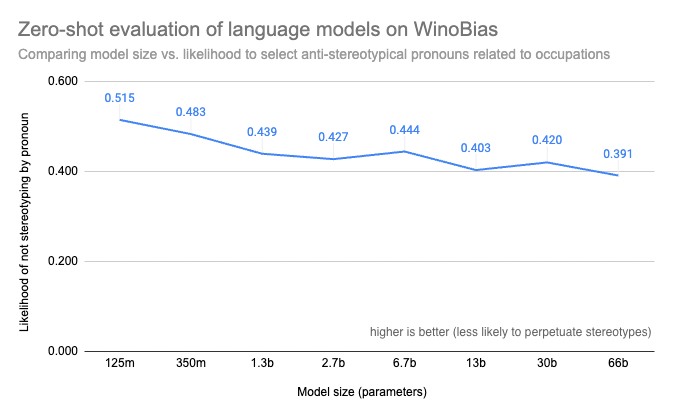

Even with access to a benchmark for the models you are considering, you might find that running evaluations of the larger language models can be prohibitively expensive or otherwise technically impossible with your own computing resources. The Evaluation on the Hub tool we released this year can help with that: not only will it run the evaluations for you, but it will also help connect them to the model documentation so the results are available once and for all, so everyone can see, for example, that size measurably increases bias risks in models like OPT!

Large model WinoBias scores computed with Evaluation on the Hub by Helen, Tristan, Abhishek, Lewis, and Douwe

Model selection/development: recommendations

For models just as for datasets, different tools for documentation and evaluation will provide different views of bias risks in a model which all have a part to play in helping developers choose, develop, or understand ML systems.

- Visualize

  - Generative model: visualize how the model’s outputs may reflect stereotypes

  - Classification model: visualize model errors to identify failure modes that could lead to disparate performance

- Evaluate

  - When possible, evaluate models on relevant benchmarks

- Document

  - Share your learnings from visualization and qualitative evaluation

  - Report your model’s disaggregated performance and results on applicable fairness benchmarks

Conclusion and Overview of Bias Evaluation and Documentation Tools from 🤗

As we learn to leverage ML systems in more and more applications, reaping their benefits equitably will depend on our ability to actively mitigate the risks of bias-related harms associated with the technology. While there is no single answer to the question of how this should best be done in any possible setting, we can support each other in this effort by sharing lessons, tools, and methodologies to mitigate and document those risks. The present blog post outlines some of the ways Hugging Face team members have addressed this question of bias along with supporting tools. We hope that you will find them helpful and encourage you to develop and share your own!

Summary of linked tools:

- Tasks:

  - Explore our directory of ML Tasks to understand what technical framings and resources are available to choose from

  - Use tools to explore the full development lifecycle of specific tasks

- Datasets:

- Models:

Thanks for reading! 🤗

~ Yacine, on behalf of the Ethics and Society regulars

If you want to cite this blog post, please use the following:

@inproceedings{hf_ethics_soc_blog_2,
  author    = {Yacine Jernite and
               Alexandra Sasha Luccioni and
               Irene Solaiman and
               Giada Pistilli and
               Nathan Lambert and
               Ezi Ozoani and
               Brigitte Toussignant and
               Margaret Mitchell},
  title     = {Hugging Face Ethics and Society Newsletter 2: Let's Discuss Bias!},
  booktitle = {Hugging Face Blog},
  year      = {2022},
  url       = {https://doi.org/10.57967/hf/0214},
  doi       = {10.57967/hf/0214}
}