🤗 Datasets is an open-source library for downloading and preparing datasets from all domains. Its minimalistic API

allows users to download and prepare datasets in only one line of Python code, with a set of functions that

enable efficient pre-processing. The number of datasets available is unparalleled, with all the most popular

machine learning datasets available to download.

Not only this, but 🤗 Datasets comes prepared with multiple audio-specific features that make working

with audio datasets easy for researchers and practitioners alike. In this blog, we’ll demonstrate these features, showcasing

why 🤗 Datasets is the go-to place for downloading and preparing audio datasets.

Contents

- The Hub

- Load an Audio Dataset

- Easy to Load, Easy to Process

- Streaming Mode: The Silver Bullet

- A Tour of Audio Datasets on the Hub

- Closing Remarks

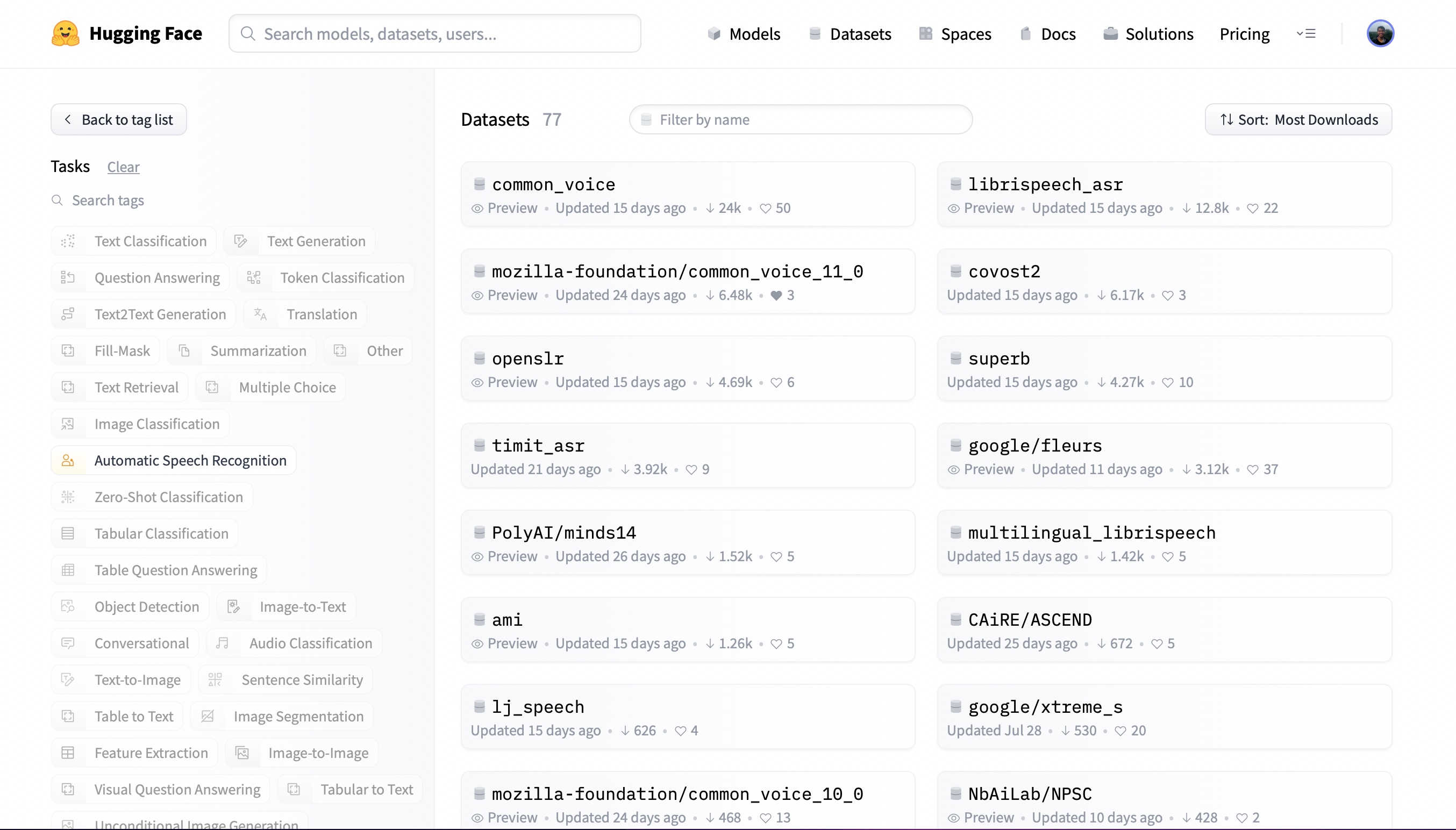

The Hub

The Hugging Face Hub is a platform for hosting models, datasets and demos, all open source and publicly available.

It’s home to a growing collection of audio datasets that span a wide range of domains, tasks and languages. Through

tight integrations with 🤗 Datasets, all of the datasets on the Hub can be downloaded in one line of code.

Let’s head to the Hub and filter the datasets by task:

On the time of writing, there are 77 speech recognition datasets and 28 audio classification datasets on the Hub,

with these numbers ever-increasing. You possibly can select any one in all these datasets to fit your needs. Let’s try the primary

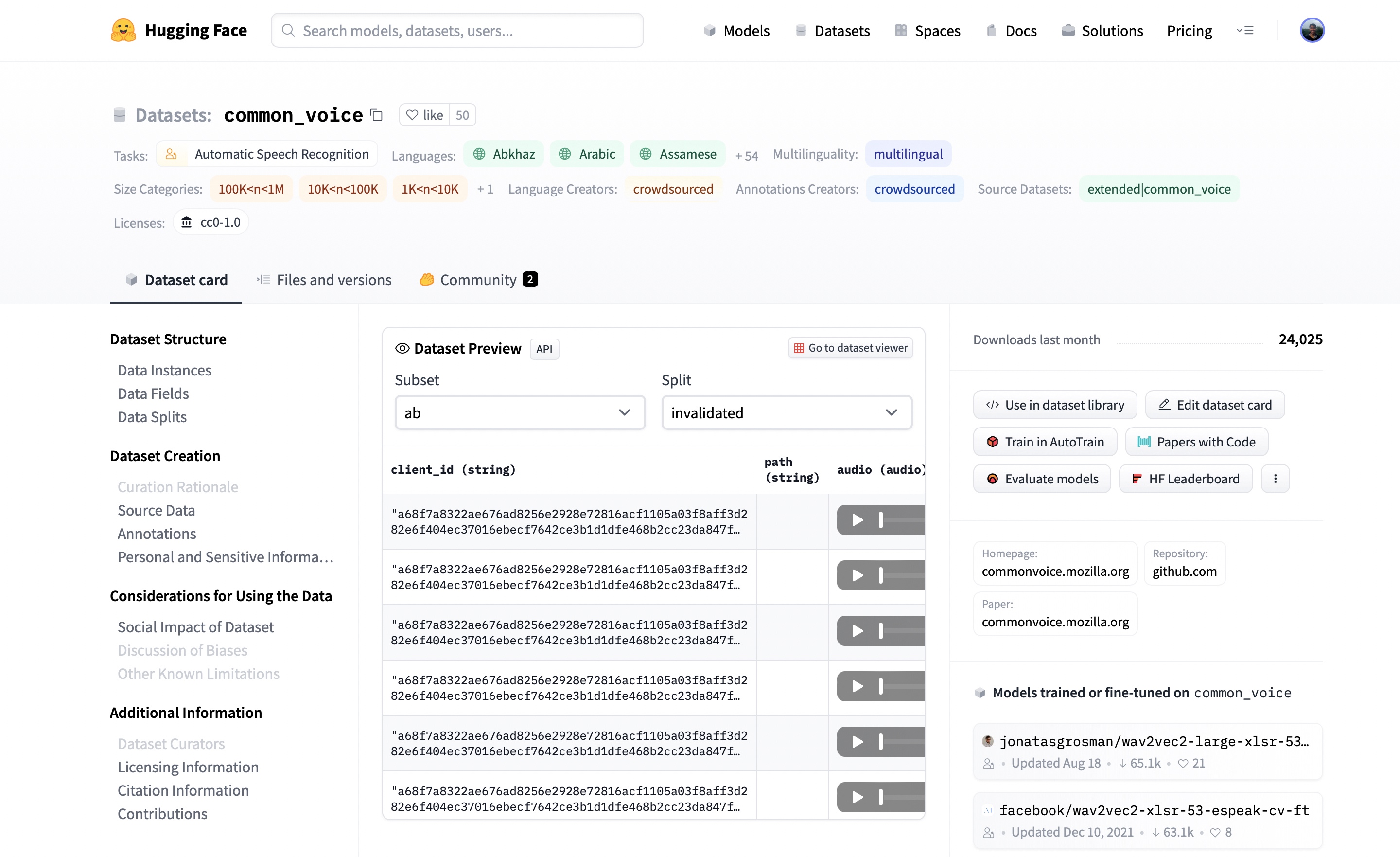

speech recognition result. Clicking on common_voice

brings up the dataset card:

Here, we can find additional information about the dataset, see what models are trained on the dataset and, most excitingly, listen to actual audio samples. The Dataset Preview is presented in the middle of the dataset card. It shows us the first 100 samples for each subset and split. What’s more, it’s loaded up the audio samples ready for us to listen to in real-time. If we hit the play button on the first sample, we can listen to the audio and see the corresponding text.

The Dataset Preview is a brilliant way of experiencing audio datasets before committing to using them. You can pick any dataset on the Hub, scroll through the samples and listen to the audio for the different subsets and splits, gauging whether it’s the right dataset for your needs. Once you’ve selected a dataset, it’s trivial to load the data so that you can start using it.

Load an Audio Dataset

One of the key defining features of 🤗 Datasets is the ability to download and prepare a dataset in just one line of Python code. This is made possible through the load_dataset function. Conventionally, loading a dataset involves: i) downloading the raw data, ii) extracting it from its compressed format, and iii) preparing individual samples and splits. Using load_dataset, all of the heavy lifting is done under the hood.

Let’s take the example of loading the GigaSpeech dataset from Speech Colab. GigaSpeech is a relatively recent speech recognition dataset for benchmarking academic speech systems and is one of many audio datasets available on the Hugging Face Hub.

To load the GigaSpeech dataset, we simply take the dataset’s identifier on the Hub (speechcolab/gigaspeech) and specify it to the load_dataset function. GigaSpeech comes in five configurations of increasing size, ranging from xs (10 hours) to xl (10,000 hours). For the purpose of this tutorial, we’ll load the smallest of these configurations. The dataset’s identifier and the desired configuration are all that we require to download the dataset:

from datasets import load_dataset

gigaspeech = load_dataset("speechcolab/gigaspeech", "xs")

print(gigaspeech)

Print Output:

DatasetDict({
    train: Dataset({
        features: ['segment_id', 'speaker', 'text', 'audio', 'begin_time', 'end_time', 'audio_id', 'title', 'url', 'source', 'category', 'original_full_path'],
        num_rows: 9389
    })
    validation: Dataset({
        features: ['segment_id', 'speaker', 'text', 'audio', 'begin_time', 'end_time', 'audio_id', 'title', 'url', 'source', 'category', 'original_full_path'],
        num_rows: 6750
    })
    test: Dataset({
        features: ['segment_id', 'speaker', 'text', 'audio', 'begin_time', 'end_time', 'audio_id', 'title', 'url', 'source', 'category', 'original_full_path'],
        num_rows: 25619
    })
})

And just like that, we have the GigaSpeech dataset ready! There simply is no easier way of loading an audio dataset. We can see that we have the training, validation and test splits pre-partitioned, with the corresponding information for each.

The object gigaspeech returned by the load_dataset function is a DatasetDict. We can treat it in much the same way as an ordinary Python dictionary. To get the train split, we pass the corresponding key to the gigaspeech dictionary:

print(gigaspeech["train"])

Print Output:

Dataset({
    features: ['segment_id', 'speaker', 'text', 'audio', 'begin_time', 'end_time', 'audio_id', 'title', 'url', 'source', 'category', 'original_full_path'],
    num_rows: 9389
})

This returns a Dataset object, which contains the data for the training split. We can go one level deeper and get the first item of the split. Again, this is possible through standard Python indexing:

print(gigaspeech["train"][0])

Print Output:

{'segment_id': 'YOU0000000315_S0000660',

'speaker': 'N/A',

'text': "AS THEY'RE LEAVING CAN KASH PULL ZAHRA ASIDE REALLY QUICKLY " ,

'audio': {'path': '/home/sanchit_huggingface_co/.cache/huggingface/datasets/downloads/extracted/7f8541f130925e9b2af7d37256f2f61f9d6ff21bf4a94f7c1a3803ec648d7d79/xs_chunks_0000/YOU0000000315_S0000660.wav',

'array': array([0.0005188 , 0.00085449, 0.00012207, ..., 0.00125122, 0.00076294,

0.00036621], dtype=float32),

'sampling_rate': 16000

},

'begin_time': 2941.889892578125,

'end_time': 2945.070068359375,

'audio_id': 'YOU0000000315',

'title': 'Return to Vasselheim | Critical Role: VOX MACHINA | Episode 43',

'url': 'https://www.youtube.com/watch?v=zr2n1fLVasU',

'source': 2,

'category': 24,

'original_full_path': 'audio/youtube/P0004/YOU0000000315.opus',

}

We can see that there are a number of features returned by the training split, including segment_id, speaker, text, audio and more. For speech recognition, we’ll be concerned with the text and audio columns.

Using 🤗 Datasets’ remove_columns method, we can remove the dataset features not required for speech recognition:

COLUMNS_TO_KEEP = ["text", "audio"]

all_columns = gigaspeech["train"].column_names

columns_to_remove = set(all_columns) - set(COLUMNS_TO_KEEP)

gigaspeech = gigaspeech.remove_columns(columns_to_remove)
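To make the set arithmetic concrete, here is a small stand-alone sketch that uses the column names printed earlier instead of a loaded dataset. It only illustrates which names end up in columns_to_remove; the hard-coded all_columns list is our stand-in for gigaspeech["train"].column_names, not something the library requires.

```python
# Column names copied from the printed GigaSpeech features above
# (a hard-coded stand-in for gigaspeech["train"].column_names).
all_columns = [
    "segment_id", "speaker", "text", "audio", "begin_time", "end_time",
    "audio_id", "title", "url", "source", "category", "original_full_path",
]

COLUMNS_TO_KEEP = ["text", "audio"]

# Set difference: every column that is not explicitly kept.
columns_to_remove = set(all_columns) - set(COLUMNS_TO_KEEP)

# Sets are unordered, so we sort purely for a readable printout.
print(sorted(columns_to_remove))
```

Working with a keep-list rather than a remove-list means the same snippet carries over to datasets whose extra columns differ.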

Let’s check that we have successfully retained the text and audio columns:

print(gigaspeech["train"][0])

Print Output:

{'text': "AS THEY'RE LEAVING CAN KASH PULL ZAHRA ASIDE REALLY QUICKLY " ,

'audio': {'path': '/home/sanchit_huggingface_co/.cache/huggingface/datasets/downloads/extracted/7f8541f130925e9b2af7d37256f2f61f9d6ff21bf4a94f7c1a3803ec648d7d79/xs_chunks_0000/YOU0000000315_S0000660.wav',

'array': array([0.0005188 , 0.00085449, 0.00012207, ..., 0.00125122, 0.00076294,

0.00036621], dtype=float32),

'sampling_rate': 16000}}

Great! We can see that we’ve got the two required columns text and audio. The text is a string with the sample transcription and the audio a 1-dimensional array of amplitude values at a sampling rate of 16kHz. That’s our dataset loaded!
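As a quick sanity check on what a 1-dimensional array at 16kHz means, the sketch below relates duration to the number of amplitude values. It uses the begin_time and end_time printed for the first sample and assumes, purely for illustration, that the audio array covers exactly that interval; the true array length is not shown in the output above.

```python
SAMPLING_RATE = 16_000  # samples per second, as reported in the audio dict

# Timestamps (in seconds) printed for the first training sample.
begin_time = 2941.889892578125
end_time = 2945.070068359375

# Duration of the segment and the number of samples we would expect,
# assuming the array spans exactly begin_time to end_time.
duration_s = end_time - begin_time
expected_num_samples = round(duration_s * SAMPLING_RATE)


def duration_in_seconds(num_samples, sampling_rate):
    """Convert a sample count back into a duration in seconds."""
    return num_samples / sampling_rate


print(f"{duration_s:.2f} s of audio is roughly {expected_num_samples} samples")
```

The same division, array length over sampling rate, is what we use later to compute the input length of each sample.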

Easy to Load, Easy to Process

Loading a dataset with 🤗 Datasets is just half of the fun. We can now use the suite of tools available to efficiently pre-process our data ready for model training or inference. In this Section, we’ll perform three stages of data pre-processing: resampling the audio data, writing a pre-processing function and writing a filtering function.

1. Resampling the Audio Data

The load_dataset function prepares audio samples with the sampling rate that they were published with. This is not always the sampling rate expected by our model. In this case, we need to resample the audio to the correct sampling rate.

We can set the audio inputs to our desired sampling rate using 🤗 Datasets’ cast_column method. This operation does not change the audio in-place, but rather signals to datasets to resample the audio samples on the fly when they are loaded. The following code cell will set the sampling rate to 8kHz:

from datasets import Audio

gigaspeech = gigaspeech.cast_column("audio", Audio(sampling_rate=8000))

Re-loading the first audio sample in the GigaSpeech dataset will resample it to the desired sampling rate:

print(gigaspeech["train"][0])

Print Output:

{'text': "AS THEY'RE LEAVING CAN KASH PULL ZAHRA ASIDE REALLY QUICKLY " ,

'audio': {'path': '/home/sanchit_huggingface_co/.cache/huggingface/datasets/downloads/extracted/7f8541f130925e9b2af7d37256f2f61f9d6ff21bf4a94f7c1a3803ec648d7d79/xs_chunks_0000/YOU0000000315_S0000660.wav',

'array': array([ 0.00046338, 0.00034808, -0.00086153, ..., 0.00099299,

0.00083484, 0.00080221], dtype=float32),

'sampling_rate': 8000}

}

We can see that the sampling rate has been downsampled to 8kHz. The array values are also different, as we’ve now only got approximately one amplitude value for every two that we had before. Let’s set the dataset sampling rate back to 16kHz, the sampling rate expected by most speech recognition models:

gigaspeech = gigaspeech.cast_column("audio", Audio(sampling_rate=16000))

print(gigaspeech["train"][0])

Print Output:

{'text': "AS THEY'RE LEAVING CAN KASH PULL ZAHRA ASIDE REALLY QUICKLY " ,

'audio': {'path': '/home/sanchit_huggingface_co/.cache/huggingface/datasets/downloads/extracted/7f8541f130925e9b2af7d37256f2f61f9d6ff21bf4a94f7c1a3803ec648d7d79/xs_chunks_0000/YOU0000000315_S0000660.wav',

'array': array([0.0005188 , 0.00085449, 0.00012207, ..., 0.00125122, 0.00076294,

0.00036621], dtype=float32),

'sampling_rate': 16000}

}

Easy! cast_column provides a simple mechanism for resampling audio datasets as and when required.
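To build intuition for why halving the sampling rate leaves roughly one amplitude value for every two, here is a deliberately naive resampler written in plain Python. It is only a sketch: it uses linear interpolation with no anti-aliasing filter, the function name resample_linear is ours, and it is not the resampling routine that 🤗 Datasets applies internally.

```python
def resample_linear(samples, src_rate, dst_rate):
    """Naively resample a list of amplitudes by linear interpolation.

    Illustration only: a proper resampler would low-pass filter the
    signal first to avoid aliasing when downsampling.
    """
    if not samples:
        return []
    n_out = int(round(len(samples) * dst_rate / src_rate))
    step = src_rate / dst_rate  # distance between output points, in input samples
    last = len(samples) - 1
    out = []
    for i in range(n_out):
        pos = i * step
        left = min(int(pos), last)
        right = min(left + 1, last)
        frac = pos - left
        out.append(samples[left] * (1.0 - frac) + samples[right] * frac)
    return out


# A toy "recording" of 16 amplitude values at 16 kHz.
toy_audio = [float(i) for i in range(16)]
downsampled = resample_linear(toy_audio, 16_000, 8_000)

print(len(toy_audio), "->", len(downsampled))
```

Going from 16kHz to 8kHz halves the number of values, which mirrors the change we observed in the array after cast_column.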

2. Pre-Processing Function

One of the most challenging aspects of working with audio datasets is preparing the data in the right format for our model. Using 🤗 Datasets’ map method, we can write a function to pre-process a single sample of the dataset, and then apply it to every sample without any code changes.

First, let’s load a processor object from 🤗 Transformers. This processor pre-processes the audio to input features and tokenises the target text to labels. The AutoProcessor class is used to load a processor from a given model checkpoint. In the example, we load the processor from OpenAI’s Whisper medium.en checkpoint, but you can change this to any model identifier on the Hugging Face Hub:

from transformers import AutoProcessor

processor = AutoProcessor.from_pretrained("openai/whisper-medium.en")

Great! Now we can write a function that takes a single training sample and passes it through the processor to prepare it for our model. We’ll also compute the input length of each audio sample, information that we’ll need for the next data preparation step:

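def prepare_dataset(batch):
    audio = batch["audio"]
    # convert the audio to input features and tokenise the text to labels
    batch = processor(audio["array"], sampling_rate=audio["sampling_rate"], text=batch["text"])
    # audio duration in seconds, needed for the filtering step
    batch["input_length"] = len(audio["array"]) / audio["sampling_rate"]
    return batch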

We can apply the data preparation function to all of our training examples using 🤗 Datasets’ map method. Here, we also remove the text and audio columns, since we have pre-processed the audio to input features and tokenised the text to labels:

gigaspeech = gigaspeech.map(prepare_dataset, remove_columns=gigaspeech["train"].column_names)

3. Filtering Function

Prior to training, we might have a heuristic for filtering our training data. For instance, we might want to filter any audio samples longer than 30s to prevent truncating the audio samples or risking out-of-memory errors. We can do this in much the same way that we prepared the data for our model in the previous step.

We start by writing a function that indicates which samples to keep and which to discard. This function, is_audio_length_in_range, returns a boolean: samples that are shorter than 30s return True, and those that are longer False.

MAX_DURATION_IN_SECONDS = 30.0

def is_audio_length_in_range(input_length):
    return input_length < MAX_DURATION_IN_SECONDS

We can apply this filtering function to all of our training examples using 🤗 Datasets’ filter method, keeping all samples that are shorter than 30s (True) and discarding those that are longer (False):

gigaspeech["train"] = gigaspeech["train"].filter(is_audio_length_in_range, input_columns=["input_length"])
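To see the predicate in action without loading any audio, the sketch below applies it to a few hand-written records. The toy_samples list and its ids are made up for illustration, and the list comprehension is only a plain-Python stand-in for what the filter call decides per sample.

```python
MAX_DURATION_IN_SECONDS = 30.0


def is_audio_length_in_range(input_length):
    """Keep only samples strictly shorter than the maximum duration."""
    return input_length < MAX_DURATION_IN_SECONDS


# Hypothetical records: only the input_length field matters here.
toy_samples = [
    {"id": "a", "input_length": 3.18},
    {"id": "b", "input_length": 30.0},   # exactly at the limit
    {"id": "c", "input_length": 45.5},
]

kept = [s for s in toy_samples if is_audio_length_in_range(s["input_length"])]

print([s["id"] for s in kept])
```

Note that the comparison is strict, so a sample of exactly 30s is discarded along with the longer ones.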

And with that, we have the GigaSpeech dataset fully prepared for our model! In total, this process required 13 lines of Python code, right from loading the dataset to the final filtering step.

Keeping the notebook as general as possible, we only performed the fundamental data preparation steps. However, there is no restriction to the functions you can apply to your audio dataset. You can extend the function prepare_dataset to perform much more involved operations, such as data augmentation, voice activity detection or noise reduction. With 🤗 Datasets, if you can write it in a Python function, you can apply it to your dataset!

Streaming Mode: The Silver Bullet

One of the biggest challenges faced with audio datasets is their sheer size. The xs configuration of GigaSpeech contained just 10 hours of training data, but amassed over 13GB of storage space for download and preparation. So what happens when we want to train on a larger split? The full xl configuration contains 10,000 hours of training data, requiring over 1TB of storage space. For most speech researchers, this well exceeds the specifications of a typical hard drive disk.
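A back-of-the-envelope calculation shows why the numbers grow so quickly. The sketch below estimates the size of uncompressed audio alone, assuming 16kHz, 16-bit, mono PCM; the bit depth and channel count are our assumptions rather than documented properties of GigaSpeech, and the 13GB quoted for xs is larger because it covers download and preparation together.

```python
def raw_pcm_size_gb(hours, sampling_rate=16_000, bytes_per_sample=2, channels=1):
    """Estimate the size of uncompressed PCM audio in gigabytes (10^9 bytes).

    The defaults (16 kHz, 16-bit, mono) are illustrative assumptions.
    """
    num_bytes = hours * 3600 * sampling_rate * bytes_per_sample * channels
    return num_bytes / 1e9


for name, hours in [("xs", 10), ("xl", 10_000)]:
    print(f"{name}: about {raw_pcm_size_gb(hours):,.1f} GB of raw audio")
```

Under these assumptions the raw audio scales linearly with hours, so a thousand-fold increase in training data brings a thousand-fold increase in storage.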

Do we need to fork out and buy additional storage? Or is there a way we can train on these datasets with no disk space constraints?

🤗 Datasets allows us to do just this. It is made possible through the use of streaming mode, depicted graphically in Figure 1. Streaming allows us to load the data progressively as we iterate over the dataset. Rather than downloading the whole dataset at once, we load the dataset sample by sample. We iterate over the dataset, loading and preparing samples on the fly when they are needed. This way, we only ever load the samples that we’re using, and not the ones that we’re not! Once we’re done with a sample, we continue iterating over the dataset and load the next one.

This is analogous to downloading a TV show versus streaming it. When we download a TV show, we download the entire video offline and save it to our disk. We have to wait for the entire video to download before we can watch it, and we require as much disk space as the size of the video file. Compare this to streaming a TV show. Here, we don’t download any part of the video to disk, but rather iterate over the remote video file and load each part in real-time as required. We don’t have to wait for the full video to buffer before we can start watching; we can start as soon as the first portion of the video is ready! This is the same streaming principle that we apply to loading datasets.

Streaming mode has three primary advantages over downloading the entire dataset at once:

- Disk space: samples are loaded to memory one-by-one as we iterate over the dataset. Since the data is not downloaded locally, there are no disk space requirements, so you can use datasets of arbitrary size.

- Download and processing time: audio datasets are large and need a significant amount of time to download and process. With streaming, loading and processing is done on the fly, meaning you can start using the dataset as soon as the first sample is ready.

- Easy experimentation: you can experiment on a handful of samples to check that your script works without having to download the entire dataset.

There is one caveat to streaming mode. When downloading a dataset, both the raw data and processed data are saved locally to disk. If we want to re-use this dataset, we can directly load the processed data from disk, skipping the download and processing steps. Consequently, we only have to perform the downloading and processing operations once, after which we can re-use the prepared data. With streaming mode, the data is not downloaded to disk. Thus, neither the downloaded nor pre-processed data are cached. If we want to re-use the dataset, the streaming steps must be repeated, with the audio files loaded and processed on the fly again. For this reason, it is advised to download datasets that you are likely to use multiple times.

How can you enable streaming mode? Easy! Just set streaming=True when you load your dataset. The rest will be taken care of for you:

gigaspeech = load_dataset("speechcolab/gigaspeech", "xs", streaming=True)

All the steps covered so far in this tutorial can be applied to the streaming dataset without any code changes. The only change is that you can no longer access individual samples using Python indexing (i.e. gigaspeech["train"][sample_idx]). Instead, you have to iterate over the dataset, using a for loop for example.
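The iterate-don’t-index pattern can be illustrated with an ordinary Python generator. In the sketch below, stream_samples is a hypothetical stand-in for a streamed split: it is not how 🤗 Datasets implements streaming, but it shows the same behaviour, namely that samples are only produced when the loop asks for them.

```python
from itertools import islice

loaded = []  # records which samples were actually "loaded"


def stream_samples(num_samples):
    """Hypothetical stand-in for a streamed split: yields samples lazily."""
    for idx in range(num_samples):
        loaded.append(idx)  # pretend this is the download + decode step
        yield {"id": idx, "text": f"sample {idx}"}


# Pretend the dataset has a million samples; nothing is loaded yet.
stream = stream_samples(1_000_000)

# Take only the first three samples by iterating, not by indexing.
first_three = list(islice(stream, 3))

print(len(first_three), "samples consumed,", len(loaded), "samples loaded")
```

Like a streamed dataset, the generator cannot be indexed with square brackets; we reach a sample only by iterating up to it, which is what keeps memory and disk usage flat.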

Streaming mode can take your research to the next level: not only are the biggest datasets accessible to you, but you can easily evaluate systems over multiple datasets in one go without worrying about your disk space. Compared to evaluating on a single dataset, multi-dataset evaluation gives a better metric for the generalisation abilities of a speech recognition system (cf. End-to-end Speech Benchmark (ESB)).

The accompanying Google Colab

provides an example for evaluating the Whisper model on eight English speech recognition datasets in a single script using

streaming mode.

A Tour of Audio Datasets on The Hub

This Section serves as a reference guide for the most popular speech recognition, speech translation and audio classification datasets on the Hugging Face Hub. We can apply everything that we’ve covered for the GigaSpeech dataset to any of the datasets on the Hub. All we have to do is switch the dataset identifier in the load_dataset function. It’s that easy!

English Speech Recognition

Speech recognition, or speech-to-text, is the task of mapping from spoken speech to written text, where both the speech and text are in the same language. We provide a summary of the most popular English speech recognition datasets on the Hub:

| Dataset | Domain | Speaking Style | Train Hours | Casing | Punctuation | License | Recommended Use |
|---|---|---|---|---|---|---|---|
| LibriSpeech | Audiobook | Narrated | 960 | ❌ | ❌ | CC-BY-4.0 | Academic benchmarks |
| Common Voice 11 | Wikipedia | Narrated | 2300 | ✅ | ✅ | CC0-1.0 | Non-native speakers |
| VoxPopuli | European Parliament | Oratory | 540 | ❌ | ✅ | CC0 | Non-native speakers |
| TED-LIUM | TED talks | Oratory | 450 | ❌ | ❌ | CC-BY-NC-ND 3.0 | Technical topics |
| GigaSpeech | Audiobook, podcast, YouTube | Narrated, spontaneous | 10000 | ❌ | ✅ | apache-2.0 | Robustness over multiple domains |
| SPGISpeech | Financial meetings | Oratory, spontaneous | 5000 | ✅ | ✅ | User Agreement | Fully formatted transcriptions |
| Earnings-22 | Financial meetings | Oratory, spontaneous | 119 | ✅ | ✅ | CC-BY-SA-4.0 | Diversity of accents |
| AMI | Meetings | Spontaneous | 100 | ✅ | ✅ | CC-BY-4.0 | Noisy speech conditions |

Refer to the Google Colab for a guide on evaluating a system on all eight English speech recognition datasets in one script.

The following dataset descriptions are largely taken from the ESB Benchmark paper.

LibriSpeech ASR

LibriSpeech is a standard large-scale dataset for evaluating ASR systems. It consists of approximately 1,000 hours of narrated audiobooks collected from the LibriVox project. LibriSpeech has been instrumental in enabling researchers to leverage a large body of pre-existing transcribed speech data. As such, it has become one of the most popular datasets for benchmarking academic speech systems.

librispeech = load_dataset("librispeech_asr", "all")

Common Voice

Common Voice is a series of crowd-sourced open-licensed speech datasets where speakers record text from Wikipedia in various languages. Since anyone can contribute recordings, there is significant variation in both audio quality and speakers. The audio conditions are challenging, with recording artefacts, accented speech, hesitations, and the presence of foreign words. The transcriptions are both cased and punctuated. The English subset of version 11.0 contains approximately 2,300 hours of validated data. Use of the dataset requires you to agree to the Common Voice terms of use, which can be found on the Hugging Face Hub: mozilla-foundation/common_voice_11_0. Once you have agreed to the terms of use, you will be granted access to the dataset. You will then need to provide an authentication token from the Hub when you load the dataset.

common_voice = load_dataset("mozilla-foundation/common_voice_11_0", "en", use_auth_token=True)

VoxPopuli

VoxPopuli is a large-scale multilingual speech corpus consisting of data sourced from 2009-2020 European Parliament event recordings. Consequently, it occupies the unique domain of oratory, political speech, largely sourced from non-native speakers. The English subset contains approximately 550 hours of labelled speech.

voxpopuli = load_dataset("facebook/voxpopuli", "en")

TED-LIUM

TED-LIUM is a dataset based on English-language TED Talk conference videos. The speaking style is oratory educational talks. The transcribed talks cover a range of different cultural, political, and academic topics, resulting in a technical vocabulary. The Release 3 (latest) edition of the dataset contains approximately 450 hours of training data. The validation and test data are from the legacy set, consistent with earlier releases.

tedlium = load_dataset("LIUM/tedlium", "release3")

GigaSpeech

GigaSpeech is a multi-domain English speech recognition corpus curated from audiobooks, podcasts and YouTube. It covers both narrated and spontaneous speech over a variety of topics, such as arts, science and sports. It contains training splits varying from 10 hours to 10,000 hours and standardised validation and test splits.

gigaspeech = load_dataset("speechcolab/gigaspeech", "xs", use_auth_token=True)

SPGISpeech

SPGISpeech is an English speech recognition corpus composed of company earnings calls that have been manually transcribed by S&P Global, Inc. The transcriptions are fully-formatted according to a professional style guide for oratory and spontaneous speech. It contains training splits ranging from 200 hours to 5,000 hours, with canonical validation and test splits.

spgispeech = load_dataset("kensho/spgispeech", "s", use_auth_token=True)

Earnings-22

Earnings-22 is a 119-hour corpus of English-language earnings calls collected from global companies. The dataset was developed with the goal of aggregating a broad range of speakers and accents covering a range of real-world financial topics. There is large diversity in the speakers and accents, with speakers taken from seven different language regions. Earnings-22 was published primarily as a test-only dataset. The Hub contains a version of the dataset that has been partitioned into train-validation-test splits.

earnings22 = load_dataset("revdotcom/earnings22")

AMI

AMI comprises 100 hours of meeting recordings captured using different recording streams. The corpus contains manually annotated orthographic transcriptions of the meetings aligned at the word level. Individual samples of the AMI dataset contain very large audio files (between 10 and 60 minutes), which are segmented to lengths feasible for training most speech recognition systems. AMI contains two splits: IHM and SDM. IHM (individual headset microphone) contains easier near-field speech, and SDM (single distant microphone) harder far-field speech.

ami = load_dataset("edinburghcstr/ami", "ihm")

Multilingual Speech Recognition

Multilingual speech recognition refers to speech recognition (speech-to-text) for all languages except English.

Multilingual LibriSpeech

Multilingual LibriSpeech is the multilingual equivalent of the LibriSpeech ASR corpus.

It comprises a large corpus of read audiobooks taken from the LibriVox project, making it a suitable dataset for academic research. It contains data split into eight high-resource languages: English, German, Dutch, Spanish, French, Italian, Portuguese and Polish.

Common Voice

Common Voice is a series of crowd-sourced open-licensed speech datasets where speakers record text from Wikipedia in various languages. Since anyone can contribute recordings, there is significant variation in both audio quality and speakers. The audio conditions are challenging, with recording artefacts, accented speech, hesitations, and the presence of foreign words. The transcriptions are both cased and punctuated. As of version 11, there are over 100 languages available, both low and high-resource.

VoxPopuli

VoxPopuli is a large-scale multilingual speech corpus consisting of data sourced from 2009-2020 European Parliament event recordings. Consequently, it occupies the unique domain of oratory, political speech, largely sourced from non-native speakers. It contains labelled audio-transcription data for 15 European languages.

FLEURS

FLEURS (Few-shot Learning Evaluation of Universal Representations of Speech) is a dataset for evaluating speech recognition systems in 102 languages, including many that are classified as ‘low-resource’. The data is derived from the FLoRes-101 dataset, a machine translation corpus with 3001 sentence translations from English to 101 other languages. Native speakers are recorded narrating the sentence transcriptions in their native language. The recorded audio data is paired with the sentence transcriptions to yield multilingual speech recognition over all 101 languages. The training sets contain approximately 10 hours of supervised audio-transcription data per language.

Speech Translation

Speech translation is the task of mapping from spoken speech to written text, where the speech and text are in different languages (e.g. English speech to French text).

CoVoST 2

CoVoST 2 is a large-scale multilingual speech translation corpus covering translations from 21 languages into English

and from English into 15 languages. The dataset is created using Mozilla’s open-source Common Voice database of

crowd-sourced voice recordings. There are 2,900 hours of speech represented in the corpus.

FLEURS

FLEURS (Few-shot Learning Evaluation of Universal Representations of Speech) is a dataset for evaluating speech recognition systems in 102 languages, including many that are classified as ‘low-resource’. The data is derived from the FLoRes-101 dataset, a machine translation corpus with 3001 sentence translations from English to 101 other languages. Native speakers are recorded narrating the sentence transcriptions in their native languages. An n-way parallel corpus of speech translation data is constructed by pairing the recorded audio data with the sentence transcriptions for each of the 101 languages. The training sets contain approximately 10 hours of supervised audio-transcription data per source-target language combination.

Audio Classification

Audio classification is the task of mapping a raw audio input to a class label output. Practical applications of audio classification include keyword spotting, speaker intent and language identification.

SpeechCommands

SpeechCommands is a dataset comprised of one-second audio files, each containing either a single spoken word in English or background noise. The words are taken from a small set of commands and are spoken by a number of different speakers. The dataset is designed to help train and evaluate small on-device keyword spotting systems.

Multilingual Spoken Words

Multilingual Spoken Words is a large-scale corpus of one-second audio samples, each containing a single spoken word. The dataset consists of 50 languages and more than 340,000 keywords, totalling 23.4 million one-second spoken examples or over 6,000 hours of audio. The audio-transcription data is sourced from the Mozilla Common Voice project. Time stamps are generated for every utterance on the word-level and used to extract individual spoken words and their corresponding transcriptions, thus forming a new corpus of single spoken words. The dataset’s intended use is academic research and commercial applications in multilingual keyword spotting and spoken term search.

FLEURS

FLEURS (Few-shot Learning Evaluation of Universal Representations of Speech) is a dataset for evaluating speech recognition systems in 102 languages, including many that are classified as ‘low-resource’. The data is derived from the FLoRes-101 dataset, a machine translation corpus with 3001 sentence translations from English to 101 other languages. Native speakers are recorded narrating the sentence transcriptions in their native languages. The recorded audio data is paired with a label for the language in which it is spoken. The dataset can be used as an audio classification dataset for language identification: systems are trained to predict the language of each utterance in the corpus.

Closing Remarks

In this blog post, we explored the Hugging Face Hub and experienced the Dataset Preview, an effective means of listening to audio datasets before downloading them. We loaded an audio dataset with one line of Python code and performed a series of generic pre-processing steps to prepare it for a machine learning model. In total, this required just 13 lines of code, relying on simple Python functions to perform the necessary operations. We introduced streaming mode, a method for loading and preparing samples of audio data on the fly. We concluded by summarising the most popular speech recognition, speech translation and audio classification datasets on the Hub.

Having read this blog, we hope you agree that 🤗 Datasets is the number one place for downloading and preparing audio datasets. 🤗 Datasets is made possible through the work of the community. If you would like to contribute a dataset, refer to the Guide for Adding a New Dataset.

Thank you to the following individuals who helped contribute to the blog post: Vaibhav Srivastav, Polina Kazakova, Patrick von Platen, Omar Sanseviero and Quentin Lhoest.