We’re excited to introduce a brand new tool we created: ⚔️ AI vs. AI ⚔️, a deep reinforcement learning multi-agents competition system.

This tool, hosted on Spaces, allows us to create multi-agent competitions. It consists of three elements:

- A Space with a matchmaking algorithm that runs the model fights using a background task.

- A Dataset containing the outcomes.

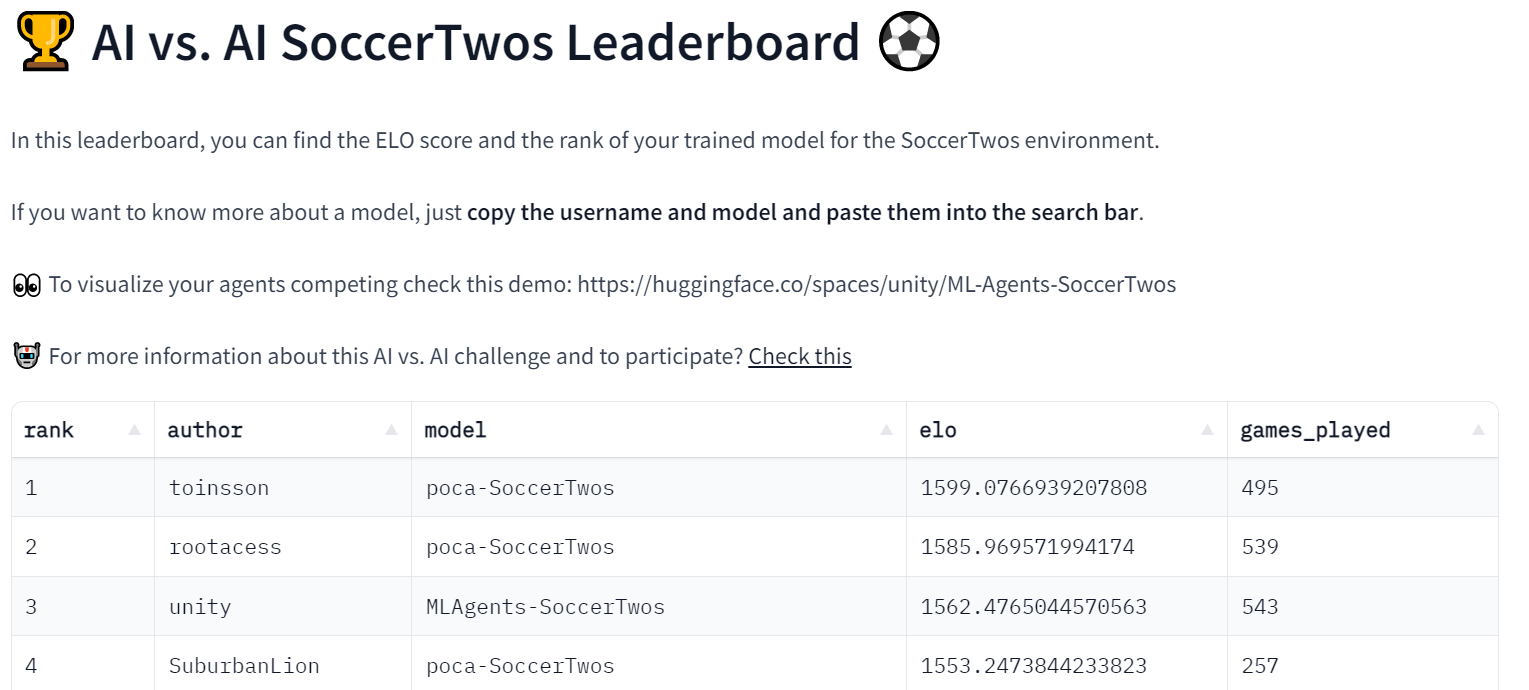

- A Leaderboard that gets the match history results and displays the models’ ELO.

Then, when a user pushes a trained model to the Hub, it gets evaluated and ranked against others. Thanks to that, we can evaluate your agents against others’ agents in a multi-agent setting.

In addition to being a useful tool for hosting multi-agent competitions, we think this tool can also be a robust evaluation technique in multi-agent settings. By playing against many policies, your agents are evaluated against a wide range of behaviors. This should give you a good idea of the quality of your policy.

Let’s see how it works with the first competition we hosted: the SoccerTwos Challenge.

How does AI vs. AI work?

AI vs. AI is an open-source tool developed at Hugging Face to rank the strength of reinforcement learning models in a multi-agent setting.

The idea is to get a relative measure of skill rather than an objective one. The models play against each other repeatedly, and the match results are used to assess each model’s performance compared to all the other models. This gives a view of the quality of their policy without requiring classic metrics.

The more agents are submitted for a given task or environment, the more representative the rating becomes.

To generate a rating based on match results in a competitive environment, we decided to base the rankings on the ELO rating system.

The core concept is that after a match ends, the ratings of both players are updated based on the result and the ratings they had before the game. When a player with a high rating beats one with a low rating, they won’t gain many points. Likewise, the loser won’t lose many points in this case.

Conversely, if a low-rated player wins in an upset against a high-rated player, it will have a more significant effect on both of their ratings.

In our context, we kept the system as simple as possible by not adding any alteration to the quantities gained or lost based on the starting ratings of the player. As such, gain and loss will always be exact opposites (+10 / -10, for instance), and the average ELO rating will stay constant at the starting rating. The choice of a 1200 ELO starting rating is entirely arbitrary.
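To make the update rule concrete, here is a minimal sketch of a symmetric ELO update. It uses the standard ELO expected-score formula; the K-factor of 20 and the scale of 400 are conventional values assumed for illustration, not values confirmed for this tool, and the function names are hypothetical.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that player A beats player B under the standard ELO model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_elo(rating_a: float, rating_b: float, score_a: float, k: float = 20.0):
    """Return the new (rating_a, rating_b) after one match.

    score_a is 1.0 if A won, 0.0 if A lost, and 0.5 for a draw.
    k is an assumed K-factor; the same value is applied to both players,
    so whatever A gains, B loses, and the average rating stays constant.
    """
    delta = k * (score_a - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta


# Two fresh models at the starting rating: the winner gains what the loser loses.
winner, loser = update_elo(1200.0, 1200.0, score_a=1.0)
print(winner, loser)  # 1210.0 1190.0
```

With equal ratings the expected score is 0.5, so a win moves each rating by half of `k`. When the favorite wins, the change is smaller; when the underdog wins, it is larger.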

If you want to learn more about ELO and see some calculation examples, we wrote an explanation in our Deep Reinforcement Learning Course.

Using this rating, it is possible to automatically generate matches between models with comparable strengths. There are several ways to build a matchmaking system, but here we decided to keep it fairly simple while guaranteeing a minimum amount of diversity in the matchups and keeping most matches between models with fairly close ratings.

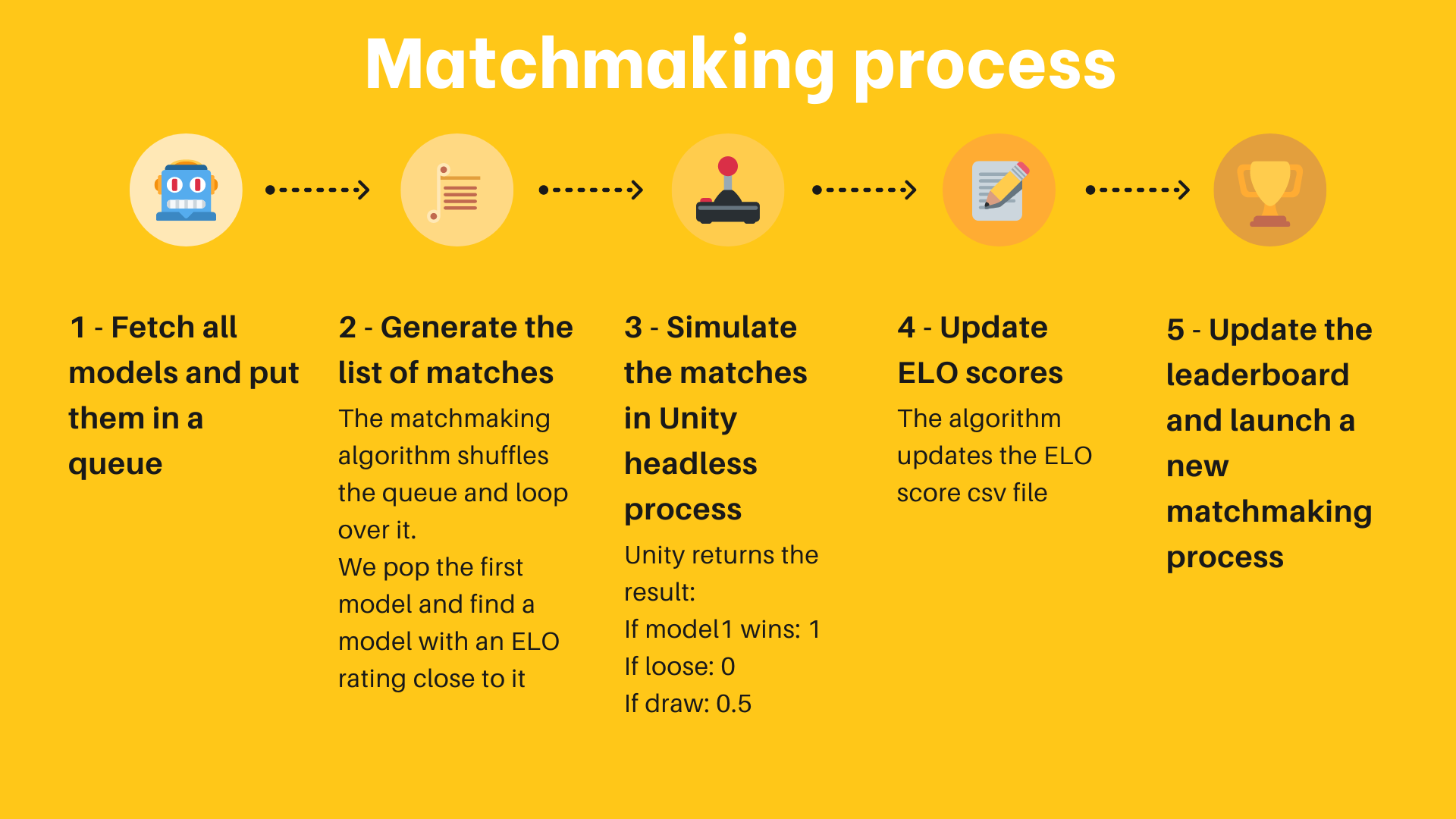

Here’s how the algorithm works:

- Gather all the available models on the Hub. New models get a starting rating of 1200, while others keep the rating they have gained or lost through their previous matches.

- Create a queue from all these models.

- Pop the first element (model) from the queue, and then pop another random model from this queue, chosen among the n models with the closest ratings to the first model.

- Simulate this match by loading both models in the environment (a Unity executable, for instance) and gathering the results. For this implementation, we sent the results to a Hugging Face Dataset on the Hub.

- Compute the new rating of both models based on the received result and the ELO formula.

- Continue popping models two by two and simulating the matches until only one or zero models are left in the queue.

- Save the resulting ratings and go back to the first step.
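The steps above can be sketched as a single matchmaking round. This is an illustrative sketch, not the tool’s actual code: `run_matchmaking_round`, `play_match`, `n_closest`, and the K-factor are hypothetical names and values, and `play_match` stands in for loading both models in the environment and returning the result.

```python
import random
from typing import Callable, Dict, List, Optional, Tuple

START_RATING = 1200.0  # starting rating given to new models


def run_matchmaking_round(
    models: List[str],
    ratings: Dict[str, float],
    play_match: Callable[[str, str], float],
    n_closest: int = 10,
    k: float = 20.0,
    rng: Optional[random.Random] = None,
) -> List[Tuple[str, str, float]]:
    """Pair models with close ratings, play each match, update ratings in place.

    play_match(a, b) must return 1.0 if a wins, 0.0 if b wins, 0.5 for a draw.
    Returns the list of (model_a, model_b, score_a) matches that were played.
    """
    rng = rng or random.Random()
    for model in models:
        ratings.setdefault(model, START_RATING)  # new models start at 1200

    queue = list(models)
    history = []
    while len(queue) > 1:
        first = queue.pop(0)
        # Keep the n models whose ratings are closest to the first model.
        candidates = sorted(queue, key=lambda m: abs(ratings[m] - ratings[first]))
        opponent = rng.choice(candidates[:n_closest])
        queue.remove(opponent)

        score = play_match(first, opponent)
        expected = 1.0 / (1.0 + 10 ** ((ratings[opponent] - ratings[first]) / 400.0))
        delta = k * (score - expected)
        ratings[first] += delta      # symmetric update: one model's gain
        ratings[opponent] -= delta   # is exactly the other model's loss
        history.append((first, opponent, score))
    return history
```

Picking the opponent at random among the `n_closest` candidates, rather than always taking the single closest one, is what keeps some diversity in the matchups while still favoring close ratings.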

To run this matchmaking process continuously, we use free Hugging Face Spaces hardware with a Scheduler that keeps the matchmaking process running as a background task.
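The post does not say which scheduler is used, so the following is only a sketch of the idea using Python’s standard `threading` module as a stand-in: a daemon thread calls a task repeatedly at a fixed interval until it is asked to stop. The function name and parameters are hypothetical.

```python
import threading
from typing import Callable


def start_background_task(
    task: Callable[[], None],
    interval_seconds: float,
    stop_event: threading.Event,
) -> threading.Thread:
    """Run task() repeatedly in a daemon thread until stop_event is set."""

    def loop() -> None:
        while not stop_event.is_set():
            task()
            # Sleep for the interval, but wake up immediately if asked to stop.
            stop_event.wait(interval_seconds)

    thread = threading.Thread(target=loop, daemon=True)
    thread.start()
    return thread
```

In this sketch, `task` would be one full matchmaking round, and the main thread of the app stays free to serve the leaderboard while rounds run in the background.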

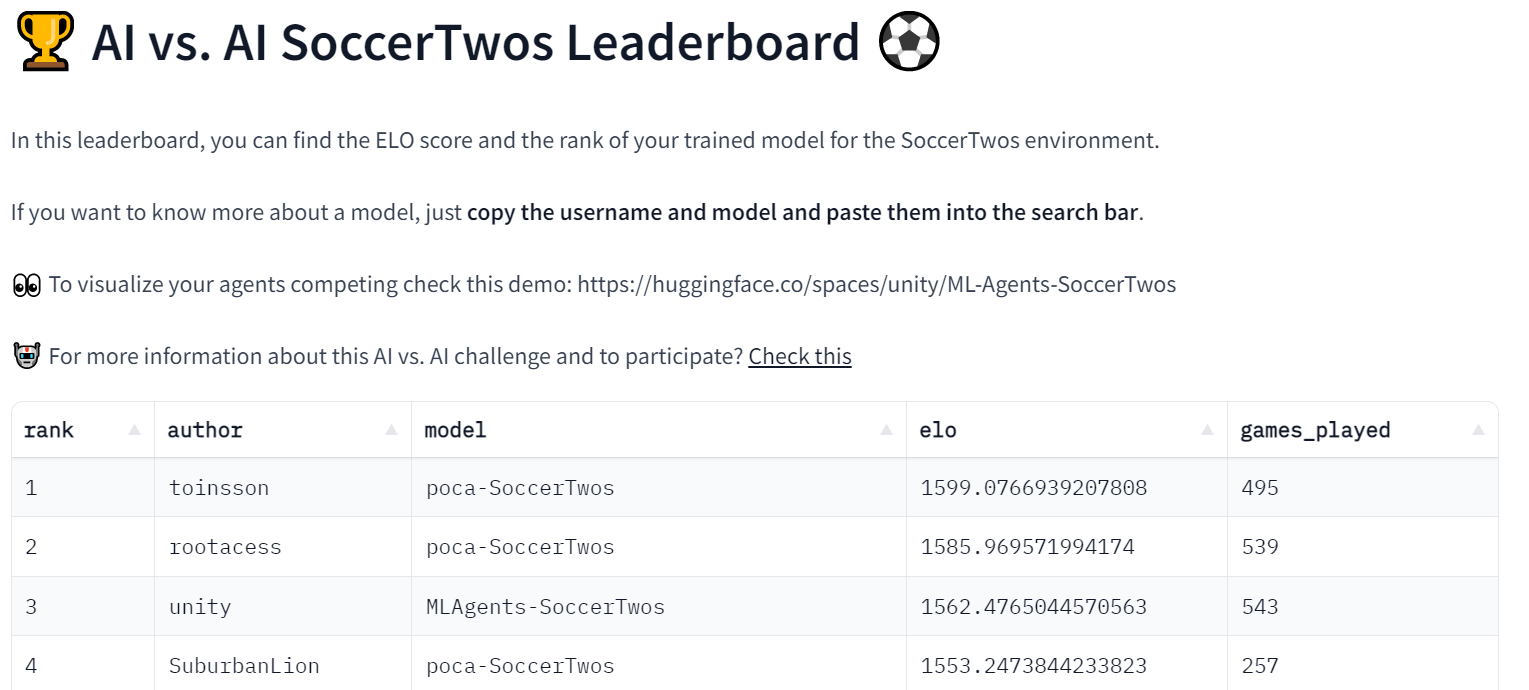

The Space is also used to fetch the ELO ratings of every model that has already played and, from them, display a leaderboard where everyone can check the progress of the models.

The process uses several Hugging Face Datasets to provide data persistence (here, match history and model ratings).

Since the process also saves the match history, it is possible to see precisely the results of any given model. This can, for instance, help you check why your model struggles against another one, most notably by using another demo Space to visualize matches.
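As an illustration of what a saved match history makes possible, the sketch below computes a model’s head-to-head record against each opponent. The record layout (`model1`, `model2`, `result`) is a hypothetical schema chosen for this example, not the actual dataset format.

```python
from collections import defaultdict
from typing import Dict, List


def head_to_head(history: List[dict], model: str) -> Dict[str, Dict[str, int]]:
    """Count wins, losses, and draws of `model` against each opponent.

    Each match is assumed to look like
    {"model1": str, "model2": str, "result": float}, where result is
    1.0 if model1 won, 0.0 if model2 won, and 0.5 for a draw.
    """
    record = defaultdict(lambda: {"wins": 0, "losses": 0, "draws": 0})
    for match in history:
        if model == match["model1"]:
            opponent, score = match["model2"], match["result"]
        elif model == match["model2"]:
            opponent, score = match["model1"], 1.0 - match["result"]
        else:
            continue  # this match does not involve the model
        if score == 1.0:
            record[opponent]["wins"] += 1
        elif score == 0.0:
            record[opponent]["losses"] += 1
        else:
            record[opponent]["draws"] += 1
    return dict(record)
```

An opponent with many losses and few wins in this record is a natural candidate to replay in a visualization and inspect.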

For now, this experiment is running with the ML-Agents environment SoccerTwos for the Hugging Face Deep RL Course. However, the process and implementation are, in general, very much environment agnostic and could be used to evaluate, for free, a wide range of adversarial multi-agent settings.

Of course, it is important to repeat that this evaluation is a relative rating between the strengths of the submitted agents, and the ratings by themselves have no objective meaning, contrary to other metrics. A rating only represents how well or poorly a model performs compared to the other models in the pool. Still, given a large and varied enough pool of models (and enough matches played), this evaluation becomes a very solid way to represent the general performance of a model.

Our first AI vs. AI challenge experimentation: SoccerTwos Challenge ⚽

This challenge is Unit 7 of our free Deep Reinforcement Learning Course. It started on February 1st and will end on April 30th.

If you’re interested, you don’t need to take the course to be able to participate in the competition. You can start here 👉 https://huggingface.co/deep-rl-course/unit7/introduction

In this Unit, readers learned the basics of multi-agent reinforcement learning (MARL) by training a 2vs2 soccer team. ⚽

The environment used was made by the Unity ML-Agents team. The goal is simple: your team needs to score a goal. To do that, your agents need to beat the opponent’s team and collaborate with their teammate.

In addition to the leaderboard, we created a Space demo where people can choose two teams and visualize them playing 👉 https://huggingface.co/spaces/unity/SoccerTwos

This experimentation is going well since we already have 48 models on the leaderboard.

We also created a Discord channel called ai-vs-ai-competition so that people can exchange with others and share advice.

Conclusion and what’s next?

Since the tool we developed is environment agnostic, we want to host more challenges in the future with PettingZoo and other multi-agent environments. If you have some environments or challenges you want to do, don’t hesitate to reach out to us.

In the future, we will host multiple multi-agent competitions with this tool and environments we created, such as SnowballFight.

In addition to being a useful tool for hosting multi-agent competitions, we think that this tool can also be a robust evaluation technique in multi-agent settings: by playing against many policies, your agents are evaluated against a wide range of behaviors, and you’ll get a good idea of the quality of your policy.

The best way to keep in touch is to join our Discord server to exchange with us and with the community.

Citation

Citation: If you found this useful for your academic work, please consider citing our work, in text:

Cochet, Simonini, "Introducing AI vs. AI a deep reinforcement learning multi-agents competition system", Hugging Face Blog, 2023.

BibTeX citation:

@article{cochet-simonini2023,
  author = {Cochet, Carl and Simonini, Thomas},
  title = {Introducing AI vs. AI a deep reinforcement learning multi-agents competition system},
  journal = {Hugging Face Blog},
  year = {2023},
  note = {https://huggingface.co/blog/aivsai},
}