If you're interested in building ML solutions faster, visit the Expert Acceleration Program landing page and contact us here!

Business Context

As IT continues to evolve and reshape our world, creating a more diverse and inclusive environment within the industry is imperative. Witty Works was built in 2018 to address this challenge. Starting as a consulting company advising organizations on becoming more diverse, Witty Works first helped them write job ads using inclusive language. To scale this effort, in 2019, they built an online app to assist users in writing inclusive job ads in English, French and German. They enlarged the scope rapidly with a writing assistant working as a browser extension that automatically fixes and explains potential bias in emails, LinkedIn posts, job ads, etc. The aim was to offer a solution for internal and external communication that fosters a cultural change by providing micro-learning bites that explain the underlying bias of highlighted words and phrases.

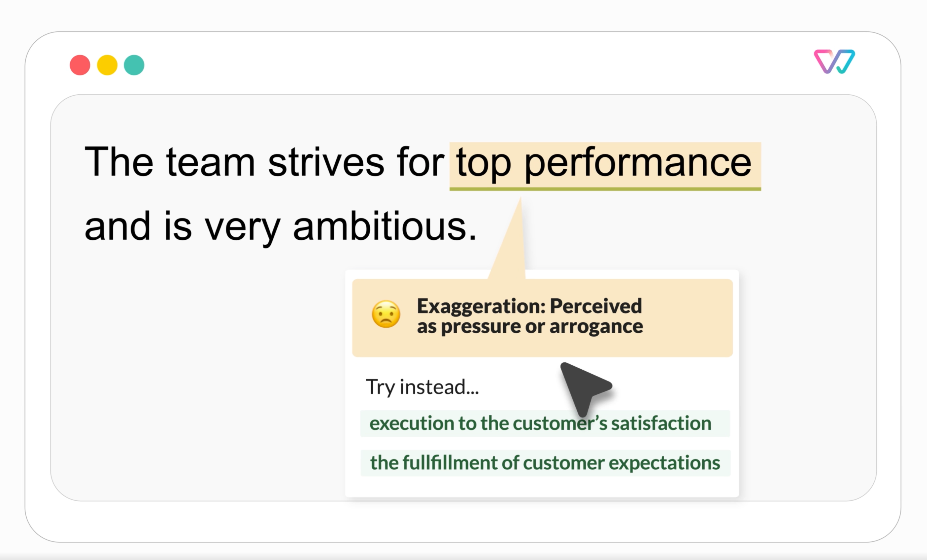

Example of suggestions by the writing assistant

First experiments

Witty Works first chose a basic machine learning approach to build their assistant from scratch. Using transfer learning with pre-trained spaCy models, the assistant was able to:

- Analyze text and transform words into lemmas,

- Perform a linguistic evaluation,

- Extract linguistic features from the text (plural and singular forms, gender), part-of-speech tags (pronouns, verbs, nouns, adjectives, etc.), word dependency labels, named entities, etc.

By detecting and filtering words according to a specific knowledge base using linguistic features, the assistant could highlight non-inclusive words and suggest alternatives in real time.
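The filtering step can be sketched roughly as follows. This is a minimal illustration, not Witty Works' implementation: the tiny `LEMMAS` table is a stand-in for the lemmatizer of a pre-trained spaCy pipeline, and the `KNOWLEDGE_BASE` entries and the `flag_non_inclusive` helper are hypothetical names chosen for this example.

```python
import re

# Hypothetical stand-in for a spaCy lemmatizer: inflected form -> lemma.
LEMMAS = {"chairmen": "chairman", "salesmen": "salesman", "guys": "guy"}

# Hypothetical knowledge base: non-inclusive lemma -> suggested alternative.
KNOWLEDGE_BASE = {
    "chairman": "chairperson",
    "salesman": "salesperson",
    "guy": "person",
}


def flag_non_inclusive(text):
    """Return (word, lemma, suggestion) for every word whose lemma is in the knowledge base."""
    findings = []
    for match in re.finditer(r"[A-Za-z]+", text):
        word = match.group()
        # Fall back to the lowercased word when no lemma is known.
        lemma = LEMMAS.get(word.lower(), word.lower())
        if lemma in KNOWLEDGE_BASE:
            findings.append((word, lemma, KNOWLEDGE_BASE[lemma]))
    return findings


print(flag_non_inclusive("The chairmen thanked the guys in sales."))
```

A lookup of this kind treats a word the same way in every sentence, whatever the surrounding text says.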

Challenge

The vocabulary contained around 2300 non-inclusive words and idioms in German and English. The basic approach described above worked well for 85% of the vocabulary but failed for context-dependent words. The task was therefore to build a context-dependent classifier of non-inclusive words. This challenge (understanding the context rather than recognizing linguistic features) led to using Hugging Face transformers.

Examples of context-dependent non-inclusive words:

Fossil fuels are not renewable resources. vs. He is an old fossil.

You will have a flexible schedule. vs. You should keep your schedule flexible.

Solutions provided by the Hugging Face Experts

The initially chosen approach was vanilla transformers (used to extract token embeddings of specific non-inclusive words). The Hugging Face Expert recommended switching from contextualized word embeddings to contextualized sentence embeddings. In this approach, the representation of each word in a sentence depends on its surrounding context.

Hugging Face Experts suggested using a Sentence Transformers architecture. This architecture generates embeddings for sentences as a whole. The distance between semantically similar sentences is minimized, and the distance between dissimilar sentences is maximized.

In this approach, Sentence Transformers use Siamese and triplet network structures to modify the pre-trained transformer models so that they generate “semantically meaningful” sentence embeddings.

The resulting sentence embedding serves as input for a classical classifier based on KNN or logistic regression to build a context-dependent classifier of non-inclusive words.
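A minimal sketch of that last step, assuming the sentence embeddings have already been computed. The three-dimensional vectors and labels below are made-up toy values (real sentence embeddings have hundreds of dimensions), and `cosine_similarity` and `knn_predict` are hypothetical helpers written for illustration. In practice a library classifier such as scikit-learn's `KNeighborsClassifier` or `LogisticRegression` would normally play this role.

```python
import math
from collections import Counter


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def knn_predict(query, examples, k=3):
    """Majority label among the k examples most similar to the query embedding."""
    ranked = sorted(
        examples,
        key=lambda example: cosine_similarity(query, example[0]),
        reverse=True,
    )
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Toy "sentence embeddings" for labeled sentences containing the word "fossil".
train = [
    ([0.9, 0.1, 0.0], "inclusive"),      # e.g. a sentence about fossil fuels
    ([0.8, 0.2, 0.1], "inclusive"),
    ([0.7, 0.3, 0.0], "inclusive"),
    ([0.1, 0.9, 0.2], "non-inclusive"),  # e.g. "fossil" used about a person
    ([0.0, 0.8, 0.3], "non-inclusive"),
    ([0.2, 0.7, 0.1], "non-inclusive"),
]

print(knn_predict([0.1, 0.85, 0.2], train))  # prints "non-inclusive"
```

The same word ends up with different labels because the classifier looks at where the whole sentence lands in embedding space, not at the word itself.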

Elena Nazarenko, Lead Data Scientist at Witty Works:

“We generate contextualized embedding vectors for each word depending on its sentence (BERT embedding). Then, we keep only the embedding for the “problem” word’s token, and calculate the smallest angle (cosine similarity)”

To fine-tune a vanilla transformers-based classifier, such as a simple BERT model, Witty Works would have needed a substantial amount of annotated data. Hundreds of samples for each category of flagged words would have been necessary. However, such an annotation process would have been costly and time-consuming, which Witty Works couldn’t afford.

The Hugging Face Expert suggested using the Sentence Transformers Fine-tuning library (aka SetFit), an efficient framework for few-shot fine-tuning of Sentence Transformers models. Combining contrastive learning and semantic sentence similarity, SetFit achieves high accuracy on text classification tasks with very little labeled data.

Julien Simon, Chief Evangelist at Hugging Face:

“SetFit for text classification tasks is a great tool to add to the ML toolbox”

The Witty Works team found the performance was adequate with as few as 15-20 labeled sentences per specific word.

Elena Nazarenko, Lead Data Scientist at Witty Works:

“At the end of the day, we saved time and money by not creating this large data set”

Reducing the number of sentences was essential to ensure that model training remained fast and that running the model was efficient. However, it was also necessary for another reason: Witty explicitly takes a highly supervised/rule-based approach to actively manage bias. Reducing the number of sentences is very important to reduce the effort of manually reviewing the training sentences.

One major challenge for Witty Works was deploying a model with low latency. No one expects to wait 3 minutes to get suggestions to improve one’s text! Both Hugging Face and Witty Works experimented with a few sentence transformers models and settled for mpnet-base-v2 combined with logistic regression and KNN.

After a first test on Google Colab, the Hugging Face experts guided Witty Works on deploying the model on Azure. No optimization was necessary as the model was fast enough.

Elena Nazarenko, Lead Data Scientist at Witty Works:

“Working with Hugging Face saved us a lot of time and money. One can feel lost when implementing complex text classification use cases. As it is one of the most popular tasks, there are a lot of models on the Hub. The Hugging Face experts guided me through the massive amount of transformer-based models to choose the best possible approach. Plus, I felt very well supported during the model deployment”

Results and conclusion

The number of training sentences dropped from 100-200 per word to 15-20 per word. Witty Works achieved an accuracy of 0.92 and successfully deployed a custom model on Azure with minimal DevOps effort!

Lukas Kahwe Smith, CTO & Co-founder of Witty Works:

“Working on an IT project by oneself can be challenging and even if the EAP is a significant investment for a startup, it is the cheaper and most meaningful way to get a sparring partner”

With the guidance of the Hugging Face experts, Witty Works saved time and money by implementing a new ML workflow in the Hugging Face way.

Julien Simon, Chief Evangelist at Hugging Face:

“The Hugging way to build workflows:

find open-source pre-trained models,

evaluate them right away,

see what works, see what doesn't.

By iterating, you start learning things immediately”

🤗 If you or your team are interested in accelerating your ML roadmap with Hugging Face Experts, please visit hf.co/support to learn more.