In our mission to democratize good machine learning (ML), we examine how supporting ML community work also empowers examining and preventing possible harms. Open development and science decentralize power so that many people can collectively work on AI that reflects their needs and values. While openness enables broader perspectives to contribute to research and AI overall, it faces the tension of less risk control.

Moderating ML artifacts presents unique challenges due to the dynamic and rapidly evolving nature of these systems. In fact, as ML models become more advanced and capable of producing increasingly diverse content, the potential for harmful or unintended outputs grows, necessitating the development of robust moderation and evaluation strategies. Moreover, the complexity of ML models and the vast amounts of data they process exacerbate the challenge of identifying and addressing potential biases and ethical concerns.

As hosts, we recognize the responsibility that comes with potentially amplifying harm to our users and the world more broadly. Often these harms disparately impact minority communities in a context-dependent manner. We have taken the approach of analyzing the tensions in play for each context, open to discussion across the company and the Hugging Face community. While many models can amplify harm, especially discriminatory content, we are taking a series of steps to identify the highest-risk models and decide what action to take. Importantly, active perspectives from many backgrounds are key to understanding, measuring, and mitigating potential harms that affect different groups of people.

We are crafting tools and safeguards in addition to improving our documentation practices to ensure that open source science empowers individuals and continues to minimize potential harms.

Ethical Categories

The first major aspect of our work to foster good open ML consists of promoting tools and positive examples of ML development that prioritize values and consideration for their stakeholders. This helps users take concrete steps to address outstanding issues and presents plausible alternatives to de facto damaging practices in ML development.

To help our users discover and engage with ethics-related ML work, we have compiled a set of tags. These 6 high-level categories are based on our analysis of Spaces that community members had contributed. They are designed to give you a jargon-free way of thinking about ethical technology:

- Rigorous work pays special attention to developing with best practices in mind. In ML, this can mean examining failure cases (including conducting bias and fairness audits), protecting privacy through security measures, and ensuring that potential users (technical and non-technical) are informed about the project's limitations.

- Consentful work supports the self-determination of people who use and are affected by these technologies.

- Socially Conscious work shows us how technology can support social, environmental, and scientific efforts.

- Sustainable work highlights and explores techniques for making machine learning ecologically sustainable.

- Inclusive work broadens the scope of who builds and benefits in the machine learning world.

- Inquisitive work shines a light on inequities and power structures, challenging the community to rethink its relationship to technology.

Read more at https://huggingface.co/ethics

Look for these terms: we will be using these tags, and updating them based on community contributions, across some new projects on the Hub!

Safeguards

Taking an "all-or-nothing" view of open releases ignores the wide variety of contexts that determine an ML artifact's positive or negative impacts. Having more levers of control over how ML systems are shared and re-used supports collaborative development and analysis with less risk of promoting harmful uses or misuses, allowing for more openness and participation in innovation for shared benefits.

We engage directly with contributors and have addressed pressing issues. To bring this to the next level, we are building community-based processes. This approach empowers both Hugging Face contributors and those affected by contributions to inform the limitations, sharing, and additional mechanisms necessary for models and data made available on our platform. The three main aspects we will pay attention to are: the origin of the artifact, how the artifact is handled by its developers, and how the artifact has been used. In that respect we:

- launched a flagging feature for our community to determine whether ML artifacts or community content (model, dataset, space, or discussion) violate our content guidelines,

- monitor our community discussion boards to ensure Hub users abide by the code of conduct,

- robustly document our most-downloaded models with model cards that detail social impacts, biases, and intended and out-of-scope use cases,

- create audience-guiding tags, such as the "Not For All Audiences" tag that can be added to the repository's card metadata to avoid un-requested violent and sexual content,

- promote use of Open Responsible AI Licenses (RAIL) for models, such as with LLMs (BLOOM, BigCode),

- conduct research that analyzes which models and datasets have the highest potential for, or track record of, misuse and malicious use.

How to use the flagging function:

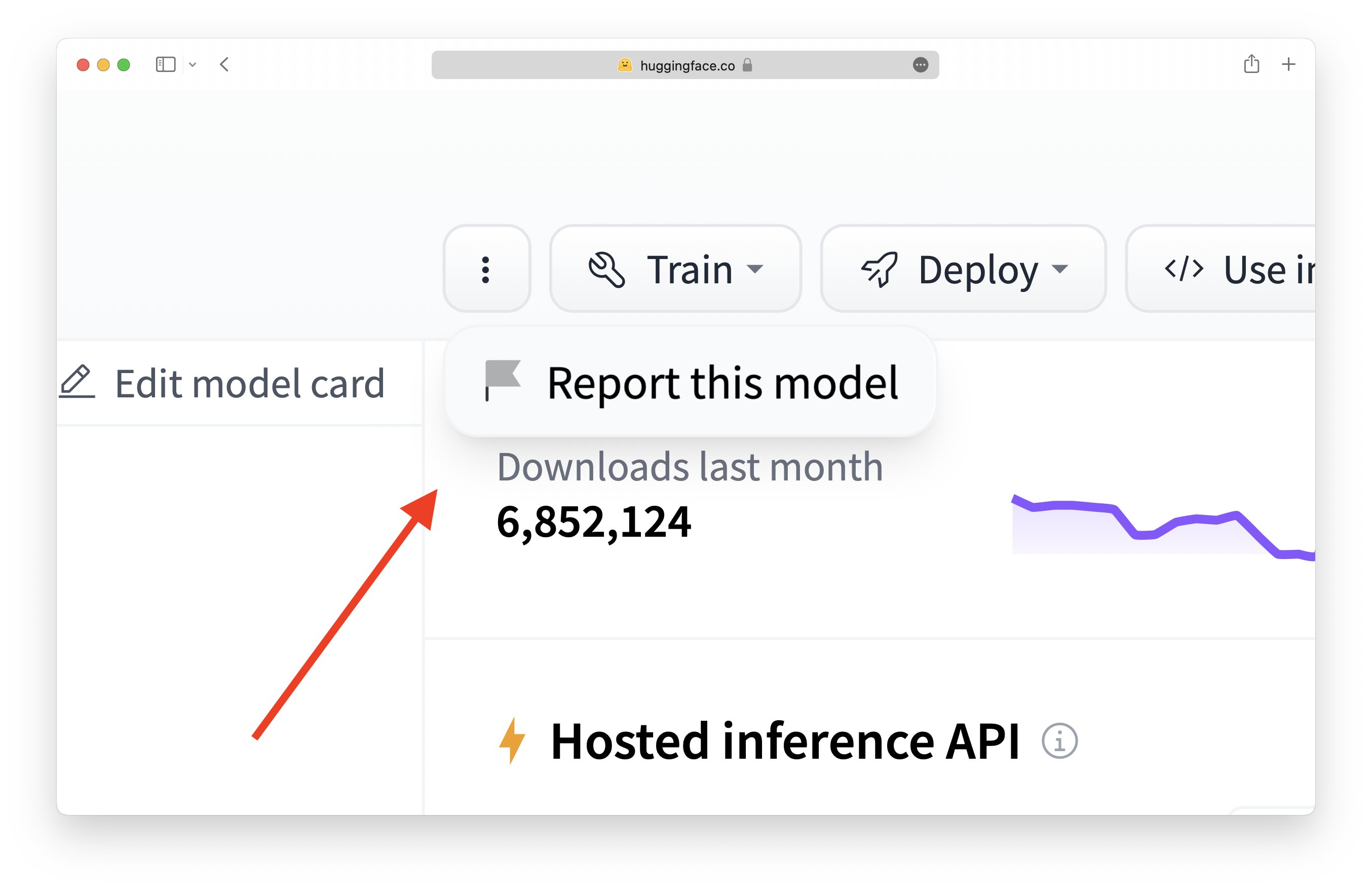

Click on the flag icon on any Model, Dataset, Space, or Discussion:

While logged in, you can click on the "three dots" button to bring up the ability to report (or flag) a repository. This will open a conversation in the repository's community tab.

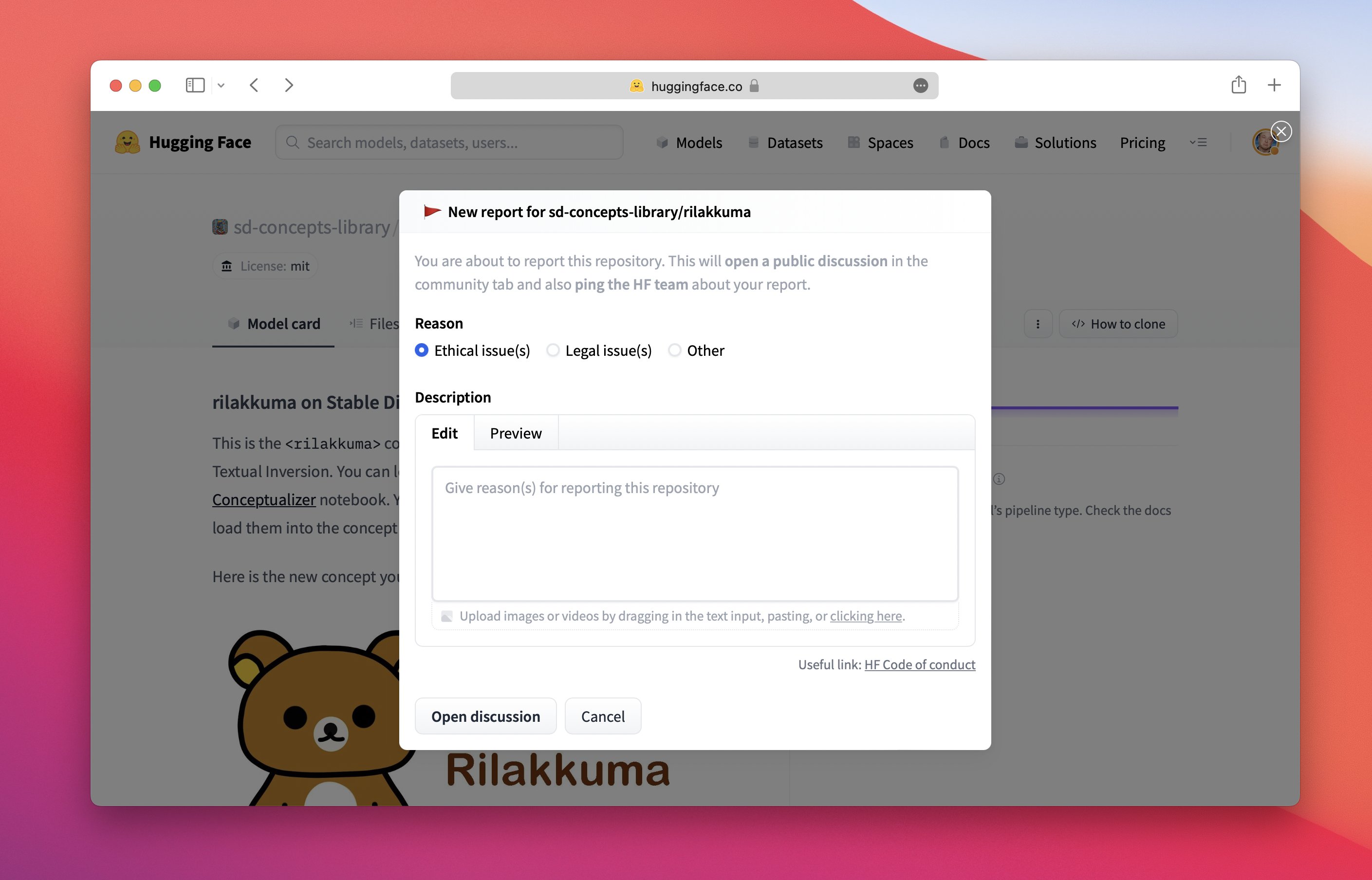

Share why you flagged this item:

Please add as much relevant context as possible to your report! This will make it much easier for the repo owner and the HF team to start taking action.

In prioritizing open science, we examine potential harm on a case-by-case basis and provide an opportunity for collaborative learning and shared responsibility.

When users flag a system, developers can directly and transparently respond to concerns.

In this spirit, we ask that repository owners make reasonable efforts to address reports, especially when reporters take the time to provide a description of the issue.

We also stress that the reports and discussions are subject to the same communication norms as the rest of the platform.

Moderators are able to disengage from or close discussions should behavior become hateful and/or abusive (see code of conduct).

Should a specific model be flagged as high risk by our community, we consider:

- Downgrading the ML artifact's visibility across the Hub in the trending tab and in feeds,

- Requesting that the gating feature be enabled to manage access to ML artifacts (see documentation for models and datasets),

- Requesting that the models be made private,

- Disabling access.

How to add the "Not For All Audiences" tag:

Edit the model/data card → add not-for-all-audiences in the tags section → open the PR and wait for the authors to merge it. Once merged, the following tag will be displayed on the repository:

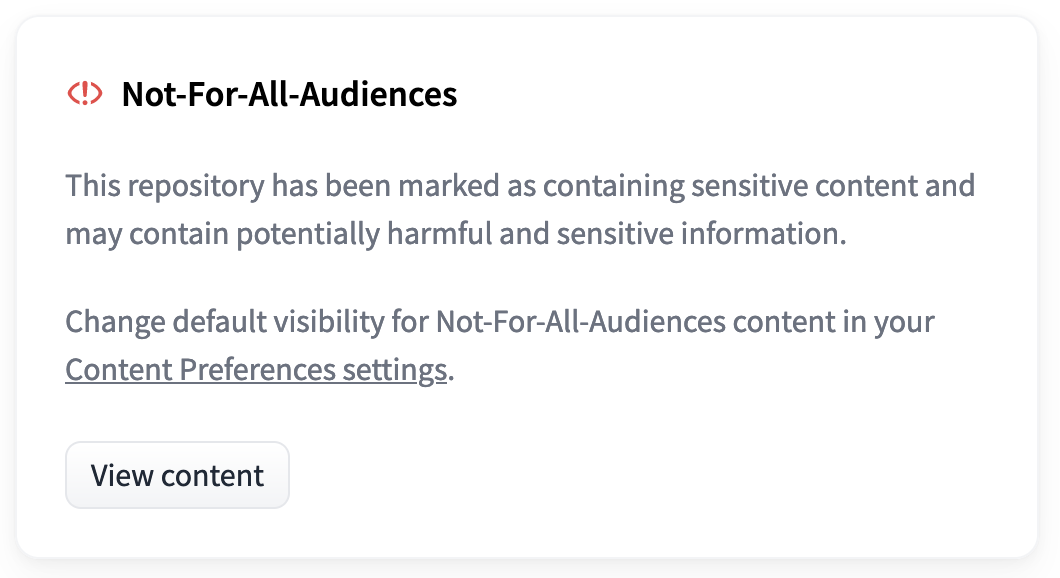
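The card edit described above can be illustrated with a short Python sketch. It is a hedged, stdlib-only illustration rather than Hub tooling: `add_tag_to_card` is a hypothetical helper, and it assumes the card opens with a `---` YAML front-matter block whose `tags`, if present, are written as a block list (`- tag` lines). Inline lists such as `tags: [a, b]` are rejected rather than parsed. A real edit would use a proper YAML parser and would still go through a PR for the authors to merge.

```python
# Hypothetical helper: adds a tag to the YAML front matter of a model/dataset card.
# Assumes a leading '---' block and block-style tag lists; not an official Hub API.
DEFAULT_TAG = "not-for-all-audiences"


def add_tag_to_card(card_text: str, tag: str = DEFAULT_TAG) -> str:
    """Return card_text with `tag` appended to its `tags:` block list."""
    lines = card_text.splitlines(keepends=True)

    # No front matter yet: prepend a minimal block holding only the tag.
    if not lines or lines[0].strip() != "---":
        return f"---\ntags:\n- {tag}\n---\n" + card_text

    # Find the closing '---' that ends the front matter.
    end = next((i for i in range(1, len(lines)) if lines[i].strip() == "---"), None)
    if end is None:
        raise ValueError("unterminated YAML front matter")

    # Look for a top-level `tags:` key inside the front matter.
    tags_idx = None
    for i in range(1, end):
        if lines[i].startswith("tags:"):
            if lines[i].strip() != "tags:":
                # e.g. `tags: [a, b]`; this sketch only handles block lists.
                raise ValueError("only block-style tag lists are supported")
            tags_idx = i
            break

    # No `tags:` key: add one just before the closing '---'.
    if tags_idx is None:
        lines[end:end] = ["tags:\n", f"- {tag}\n"]
        return "".join(lines)

    # Walk the "- item" lines under `tags:`, reusing their indentation.
    j = tags_idx + 1
    prefix = "- "
    while j < end and lines[j].lstrip().startswith("- "):
        if lines[j].lstrip()[2:].strip() == tag:
            return card_text  # already tagged: leave the card unchanged
        indent = lines[j][: len(lines[j]) - len(lines[j].lstrip())]
        prefix = indent + "- "
        j += 1

    lines.insert(j, f"{prefix}{tag}\n")
    return "".join(lines)
```

For example, a card whose front matter contains `tags:` followed by `- text-generation` gains a `- not-for-all-audiences` line directly below the existing item. Calling the helper a second time is harmless: once the tag is present, the card text is returned unchanged.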

Any repository tagged not-for-all-audiences will display the following popup when visited:

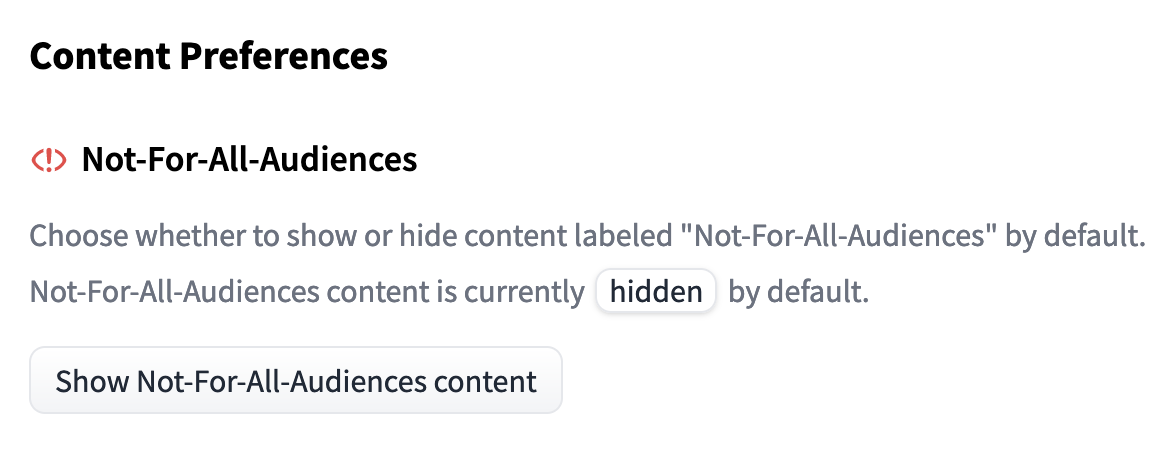

Clicking "View Content" will allow you to view the repository as normal. If you wish to always view not-for-all-audiences-tagged repositories without the popup, this setting can be changed in a user's Content Preferences.

Open science requires safeguards, and one of our goals is to create an environment informed by tradeoffs with different values. Hosting and providing access to models, in addition to cultivating community and discussion, empowers diverse groups to assess social implications and guide what good machine learning is.

Are you working on safeguards? Share them on Hugging Face Hub!

The most important part of Hugging Face is our community. If you are a researcher working on making ML safer to use, especially for open science, we want to support and showcase your work!

Here are some recent demos and tools from researchers in the Hugging Face community:

Thanks for reading! 🤗

~ Irene, Nima, Giada, Yacine, and Elizabeth, on behalf of the Ethics and Society regulars

If you want to cite this blog post, please use the following (in descending order of contribution):

@misc{hf_ethics_soc_blog_3,

author = {Irene Solaiman and

Giada Pistilli and

Nima Boscarino and

Yacine Jernite and

Elizabeth Allendorf and

Margaret Mitchell and

Carlos Muñoz Ferrandis and

Nathan Lambert and

Alexandra Sasha Luccioni

},

title = {Hugging Face Ethics and Society Newsletter 3: Ethical Openness at Hugging Face},

booktitle = {Hugging Face Blog},

year = {2023},

url = {https://doi.org/10.57967/hf/0487},

doi = {10.57967/hf/0487}

}