Video samples generated with ModelScope.

Text-to-video is next in line in the long list of incredible advances in generative models. As self-descriptive as it is, text-to-video is a fairly new computer vision task that involves generating a sequence of images from text descriptions that are both temporally and spatially consistent. While this task might sound very similar to text-to-image, it is notoriously more difficult. How do these models work, how do they differ from text-to-image models, and what kind of performance can we expect from them?

In this blog post, we will discuss the past, present, and future of text-to-video models. We’ll start by reviewing the differences between the text-to-video and text-to-image tasks, and discuss the unique challenges of unconditional and text-conditioned video generation. Additionally, we’ll cover the most recent developments in text-to-video models, exploring how these methods work and what they are capable of. Finally, we’ll talk about what we are working on at Hugging Face to facilitate the integration and use of these models and share some cool demos and resources both on and outside of the Hugging Face Hub.

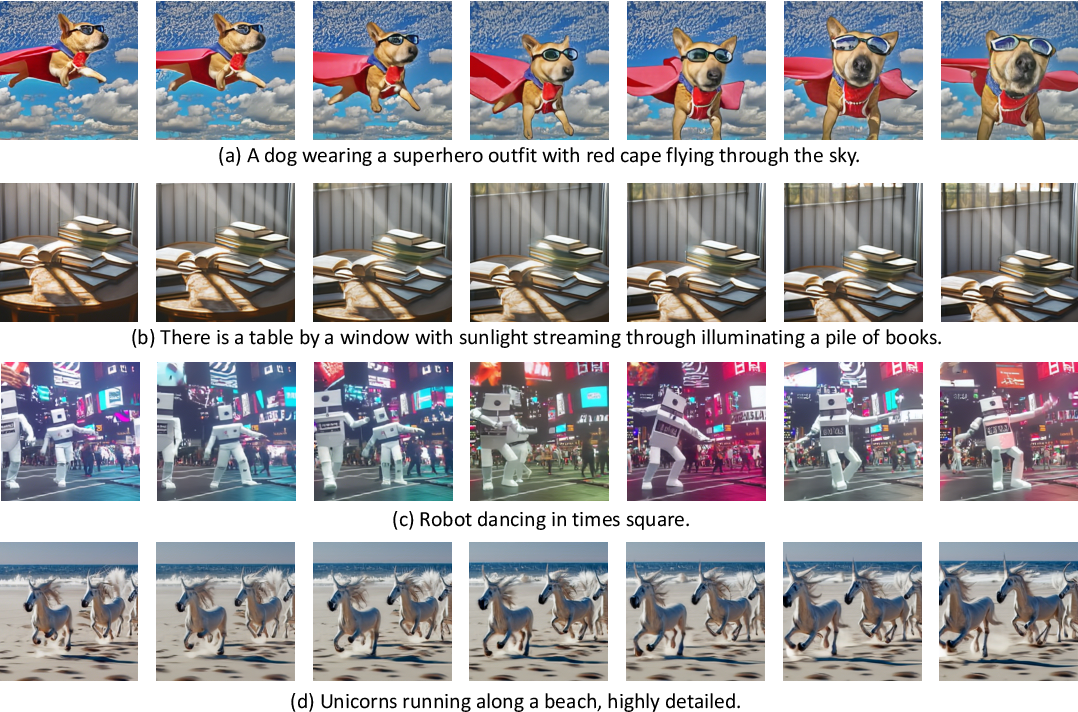

Examples of videos generated from various text description inputs, image taken from Make-a-Video.

Text-to-Video vs. Text-to-Image

With so many recent developments, it can be difficult to keep up with the current state of text-to-image generative models. Let’s do a quick recap first.

Just two years ago, the first open-vocabulary, high-quality text-to-image generative models emerged. This first wave of text-to-image models, including VQGAN-CLIP, XMC-GAN, and GauGAN2, all had GAN architectures. These were quickly followed by OpenAI’s massively popular transformer-based DALL-E in early 2021, DALL-E 2 in April 2022, and a new wave of diffusion models pioneered by Stable Diffusion and Imagen. The huge success of Stable Diffusion led to many productionized diffusion models, such as DreamStudio and RunwayML GEN-1, and integration with existing products, such as Midjourney.

Despite the impressive capabilities of diffusion models in text-to-image generation, diffusion and non-diffusion based text-to-video models are significantly more limited in their generative capabilities. Text-to-video models are typically trained on very short clips, meaning they require a computationally expensive and slow sliding window approach to generate long videos. As a result, these models are notoriously difficult to deploy and scale and remain limited in context and length.
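To make the sliding window idea concrete, here is a minimal sketch of how a long video could be planned as a series of overlapping short windows. It is not taken from any specific model: the function name `sliding_windows` and the numbers (64 frames, 16-frame windows, 4 frames of overlap) are assumptions chosen for illustration. Each window would need its own pass through the generative model, which is where the extra cost comes from.

```python
from typing import List, Tuple


def sliding_windows(total_frames: int, window: int, overlap: int) -> List[Tuple[int, int]]:
    """Return (start, end) frame ranges that together cover `total_frames`.

    Each window spans at most `window` frames and starts `overlap` frames
    before the previous window ends, so those frames can serve as context.
    """
    if total_frames <= 0:
        raise ValueError("total_frames must be positive")
    if not 0 <= overlap < window:
        raise ValueError("overlap must satisfy 0 <= overlap < window")
    stride = window - overlap
    ranges = []
    start = 0
    while True:
        end = min(start + window, total_frames)
        ranges.append((start, end))
        if end == total_frames:
            break
        start += stride
    return ranges


# Hypothetical setting: a 64-frame video built from 16-frame windows.
plan = sliding_windows(total_frames=64, window=16, overlap=4)
print(len(plan), plan)
```

With these illustrative numbers the plan needs five windows instead of the four that non-overlapping windows would need, and each window only ever sees a few frames of what came before it.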

The text-to-video task faces unique challenges on multiple fronts. Some of these main challenges include:

- Computational challenges: Ensuring spatial and temporal consistency across frames creates long-term dependencies that come with a high computation cost, making training such models unaffordable for most researchers.

- Lack of high-quality datasets: Multi-modal datasets for text-to-video generation are scarce and often sparsely annotated, making it difficult to learn complex movement semantics.

- Vagueness around video captioning: Describing videos in a way that makes them easier for models to learn from is an open question. More than a single short text prompt is required to provide a complete video description. A generated video must be conditioned on a sequence of prompts or a story that narrates what happens over time.
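As a rough back-of-the-envelope illustration of the computational challenge listed above, the sketch below counts query-key pairs in a single full self-attention layer for one image versus a short clip. It assumes full attention over all spatio-temporal tokens, which not every text-to-video model uses, and the grid size and clip length are hypothetical values picked for the example.

```python
def attention_pairs(num_tokens: int) -> int:
    """Number of query-key pairs in one full self-attention layer."""
    return num_tokens * num_tokens


tokens_per_frame = 32 * 32  # hypothetical latent grid for a single frame
num_frames = 16             # hypothetical clip length

image_pairs = attention_pairs(tokens_per_frame)
video_pairs = attention_pairs(tokens_per_frame * num_frames)

# Attending across all frames grows quadratically with the number of frames.
print(video_pairs // image_pairs)
```

Under these assumptions, a 16-frame clip costs 16 × 16 = 256 times as many attention pairs as a single image, not just 16 times.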

In the next section, we will discuss the timeline of developments in the text-to-video domain and the various methods proposed to address these challenges separately. On a higher level, text-to-video works propose one of these:

- New, higher-quality datasets that are easier to learn from.

- Methods to train such models without paired text-video data.

- More computationally efficient methods to generate longer and higher resolution videos.

How to Generate Videos from Text?

Let’s take a look at how text-to-video generation works and the latest developments in this field. We will explore how text-to-video models have evolved, following a similar path to text-to-image research, and how the specific challenges of text-to-video generation have been tackled so far.

Like the text-to-image task, early work on text-to-video generation dates back only a few years. Early research predominantly used GAN and VAE-based approaches to auto-regressively generate frames given a caption (see Text2Filter and TGANs-C). While these works provided the foundation for a new computer vision task, they are limited to low resolutions, short-range, and singular, isolated motions.

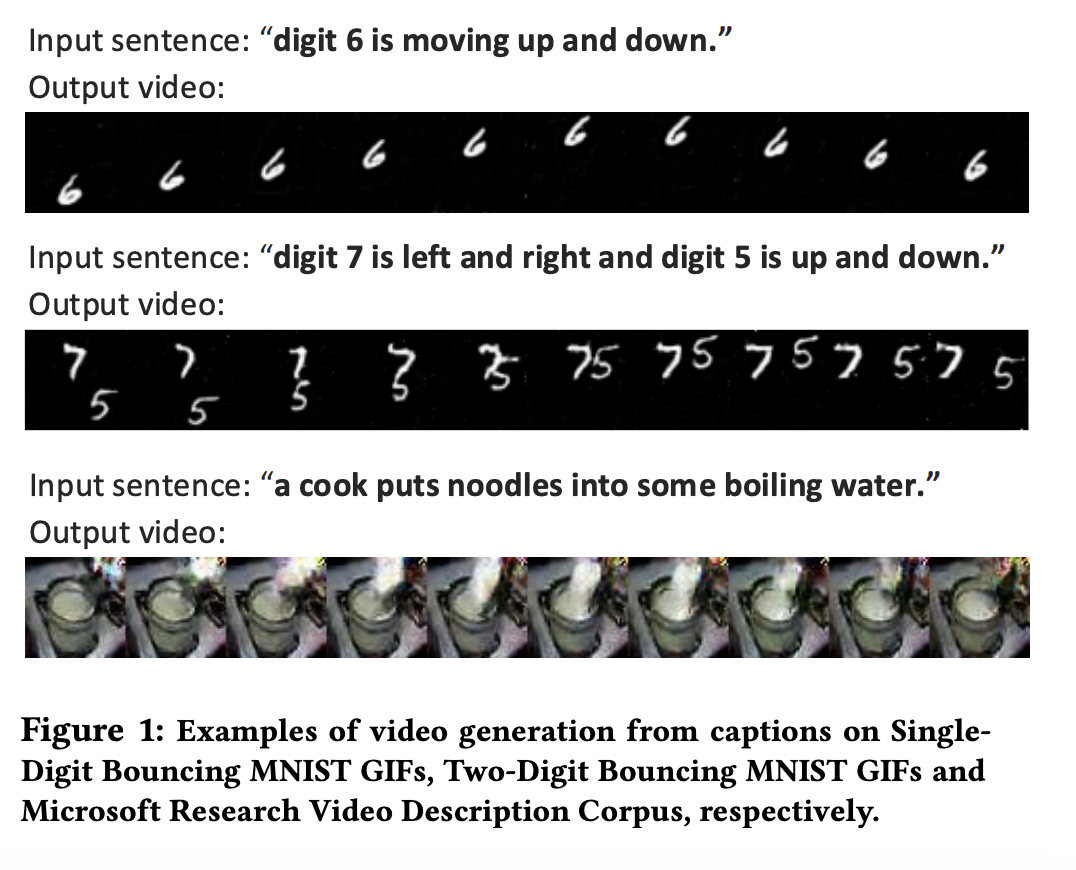

Initial text-to-video models were extremely limited in resolution, context and length, image taken from TGANs-C.

Taking inspiration from the success of large-scale pretrained transformer models in text (GPT-3) and image (DALL-E), the next surge of text-to-video generation research adopted transformer architectures. Phenaki, Make-A-Video, NUWA, VideoGPT and CogVideo all propose transformer-based frameworks, while works such as TATS propose hybrid methods that combine VQGAN for image generation and a time-sensitive transformer module for sequential generation of frames. Out of this second wave of works, Phenaki is particularly interesting as it enables generating arbitrarily long videos conditioned on a sequence of prompts, in other words, a story line. Similarly, NUWA-Infinity proposes an autoregressive over autoregressive generation mechanism for infinite image and video synthesis from text inputs, enabling the generation of long, HD quality videos. However, neither Phenaki nor NUWA models are publicly available.

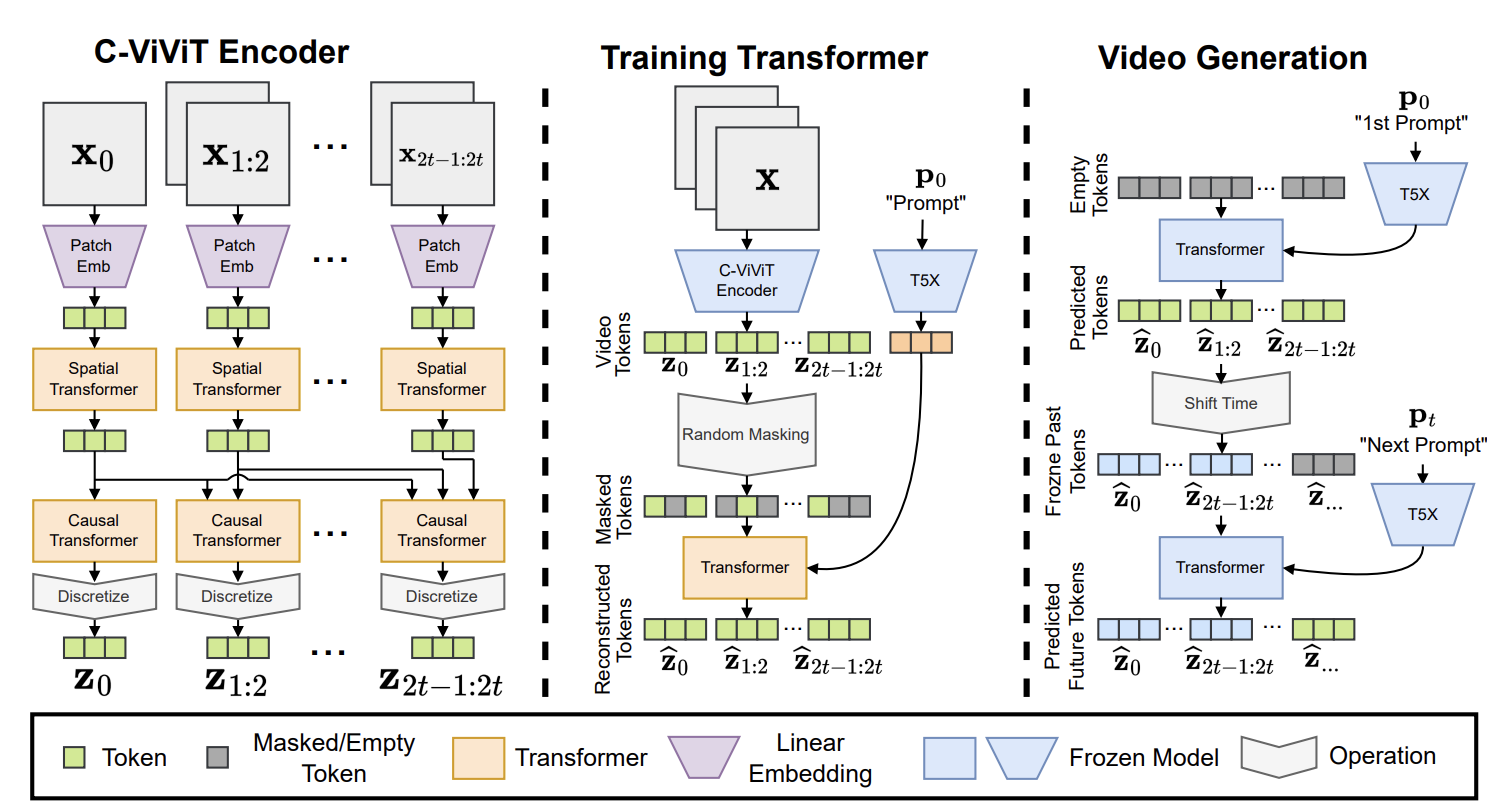

Phenaki features a transformer-based architecture, image taken from here.

The third and current wave of text-to-video models features predominantly diffusion-based architectures. The remarkable success of diffusion models in diverse, hyper-realistic, and contextually rich image generation has led to an interest in generalizing diffusion models to other domains such as audio, 3D, and, more recently, video. This wave of models is pioneered by Video Diffusion Models (VDM), which extend diffusion models to the video domain, and MagicVideo, which proposes a framework to generate video clips in a low-dimensional latent space and reports huge efficiency gains over VDM. Another notable mention is Tune-a-Video, which fine-tunes a pretrained text-to-image model with a single text-video pair and enables changing the video content while preserving the motion. The continuously expanding list of text-to-video diffusion models that followed includes Video LDM, Text2Video-Zero, Runway Gen1 and Gen2, and NUWA-XL.

Text2Video-Zero is a text-guided video generation and manipulation framework that works in a fashion similar to ControlNet. It can directly generate (or edit) videos based on text inputs, as well as combined text-pose or text-edge data inputs. As implied by its name, Text2Video-Zero is a zero-shot model that combines a trainable motion dynamics module with a pre-trained text-to-image Stable Diffusion model without using any paired text-video data. Similarly to Text2Video-Zero, Runway’s Gen-1 and Gen-2 models enable synthesizing videos guided by content described through text or images. Most of these works are trained on short video clips and rely on autoregressive generation with a sliding window to generate longer videos, inevitably resulting in a context gap. NUWA-XL addresses this issue and proposes a “diffusion over diffusion” method to train models on 3376 frames. Finally, there are open-source text-to-video models and frameworks such as Alibaba / DAMO Vision Intelligence Lab’s ModelScope and Tencent’s VideoCrafter, which haven’t been published in peer-reviewed conferences or journals.

Datasets

Like other vision-language models, text-to-video models are typically trained on large paired datasets of videos and text descriptions. The videos in these datasets are typically split into short, fixed-length chunks and often limited to isolated actions with a few objects. While this is partly due to computational limitations and partly due to the difficulty of describing video content in a meaningful way, we see that developments in multimodal video-text datasets and text-to-video models are often entwined. While some work focuses on developing better, more generalizable datasets that are easier to learn from, works such as Phenaki explore alternative solutions such as combining text-image pairs with text-video pairs for the text-to-video task. Make-a-Video takes this even further by proposing using only text-image pairs to learn what the world looks like and unimodal video data to learn spatio-temporal dependencies in an unsupervised fashion.
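The fixed-length chunking mentioned above can be sketched in a few lines. This is only an illustration, not the preprocessing code of any particular dataset: the helper name `split_into_clips`, the 16-frame clip length, and the choice to drop leftover frames are all assumptions.

```python
from typing import List, Sequence, TypeVar

Frame = TypeVar("Frame")


def split_into_clips(frames: Sequence[Frame], clip_len: int) -> List[Sequence[Frame]]:
    """Split a frame sequence into non-overlapping clips of exactly `clip_len` frames.

    Trailing frames that do not fill a whole clip are dropped.
    """
    if clip_len <= 0:
        raise ValueError("clip_len must be positive")
    usable = len(frames) - len(frames) % clip_len
    return [frames[i:i + clip_len] for i in range(0, usable, clip_len)]


# Integers stand in for 50 decoded video frames.
frames = list(range(50))
clips = split_into_clips(frames, clip_len=16)
print(len(clips), [len(clip) for clip in clips])
```

Each resulting clip is treated as an independent training sample, which is one reason models trained this way see only short, isolated stretches of motion.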

These large datasets experience similar issues to those found in text-to-image datasets. The most commonly used text-video dataset, WebVid, consists of 10.7 million text-video pairs (52K video hours) and contains a fair amount of noisy samples with irrelevant video descriptions. Other datasets try to overcome this issue by focusing on specific tasks or domains. For example, the Howto100M dataset consists of 136M video clips with captions that describe how to perform complex tasks such as cooking, handcrafting, gardening, and fitness step-by-step. Similarly, the QuerYD dataset focuses on the event localization task such that the captions of videos describe the relative location of objects and actions in detail. CelebV-Text is a large-scale facial text-video dataset of over 70K videos to generate videos with realistic faces, emotions, and gestures.
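The WebVid figures quoted above also give a feel for how short the training clips are. The quick calculation below uses only those two numbers (10.7 million pairs and 52K hours) and treats them as exact, which is an approximation.

```python
num_pairs = 10_700_000  # text-video pairs, from the WebVid figure quoted above
total_hours = 52_000    # total video hours, from the WebVid figure quoted above

# Average clip duration in seconds.
avg_clip_seconds = total_hours * 3600 / num_pairs
print(round(avg_clip_seconds, 1))
```

That works out to roughly 17.5 seconds per clip on average, which is consistent with the earlier point that text-to-video models are trained on very short clips.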

Text-to-Video at Hugging Face

Using Hugging Face Diffusers, you can easily download, run and fine-tune various pretrained text-to-video models, including Text2Video-Zero and ModelScope by Alibaba / DAMO Vision Intelligence Lab. We are currently working on integrating other exciting works into Diffusers and 🤗 Transformers.

Hugging Face Demos

At Hugging Face, our goal is to make it easier to use and build upon state-of-the-art research. Head over to our hub to see and play around with Spaces demos contributed by the 🤗 team, countless community contributors and research authors. At the moment, we host demos for VideoGPT, CogVideo, ModelScope Text-to-Video, and Text2Video-Zero with many more to come. To see what we can do with these models, let’s take a look at the Text2Video-Zero demo. This demo not only illustrates text-to-video generation but also enables multiple other generation modes for text-guided video editing and joint conditional video generation using pose, depth and edge inputs along with text prompts.

Apart from using demos to experiment with pretrained text-to-video models, you can also use the Tune-a-Video training demo to fine-tune an existing text-to-image model with your own text-video pair. To try it out, upload a video and enter a text prompt that describes the video. Once the training is done, you can upload the resulting model to the Hub under the Tune-a-Video community or your own username, publicly or privately. Then simply head over to the Run tab of the demo to generate videos from any text prompt.

All Spaces on the 🤗 Hub are Git repos you can clone and run in your local or deployment environment. Let’s clone the ModelScope demo, install the requirements, and run it locally.

git clone https://huggingface.co/spaces/damo-vilab/modelscope-text-to-video-synthesis

cd modelscope-text-to-video-synthesis

pip install -r requirements.txt

python app.py

And that’s it! The ModelScope demo is now running locally on your computer. Note that the ModelScope text-to-video model is supported in Diffusers and you can directly load and use the model to generate new videos with a few lines of code.

import torch

from diffusers import DiffusionPipeline, DPMSolverMultistepScheduler

from diffusers.utils import export_to_video

pipe = DiffusionPipeline.from_pretrained("damo-vilab/text-to-video-ms-1.7b", torch_dtype=torch.float16, variant="fp16")

pipe.scheduler = DPMSolverMultistepScheduler.from_config(pipe.scheduler.config)

pipe.enable_model_cpu_offload()

prompt = "Spiderman is surfing"

video_frames = pipe(prompt, num_inference_steps=25).frames

video_path = export_to_video(video_frames)

Community Contributions and Open Source Text-to-Video Projects

Finally, there are various open source projects and models that are not on the hub. Some notable mentions are Phil Wang’s (aka lucidrains) unofficial implementations of Imagen, Phenaki, NUWA, Make-a-Video and Video Diffusion Models. Another exciting project by ExponentialML builds on top of 🤗 diffusers to finetune ModelScope Text-to-Video.

Conclusion

Text-to-video research is progressing exponentially, but existing work is still limited in context and faces many challenges. In this blog post, we covered the constraints, unique challenges and the current state of text-to-video generation models. We also saw how architectural paradigms originally designed for other tasks enable giant leaps in the text-to-video generation task and what this means for future research. While the developments are impressive, text-to-video models still have a long way to go compared to text-to-image models. Finally, we also showed how you can use these models to perform various tasks using the demos available on the Hub or as a part of 🤗 Diffusers pipelines.

That was it! We are continuing to integrate the most impactful computer vision and multi-modal models and would love to hear back from you. To stay up to date with the latest news in computer vision and multi-modal research, you can follow us on Twitter: @adirik, @a_e_roberts, @osanseviero, @risingsayak and @huggingface.