Large language models are all the rage these days, with many companies investing significant resources to scale them up and unlock new capabilities. However, as humans with ever-decreasing attention spans, we also dislike their slow response times. Latency is critical for a good user experience, and smaller models are sometimes used despite their lower quality (e.g. in code completion).

Why is text generation so slow? What’s preventing you from deploying low-latency large language models without going bankrupt? In this blog post, we’ll revisit the bottlenecks of autoregressive text generation and introduce a new decoding method to tackle the latency problem. You’ll see that by using our new method, assisted generation, you can reduce latency by up to 10x on commodity hardware!

Understanding text generation latency

The core of modern text generation is straightforward to understand. Let’s look at the central piece, the ML model. Its input contains a text sequence, which includes the text generated so far, and potentially other model-specific components (for instance, Whisper also has an audio input). The model takes the input and runs a forward pass: the input is fed to the model and passed sequentially through its layers until the unnormalized log probabilities for the next token (also known as logits) are predicted. A token may consist of an entire word, a sub-word, or even an individual character, depending on the model. The Illustrated GPT-2 is a great reference if you’d like to dive deeper into this part of text generation.

A model forward pass gets you the logits for the next token, which you can freely manipulate (e.g. set the probability of undesirable words or sequences to 0). The next step in text generation is to select the next token from these logits. Common strategies include picking the most likely token, known as greedy decoding, or sampling from their distribution, also called multinomial sampling. Chaining model forward passes with next-token selection iteratively gets you text generation. This explanation is the tip of the iceberg when it comes to decoding methods; please refer to our blog post on text generation for an in-depth exploration.
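To make the token selection step concrete, here is a minimal sketch in plain Python. The logits and the helper names (`ban_tokens`, `greedy_select`, `sample_select`) are made up for illustration and are not part of any library; a real model would produce one logit per vocabulary entry.

```python
import math
import random

def softmax(logits):
    """Turn unnormalized log probabilities (logits) into probabilities."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def ban_tokens(logits, banned_ids):
    """Set the logit of each banned token to -inf, i.e. probability 0."""
    return [-math.inf if i in banned_ids else x for i, x in enumerate(logits)]

def greedy_select(logits):
    """Greedy decoding: pick the index of the largest logit."""
    return max(range(len(logits)), key=lambda i: logits[i])

def sample_select(logits, rng):
    """Multinomial sampling: draw an index according to softmax(logits)."""
    return rng.choices(range(len(logits)), weights=softmax(logits), k=1)[0]

# Hypothetical logits over a 4-token vocabulary.
logits = [2.0, 0.5, 3.0, -1.0]
print(greedy_select(logits))                    # index of the most likely token
print(greedy_select(ban_tokens(logits, {2})))   # most likely token once token 2 is banned
print(sample_select(logits, random.Random(0)))  # one random draw from the distribution
```

Repeating "forward pass, then select" in a loop, appending the selected token to the input each time, is what turns these building blocks into text generation.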

From the description above, the latency bottleneck in text generation is clear: running a model forward pass for large models is slow, and you may have to run hundreds of them in sequence. But let’s dive deeper: why are forward passes slow? Forward passes are typically dominated by matrix multiplications and, after a quick visit to the corresponding Wikipedia section, you can tell that memory bandwidth is the limitation in this operation (e.g. from the GPU RAM to the GPU compute cores). In other words, the bottleneck in the forward pass comes from loading the model layer weights into the computation cores of your device, not from performing the computations themselves.

At the moment, you have three main avenues you can explore to get the most out of text generation, all tackling the performance of the model forward pass. First, you have the hardware-specific model optimizations. For instance, your device may be compatible with Flash Attention, which speeds up the attention layer by reordering its operations, or INT8 quantization, which reduces the size of the model weights.
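If the forward pass is memory-bound, a rough lower bound on its duration is the time needed to read every weight once. The sketch below is back-of-the-envelope arithmetic only: the parameter count and the bandwidth are hypothetical numbers chosen for illustration, and the model ignores activations, caches, and compute time.

```python
def min_seconds_per_token(num_params, bytes_per_param, bandwidth_bytes_per_s):
    """Rough lower bound for one forward pass: every weight is read once."""
    return num_params * bytes_per_param / bandwidth_bytes_per_s

# Hypothetical setup: 7e9 parameters and 1e12 bytes/s of memory bandwidth.
fp16 = min_seconds_per_token(7e9, 2, 1e12)  # 16-bit weights, 2 bytes each
int8 = min_seconds_per_token(7e9, 1, 1e12)  # INT8 weights, 1 byte each
print(f"fp16: {fp16 * 1e3:.1f} ms/token, int8: {int8 * 1e3:.1f} ms/token")
```

Under this simplified view, halving the bytes per weight halves the bound, which is why shrinking the weights helps latency even when the number of operations stays the same.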

Second, when you know you’ll get concurrent text generation requests, you can batch the inputs and massively increase the throughput with a small latency penalty. The model layer weights loaded into the device are now used on several input rows in parallel, which means that you’ll get more tokens out for approximately the same memory bandwidth burden. The catch with batching is that you need additional device memory (or to offload the memory somewhere) – at the far end of this spectrum, you can see projects like FlexGen, which optimize throughput at the expense of latency.

# Example showcasing the impact of batched generation (requires a CUDA device).
from transformers import AutoModelForCausalLM, AutoTokenizer
import time

tokenizer = AutoTokenizer.from_pretrained("distilgpt2")
model = AutoModelForCausalLM.from_pretrained("distilgpt2").to("cuda")
inputs = tokenizer(["Hello world"], return_tensors="pt").to("cuda")


def print_tokens_per_second(batch_size):
    new_tokens = 100
    cumulative_time = 0

    # Warmup run, excluded from the measurement.
    model.generate(
        **inputs, do_sample=True, max_new_tokens=new_tokens, num_return_sequences=batch_size
    )

    # Time 10 generation calls and report the average throughput.
    for _ in range(10):
        start = time.time()
        model.generate(
            **inputs, do_sample=True, max_new_tokens=new_tokens, num_return_sequences=batch_size
        )
        cumulative_time += time.time() - start
    print(f"Tokens per second: {new_tokens * batch_size * 10 / cumulative_time:.1f}")


print_tokens_per_second(1)   # batch size 1
print_tokens_per_second(64)  # batch size 64

Finally, if you have multiple devices available to you, you can distribute the workload using Tensor Parallelism and obtain lower latency. With Tensor Parallelism, you split the memory bandwidth burden across multiple devices, but you now have to consider inter-device communication bottlenecks in addition to the monetary cost of running multiple devices. The benefits depend largely on the model size: models that easily fit on a single consumer device see very limited benefits. Taking the results from this DeepSpeed blog post, you can see that spreading a 17B parameter model across 4 GPUs reduces the latency by 1.5x (Figure 7).
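As a toy illustration of the idea, the sketch below splits the columns of a weight matrix across hypothetical devices, so that each one only has to load its own slice, and then concatenates the partial outputs. It is plain Python with made-up helper names, and column-wise sharding is only one possible scheme; real implementations run the shards concurrently and pay a communication cost for the final gather.

```python
def matvec(x, weight):
    """Compute x @ weight for a vector x and a row-major matrix weight."""
    cols = len(weight[0])
    return [sum(x[i] * weight[i][j] for i in range(len(x))) for j in range(cols)]

def split_columns(weight, num_devices):
    """Give each (hypothetical) device a contiguous slice of the weight columns."""
    cols = len(weight[0])
    step = cols // num_devices  # assumes cols is divisible by num_devices
    return [[row[d * step:(d + 1) * step] for row in weight] for d in range(num_devices)]

def tensor_parallel_matvec(x, weight, num_devices):
    shards = split_columns(weight, num_devices)
    # Each device multiplies the full input by its own shard of the weights.
    partial_outputs = [matvec(x, shard) for shard in shards]
    # Gathering the partial outputs is the inter-device communication step.
    return [value for part in partial_outputs for value in part]

x = [1, 2]
weight = [[1, 2, 3, 4], [5, 6, 7, 8]]
print(tensor_parallel_matvec(x, weight, num_devices=2) == matvec(x, weight))
```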

These three types of improvements can be used in tandem, resulting in high-throughput solutions. However, after applying hardware-specific optimizations, there are limited options to reduce latency – and the existing options are expensive. Let’s fix that!

Language decoder forward pass, revisited

You’ve read above that each model forward pass yields the logits for the next token, but that’s actually an incomplete description. During text generation, the typical iteration consists of the model receiving as input the latest generated token, plus cached internal computations for all other previous inputs, and returning the next token logits. Caching is used to avoid redundant computations, resulting in faster forward passes, but it’s not mandatory (and can be used partially). When caching is disabled, the input contains the entire sequence of tokens generated so far and the output contains the logits corresponding to the next token for all positions in the sequence! The logits at position N correspond to the distribution for the next token if the input consisted of the first N tokens, ignoring all subsequent tokens in the sequence. In the particular case of greedy decoding, if you pass the generated sequence as input and apply the argmax operator to the resulting logits, you will obtain the generated sequence back.

from transformers import AutoModelForCausalLM, AutoTokenizer

tok = AutoTokenizer.from_pretrained("distilgpt2")
model = AutoModelForCausalLM.from_pretrained("distilgpt2")

inputs = tok(["The"], return_tensors="pt")
generated = model.generate(**inputs, do_sample=False, max_new_tokens=10)
forward_confirmation = model(generated).logits.argmax(-1)

# The logits at position N predict the token at position N + 1, so the two
# sequences are compared with a shift of one: drop the first generated token
# and the last prediction.
print(generated[0, 1:].tolist() == forward_confirmation[0, :-1].tolist())  # expected: True

This means that you can use a model forward pass for a different purpose: in addition to feeding some tokens to predict the next one, you can also pass a sequence to the model and double-check whether the model would generate that same sequence (or part of it).

Let’s consider for a second that you have access to a magical latency-free oracle model that generates the same sequence as your model, for any given input. For argument’s sake, it can’t be used directly; it’s limited to being an assistant to your generation procedure. Using the property described above, you could use this assistant model to get candidate output tokens, followed by a forward pass with your model to confirm that they are indeed correct. In this utopian scenario, the latency of text generation would be reduced from O(n) to O(1), with n being the number of generated tokens. For long generations, we’re talking about several orders of magnitude.

Walking a step towards reality, let’s assume the assistant model has lost its oracle properties. Now it’s a latency-free model that gets some of the candidate tokens wrong, according to your model. Due to the autoregressive nature of the task, as soon as the assistant gets a token wrong, all subsequent candidates must be invalidated. However, that doesn’t prevent you from querying the assistant again, after correcting the wrong token with your model, and repeating this process iteratively. Even if the assistant gets a few tokens wrong, text generation would have an order of magnitude less latency than in its original form.
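You can get a feel for this thought experiment with a small simulation. The sketch below counts how many forward passes of your model are needed when a latency-free assistant proposes candidates; it assumes, purely for illustration, that each candidate matches your model independently with a fixed probability, which real assistants do not do.

```python
import random

def count_model_forward_passes(num_tokens, num_candidates, accuracy, rng):
    """Count forward passes of the main model needed to generate num_tokens.

    Assumes a latency-free assistant whose candidates each match the main
    model with independent probability `accuracy` (a simplification).
    """
    generated = 0
    passes = 0
    while generated < num_tokens:
        # Count leading candidates that match; the first mismatch invalidates the rest.
        matches = 0
        while matches < num_candidates and rng.random() < accuracy:
            matches += 1
        # One forward pass confirms the matching candidates and also yields the
        # main model's own token right after them.
        generated += matches + 1
        passes += 1
    return passes

rng = random.Random(0)
for accuracy in (0.0, 0.5, 0.8, 1.0):
    passes = count_model_forward_passes(100, 9, accuracy, rng)
    print(f"assistant accuracy {accuracy:.1f}: {passes} forward passes for 100 tokens")
```

With an accuracy of 0 you are back to one forward pass per token, while a perfect assistant needs one pass per block of candidates; everything in between is where the choice of assistant matters.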

Obviously, there are no latency-free assistant models. Nevertheless, it is relatively easy to find a model that approximates some other model’s text generation outputs – smaller versions of the same architecture trained similarly often fit this property. Moreover, when the difference in model sizes becomes significant, the cost of using the smaller model as an assistant becomes an afterthought after factoring in the benefits of skipping a few forward passes! You now understand the core of assisted generation.

Greedy decoding with assisted generation

Assisted generation is a balancing act. You want the assistant to quickly generate a candidate sequence while being as accurate as possible. If the assistant has poor quality, you get the cost of using the assistant model with little to no benefit. On the other hand, optimizing the quality of the candidate sequences may imply using slow assistants, resulting in a net slowdown. While we can’t automate the selection of the assistant model for you, we’ve included an additional requirement and a heuristic to make sure the time spent with the assistant stays in check.

First, the requirement – the assistant must have the exact same tokenizer as your model. If this requirement were not in place, expensive token decoding and re-encoding steps would have to be added. Furthermore, these additional steps would have to happen on the CPU, which in turn may require slow inter-device data transfers. Fast usage of the assistant is critical for the benefits of assisted generation to show up.

Finally, the heuristic. By this point, you have probably noticed the similarities between the movie Inception and assisted generation – you are, after all, running text generation inside text generation. There will be one assistant model forward pass per candidate token, and we know that forward passes are expensive. While you can’t know in advance the number of tokens that the assistant model will get right, you can keep track of this information and use it to limit the number of candidate tokens requested from the assistant – some sections of the output are easier to anticipate than others.

Wrapping it all up, here’s our original implementation of the assisted generation loop (code):

- Use greedy decoding to generate a certain number of candidate tokens with the assistant model, producing candidates. The number of produced candidate tokens is initialized to 5 the first time assisted generation is called.

- Using our model, do a forward pass with candidates, obtaining logits.

- Use the token selection method (.argmax() for greedy search or .multinomial() for sampling) to get the next_tokens from logits.

- Compare next_tokens to candidates and get the number of matching tokens. Remember that this comparison has to be done with left-to-right causality: after the first mismatch, all candidates are invalidated.

- Use the number of matches to slice things up and discard variables related to unconfirmed candidate tokens. In essence, in next_tokens, keep the matching tokens plus the first divergent token (which our model generates from a valid candidate subsequence).

- Adjust the number of candidate tokens to be produced in the next iteration: our original heuristic increases it by 2 if ALL tokens match and decreases it by 1 otherwise.
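The steps above can be sketched with toy stand-ins for the two models. This is not the 🤗 Transformers implementation: `target_next` and `assistant_next` are hypothetical rules over integer tokens, the forward pass is emulated with one call per position (a real causal model returns all positions at once), and the floor of one candidate per iteration is an assumption of this sketch.

```python
def target_next(tokens):
    """Toy stand-in for the main model's greedy next token (hypothetical rule)."""
    return (tokens[-1] * 3 + len(tokens)) % 10

def assistant_next(tokens):
    """Toy assistant: agrees with the main model except at every 4th position."""
    guess = target_next(tokens)
    return (guess + 1) % 10 if len(tokens) % 4 == 0 else guess

def forward_pass(tokens, num_candidates):
    """Emulate one main-model pass: greedy next token after each candidate prefix."""
    start = len(tokens) - num_candidates
    return [target_next(tokens[:i]) for i in range(start, len(tokens) + 1)]

def assisted_greedy(prompt, max_new_tokens, num_candidates=5):
    tokens = list(prompt)
    model_passes = 0
    while len(tokens) - len(prompt) < max_new_tokens:
        # Step 1: the assistant greedily drafts candidate tokens.
        draft = list(tokens)
        for _ in range(num_candidates):
            draft.append(assistant_next(draft))
        candidates = draft[len(tokens):]
        # Steps 2-3: one main-model pass gives the greedy choice at every position.
        next_tokens = forward_pass(draft, num_candidates)
        model_passes += 1
        # Step 4: count matches left to right, stopping at the first mismatch.
        matches = 0
        while matches < num_candidates and candidates[matches] == next_tokens[matches]:
            matches += 1
        # Step 5: keep the matching tokens plus the main model's token after them.
        tokens.extend(next_tokens[:matches + 1])
        # Step 6: +2 if all candidates matched, -1 otherwise (floor of 1 is assumed here).
        if matches == num_candidates:
            num_candidates += 2
        else:
            num_candidates = max(1, num_candidates - 1)
    return tokens[:len(prompt) + max_new_tokens], model_passes

def plain_greedy(prompt, max_new_tokens):
    """Reference: one main-model call per generated token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tokens.append(target_next(tokens))
    return tokens

output, passes = assisted_greedy([1], max_new_tokens=20)
print(output == plain_greedy([1], 20), passes)
```

The comparison with `plain_greedy` highlights the key property of the greedy case: the output is the same sequence the main model would have produced on its own, only with fewer main-model passes.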

We’ve designed the API in 🤗 Transformers such that this process is hassle-free for you. All you need to do is pass the assistant model under the new assistant_model keyword argument and reap the latency gains! At the time of the release of this blog post, assisted generation is limited to a batch size of 1.

from transformers import AutoModelForCausalLM, AutoTokenizer
import torch

prompt = "Alice and Bob"
checkpoint = "EleutherAI/pythia-1.4b-deduped"
assistant_checkpoint = "EleutherAI/pythia-160m-deduped"
device = "cuda" if torch.cuda.is_available() else "cpu"

tokenizer = AutoTokenizer.from_pretrained(checkpoint)
inputs = tokenizer(prompt, return_tensors="pt").to(device)

model = AutoModelForCausalLM.from_pretrained(checkpoint).to(device)
assistant_model = AutoModelForCausalLM.from_pretrained(assistant_checkpoint).to(device)
outputs = model.generate(**inputs, assistant_model=assistant_model)
print(tokenizer.batch_decode(outputs, skip_special_tokens=True))

Is the additional internal complexity worth it? Let’s have a look at the latency numbers for the greedy decoding case (results for sampling are in the next section), considering a batch size of 1. These results were pulled directly out of 🤗 Transformers without any additional optimizations, so you should be able to reproduce them in your setup.

Glancing at the collected numbers, we see that assisted generation can deliver significant latency reductions in diverse settings, but it is not a silver bullet – you should benchmark it before applying it to your use case. We can conclude that assisted generation:

- 🤏 Requires access to an assistant model that is at least an order of magnitude smaller than your model (the bigger the difference, the better);

- 🚀 Gets up to 3x speedups in the presence of INT8 and up to 2x otherwise, when the model fits in the GPU memory;

- 🤯 If you’re working with models that do not fit in your GPU and are relying on memory offloading, you can see up to 10x speedups;

- 📄 Shines in input-grounded tasks, like automatic speech recognition or summarization.

Sample with assisted generation

Greedy decoding is suited for input-grounded tasks (automatic speech recognition, translation, summarization, …) or factual knowledge-seeking. Open-ended tasks requiring high levels of creativity, such as most uses of a language model as a chatbot, should use sampling instead. Assisted generation is naturally designed for greedy decoding, but that doesn’t mean that you can’t use assisted generation with multinomial sampling!

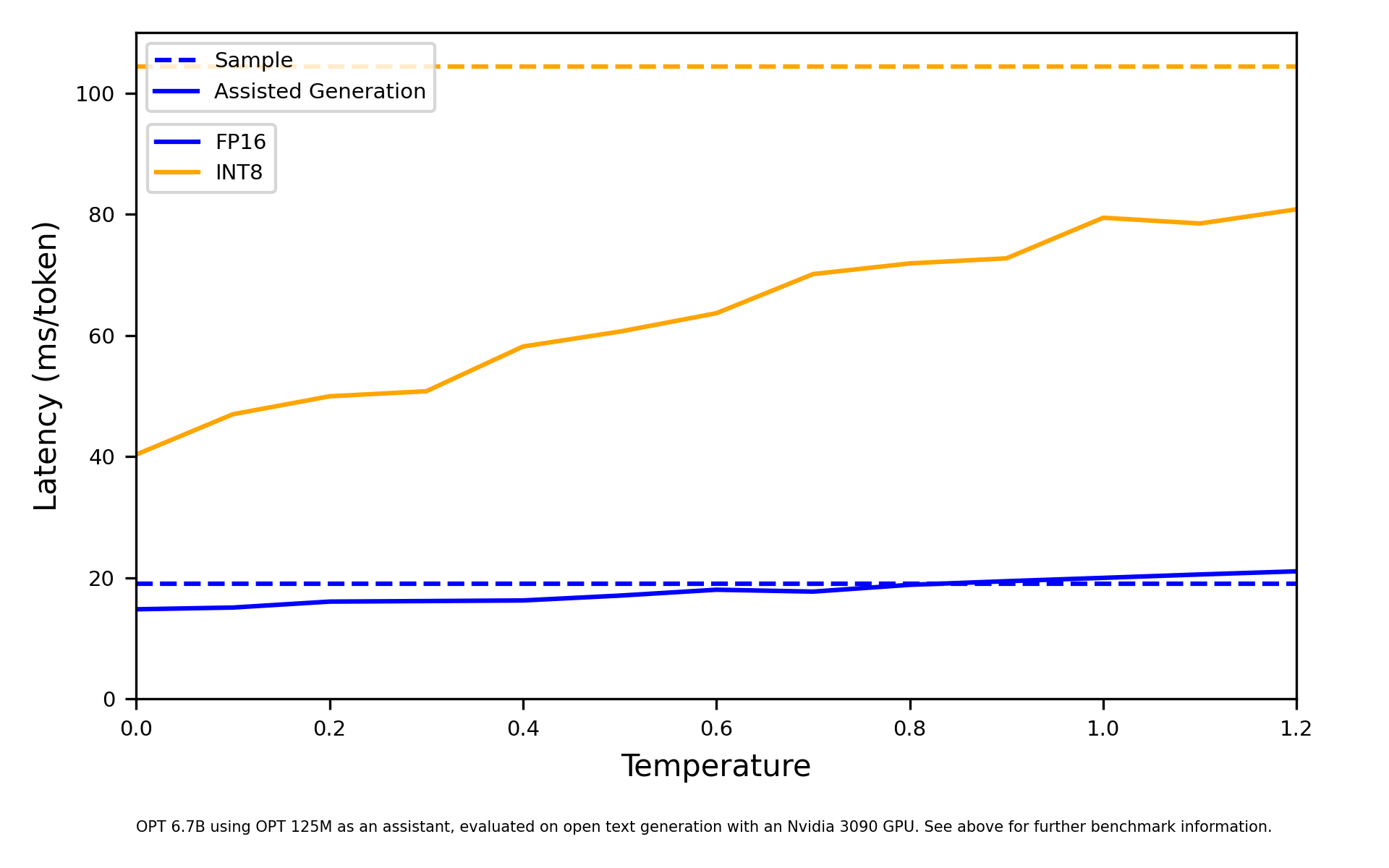

Drawing samples from a probability distribution for the next token will cause our greedy assistant to fail more often, reducing its latency benefits. However, we can control how sharp the probability distribution for the next tokens is, using the temperature coefficient that’s present in most sampling-based applications. At one extreme, with temperatures close to 0, sampling will approximate greedy decoding, favoring the most likely token. At the other extreme, with the temperature set to values much larger than 1, sampling will be chaotic, approaching a draw from a uniform distribution. Low temperatures are, therefore, more favorable to your assistant model, retaining most of the latency benefits from assisted generation, as we can see below.
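The effect of temperature is easy to check numerically. The sketch below assumes the common convention of dividing the logits by the temperature before the softmax; the logits themselves are hypothetical.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Softmax over logits divided by the temperature (assumed convention)."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.0]  # hypothetical next-token logits
for temperature in (0.1, 1.0, 10.0):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

At a temperature of 0.1 almost all of the probability mass sits on the most likely token, which is the token a greedy assistant proposes; at 10 the three probabilities are nearly equal, so the assistant’s guess is confirmed far less often.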

Why don’t you see it for yourself, to get a feel for assisted generation?

Future directions

Assisted generation shows that modern text generation strategies are ripe for optimization. Understanding that it is currently a memory-bound problem, not a compute-bound problem, allows us to apply simple heuristics to get the most out of the available memory bandwidth, alleviating the bottleneck. We believe that further refinement of the use of assistant models will get us even bigger latency reductions – for instance, we may be able to skip a few more forward passes if we request the assistant to generate several candidate continuations. Naturally, releasing high-quality small models to be used as assistants will be critical to realizing and amplifying the benefits.

Assisted generation was initially released in our 🤗 Transformers library, to be used with the .generate() function, and we expect to offer it throughout the Hugging Face universe. Its implementation is also completely open-source so, if you’re working on text generation and not using our tools, feel free to use it as a reference.

Finally, assisted generation resurfaces a crucial question in text generation. The field has been evolving under the constraint that all new tokens are the result of a fixed amount of compute, for a given model: one token per homogeneous forward pass, in pure autoregressive fashion. This blog post reinforces the idea that it shouldn’t be the case: large subsections of the generated output can be equally generated by models that are a fraction of the size. For that, we’ll need new model architectures and decoding methods – we’re excited to see what the future holds!

Related Work

After the original release of this blog post, it came to my attention that other works have explored the same core principle (use a forward pass to validate longer continuations). In particular, have a look at the following works:

Citation

@misc {gante2023assisted,
    author    = { {Joao Gante} },
    title     = { Assisted Generation: a new direction toward low-latency text generation },
    year      = 2023,
    url       = { https://huggingface.co/blog/assisted-generation },
    doi       = { 10.57967/hf/0638 },
    publisher = { Hugging Face Blog }
}

Acknowledgements

I’d like to thank Sylvain Gugger, Nicolas Patry, and Lewis Tunstall for sharing many valuable suggestions to improve this blog post. Finally, kudos to Chunte Lee for designing the gorgeous cover you can see on our web page.