LLMs are known to be large, and running or training them on consumer hardware is a huge challenge for users and for accessibility.

Our LLM.int8 blogpost showed how the techniques within the LLM.int8 paper were integrated in transformers using the bitsandbytes library.

As we strive to make models even more accessible to anyone, we decided to collaborate with bitsandbytes again to allow users to run models in 4-bit precision. This includes a large majority of HF models, in any modality (text, vision, multi-modal, etc.). Users can also train adapters on top of 4bit models leveraging tools from the Hugging Face ecosystem. This is a new method introduced today in the QLoRA paper by Dettmers et al. The abstract of the paper is as follows:

We present QLoRA, an efficient finetuning approach that reduces memory usage enough to finetune a 65B parameter model on a single 48GB GPU while preserving full 16-bit finetuning task performance. QLoRA backpropagates gradients through a frozen, 4-bit quantized pretrained language model into Low Rank Adapters (LoRA). Our best model family, which we name Guanaco, outperforms all previous openly released models on the Vicuna benchmark, reaching 99.3% of the performance level of ChatGPT while only requiring 24 hours of finetuning on a single GPU. QLoRA introduces a number of innovations to save memory without sacrificing performance: (a) 4-bit NormalFloat (NF4), a new data type that is information theoretically optimal for normally distributed weights (b) double quantization to reduce the average memory footprint by quantizing the quantization constants, and (c) paged optimizers to manage memory spikes. We use QLoRA to finetune more than 1,000 models, providing a detailed analysis of instruction following and chatbot performance across 8 instruction datasets, multiple model types (LLaMA, T5), and model scales that would be infeasible to run with regular finetuning (e.g. 33B and 65B parameter models). Our results show that QLoRA finetuning on a small high-quality dataset leads to state-of-the-art results, even when using smaller models than the previous SoTA. We provide a detailed analysis of chatbot performance based on both human and GPT-4 evaluations showing that GPT-4 evaluations are a cheap and reasonable alternative to human evaluation. Furthermore, we find that current chatbot benchmarks are not trustworthy to accurately evaluate the performance levels of chatbots. A lemon-picked analysis demonstrates where Guanaco fails compared to ChatGPT. We release all of our models and code, including CUDA kernels for 4-bit training.

Resources

This blogpost and release come with several resources to get started with 4bit models and QLoRA:

Introduction

If you are not familiar with model precisions and the most common data types (float16, float32, bfloat16, int8), we advise you to carefully read the introduction in our first blogpost, which goes over the details of these concepts in simple terms with visualizations.

For more information we recommend reading the basics of floating point representation through this wikibook document.

The recent QLoRA paper explores different data types, 4-bit Float and 4-bit NormalFloat. We will discuss the 4-bit Float data type here since it is easier to understand.

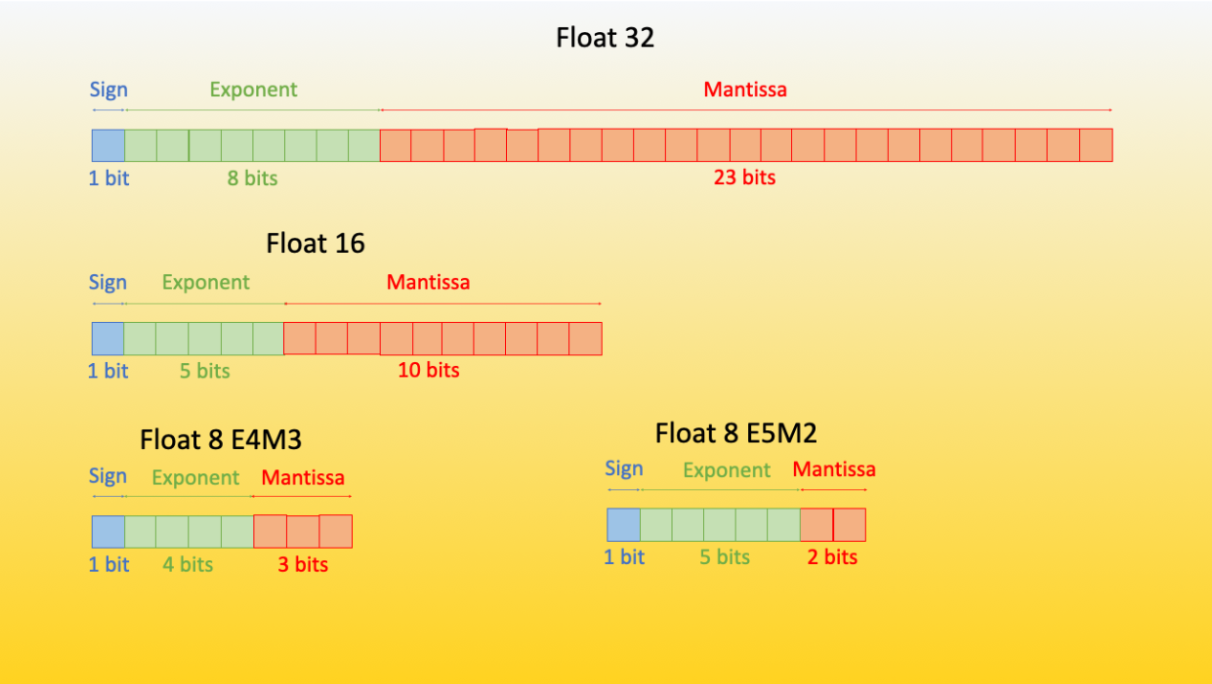

FP8 and FP4 stand for Floating Point 8-bit and 4-bit precision, respectively. They are part of the minifloats family of floating point values (among other precisions, the minifloats family also includes bfloat16 and float16).

Let’s first look at how to represent floating point values in FP8 format, then understand what the FP4 format looks like.

FP8 format

As discussed in our previous blogpost, a floating point number contains n bits, with each bit falling into a specific category that is responsible for representing a component of the number (sign, mantissa and exponent). The role of each component is described below.

The FP8 (floating point 8) format was first introduced in the paper “FP8 for Deep Learning” with two different FP8 encodings: E4M3 (4-bit exponent and 3-bit mantissa) and E5M2 (5-bit exponent and 2-bit mantissa).

Overview of Floating Point 8 (FP8) format. Source: Original content from sgugger

Although the precision is substantially reduced by reducing the number of bits from 32 to 8, both versions can be used in a variety of situations. Currently one can use the Transformer Engine library, which is also integrated with the HF ecosystem through accelerate.

The floating point values that can be represented in the E4M3 format are in the range -448 to 448, whereas in the E5M2 format, as the number of exponent bits increases, the range increases to -57344 to 57344, but with a loss of precision because the number of possible representations remains constant.

It has been empirically shown that E4M3 is best suited for the forward pass, and E5M2 is best suited for the backward computation.
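
As a quick sanity check of the two ranges quoted above, the largest finite value of each encoding can be computed by hand. The sketch below assumes the conventions usually described for FP8: an exponent bias of 7 for E4M3 and 15 for E5M2, with E4M3 reserving only its all-ones pattern for NaN and E5M2 reserving the whole top exponent for infinities and NaN. These details are not spelled out in this post, and the helper name `largest_value` is made up for illustration.

```python
def largest_value(exp_field: int, mantissa_field: int, mantissa_bits: int, bias: int) -> float:
    """Value of a normal minifloat: 2^(exp_field - bias) * (1 + mantissa_field / 2^mantissa_bits)."""
    return 2.0 ** (exp_field - bias) * (1 + mantissa_field / 2 ** mantissa_bits)


# E4M3 (assumed bias 7): exponent 1111 is usable, but mantissa 111 there is
# reserved for NaN, so the largest mantissa is 110.
e4m3_max = largest_value(exp_field=0b1111, mantissa_field=0b110, mantissa_bits=3, bias=7)

# E5M2 (assumed bias 15): exponent 11111 is reserved for inf/NaN, so the
# largest usable exponent field is 11110, with mantissa 11.
e5m2_max = largest_value(exp_field=0b11110, mantissa_field=0b11, mantissa_bits=2, bias=15)

print(e4m3_max, e5m2_max)  # 448.0 57344.0
```

One extra exponent bit multiplies the reachable range by more than a hundred, while one fewer mantissa bit halves the number of values available between consecutive powers of two.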

FP4 precision in a few words

The sign bit represents the sign (+/-), the exponent bits represent a base two raised to the power of the integer represented by the bits (e.g. 2^{010} = 2^{2} = 4), and the fraction or mantissa is the sum of the negative powers of two that are “active” for each bit that is “1”. If a bit is “0”, the fraction remains unchanged for that power 2^-i, where i is the position of the bit in the bit sequence. For example, for mantissa bits 1010 we have (2^-1 + 0 + 2^-3 + 0) = (0.5 + 0.125) = 0.625. To get a value, we add 1 to the fraction and multiply all results together. For example, with 2 exponent bits and one mantissa bit, the representation 1101 would be:

-1 * 2^(2) * (1 + 2^-1) = -1 * 4 * 1.5 = -6
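
The decoding rule above can be sketched in a few lines of Python. This follows the simplified description in this section only: there is no exponent bias and no special handling of zero, subnormals, infinities or NaN, so it is not a faithful decoder for any real FP8 or FP4 implementation. The function names are hypothetical.

```python
def mantissa_fraction(mantissa: str) -> float:
    """Sum 2^-i for every bit that is '1', where i is the 1-based bit position."""
    return sum(2.0 ** -(i + 1) for i, bit in enumerate(mantissa) if bit == "1")


def decode_minifloat(bits: str, exponent_bits: int, mantissa_bits: int) -> float:
    """Decode sign | exponent | mantissa using the simplified rule from the text."""
    if len(bits) != 1 + exponent_bits + mantissa_bits:
        raise ValueError("bit string length does not match the requested format")
    sign = -1.0 if bits[0] == "1" else 1.0
    exponent = int(bits[1:1 + exponent_bits], 2)   # integer encoded by the exponent bits
    fraction = mantissa_fraction(bits[1 + exponent_bits:])
    return sign * 2.0 ** exponent * (1.0 + fraction)


print(mantissa_fraction("1010"))                                        # 0.625
print(decode_minifloat("1101", exponent_bits=2, mantissa_bits=1))      # -6.0
```

Changing `exponent_bits` and `mantissa_bits` for the same 4-bit string is an easy way to see how the exponent/mantissa split trades range for precision.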

For FP4 there is no fixed format, and as such one can try different combinations of mantissa and exponent bits. In general, 3 exponent bits do a bit better in most cases. But sometimes 2 exponent bits and a mantissa bit yield better performance.

QLoRA paper, a new way of democratizing quantized large transformer models

In a few words, QLoRA reduces the memory usage of LLM finetuning without performance tradeoffs compared to standard 16-bit model finetuning. This method enables 33B model finetuning on a single 24GB GPU and 65B model finetuning on a single 46GB GPU.

More specifically, QLoRA uses 4-bit quantization to compress a pretrained language model. The LM parameters are then frozen and a relatively small number of trainable parameters are added to the model in the form of Low-Rank Adapters. During finetuning, QLoRA backpropagates gradients through the frozen 4-bit quantized pretrained language model into the Low-Rank Adapters. The LoRA layers are the only parameters being updated during training. Read more about LoRA in the original LoRA paper.
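
To illustrate the idea of a frozen weight plus a small trainable adapter, here is a minimal pure-Python sketch of a single linear layer. It is a toy, not the PEFT implementation: the `alpha / r` scaling and the zero initialization of `B` are conventions taken from the LoRA paper and assumed here, the dimensions are arbitrary, and `matvec` and `lora_forward` are hypothetical helper names.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha, r):
    """Frozen path W @ x plus the scaled low-rank update B @ (A @ x)."""
    base = matvec(W, x)                  # frozen pretrained weights
    update = matvec(B, matvec(A, x))     # rank-r adapter path
    return [b + (alpha / r) * u for b, u in zip(base, update)]


random.seed(0)
d_in, d_out, r, alpha = 8, 8, 2, 4
W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]   # frozen
A = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]    # trainable
B = [[0.0] * r for _ in range(d_out)]                                    # trainable, zero init
x = [random.gauss(0, 1) for _ in range(d_in)]

frozen_params = d_in * d_out
trainable_params = r * (d_in + d_out)
print(frozen_params, trainable_params)  # 64 32
```

With `B` initialized to zero, the adapted layer starts out identical to the frozen one, and only `A` and `B` would receive gradient updates. At realistic layer sizes the gap between the two parameter counts is far larger than in this toy setup.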

QLoRA has one storage data type (usually 4-bit NormalFloat) for the base model weights and a computation data type (16-bit BrainFloat) used to perform computations. QLoRA dequantizes weights from the storage data type to the computation data type to perform the forward and backward passes, but only computes weight gradients for the LoRA parameters, which use 16-bit bfloat. The weights are decompressed only when they are needed, therefore the memory usage stays low during training and inference.

QLoRA tuning is shown to match 16-bit finetuning methods in a wide range of experiments. In addition, the Guanaco models, which use QLoRA finetuning for LLaMA models on the OpenAssistant dataset (OASST1), are state-of-the-art chatbot systems and are close to ChatGPT on the Vicuna benchmark. This is an additional demonstration of the power of QLoRA tuning.
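
To make the storage/compute split more concrete, here is a toy sketch in plain Python. It is not the bitsandbytes kernel: it uses 16 evenly spaced levels as a stand-in for the NF4 codebook, a single absmax scale per block, and hypothetical helper names (`quantize_block`, `dequantize_block`). The point is only that small integer codes plus one scale are what stay in memory, while full-precision values are rebuilt right before they are used.

```python
# 16 representable levels in [-1, 1]; a uniform stand-in for a 4-bit codebook.
LEVELS = [-1.0 + 2.0 * i / 15 for i in range(16)]


def quantize_block(block):
    """Return 4-bit codes (ints in 0..15) and the absmax scale of the block."""
    absmax = max(abs(w) for w in block) or 1.0   # avoid dividing by zero
    codes = [
        min(range(16), key=lambda i: abs(LEVELS[i] - w / absmax))
        for w in block
    ]
    return codes, absmax


def dequantize_block(codes, absmax):
    """Rebuild approximate weights in the compute dtype (plain floats here)."""
    return [LEVELS[c] * absmax for c in codes]


weights = [0.12, -0.5, 0.33, 0.9, -0.9, 0.0, 0.05, -0.27]
codes, absmax = quantize_block(weights)        # what would be stored
restored = dequantize_block(codes, absmax)     # materialized only when needed

x = [1.0] * len(weights)
y = sum(w * xi for w, xi in zip(restored, x))  # the matmul runs on dequantized values
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, round(max_error, 4))
```

The rounding error of each weight is bounded by half a level spacing times the block scale, which is why the choice of codebook (uniform here, NF4 in the paper) matters for accuracy.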

For a more detailed reading, we recommend you read the QLoRA paper.

How to use it in transformers?

In this section, let us introduce the transformers integration of this method, how to use it, and which models can be effectively quantized.

Getting started

As a quickstart, load a model in 4bit by (at the time of this writing) installing accelerate and transformers from source, and make sure you have installed the latest version of the bitsandbytes library (0.39.0).

    pip install -q -U bitsandbytes
    pip install -q -U git+https://github.com/huggingface/transformers.git
    pip install -q -U git+https://github.com/huggingface/peft.git
    pip install -q -U git+https://github.com/huggingface/accelerate.git

Quickstart

The basic way to load a model in 4bit is to pass the argument load_in_4bit=True when calling the from_pretrained method, together with a device map (pass "auto" to get a device map that will be automatically inferred).

    from transformers import AutoModelForCausalLM

    model = AutoModelForCausalLM.from_pretrained("facebook/opt-350m", load_in_4bit=True, device_map="auto")
    ...

That's all you need!

As a general rule, we recommend that users not manually set a device once the model has been loaded with device_map. So any device assignment call to the model, or to any of the model’s submodules, should be avoided after that line, unless you know what you are doing.

Keep in mind that loading a quantized model will automatically cast the model’s other submodules into float16 dtype. You can change this behavior (if, for example, you want to have the layer norms in float32) by passing torch_dtype=dtype to the from_pretrained method.

Advanced usage

You can play with different variants of 4bit quantization, such as NF4 (4-bit NormalFloat) or pure FP4 quantization, selected with the bnb_4bit_quant_type argument. Based on theoretical considerations and empirical results from the paper, we recommend using NF4 quantization for better performance.

Other options include bnb_4bit_use_double_quant, which uses a second quantization after the first one to save an additional 0.4 bits per parameter. And finally, the compute type. While 4-bit bitsandbytes stores weights in 4 bits, the computation still happens in 16 or 32 bits, and here any combination can be chosen (float16, bfloat16, float32, etc).

The matrix multiplication and training will be faster if one uses a 16-bit compute dtype (the default is torch.float32). One should leverage the recent BitsAndBytesConfig from transformers to change these parameters. Below is an example of loading a model in 4bit using NF4 quantization with double quantization and the compute dtype bfloat16 for faster training:

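    import torch
    from transformers import AutoModelForCausalLM, BitsAndBytesConfig

    # NF4 storage, nested (double) quantization, bfloat16 compute dtype
    nf4_config = BitsAndBytesConfig(
        load_in_4bit=True,
        bnb_4bit_quant_type="nf4",
        bnb_4bit_use_double_quant=True,
        bnb_4bit_compute_dtype=torch.bfloat16,
    )

    model_id = "facebook/opt-350m"  # same checkpoint as in the quickstart
    model_nf4 = AutoModelForCausalLM.from_pretrained(model_id, quantization_config=nf4_config)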

Changing the compute dtype

As mentioned above, you can also change the compute dtype of the quantized model by just changing the bnb_4bit_compute_dtype argument in BitsAndBytesConfig.

    import torch
    from transformers import BitsAndBytesConfig

    quantization_config = BitsAndBytesConfig(
        load_in_4bit=True,
        bnb_4bit_compute_dtype=torch.bfloat16,
    )

Nested quantization

To enable nested quantization, you can use the bnb_4bit_use_double_quant argument in BitsAndBytesConfig. This will enable a second quantization after the first one to save an additional 0.4 bits per parameter. We also use this feature in the training Google colab notebook.

    from transformers import AutoModelForCausalLM, BitsAndBytesConfig

    double_quant_config = BitsAndBytesConfig(
        load_in_4bit=True,
        bnb_4bit_use_double_quant=True,
    )

    model_id = "facebook/opt-350m"  # same checkpoint as in the quickstart
    model_double_quant = AutoModelForCausalLM.from_pretrained(model_id, quantization_config=double_quant_config)
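
The figure of 0.4 bits per parameter can be reproduced with a little arithmetic. The numbers below (blocks of 64 weights with one 32-bit constant each, then 8-bit constants regrouped in blocks of 256 with one 32-bit constant per group) are the settings described in the QLoRA paper, not something configured in the snippet above, so treat them as assumptions of this sketch.

```python
def plain_overhead_bits(block_size: int = 64, constant_bits: int = 32) -> float:
    """Extra bits per parameter spent on one quantization constant per block."""
    return constant_bits / block_size


def nested_overhead_bits(
    block_size: int = 64,
    quantized_constant_bits: int = 8,
    second_block_size: int = 256,
    second_constant_bits: int = 32,
) -> float:
    """Overhead once the first-level constants are themselves quantized."""
    first_level = quantized_constant_bits / block_size
    second_level = second_constant_bits / (block_size * second_block_size)
    return first_level + second_level


plain = plain_overhead_bits()        # 0.5 bits per parameter
nested = nested_overhead_bits()      # about 0.127 bits per parameter
saved = plain - nested               # about 0.373, i.e. roughly 0.4
print(plain, round(nested, 3), round(saved, 3))
```

The saving looks small per parameter, but it scales linearly with model size, which is why it matters most when a model barely fits in memory.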

And of course, as mentioned at the beginning of the section, all of these components are composable. You can combine all these parameters together to find the optimal use case for you. A rule of thumb is: use double quant if you have problems with memory, use NF4 for higher precision, and use a 16-bit dtype for faster finetuning. For instance, in the inference demo, we use nested quantization, bfloat16 compute dtype and NF4 quantization to fit gpt-neo-x-20b (40GB) entirely in 4bit on a single 16GB GPU.
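
A rough back-of-the-envelope estimate shows why a 20B-parameter model can fit on a 16GB GPU. The sketch below counts weights only (no activations, no layers kept in higher precision, no framework overhead), uses a round 20 billion parameters, and assumes an overhead of about 0.127 bits per parameter for the quantization constants with nested quantization; the helper name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Memory taken by the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


num_params = 20e9                                        # round figure for a 20B model
fp16_gb = weight_memory_gb(num_params, 16)               # half precision baseline
nf4_gb = weight_memory_gb(num_params, 4 + 0.127)         # 4-bit weights + assumed constants
print(round(fp16_gb, 1), round(nf4_gb, 1))
```

Under these assumptions the 4-bit weights take roughly a quarter of the half-precision footprint, which leaves some headroom on a 16GB card for activations during inference.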

Common questions

In this section, we will also address some common questions anyone could have regarding this integration.

Does FP4 quantization have any hardware requirements?

Note that this method is only compatible with GPUs, hence it is not possible to quantize models in 4bit on a CPU. Among GPUs, there should not be any hardware requirement for this method, therefore any GPU can be used to run the 4bit quantization as long as you have CUDA>=11.2 installed.

Keep in mind also that the computation is not done in 4bit: the weights and activations are compressed to that format, and the computation is still kept in the desired or native dtype.

What are the supported models?

Similarly to the integration of LLM.int8 presented in this blogpost, the integration heavily relies on the accelerate library. Therefore, any model that supports accelerate loading (i.e. the device_map argument when calling from_pretrained) should be quantizable in 4bit. Note also that this is totally agnostic to modalities: as long as the models can be loaded with the device_map argument, it is possible to quantize them.

At the time of writing, this includes the most used architectures, such as Llama, OPT, GPT-Neo and GPT-NeoX for text models, Blip2 for multimodal models, and so on.

At the time of writing, the models that support accelerate are:

    [
        'bigbird_pegasus', 'blip_2', 'bloom', 'bridgetower', 'codegen', 'deit', 'esm',
        'gpt2', 'gpt_bigcode', 'gpt_neo', 'gpt_neox', 'gpt_neox_japanese', 'gptj', 'gptsan_japanese',
        'lilt', 'llama', 'longformer', 'longt5', 'luke', 'm2m_100', 'mbart', 'mega', 'mt5', 'nllb_moe',
        'open_llama', 'opt', 'owlvit', 'plbart', 'roberta', 'roberta_prelayernorm', 'rwkv', 'switch_transformers',
        't5', 'vilt', 'vit', 'vit_hybrid', 'whisper', 'xglm', 'xlm_roberta'
    ]

Note that if your favorite model is not there, you can open a Pull Request or raise an issue in transformers to add support for accelerate loading for that architecture.

Can we train 4bit/8bit models?

It is not possible to perform pure 4bit training on these models. However, you can train these models by leveraging parameter efficient fine tuning methods (PEFT) and train, for example, adapters on top of them. That is what is done in the paper and is officially supported by the PEFT library from Hugging Face. We also provide a training notebook and recommend users to check the QLoRA repository if they are interested in replicating the results from the paper.

The output activations of the original (frozen) pretrained weights (left) are augmented by a low rank adapter comprised of weight matrices A and B (right).

What other consequences are there?

This integration can have several positive consequences for the community and AI research, as it can affect multiple use cases and possible applications.

In RLHF (Reinforcement Learning with Human Feedback) it is possible to load a single base model in 4bit and train multiple adapters on top of it, one for the reward modeling and another for the value policy training. A more detailed blogpost and announcement will be made soon about this use case.

We have also run some benchmarks on the impact of this quantization method on training large models on consumer hardware. We ran several experiments on finetuning 2 different architectures, Llama 7B (15GB in fp16) and Llama 13B (27GB in fp16), on an NVIDIA T4 (16GB), and here are the results:

| Model name | Half precision model size (in GB) | Hardware type / total VRAM | Quantization method (CD = compute dtype, GC = gradient checkpointing, NQ = nested quantization) | batch_size | gradient accumulation steps | optimizer | seq_len | Result |
|---|---|---|---|---|---|---|---|---|
| <10B scale models | | | | | | | | |
| decapoda-research/llama-7b-hf | 14GB | 1xNVIDIA-T4 / 16GB | LLM.int8 (8-bit) + GC | 1 | 4 | AdamW | 512 | No OOM |
| decapoda-research/llama-7b-hf | 14GB | 1xNVIDIA-T4 / 16GB | LLM.int8 (8-bit) + GC | 1 | 4 | AdamW | 1024 | OOM |
| decapoda-research/llama-7b-hf | 14GB | 1xNVIDIA-T4 / 16GB | 4bit + NF4 + bf16 CD + no GC | 1 | 4 | AdamW | 512 | No OOM |
| decapoda-research/llama-7b-hf | 14GB | 1xNVIDIA-T4 / 16GB | 4bit + FP4 + bf16 CD + no GC | 1 | 4 | AdamW | 512 | No OOM |
| decapoda-research/llama-7b-hf | 14GB | 1xNVIDIA-T4 / 16GB | 4bit + NF4 + bf16 CD + no GC | 1 | 4 | AdamW | 1024 | OOM |
| decapoda-research/llama-7b-hf | 14GB | 1xNVIDIA-T4 / 16GB | 4bit + FP4 + bf16 CD + no GC | 1 | 4 | AdamW | 1024 | OOM |
| decapoda-research/llama-7b-hf | 14GB | 1xNVIDIA-T4 / 16GB | 4bit + NF4 + bf16 CD + GC | 1 | 4 | AdamW | 1024 | No OOM |
| 10B+ scale models | | | | | | | | |
| decapoda-research/llama-13b-hf | 27GB | 2xNVIDIA-T4 / 32GB | LLM.int8 (8-bit) + GC | 1 | 4 | AdamW | 512 | No OOM |
| decapoda-research/llama-13b-hf | 27GB | 1xNVIDIA-T4 / 16GB | LLM.int8 (8-bit) + GC | 1 | 4 | AdamW | 512 | OOM |
| decapoda-research/llama-13b-hf | 27GB | 1xNVIDIA-T4 / 16GB | 4bit + FP4 + bf16 CD + no GC | 1 | 4 | AdamW | 512 | OOM |
| decapoda-research/llama-13b-hf | 27GB | 1xNVIDIA-T4 / 16GB | 4bit + FP4 + fp16 CD + no GC | 1 | 4 | AdamW | 512 | OOM |
| decapoda-research/llama-13b-hf | 27GB | 1xNVIDIA-T4 / 16GB | 4bit + NF4 + fp16 CD + GC | 1 | 4 | AdamW | 512 | No OOM |
| decapoda-research/llama-13b-hf | 27GB | 1xNVIDIA-T4 / 16GB | 4bit + NF4 + fp16 CD + GC | 1 | 4 | AdamW | 1024 | OOM |
| decapoda-research/llama-13b-hf | 27GB | 1xNVIDIA-T4 / 16GB | 4bit + NF4 + fp16 CD + GC + NQ | 1 | 4 | AdamW | 1024 | No OOM |

We have used the recent SFTTrainer from the TRL library, and the benchmarking script can be found here.

Playground

Try out the Guanaco model cited in the paper on the playground.

Acknowledgements

The HF team would like to acknowledge all the people from the University of Washington involved in this project, and to thank them for making it available to the community.

The authors would also like to thank Pedro Cuenca for kindly reviewing the blogpost, and Olivier Dehaene and Omar Sanseviero for their quick and strong support for the integration of the paper’s artifacts on the HF Hub.