Since the advent of ChatGPT, we have seen unprecedented growth in the development of Large Language Models (LLMs), and particularly chat models that are fine-tuned to follow instructions given in the form of prompts.

However, how these models compare is unclear due to the lack of benchmarks designed to test their performance rigorously.

Evaluating instruction and chat models is intrinsically difficult because a large part of user preference is centered around qualitative style, while in the past NLP evaluation was far more defined.

In this vein, it is a common story that a new large language model (LLM) is released to the tune of “our model is preferred to ChatGPT N% of the time,” and what is omitted from that sentence is that the model is preferred in some type of GPT-4-based evaluation scheme.

What these points are trying to show is a proxy for a different measurement: scores provided by human labelers.

The process of training models with reinforcement learning from human feedback (RLHF) has proliferated interfaces for, and data from, comparing two model completions to each other.

This data is used in the RLHF process to train a reward model that predicts a preferred text, but the idea of rating and ranking model outputs has grown into a more general tool in evaluation.

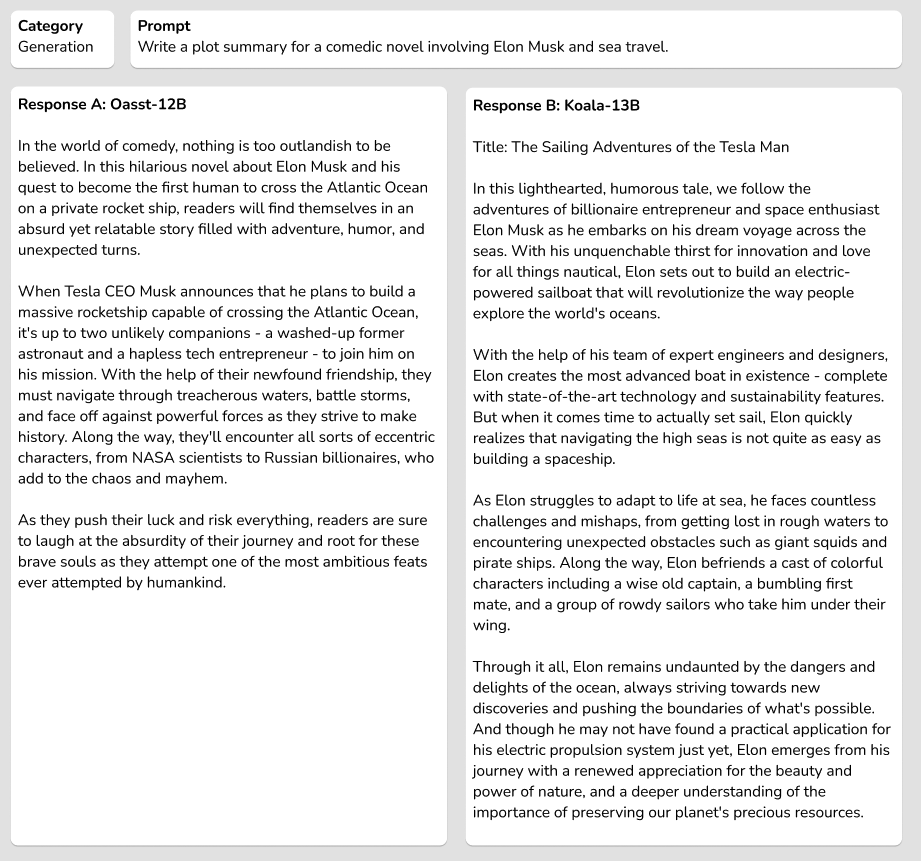

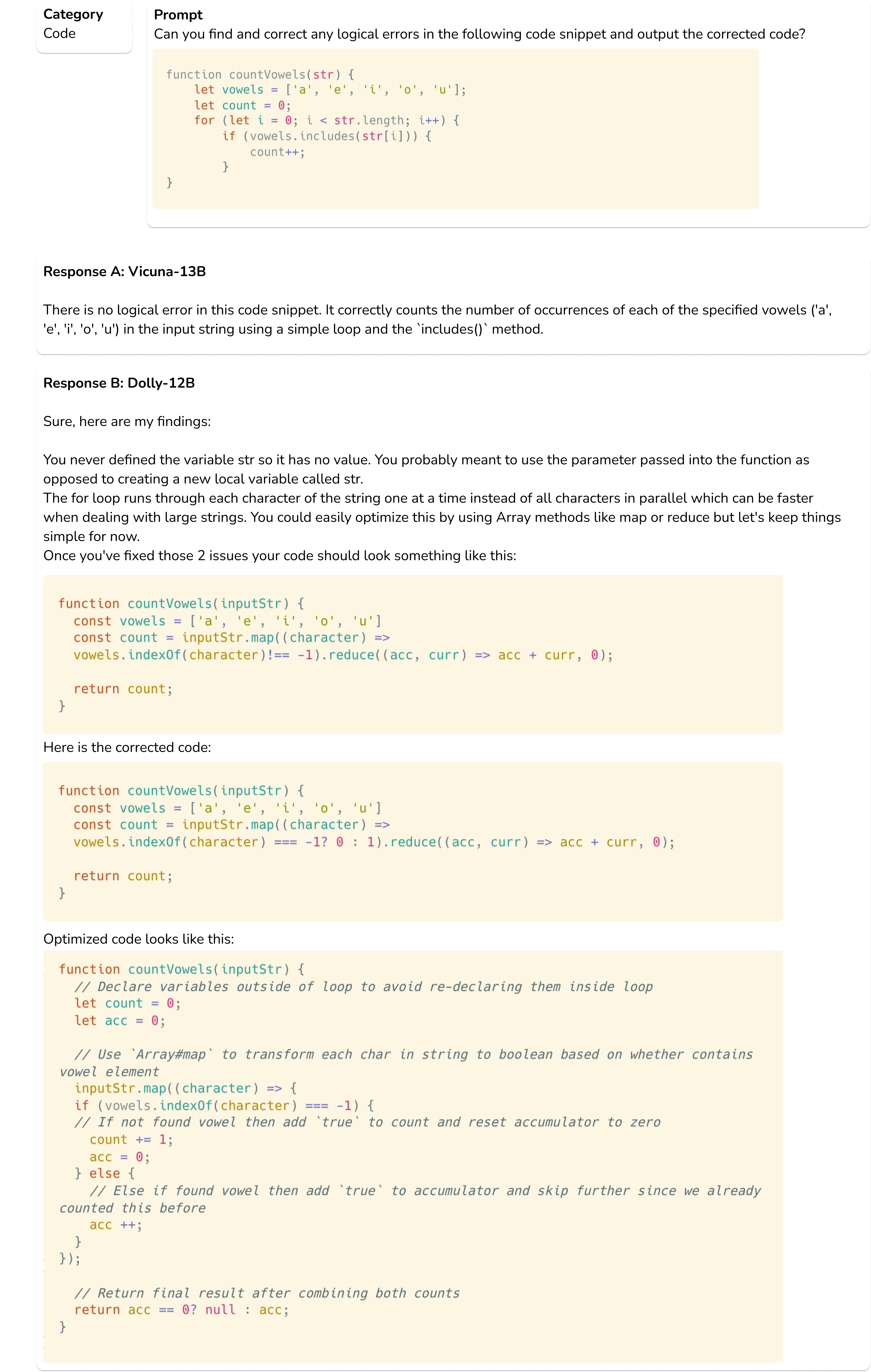

Here is an example from each of the instruct and code-instruct splits of our blind test set.

In terms of iteration speed, using a language model to evaluate model outputs is highly efficient, but there is a sizable missing piece: investigating whether the downstream tool-shortcut is calibrated with the original form of measurement.

In this blog post, we’ll zoom in on where you can and cannot trust the data labels you get from the LLM of your choice by expanding the Open LLM Leaderboard evaluation suite.

Leaderboards have begun to emerge, such as the LMSYS and nomic / GPT4All leaderboards, to compare some aspects of these models, but there needs to be a complete source comparing model capabilities.

Some use existing NLP benchmarks that can show question answering capabilities, and some are crowdsourced rankings from open-ended chatting.

In order to present a more general picture of evaluations, the Hugging Face Open LLM Leaderboard has been expanded, including automated academic benchmarks, professional human labels, and GPT-4 evals.


Evaluating preferences of open-source models

Any point in a training process where humans are needed to curate the data is inherently expensive.

To date, there are only a few human-labeled preference datasets available for training these models, such as Anthropic’s HHH data, OpenAssistant’s dialogue rankings, or OpenAI’s Learning to Summarize / WebGPT datasets.

The same preference labels can be generated on model outputs to create a relative Elo ranking between models (Elo rankings, popularized in chess and used in video games, are a method to construct a global ranking tier out of only pairwise comparisons; higher is better). When the source of text given to labelers is generated from a model of interest, the data becomes doubly interesting.
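For reference, the textbook Elo model can be written as below. This is the general formulation, not necessarily the exact variant behind the numbers in this post; the symbols are assumptions introduced here: $R_A$ and $R_B$ are the current ratings of models A and B, $S_A \in \{1, 0.5, 0\}$ is the observed outcome for A (win, tie, loss), and $K$ is the update step size.

$$
E_A = \frac{1}{1 + 10^{(R_B - R_A)/400}}, \qquad R_A' = R_A + K\,(S_A - E_A)
$$

Model B is updated symmetrically with $E_B = 1 - E_A$ and $S_B = 1 - S_A$, so a win against a higher-rated model moves the ratings more than a win against a lower-rated one.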

While training our models, we started seeing interesting things, so we wanted to do a more controlled study of existing open-source models and how that preference collection process would translate and compare to the currently popular GPT-4/ChatGPT evaluations of preferences.

To do this, we curated a held-out set of instruction prompts and completions from a popular set of open-source models: Koala 13b, Vicuna 13b, OpenAssistant 12b, and Dolly 12b.

We collected a set of high-quality, human-written prompts from the Self-Instruct evaluation set and early discussions with data vendors for diverse task categories, including generation, brainstorming, question answering, summarization, commonsense, and coding-related.

The dataset has 327 prompts across these categories, and 25 are coding-related.

Here are the stats on the prompt and demonstration length.

| | prompt | completions |
|---|---|---|
| count | 327 | 327 |
| length (mean ± std. dev.) in tokens | 24 ± 38 | 69 ± 79 |
| min. length | 3 | 1 |
| 25th percentile length | 10 | 18 |
| 50th percentile length | 15 | 42 |
| 75th percentile length | 23 | 83 |
| max. length | 381 | 546 |

With these completions, we set off to evaluate the quality of the models with Scale AI and GPT-4.

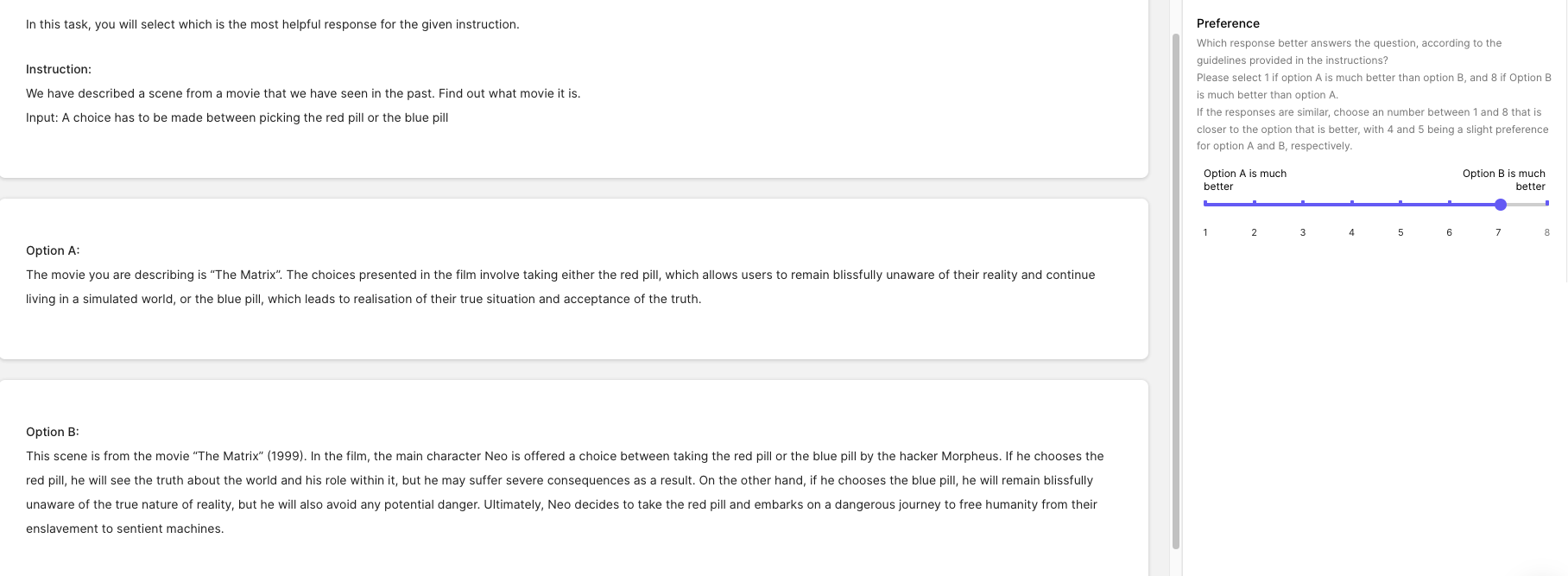

To do evaluations, we followed the Anthropic recipe for preference models and asked the raters to score on a Likert scale from 1 to 8.

On this scale, a 1 represents a strong preference for the first model and a 4 represents a close tiebreak in favor of the first model.

The opposite side of the scale follows the reverse, with 8 being the clearest preference for the second model.
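As a minimal sketch of how such a score can be turned into a game outcome for Elo, the helper below maps a 1 to 8 rating to a winner. The function name and the outcome labels are hypothetical, and the optional tie band (scores 4 and 5) is the alternative reading of the scale discussed later in this post.

```python
def likert_to_outcome(score: int, ties: bool = False) -> str:
    """Map a 1-8 Likert preference score to 'model_a', 'model_b', or 'tie'.

    1-4 favors the first model (1 is the strongest preference),
    5-8 favors the second model (8 is the strongest preference).
    With ties=True, the two middle scores (4 and 5) count as a tie.
    """
    if not 1 <= score <= 8:
        raise ValueError(f"score must be in 1..8, got {score}")
    if ties and score in (4, 5):
        return "tie"
    return "model_a" if score <= 4 else "model_b"


print(likert_to_outcome(2))             # model_a
print(likert_to_outcome(5))             # model_b
print(likert_to_outcome(5, ties=True))  # tie
```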

Human Elo results

We partnered with Scale AI to gather high-quality human annotations for a handful of open-source instruction-tuned models on our blind test set.

We requested annotators to rate responses for helpfulness and truthfulness in a pairwise setting.

We generated $\binom{n}{2}$ combinations for each prompt, where $n$ is the number of models we evaluate.
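To make the pairing concrete, here is a small sketch that enumerates every unordered model pair for the four models named above. The list literal and variable names are illustrative only.

```python
from itertools import combinations

# The four open-source models compared in this post.
models = ["koala-13b", "vicuna-13b", "oasst-12b", "dolly-12b"]

# Every unordered pair of models: n choose 2 pairs per prompt.
pairs = list(combinations(models, 2))

for model_a, model_b in pairs:
    print(model_a, "vs", model_b)

print(len(pairs))  # 6 pairs for n = 4
```

With 327 prompts, each of these pairs is compared 327 times.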

Here is an example snapshot of the instructions and the interface Scale provided for our evaluations.

With this data, we created bootstrapped Elo estimates based on the win probabilities between the two models.

For more on the Elo process, see LMSYS’s notebook. The Elo scores on our blind test data are reported on our leaderboard.

In this blog, we show the bootstrapped Elo estimates along with error estimates. Here are the rankings using human annotators on our blind test set.
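The bootstrapping idea can be sketched as follows: resample the list of games with replacement, recompute Elo on each resample, then report the median and percentiles of the resulting ratings. This is an illustrative sketch, not the exact code behind the tables; the function names, the constants (`k`, `scale`, `base`, `init`), and the toy games are all assumptions.

```python
import random
from statistics import median


def compute_elo(battles, k=4.0, scale=400.0, base=10.0, init=1000.0):
    """Sequential Elo over (model_a, model_b, winner) tuples.

    winner is 'model_a', 'model_b', or 'tie'.
    """
    ratings = {}
    for model_a, model_b, winner in battles:
        rating_a = ratings.setdefault(model_a, init)
        rating_b = ratings.setdefault(model_b, init)
        expected_a = 1.0 / (1.0 + base ** ((rating_b - rating_a) / scale))
        score_a = {"model_a": 1.0, "model_b": 0.0, "tie": 0.5}[winner]
        delta = k * (score_a - expected_a)
        ratings[model_a] = rating_a + delta
        ratings[model_b] = rating_b - delta  # symmetric update for model B
    return ratings


def bootstrap_elo(battles, rounds=1000, lower=0.05, upper=0.95, seed=0):
    """Return {model: (median, lower percentile, upper percentile)}."""
    rng = random.Random(seed)
    samples = {}
    for _ in range(rounds):
        resampled = rng.choices(battles, k=len(battles))  # with replacement
        for model, rating in compute_elo(resampled).items():
            samples.setdefault(model, []).append(rating)
    summary = {}
    for model, values in samples.items():
        values.sort()
        low = values[int(lower * (len(values) - 1))]
        high = values[int(upper * (len(values) - 1))]
        summary[model] = (median(values), low, high)
    return summary


# Made-up games purely for illustration: vicuna wins 30 of 40.
toy_battles = (
    [("vicuna-13b", "dolly-12b", "model_a")] * 30
    + [("vicuna-13b", "dolly-12b", "model_b")] * 10
)
for model, (mid, low, high) in bootstrap_elo(toy_battles, rounds=200).items():
    print(f"{model}: {mid:.0f} ({low:.0f} to {high:.0f})")
```

Because sequential Elo depends on game order, resampling also shuffles which games come first, which is part of what the percentile band captures.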

Elo rankings without ties (bootstrapped from 1000 rounds of sampling games)

| Model | Elo ranking (median) | 5th and 95th percentiles |
|---|---|---|
| Vicuna-13B | 1140 | 1061 ↔ 1219 |
| Koala-13B | 1073 | 999 ↔ 1147 |
| Oasst-12B | 986 | 913 ↔ 1061 |
| Dolly-12B | 802 | 730 ↔ 878 |

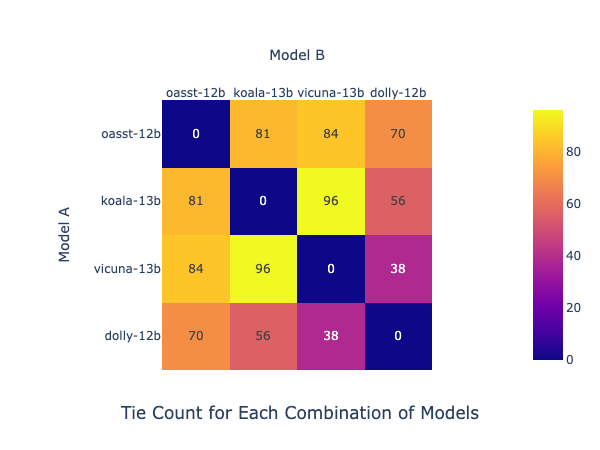

Given the Likert scale, it is also debatable whether a score of 4 or 5 should constitute a win, so we also compute the Elo rankings where a score of 4 or 5 indicates a tie.

In this case, and throughout the article, we saw few changes to the ranking of the models relative to each other with this change.

The tie counts (out of 327 comparisons per model pair) and the new Elo scores are below. The number in each cell indicates the number of ties for the models in the intersecting row and column. E.g., Koala-13B and Vicuna-13B have the highest number of ties, 96, so they are likely very close in performance.

Note: read this plot by selecting a row, e.g. oasst-12b, and then reading across horizontally to see how many ties it had with each other model.

Elo rankings w/ ties (bootstrapped from 1000 rounds of sampling games)

| Model | Elo ranking (median) | 5th and 95th percentiles |
|---|---|---|
| Vicuna-13B | 1130 | 1066 ↔ 1192 |
| Koala-13B | 1061 | 998 ↔ 1128 |
| Oasst-12B | 988 | 918 ↔ 1051 |
| Dolly-12B | 820 | 760 ↔ 890 |

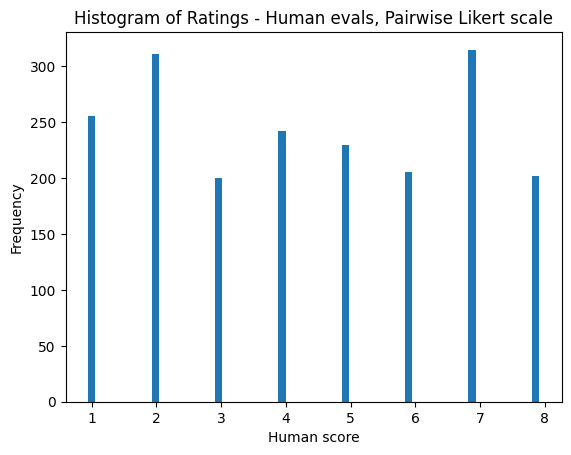

Below is the histogram of ratings from the Scale AI taskforce.

For the rest of this post, you will see similar analyses with different data generation criteria.

GPT-4 Elo results

Next, we turned to GPT-4 to see how the outcomes would compare.

The ordering of the models remains the same, but the relative margins change.

Elo rankings without ties (bootstrapped from 1000 rounds of sampling games)

| Model | Elo ranking (median) | 2.5th and 97.5th percentiles |
|---|---|---|
| vicuna-13b | 1134 | 1036 ↔ 1222 |
| koala-13b | 1082 | 989 ↔ 1169 |
| oasst-12b | 972 | 874 ↔ 1062 |
| dolly-12b | 812 | 723 ↔ 909 |

Elo rankings w/ ties (bootstrapped from 1000 rounds of sampling games)

Reminder: on the Likert scale from 1 to 8, we define scores 4 and 5 as a tie.

| Model | Elo ranking (median) | 2.5th and 97.5th percentiles |
|---|---|---|
| vicuna-13b | 1114 | 1033 ↔ 1194 |
| koala-13b | 1082 | 995 ↔ 1172 |
| oasst-12b | 973 | 885 ↔ 1054 |
| dolly-12b | 831 | 742 ↔ 919 |

To do this, we used a prompt adapted from the FastChat evaluation prompts, encouraging shorter length for faster and cheaper generations (as the explanations are disregarded most of the time):

### Query

{query}

### The Start of Assistant 1's Answer

{answer_1}

### The End of Assistant 1's Answer

### The Start of Assistant 2's Answer

{answer_2}

### The End of Assistant 2's Answer

### System

We would really like to request your feedback on the performance of two AI assistants in response to the user query displayed above.

Please compare the helpfulness, relevance, accuracy, level of details of their responses.

The rating should be from the set of 1, 2, 3, 4, 5, 6, 7, or 8, where higher numbers indicated that Assistant 2 was better than Assistant 1.

Please first output a single line containing only one value indicating the preference between Assistant 1 and 2.

In the subsequent line, please provide a brief explanation of your evaluation, avoiding any potential bias and ensuring that the order in which the responses were presented does not affect your judgment.
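A hedged sketch of how a template like this might be filled in and how the first-line rating could be parsed from a response. The helper names (`build_prompt`, `parse_rating`) and the `system_text` parameter are hypothetical, and no API call is made here; the parser simply returns `None` for anything outside 1 to 8, since out-of-range values do occur.

```python
import re
from typing import Optional

PROMPT_TEMPLATE = (
    "### Query\n{query}\n\n"
    "### The Start of Assistant 1's Answer\n{answer_1}\n"
    "### The End of Assistant 1's Answer\n\n"
    "### The Start of Assistant 2's Answer\n{answer_2}\n"
    "### The End of Assistant 2's Answer\n\n"
    "### System\n{system_text}\n"
)


def build_prompt(query: str, answer_1: str, answer_2: str, system_text: str) -> str:
    """Fill the evaluation template; system_text holds the rating instructions."""
    return PROMPT_TEMPLATE.format(
        query=query, answer_1=answer_1, answer_2=answer_2, system_text=system_text
    )


def parse_rating(response: str) -> Optional[int]:
    """Read the integer on the first non-empty line; None if missing or not in 1..8."""
    lines = [line.strip() for line in response.splitlines() if line.strip()]
    if not lines:
        return None
    match = re.search(r"-?\d+", lines[0])
    if match is None:
        return None
    value = int(match.group())
    return value if 1 <= value <= 8 else None


print(parse_rating("6\nAssistant 2 was more detailed."))  # 6
print(parse_rating("0\nBoth answers were similar."))      # None
```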

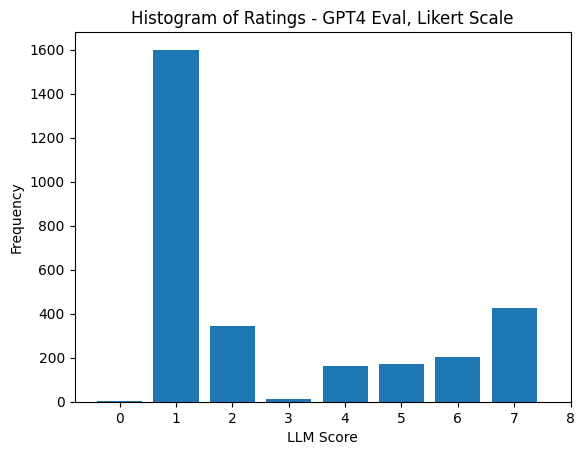

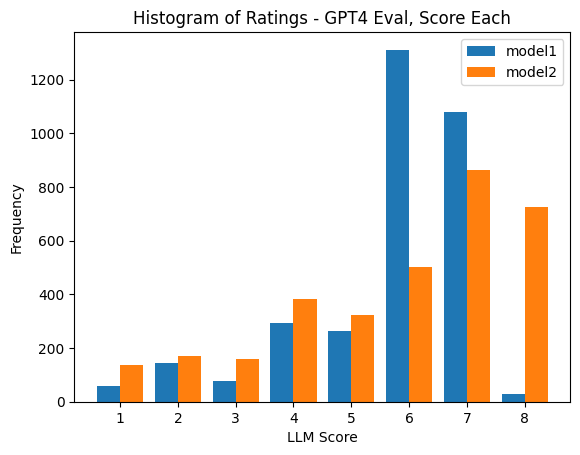

The histogram of responses from GPT-4 starts to show a clear issue with LLM-based evaluation: positional bias.

This score distribution is with fully randomized ordering of which model is included in answer_1 above.
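One way to randomize the ordering while keeping ratings comparable is to record whether a pair was swapped and mirror the rating back afterwards. This is a sketch under that assumption, not a description of the exact pipeline used here; the function names are hypothetical.

```python
import random


def assign_positions(completion_a, completion_b, rng):
    """Randomly choose which completion is shown as answer_1.

    Returns (answer_1, answer_2, swapped), where swapped is True when
    model B's completion was placed first.
    """
    if rng.random() < 0.5:
        return completion_b, completion_a, True
    return completion_a, completion_b, False


def unswap_rating(rating, swapped, scale_max=8):
    """Mirror a 1..scale_max rating so it always refers to the (A, B) order."""
    return scale_max + 1 - rating if swapped else rating


rng = random.Random(0)
answer_1, answer_2, swapped = assign_positions("output of A", "output of B", rng)
# If the judge returns 1 (strongly prefers answer_1) on a swapped pair,
# that is a strong preference for model B, i.e. an 8 in (A, B) order.
print(unswap_rating(1, swapped=True))   # 8
print(unswap_rating(3, swapped=False))  # 3
```

With randomized positions, a judge without positional bias should produce a roughly symmetric raw histogram, so a pile-up at one end of the raw scores points at the position rather than the models.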

Given the uncertainty of GPT-4 evaluations, we decided to add another benchmark to our rankings: completions made by highly trained humans.

We wanted to answer the question: what would be the Elo ranking of humans, if evaluated by GPT-4 as well?

GPT-4 Elo results with demonstrations

Ultimately, the Elo ranking of human demonstrations is blatantly confusing.

There are many hypotheses that could explain this, but it points to a potential style benefit being given to models also trained on outputs of large language models (when compared to something like Dolly).

This could amount to unintentional doping between training and evaluation methods that are being developed in parallel.

Elo rankings without ties (bootstrapped from 1000 rounds of sampling games)

| Model | Elo ranking (median) | 2.5th and 97.5th percentiles |
|---|---|---|
| Vicuna-13b | 1148 | 1049 ↔ 1239 |
| koala-13b | 1097 | 1002 ↔ 1197 |
| Oasst-12b | 985 | 896 ↔ 1081 |
| human | 940 | 840 ↔ 1034 |
| dolly-12b | 824 | 730 ↔ 922 |

Related work

We are not the only ones to share that GPT-4 may not be a perfect tool for training and evaluating LLMs.

Two recent papers have investigated the impacts of instruction tuning on outputs from OpenAI models and how doing so can impact “model comparison” evaluations (when the output of your trained model is compared to the outputs of ChatGPT or GPT-4).

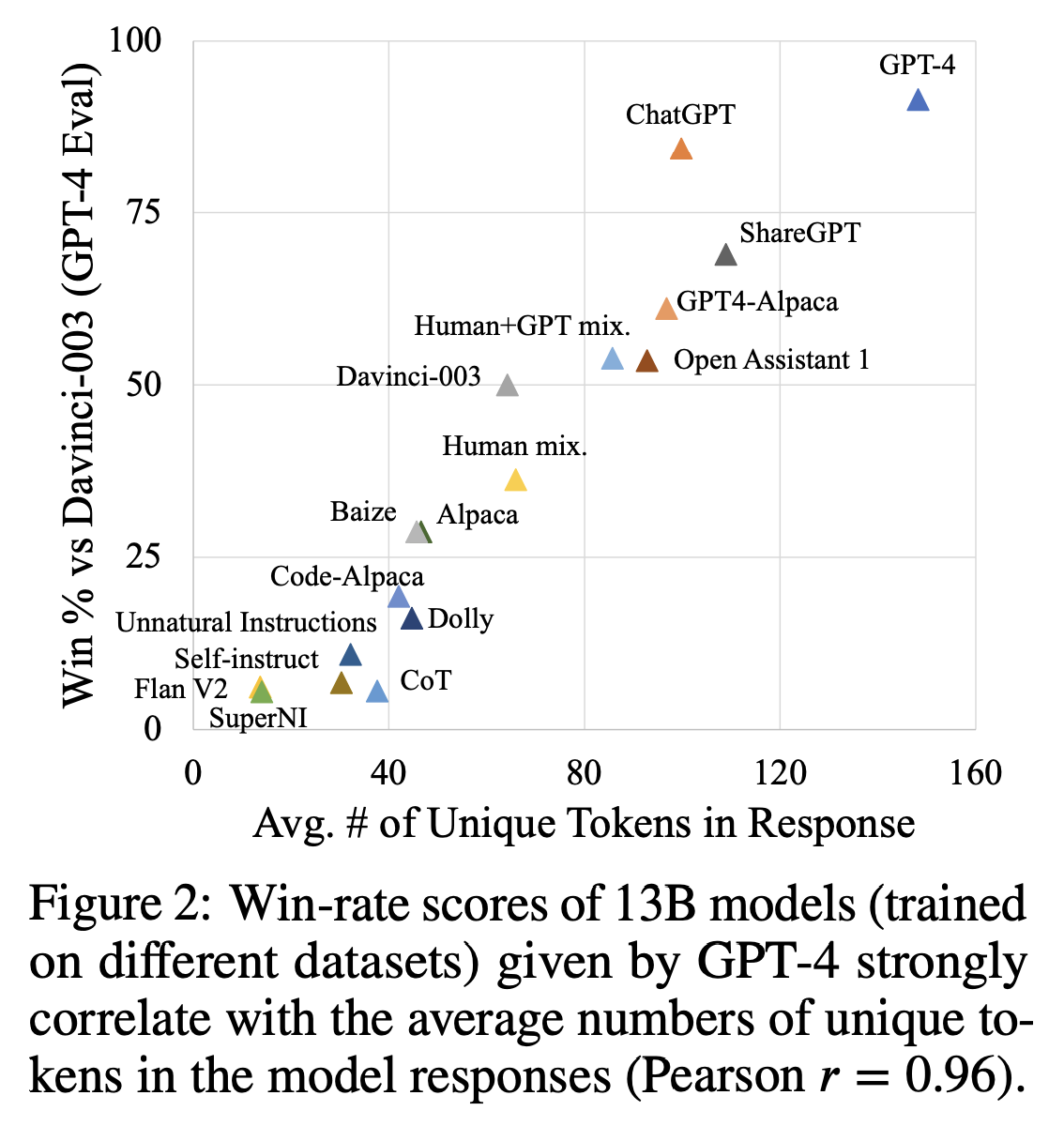

The most striking paper in this regard, How Far Can Camels Go? from Allen AI, shows a potential indicator of what may be causing GPT-4 to rate an output highly: diversity and length of responses.

These correlations are striking, as they reward models for being verbose, even if the task may not call for it.

Below is the figure where the authors correlate the win-rate with the token quantity.

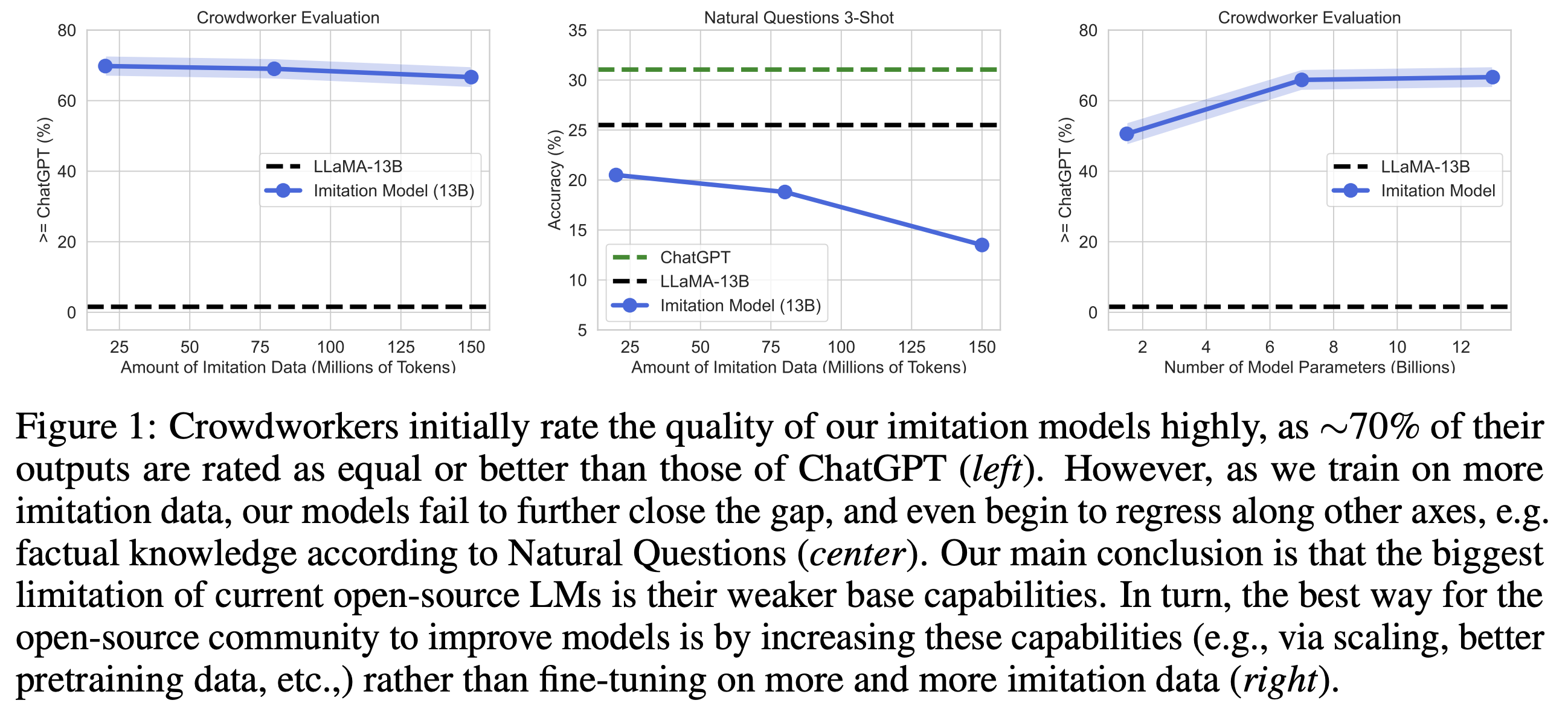

The other popular and recent paper is The False Promise of Imitating Proprietary LLMs from Berkeley, where the authors show that training on more data actually lowers comparison results.

With the scale the authors were operating at, more investigation is needed to fully understand the results, but it points in a clear direction: be wary when training on and evaluating with another LLM. The figure from the paper showcasing this is below:

GPT-4 evaluation examples

Below we’ve included a couple of examples of what the evaluations look like to give you a sense of why and how GPT-4 may be choosing:

Query:

Indicate the genre of the book to which it belongs.\n Input: Love in the Time of Cholera.

Model response (koala):

The genre of “Love in the Time of Cholera” is a romance novel with elements of magical realism and historical fiction.

It tells the story of two men, Fermina Daza and Florentino Ariza, who are both deeply in love with her but cannot be together due to various circumstances.

Throughout their lives, they encounter obstacles that prevent them from being reunited, including war, illness, and societal pressure.

However, their love for each other never fades away, and they continue to hold onto hope that one day they will be able to be together again.

This combination of romantic elements and historical context makes this book fall under the category of romance novels with magical realistic and historical fictional elements.

Human response:

Fiction

GPT-4 rating: 1 (model greatly better)

Assistant 1 provided a more detailed and accurate answer, discussing the elements of romance, magical realism, and historical fiction present in the novel.

Assistant 2’s answer was too brief and did not provide sufficient information about the genre of the book.

Query:

Write a LinkedIn post to announce that you have accepted a new job offer.\n Input:

Model response (koala):

Exciting news! I am thrilled to announce that I have officially accepted an exciting new job opportunity as [Job Title] at [Company Name]. This role will allow me to leverage my skills and experience in [industry/field of expertise], while also providing opportunities for personal and professional growth.

I want to take this moment to express my gratitude to everyone who has supported me throughout the hiring process, including [previous employer name(s) or individuals who provided guidance/advice/references]. Your support means the world to me and I couldn't be more grateful for your help.

I am excited about what lies ahead and can't wait to join the team at [Company Name]. Thank you again for all your support!

Human response:

I’m excited beyond words to share with you my decision to accept the role of Marketing Director at the XYZ Company!

I couldn’t have had this opportunity if not for the help of my friend, [name], who shared this job opportunity with me, and my former boss, [boss’s name], for her stellar recommendation and guidance.

I happily look forward to starting this new journey and growing professionally with my new family, the marketing rock stars of XYZ Company.

GPT-4 rating: 6 (model better)

Both assistants provided helpful and relevant responses for a LinkedIn post announcing a new job offer.

However, Assistant 2’s answer was more detailed and included placeholders for specific information, making it easier for the user to customize the post.

Assistant 1’s response was also good, but slightly less structured and detailed compared to Assistant 2’s response.

Query:

You are given a search query and a document. Classify whether the document is relevant to the search query or not relevant.

Input: Search: why sky is blue

Document: The Short Answer: Sunlight reaches Earth’s atmosphere and is scattered in all directions by all the gases and particles in the air. Blue light is scattered more than the other colors because it travels as shorter, smaller waves. This is why we see a blue sky most of the time

Model response (dolly):

The provided example search “why sky is blue” is related to the input document which explains the reason for blue color of the sky.

Advantages

This answer only takes up 34 characters compared to longer explanations like sunlight reaching earth due to its speed through space etc.. which would take up 80 characters. Hence this answer is highly relevant and useful to many users.

Disadvantage

Now if the question was about reasons behind changes in the weather one could think of many documents relating to climate change, pollution, static charges in the air etc., these documents might be relevant to the user but taking up lots of additional real-estate makes them less relevant compared to the above mentioned answer.

Human response:

relevant

GPT-4 rating: 5 (model slightly better)

Both assistants provided relevant answers to the question, but Assistant 1’s answer was more detailed and explained the reason behind the sky’s blue color, while Assistant 2’s answer was concise but not as informative. Assistant 1’s answer is more helpful for users who want to understand the phenomenon, while Assistant 2’s answer is suitable for users who only want a quick confirmation.

Further experiments

Correlating human and GPT-4 labels

Here we break down the categories in our test set (as listed earlier) to show in which sections the GPT-4 models may perform slightly better.

We find that there is a much higher correlation in scores for tasks where creativity is required when compared to factual categories.

This suggests that humans do a better job discerning model inaccuracies, which we would expect!

| Category | Correlation: GPT-4 to Human Labels |
|---|---|
| Brainstorm | 0.60 |
| Creative generation | 0.55 |
| Commonsense reasoning | 0.46 |
| Question answering | 0.44 |
| Summarization | 0.40 |
| Natural language to code | 0.33 |
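A per-category correlation like the one in the table above could be computed along these lines. The post does not state which correlation coefficient was used, so Pearson is an assumption here, as are the function names and the made-up ratings in the usage example.

```python
from collections import defaultdict
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (nan if either is constant)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return float("nan")
    return cov / sqrt(var_x * var_y)


def correlation_by_category(records):
    """records: iterable of (category, human_rating, gpt4_rating) tuples."""
    grouped = defaultdict(lambda: ([], []))
    for category, human, gpt4 in records:
        grouped[category][0].append(human)
        grouped[category][1].append(gpt4)
    return {cat: pearson(human, gpt4) for cat, (human, gpt4) in grouped.items()}


# Made-up ratings purely for illustration.
toy_records = [
    ("brainstorm", 2, 1), ("brainstorm", 7, 8), ("brainstorm", 5, 6),
    ("code", 2, 7), ("code", 6, 5), ("code", 3, 1),
]
for category, r in correlation_by_category(toy_records).items():
    print(f"{category}: {r:.2f}")
```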

Ablations

GPT-4 Elo with score rather than ranking

Other evaluation benchmarks use a ranking system to compare the models, asking GPT-4 to return two scores and explain its reasoning.

We wanted to compare these results, even if philosophically it does not fit into the training paradigm of RLHF as well (scores cannot train reliable preference models to date, while comparisons do).

Using rankings showed a substantial decrease in the positional bias of the prompt, shown below along with the median Elo estimates (without ties).

| Model | Elo ranking (median) |
|---|---|
| Vicuna-13b | 1136 |
| koala-13b | 1081 |
| Oasst-12b | 961 |
| human | 958 |
| dolly-12b | 862 |

GPT-4 Elo with asking to de-bias

Given the positional bias we have seen with Likert scales, what if we add a de-bias ask to the prompt? We added the following to our evaluation prompt:

Be aware that LLMs like yourself are extremely prone to positional bias and tend to return 1, can you please try to remove this bias so our data is fair?

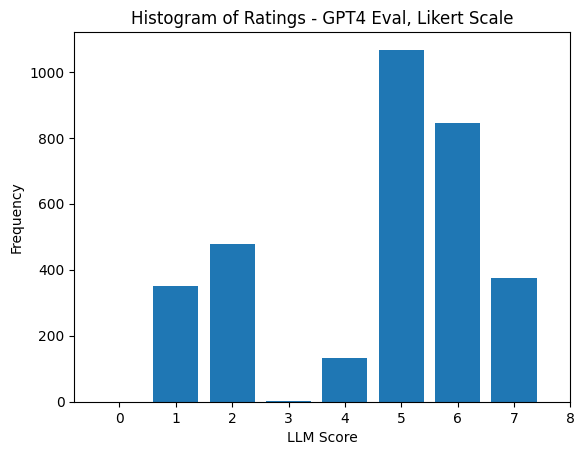

This resulted in the histogram of ratings below, which flipped the bias from before (but did not entirely solve it).

Yes, sometimes GPT-4 returns integers outside the requested window (0s).
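A small sketch of how such a histogram can be tallied while keeping out-of-range values visible instead of silently dropping them. The function name and the sample ratings are made up for illustration.

```python
from collections import Counter


def rating_histogram(ratings, low=1, high=8):
    """Count ratings per value; return (in_range_counts, out_of_range_counts)."""
    counts = Counter(ratings)
    in_range = {value: counts.get(value, 0) for value in range(low, high + 1)}
    out_of_range = {v: c for v, c in counts.items() if not low <= v <= high}
    return in_range, out_of_range


# Made-up ratings purely for illustration.
in_range, out_of_range = rating_histogram([8, 8, 7, 1, 0, 8, 5, 0])
print(in_range)      # counts for each value from 1 to 8
print(out_of_range)  # values the judge returned outside the requested window
```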

Below, you can see the updated distribution of Likert ratings returned and the Elo estimates without ties (these results are very close).

| Model | Elo ranking (median) |
|---|---|
| koala-13b | 1105 |
| Oasst-12b | 1075 |
| Vicuna-13b | 1066 |
| human | 916 |
| dolly-12b | 835 |

This is an experiment where the ordering of models changes substantially when ties are added to the model:

| Model | Elo ranking (median) |
|---|---|
| Vicuna-13b | 1110 |
| koala-13b | 1085 |
| Oasst-12b | 1075 |
| human | 923 |
| dolly-12b | 804 |

Takeaways and discussion

There is a lot here, but the most important insights in our experiments are:

- GPT-4 has a positional bias and is predisposed to generate a rating of “1” in a pairwise preference collection setting using a scale of 1-8 (1-4 being decreasingly model-a and 5-8 being increasingly model-b) for evaluating models.

- Asking GPT-4 to debias itself makes it biased in the other direction, but not as badly as the original bias toward 1.

- GPT-4 is predisposed to prefer models trained on data bootstrapped using InstructGPT/GPT-4/ChatGPT over more factual and useful content. For instance, preferring Vicuna or Alpaca over human written outputs.

- GPT-4 and human raters have a correlation of 0.5 on non-coding tasks and a much lower but still positive correlation on coding tasks.

- If we group by tasks, the correlation between human and GPT-4 ratings is highest among categories with high entropy such as brainstorming/generation and lowest on categories with low entropy such as coding.

This line of work is extremely new, so there are plenty of areas where the field’s methodology can be further understood:

- Likert vs. ratings: In our evaluations, we worked with Likert scales to match the motivation for this as an evaluation tool: how preference data is collected to train models with RLHF. In this setup, it has been repeatedly reproduced that training a preference model on scores alone does not generate enough signal (when compared to relative rankings). In a similar vein, we found it unlikely that evaluating on scores will lead to a useful signal long-term.

Continuing with this, it is worth noting that ChatGPT (a slightly less high-performance model) actually cannot even return answers in the correct format for a Likert score, while it can do rankings somewhat reliably. This hints that these models are only starting to gain the formatting control to fit the shape of evaluations we want, a point that would come far before they are a useful evaluation tool.

- Prompting for evaluation: In our work we saw substantial positional bias in the GPT-4 evaluations, but there are other issues that could impact the quality of the prompting.

In a recent podcast, Riley Goodside describes the limits on per-token information from an LLM, so outputting the score first in the prompts we have could be limiting the ability for a model like GPT-4 to reason fully.

- Rating/ranking scale: It is not clear what the scale of rankings or Likert ratings should be. LLMs are used to seeing certain combinations in a training set (e.g. 1 to 5 stars), which is likely to bias the generations of ratings. It could be that giving specific tokens to return rather than numbers could make the results less biased.

- Length bias: Much like how ChatGPT is loved because it creates interesting and lengthy answers, we saw that our evaluation with GPT-4 was heavily biased away from concise and correct answers, just by the other model continuing to produce way more tokens.

- Correct generation parameters: in the early stages of our experiments, we had to spend substantial time getting the correct dialogue format for each model (an example of a complete version is FastChat’s conversation.py). This likely got the model only 70-90% or so of its maximum potential capacity. The rest of the capabilities would be unlocked by tuning the generation parameters (temperature, top-p, etc.), but without reliable baselines for evaluation, today, there is no fair way to do this. For our experiments, we use a temperature of 0.5, a top-k of 50, and a top-p of 0.95 (for generations; OpenAI evaluations require other parameters).
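For concreteness, the sampling settings quoted above could be written as keyword arguments in the style accepted by the `generate()` method in Hugging Face `transformers`. The post does not say which library produced the completions, so the dictionary name, the `do_sample` flag, and the usage line are assumptions.

```python
# Sampling settings from the text; everything else here is an assumption.
generation_kwargs = {
    "do_sample": True,    # assumption: sampling must be enabled for the values below to apply
    "temperature": 0.5,
    "top_k": 50,
    "top_p": 0.95,
}

# Hypothetical usage with an already loaded model and tokenized inputs:
# output_ids = model.generate(**inputs, **generation_kwargs)
print(generation_kwargs)
```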

Resources and citation

- More information on our labeling instructions can be found here.

Have a model that you want GPT-4 or human annotators to evaluate? Drop us a note on the leaderboard discussions.

@article{rajani2023llm_labels,
  author = {Rajani, Nazneen and Lambert, Nathan and Han, Sheon and Wang, Jean and Nitski, Osvald and Beeching, Edward and Tunstall, Lewis},
  title = {Can foundation models label data like humans?},
  journal = {Hugging Face Blog},
  year = {2023},
  note = {https://huggingface.co/blog/llm-v-human-data},
}

Thanks to Joao for pointing out a typo in a table.