What’s the Hugging Face Hub?

Hugging Face goals to make high-quality machine learning accessible to everyone. This goal is pursued in various ways, including developing open-source code libraries equivalent to the widely-used Transformers library, offering free courses, and providing the Hugging Face Hub.

The Hugging Face Hub is a central repository where people can share and access machine learning models, datasets and demos. The Hub hosts over 190,000 machine learning models, 33,000 datasets and over 100,000 machine learning applications and demos. These models cover a big selection of tasks from pre-trained language models, text, image and audio classification models, object detection models, and a big selection of generative models.

The models, datasets and demos hosted on the Hub span a big selection of domains and languages, with regular community efforts to expand the scope of what is offered via the Hub. This blog post intends to supply people working in or with the galleries, libraries, archives and museums (GLAM) sector to know how they will use — and contribute to — the Hugging Face Hub.

You may read the entire post or jump to probably the most relevant sections!

What are you able to find on the Hugging Face Hub?

Models

The Hugging Face Hub provides access to machine learning models covering various tasks and domains. Many machine learning libraries have integrations with the Hugging Face Hub, allowing you to directly use or share models to the Hub via these libraries.

Datasets

The Hugging Face hub hosts over 30,000 datasets. These datasets cover a spread of domains and modalities, including text, image, audio and multi-modal datasets. These datasets are precious for training and evaluating machine learning models.

Spaces

Hugging Face Spaces is a platform that means that you can host machine learning demos and applications. These Spaces range from easy demos allowing you to explore the predictions made by a machine learning model to more involved applications.

Spaces make hosting and making your application accessible for others to make use of rather more straightforward. You should use Spaces to host Gradio and Streamlit applications, or you need to use Spaces to custom docker images. Using Gradio and Spaces together often means you’ll be able to have an application created and hosted with access for others to make use of inside minutes. You should use Spaces to host a Docker image in the event you want complete control over your application. There are also Docker templates that may offer you quick access to a hosted version of many popular tools, including the Argailla and Label Studio annotations tools.

How will you use the Hugging Face Hub: finding relevant models on the Hub

There are various potential use cases within the GLAM sector where machine learning models will be helpful. Whilst some institutions could have the resources required to coach machine learning models from scratch, you need to use the Hub to seek out openly shared models that either already do what you wish or are very near your goal.

For instance, in the event you are working with a group of digitized Norwegian documents with minimal metadata. One approach to higher understand what’s in the gathering is to make use of a Named Entity Recognition (NER) model. This model extracts entities from a text, for instance, identifying the locations mentioned in a text. Knowing which entities are contained in a text generally is a precious way of higher understanding what a document is about.

We are able to find NER models on the Hub by filtering models by task. On this case, we elect token-classification, which is the duty which incorporates named entity recognition models. This filter returns models labelled as doing token-classification. Since we’re working with Norwegian documents, we might also need to filter by language; this gets us to a smaller set of models we wish to explore. A lot of these models will even contain a model widget, allowing us to check the model.

A model widget can quickly show how well a model will likely perform on our data. Once you’ve got found a model that interests you, the Hub provides other ways of using that tool. In the event you are already conversant in the Transformers library, you’ll be able to click the use in Transformers button to get a pop-up which shows methods to load the model in Transformers.

In the event you prefer to make use of a model via an API, clicking the

deploy button in a model repository gives you various options for hosting the model behind an API. This will be particularly useful if you should check out a model on a bigger amount of knowledge but need the infrastructure to run models locally.

The same approach can be used to seek out relevant models and datasets

on the Hugging Face Hub.

Walkthrough: how are you going to add a GLAM dataset to the Hub?

We are able to make datasets available via the Hugging Face hub in various ways. I’ll walk through an example of adding a CSV dataset to the Hugging Face hub.

Overview of the strategy of uploading a dataset to the Hub via the browser interface

For our example, we’ll work on making the On the Books Training Set

available via the Hub. This dataset comprises a CSV file containing data that will be used to coach a text classification model. For the reason that CSV format is one in every of the supported formats for uploading data to the Hugging Face Hub, we will share this dataset directly on the Hub without having to write down any code.

Create a brand new dataset repository

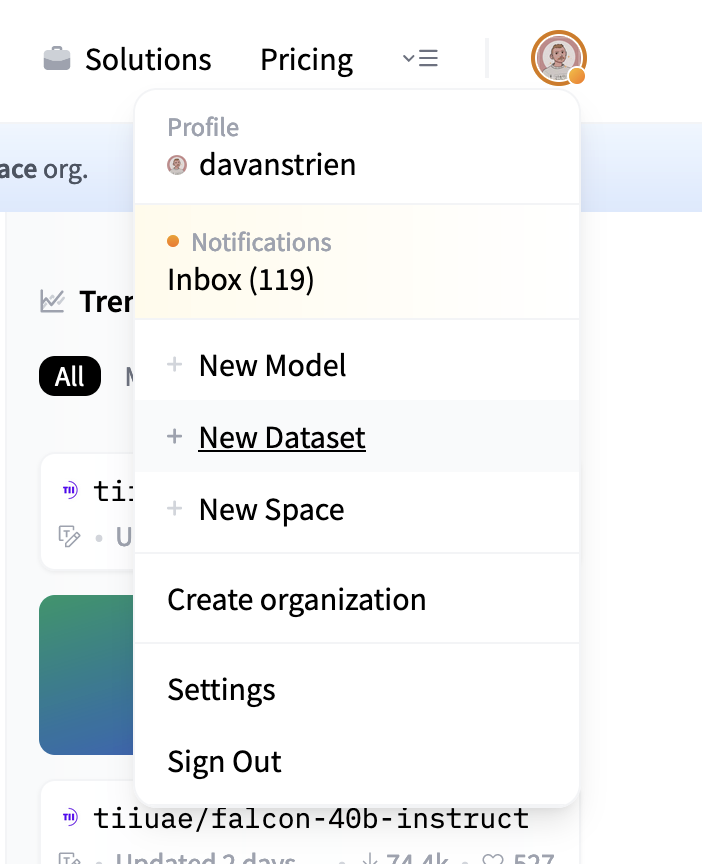

Step one to uploading a dataset to the Hub is to create a brand new dataset repository. This will be done by clicking the Latest Dataset button on the dropdown menu in the highest right-hand corner of the Hugging Face hub.

Once you’ve gotten done this you’ll be able to select a reputation to your latest dataset repository. You too can create the dataset under a unique owner i.e. a corporation, and optionally specify a license.

Upload files

Once you’ve gotten created a dataset repository you will have to upload the information files. You may do that by clicking on Add file under the Files tab on the dataset repository.

You may now select the information you want to upload to the Hub.

You may upload a single file or multiple files using the upload interface. Once you’ve gotten uploaded your file, you commit your changes to finalize the upload.

Adding metadata

It can be crucial so as to add metadata to your dataset repository to make your dataset more discoverable and helpful for others. It will allow others to seek out your dataset and understand what it accommodates.

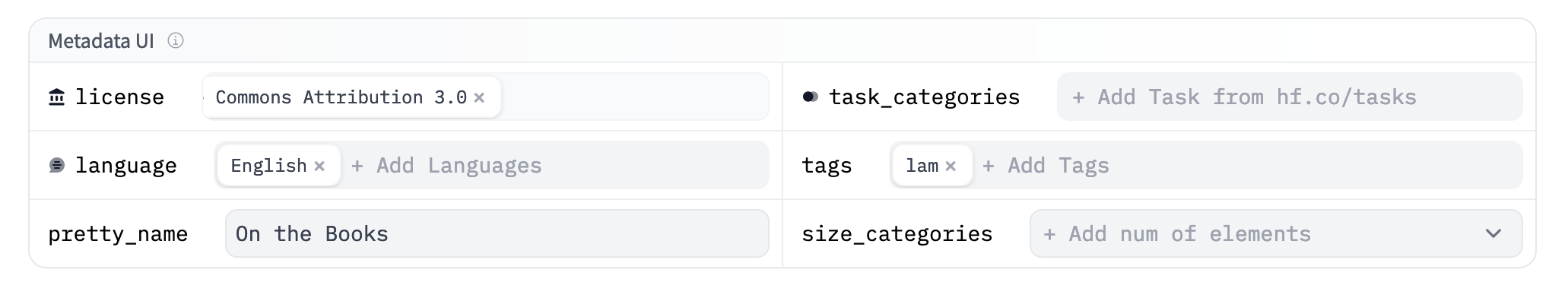

You may edit metadata using the Metadata UI editor. This means that you can specify the license, language, tags etc., for the dataset.

Additionally it is very helpful to stipulate in additional detail what your dataset is, how and why it was constructed, and it’s strengths and weaknesses. This will be done in a dataset repository by filling out the README.md file. This file will function a dataset card to your dataset. A dataset card is a semi-structured type of documentation for machine learning datasets that goals to make sure datasets are sufficiently well documented. While you edit the README.md file you will probably be given the choice to import a template dataset card. This template provides you with helpful prompts for what is helpful to incorporate in a dataset card.

Tip: Writing a very good dataset card will be a variety of work. Nonetheless, you don’t want to do all of this work in a single go necessarily, and since people can ask questions or make suggestions for datasets hosted on the Hub the processes of documenting datasets generally is a collective activity.

Datasets preview

Once we have uploaded our dataset to the Hub, we’ll get a preview of the dataset. The dataset preview generally is a useful way of higher understanding the dataset.

Other ways of sharing datasets

You should use many other approaches for sharing datasets on the Hub. The datasets documentation will aid you higher understand what’s going to likely work best to your particular use case.

Why might Galleries, Libraries, Archives and Museums need to use the Hugging Face hub?

There are various different explanation why institutions need to contribute to

the Hugging Face Hub:

-

Exposure to a brand new audience: the Hub has develop into a central destination for people working in machine learning, AI and related fields. Sharing on the Hub will help expose your collections and work to this audience. This also opens up the chance for further collaboration with this audience.

-

Community: The Hub has many community-oriented features, allowing users and potential users of your material to ask questions and have interaction with materials you share via the Hub. Sharing trained models and machine learning datasets also allows people to construct on one another’s work and lowers the barrier to using machine learning within the sector.

-

Diversity of coaching data: Considered one of the barriers to the GLAM using machine learning is the provision of relevant data for training and evaluation of machine learning models. Machine learning models that work well on benchmark datasets may not work as well on GLAM organizations’ data. Constructing a community to share domain-specific datasets will ensure machine learning will be more effectively pursued within the GLAM sector.

-

Climate change: Training machine learning models produces a carbon footprint. The scale of this footprint is dependent upon various aspects. A technique we will collectively reduce this footprint is to share trained models with the community so that individuals aren’t duplicating the identical models (and generating more carbon emissions in the method).

Example uses of the Hugging Face Hub

Individuals and organizations already use the Hugging Face hub to share machine learning models, datasets and demos related to the GLAM sector.

BigLAM

An initiative developed out of the BigScience project is targeted on making datasets from GLAM with relevance to machine learning are made more accessible. BigLAM has to date remodeled 30 datasets related to GLAM available via the Hugging Face hub.

Nasjonalbiblioteket AI Lab

The AI lab on the National Library of Norway is a really energetic user of the Hugging Face hub, with ~120 models, 23 datasets and 6 machine learning demos shared publicly. These models include language models trained on Norwegian texts from the National Library of Norway and Whisper (speech-to-text) models trained on Sámi languages.

Smithsonian Institution

The Smithsonian shared an application hosted on Hugging Face Spaces, demonstrating two machine learning models trained to discover Amazon fish species. This project goals to empower communities with tools that may allow more accurate measurement of fish species numbers within the Amazon. Making tools equivalent to this available via a Spaces demo further lowers the barrier for people wanting to make use of these tools.

Hub features for Galleries, Libraries, Archives and Museums

The Hub supports many features which help make machine learning more accessible. Some features which could also be particularly helpful for GLAM institutions include:

- Organizations: you’ll be able to create a corporation on the Hub. This means that you can create a spot to share your organization’s artefacts.

- Minting DOIs: A DOI (Digital Object Identifier) is a persistent digital identifier for an object. DOIs have develop into essential for creating persistent identifiers for publications, datasets and software. A persistent identifier is commonly required by journals, conferences or researcher funders when referencing academic outputs. The Hugging Face Hub supports issuing DOIs for models, datasets, and demos shared on the Hub.

- Usage tracking: you’ll be able to view download stats for datasets and models hosted within the Hub monthly or see the full variety of downloads over all time. These stats generally is a precious way for institutions to reveal their impact.

- Script-based dataset sharing: in the event you have already got dataset hosted somewhere, you’ll be able to still provide access to them via the Hugging Face hub using a dataset loading script.

- Model and dataset gating: there are circumstances where you wish more control over who’s accessing models and datasets. The Hugging Face hub supports model and dataset gating, allowing you so as to add access controls.

How can I get help using the Hub?

The Hub docs go into more detail concerning the various features of the Hugging Face Hub. You too can find more details about sharing datasets on the Hub and knowledge about sharing Transformers models to the Hub.

In the event you require any assistance while using the Hugging Face Hub, there are several avenues you’ll be able to explore. You could seek help by utilizing the discussion forum or through a Discord.