[Updated on July 24, 2023: Added Llama 2.]

Text generation and conversational technologies have been around for ages. Earlier challenges in working with these technologies were controlling both the coherence and diversity of the text through inference parameters and discriminative biases. More coherent outputs were less creative and closer to the original training data, and sounded less human. Recent developments overcame these challenges, and user-friendly UIs enabled everyone to try these models out. Services like ChatGPT have recently put the spotlight on powerful models like GPT-4 and caused an explosion of open-source alternatives like Llama to go mainstream. We think these technologies will be around for a long time and become more and more integrated into everyday products.
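Temperature is one common inference parameter for trading coherence against diversity. Before the softmax, the model's next-token scores are divided by the temperature. The sketch below shows the effect in pure Python. The logits are made-up values for a hypothetical four-token vocabulary, not the output of any real model.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw next-token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for a hypothetical 4-token vocabulary.
logits = [2.0, 1.0, 0.5, 0.1]

for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

A low temperature concentrates probability on the top token, which gives more predictable and repetitive text. A high temperature flattens the distribution, which gives more varied but more error-prone text.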

This post is split into the following sections:

- Brief background on text generation

- Licensing

- Tools in the Hugging Face Ecosystem for LLM Serving

- Parameter Efficient Fine Tuning (PEFT)

Brief Background on Text Generation

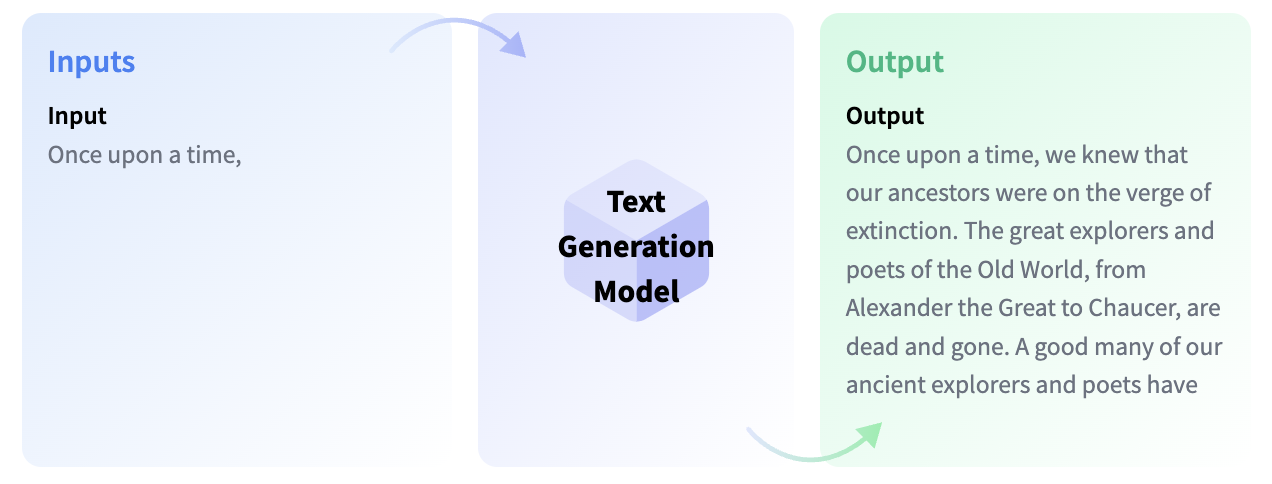

Text generation models are essentially trained with the objective of completing an incomplete text or generating text from scratch as a response to a given instruction or question. Models that complete incomplete text are called Causal Language Models, and famous examples are GPT-3 by OpenAI and Llama by Meta AI.
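A causal language model completes text one token at a time. It predicts the next token from what came before, appends it, and repeats. The sketch below mimics that loop with greedy decoding. Its stand-in for a model is a hypothetical hand-written bigram table that looks only at the last token, whereas a real causal LM conditions on the whole prefix.

```python
# Hypothetical toy "model": next-token scores given only the last token.
# A real causal LM conditions on the entire prefix, not just the last token.
TOY_MODEL = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "sat": {"on": 0.9, "<eos>": 0.1},
    "on": {"a": 0.8, "the": 0.2},
    "a": {"mat": 0.9, "cat": 0.1},
    "mat": {"<eos>": 1.0},
}


def complete(prompt_tokens, max_new_tokens=10):
    """Greedily extend the prompt until <eos> or the token budget runs out."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        next_scores = TOY_MODEL.get(tokens[-1])
        if not next_scores:
            break  # the toy table has no continuation for this token
        next_token = max(next_scores, key=next_scores.get)  # greedy choice
        if next_token == "<eos>":
            break
        tokens.append(next_token)
    return tokens


print(" ".join(complete(["the", "cat"])))  # the cat sat on a mat
```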

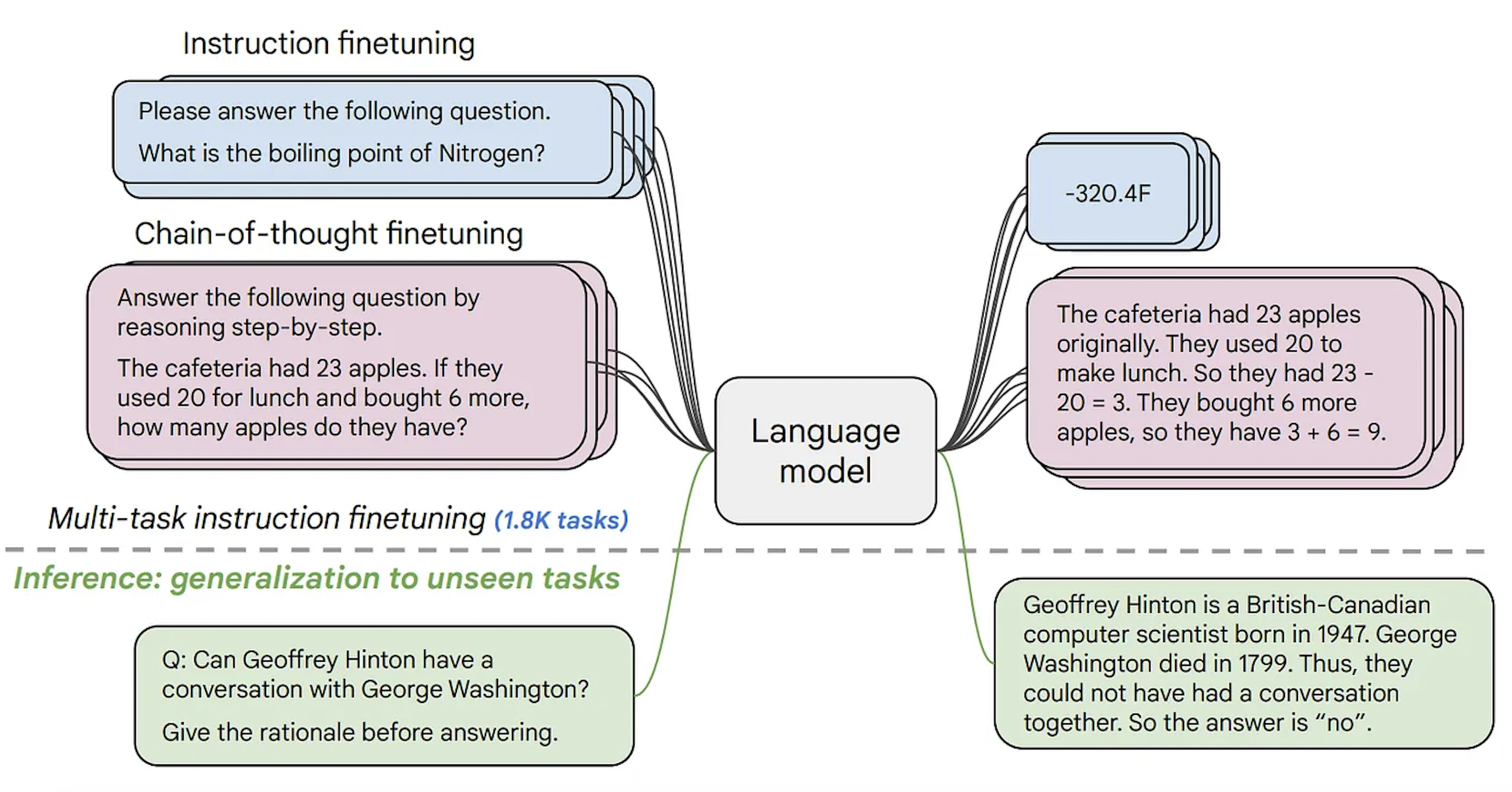

One concept you need to know before we move on is fine-tuning. This is the process of taking a very large model and transferring the knowledge contained in this base model to another use case, which we call a downstream task. These tasks can come in the form of instructions. As the model size grows, it can generalize better to instructions that do not exist in the pre-training data but were learned during fine-tuning.

Causal language models can be further adapted using a process called reinforcement learning from human feedback (RLHF). This optimization mainly targets how natural and coherent the text sounds rather than the validity of the answer. Explaining how RLHF works is outside the scope of this blog post, but you can find more information about this process here.

For example, GPT-3 is a causal language base model, while the models in the backend of ChatGPT (which is the UI for GPT-series models) are fine-tuned through RLHF on prompts that can consist of conversations or instructions. It’s an important distinction to make between these models.

On the Hugging Face Hub, you can find both causal language models and causal language models fine-tuned on instructions (which we’ll give links to later in this blog post). Llama is one of the first open-source LLMs to have outperformed/matched closed-source ones. A research group led by Together has created a reproduction of Llama’s dataset, called Red Pajama, and trained LLMs and instruction fine-tuned models on it. You can read more about it here and find the model checkpoints on the Hugging Face Hub. At the time this blog post was written, three of the largest causal language models with open-source licenses are MPT-30B by MosaicML, XGen by Salesforce and Falcon by TII UAE, available completely open on the Hugging Face Hub.

Recently, Meta released Llama 2, an open-access model with a license that allows commercial use. As of now, Llama 2 outperforms all of the other open-source large language models on different benchmarks. Llama 2 checkpoints on the Hugging Face Hub are compatible with transformers, and the largest checkpoint is available for everyone to try at HuggingChat. You can read more about how to fine-tune, deploy and prompt with Llama 2 in this blog post.
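Chat-tuned checkpoints expect prompts in the format they were fine-tuned on. Base checkpoints have no such expectation. The helper below is a sketch of the single-turn Llama 2 chat format as Meta's release materials describe it, with the system prompt wrapped in `<<SYS>>` markers inside `[INST] ... [/INST]`. The beginning-of-sequence token `<s>` is deliberately left out, on the assumption that the tokenizer adds it when encoding. Please check the model card before relying on the exact layout.

```python
def build_llama2_chat_prompt(system_prompt: str, user_message: str) -> str:
    """Single-turn Llama 2 chat prompt (sketch; verify against the model card).

    <s> is omitted on the assumption that the tokenizer prepends it.
    """
    return (
        f"[INST] <<SYS>>\n{system_prompt}\n<</SYS>>\n\n"
        f"{user_message} [/INST]"
    )


prompt = build_llama2_chat_prompt(
    "You are a helpful assistant.",
    "What is a causal language model?",
)
print(prompt)
```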

The second type of text generation model is commonly referred to as the text-to-text generation model. These models are trained on text pairs, which can be questions and answers or instructions and responses. The most popular ones are T5 and BART (which, as of now, aren’t state-of-the-art). Google has recently released the FLAN-T5 series of models. FLAN is a recent technique developed for instruction fine-tuning, and FLAN-T5 is essentially T5 fine-tuned using FLAN. As of now, the FLAN-T5 series of models are state-of-the-art and open-source, available on the Hugging Face Hub. Note that these are different from instruction-tuned causal language models, although the input-output format might seem similar. Below you can see an illustration of how these models work.
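One way to see the difference is in how a single (instruction, response) pair becomes a training example. The sketch below uses made-up integer token IDs.

- A text-to-text (encoder-decoder) model reads the prompt as its input and is trained to produce the response as separate labels.
- A causal LM sees one concatenated sequence. A common choice is to mask the prompt positions with `-100`, which PyTorch's cross-entropy loss ignores by default, so that only the response tokens contribute to the loss.

The causal labels are not shifted here, because transformers' causal LM classes shift them internally.

```python
IGNORE_INDEX = -100  # default ignore_index of PyTorch's CrossEntropyLoss


def seq2seq_example(prompt_ids, response_ids):
    # Encoder reads the prompt; decoder is trained to emit the response.
    return {"input_ids": list(prompt_ids), "labels": list(response_ids)}


def causal_lm_example(prompt_ids, response_ids):
    # One concatenated sequence; prompt positions are masked out of the loss.
    input_ids = list(prompt_ids) + list(response_ids)
    labels = [IGNORE_INDEX] * len(prompt_ids) + list(response_ids)
    return {"input_ids": input_ids, "labels": labels}


# Made-up token IDs: prompt = [1, 2, 3], response = [7, 8].
print(seq2seq_example([1, 2, 3], [7, 8]))
print(causal_lm_example([1, 2, 3], [7, 8]))
```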

Having more variety among open-source text generation models enables companies to keep their data private, to adapt models to their domains faster, and to cut inference costs instead of relying on closed paid APIs. All open-source causal language models on the Hugging Face Hub can be found here, and text-to-text generation models can be found here.

Models created with love by Hugging Face with BigScience and BigCode 💗

Hugging Face has co-led two science initiatives, BigScience and BigCode. As a result of them, two large language models were created, BLOOM 🌸 and StarCoder 🌟.

BLOOM is a causal language model trained on 46 languages and 13 programming languages. It is the first open-source model to have more parameters than GPT-3. You can find all the available checkpoints in the BLOOM documentation.

StarCoder is a language model trained on permissive code from GitHub (with 80+ programming languages 🤯) with a Fill-in-the-Middle objective. It’s not fine-tuned on instructions, and thus, it serves more as a coding assistant to complete a given code, e.g., translate Python to C++, explain concepts (what’s recursion), or act as a terminal. You can try all of the StarCoder checkpoints in this application. It also comes with a VSCode extension.
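With the Fill-in-the-Middle objective, the model receives the code before and after a gap and generates what goes in between. The sketch below builds such a prompt in prefix-suffix-middle order. It uses the `<fim_prefix>`, `<fim_suffix>` and `<fim_middle>` special tokens shown in the StarCoder model card. The function name is our own, and the prompt would still need to be tokenized and passed to the model for generation.

```python
def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Prefix-suffix-middle prompt using StarCoder's FIM special tokens."""
    return f"<fim_prefix>{prefix}<fim_suffix>{suffix}<fim_middle>"


# The model is expected to generate the code that belongs between prefix and suffix.
prompt = build_fim_prompt(
    prefix="def print_hello_world():\n    ",
    suffix="\n    print('Hello world!')",
)
print(prompt)
```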

Snippets to use all models mentioned in this blog post are given in either the model repository or the documentation page of that model type in Hugging Face.

Licensing

Many text generation models are either closed-source or the license limits commercial use. Fortunately, open-source alternatives are starting to appear and are being embraced by the community as building blocks for further development, fine-tuning, or integration with other projects. Below you can find a list of some of the large causal language models with fully open-source licenses:

There are two code generation models, StarCoder by BigCode and Codegen by Salesforce. There are model checkpoints in different sizes and open-source or open RAIL licenses for both, except for Codegen fine-tuned on instruction.

The Hugging Face Hub also hosts various models fine-tuned for instruction or chat use. They come in various shapes and sizes depending on your needs.

- MPT-30B-Chat, by MosaicML, uses the CC-BY-NC-SA license, which doesn’t allow commercial use. However, MPT-30B-Instruct uses CC-BY-SA 3.0, which can be used commercially.

- Falcon-40B-Instruct and Falcon-7B-Instruct both use the Apache 2.0 license, so commercial use is also permitted.

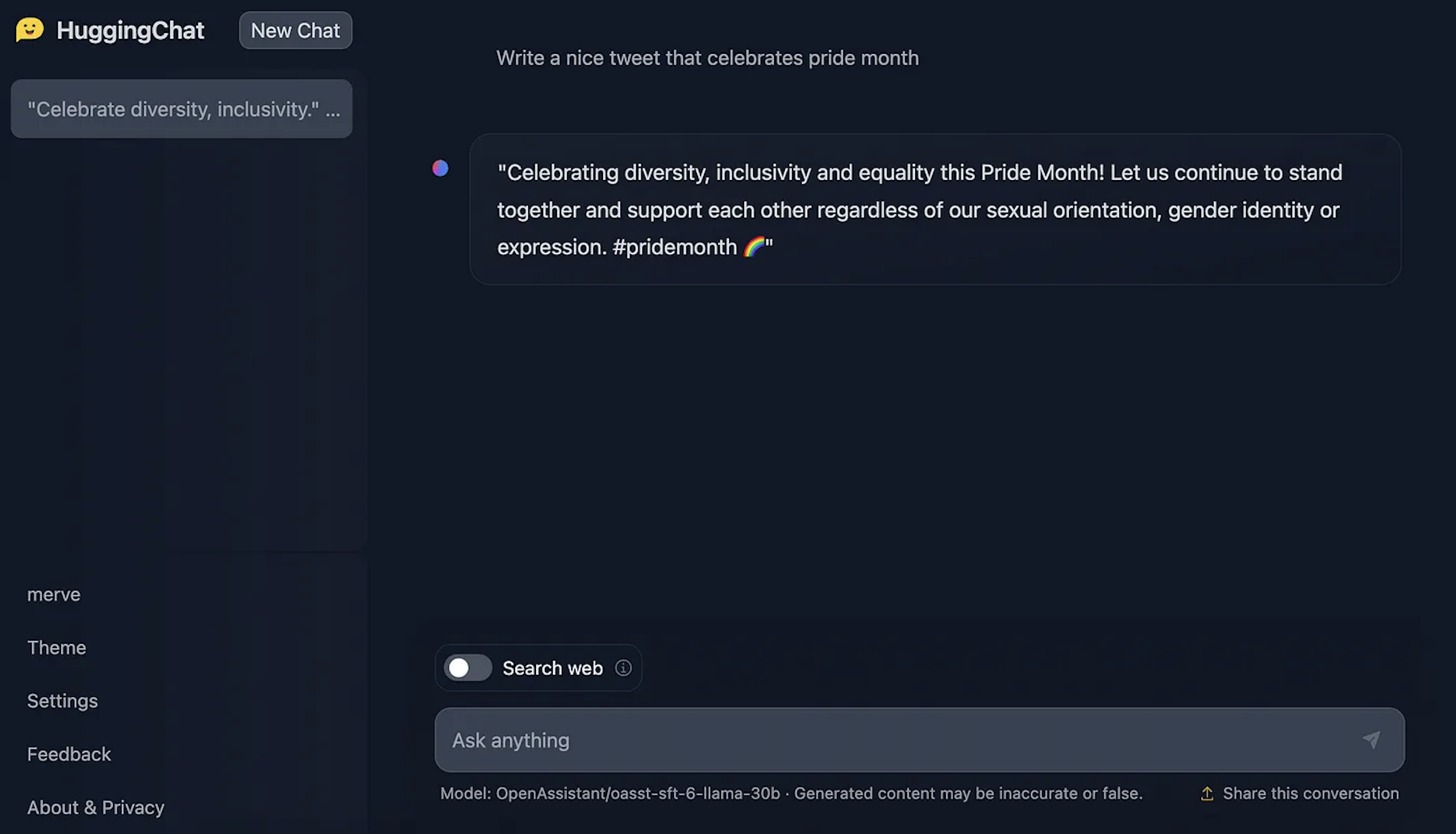

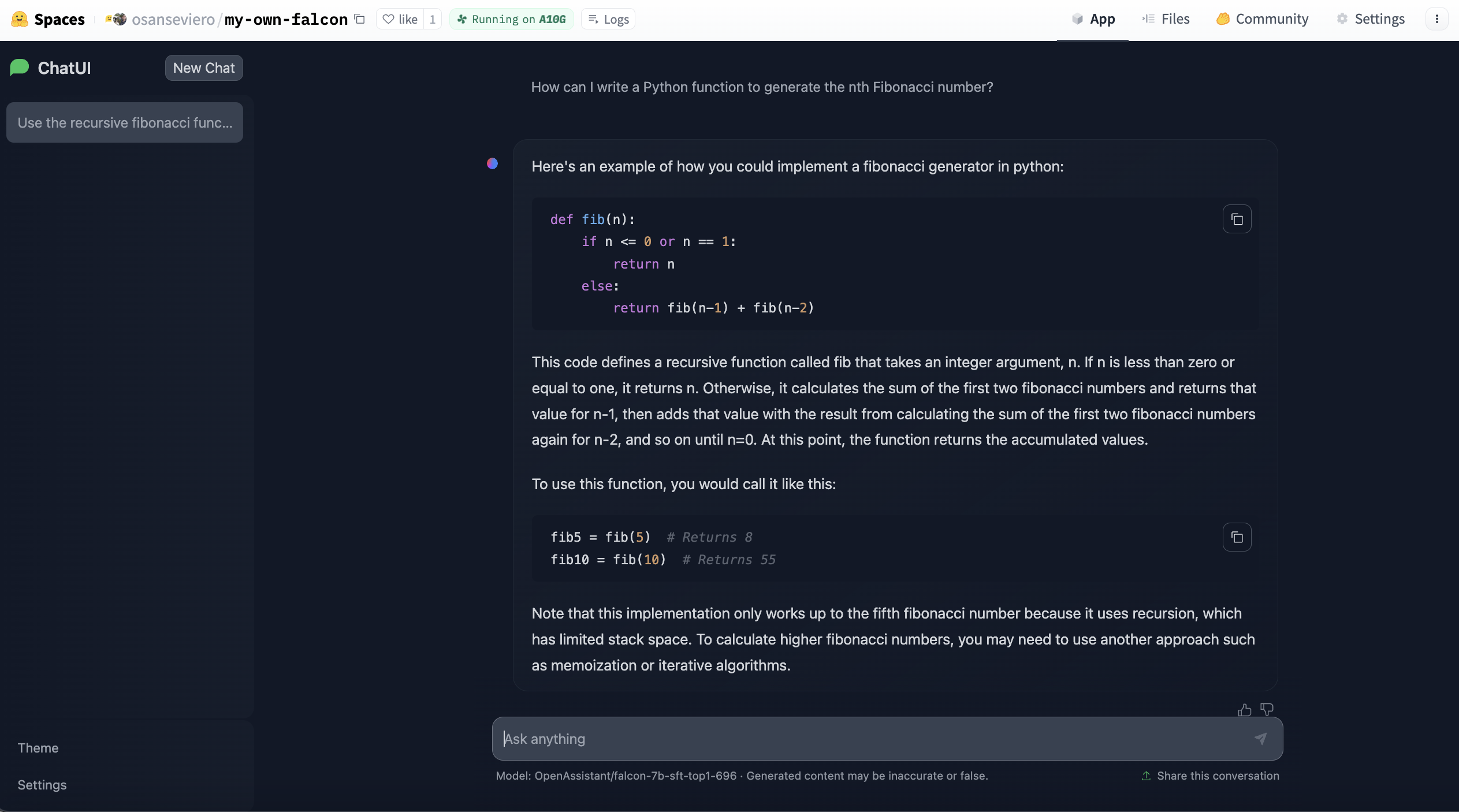

- Another popular family of models is OpenAssistant, some of which are built on Meta’s Llama model using a custom instruction-tuning dataset. Since the original Llama model can only be used for research, the OpenAssistant checkpoints built on Llama don’t have full open-source licenses. However, there are OpenAssistant models built on open-source models like Falcon or Pythia that use permissive licenses.

- StarChat Beta is the instruction fine-tuned version of StarCoder and has a BigCode Open RAIL-M v1 license, which allows commercial use. Salesforce’s instruction-tuned coding model, XGen, only allows research use.

If you’re looking to fine-tune a model on an existing instruction dataset, you need to know how the dataset was compiled. Some of the existing instruction datasets are either crowd-sourced or use outputs of existing models (e.g., the models behind ChatGPT). The ALPACA dataset created by Stanford is created through the outputs of models behind ChatGPT. Moreover, there are various crowd-sourced instruction datasets with open-source licenses, like oasst1 (created by thousands of people voluntarily!) or databricks/databricks-dolly-15k. If you’d like to create a dataset yourself, you can check out the dataset card of Dolly on how to create an instruction dataset. Models fine-tuned on these datasets can be distributed.
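Before fine-tuning, each record of an instruction dataset is usually rendered into a single training string. The sketch below works on records with the `instruction`, `context`, `response` and `category` fields used by databricks-dolly-15k. The `### Instruction` / `### Context` / `### Response` layout is a hypothetical Alpaca-style template chosen for illustration, not the exact template used by any particular model. The example record is also made up.

```python
def format_record(record: dict) -> str:
    """Render a Dolly-style record as one training string (hypothetical template)."""
    parts = [f"### Instruction:\n{record['instruction']}"]
    if record.get("context"):  # many records have an empty context
        parts.append(f"### Context:\n{record['context']}")
    parts.append(f"### Response:\n{record['response']}")
    return "\n\n".join(parts)


# Made-up record for illustration.
example = {
    "instruction": "Name the largest planet in the Solar System.",
    "context": "",
    "response": "Jupiter.",
    "category": "open_qa",
}
print(format_record(example))
```

Whatever template is used for training should also be used at inference time, because the model learns to expect that exact layout.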

You can find a comprehensive table of some open-source/open-access models below.

Tools in the Hugging Face Ecosystem for LLM Serving

Text Generation Inference

Response time and latency for concurrent users are a big challenge for serving these large models. To tackle this problem, Hugging Face has released text-generation-inference (TGI), an open-source serving solution for large language models built on Rust, Python, and gRPC. TGI is integrated into Hugging Face’s inference solutions, Inference Endpoints and Inference API. You can directly create an endpoint with optimized inference in a few clicks, or simply send a request to Hugging Face’s Inference API to benefit from it, instead of integrating TGI into your platform.
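If you do run TGI yourself, clients talk to it over HTTP. Its `/generate` route takes a JSON body with an `inputs` string and a `parameters` object such as `max_new_tokens`. The sketch below only builds that request with the Python standard library. The address `http://127.0.0.1:8080` is an assumption and should match whatever host and port your server is exposed on. Sending the request requires a running server, so that part is left commented out.

```python
import json
import urllib.request

# Assumed address of a locally running TGI server; adjust to your deployment.
TGI_URL = "http://127.0.0.1:8080/generate"


def build_generate_request(prompt, max_new_tokens=64, temperature=None):
    """Build (but do not send) a POST request for TGI's /generate route."""
    parameters = {"max_new_tokens": max_new_tokens}
    if temperature is not None:
        parameters["temperature"] = temperature
    body = json.dumps({"inputs": prompt, "parameters": parameters}).encode("utf-8")
    return urllib.request.Request(
        TGI_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_generate_request("What is deep learning?", max_new_tokens=20)

# Sending it requires a running server:
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read())["generated_text"])
```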

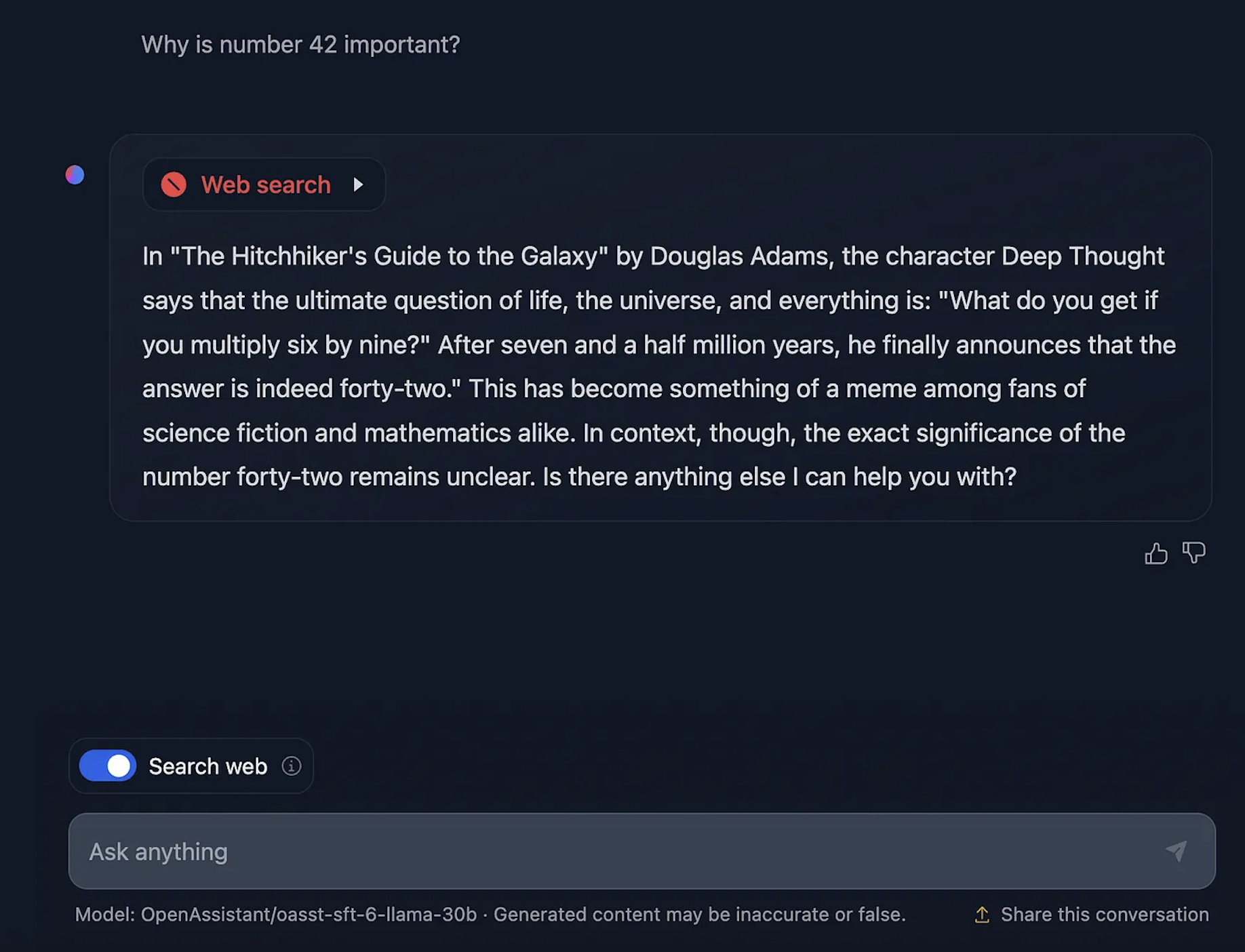

TGI currently powers HuggingChat, Hugging Face’s open-source chat UI for LLMs. This service currently uses one of OpenAssistant’s models as the backend model. You can chat as much as you want with HuggingChat and enable the Web search feature for responses that use elements from current web pages. You can also give feedback on each response so that model authors can train better models. The UI of HuggingChat is also open-sourced, and we are working on more features for HuggingChat, like generating images inside the chat.

Recently, a Docker template for HuggingChat was released for Hugging Face Spaces. This allows anyone to deploy and customize their own instance based on a large language model with only a few clicks. You can create your large language model instance here based on various LLMs, including Llama 2.

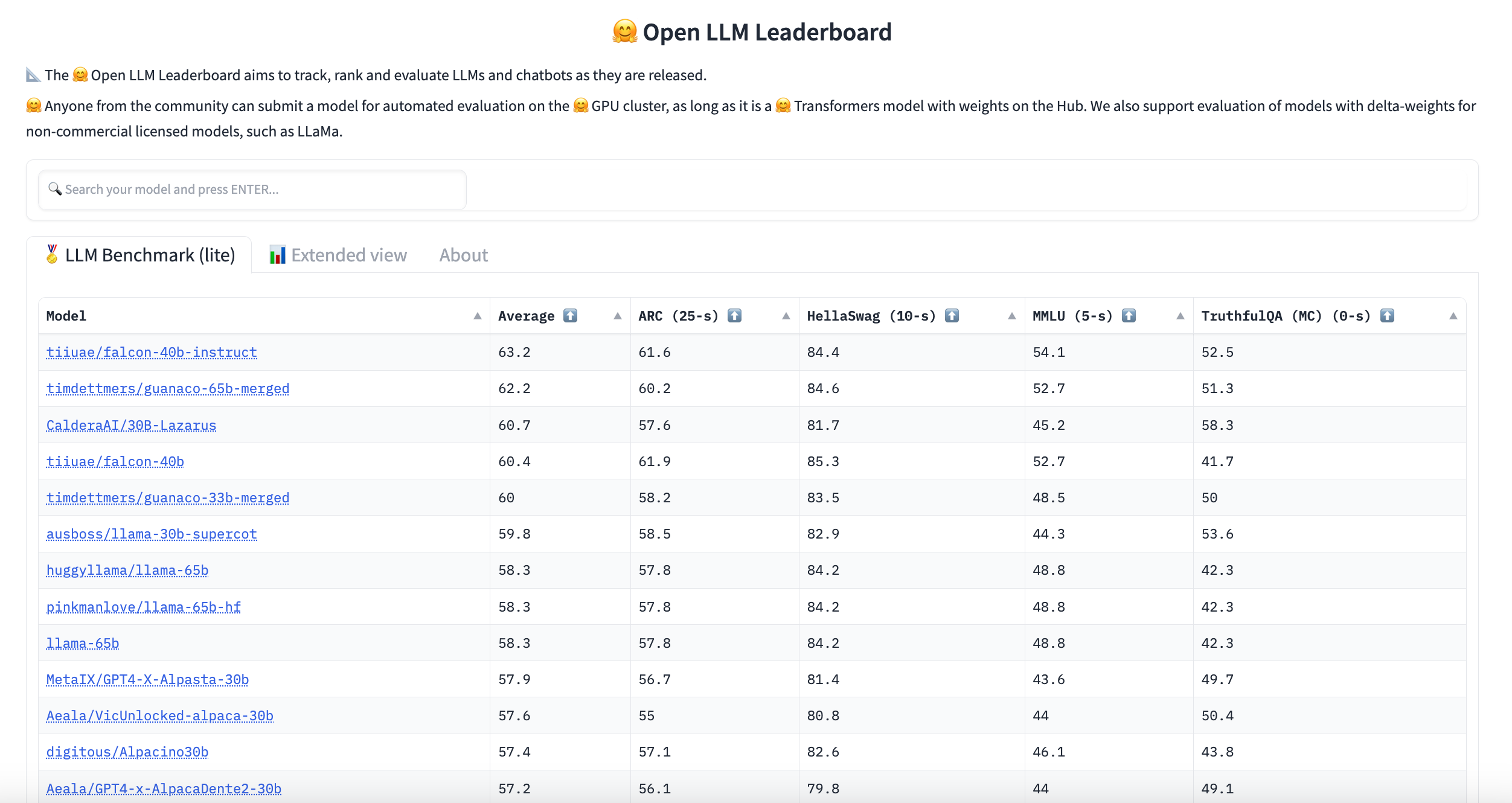

How to find the best model?

Hugging Face hosts an LLM leaderboard. This leaderboard is created by evaluating community-submitted models on text generation benchmarks on Hugging Face’s clusters. If you can’t find the language or domain you’re looking for, you can filter them here.

You can also check out the LLM Performance leaderboard, which aims to evaluate the latency and throughput of large language models available on the Hugging Face Hub.
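To make "latency" and "throughput" concrete, the sketch below derives three simple figures from timings of a single generation request:

- time to first token,
- average time per subsequent output token,
- overall tokens per second.

These are common definitions but not necessarily the exact metrics the LLM Performance leaderboard reports. The timing numbers in the example are made up.

```python
def generation_metrics(num_new_tokens, total_seconds, time_to_first_token):
    """Simple latency/throughput figures for one generation request."""
    if num_new_tokens < 2 or total_seconds <= time_to_first_token:
        raise ValueError("need at least two tokens and a positive decode time")
    decode_time = total_seconds - time_to_first_token
    return {
        "time_to_first_token": time_to_first_token,
        # Average gap between consecutive tokens after the first one.
        "time_per_output_token": decode_time / (num_new_tokens - 1),
        # End-to-end throughput for this single request.
        "tokens_per_second": num_new_tokens / total_seconds,
    }


# Made-up timings: 101 tokens in 5.2 s, first token after 0.2 s.
print(generation_metrics(101, 5.2, 0.2))
```

With many concurrent users, a server's aggregate throughput can be much higher than the single-request figure, because it batches requests together. That trade-off between per-user latency and total throughput is exactly what serving solutions like TGI are built to manage.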

Parameter Efficient Fine Tuning (PEFT)

If you’d like to fine-tune one of the existing large models on your instruction dataset, it is nearly impossible to do so on consumer hardware and later deploy the result (since the instruction models are the same size as the original checkpoints that are used for fine-tuning). PEFT is a library that allows you to apply parameter-efficient fine-tuning techniques. This means that rather than training the whole model, you can train a very small number of additional parameters, enabling much faster training with very little performance degradation. With PEFT, you can do low-rank adaptation (LoRA), prefix tuning, prompt tuning, and p-tuning.
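LoRA shows where the savings come from. The pretrained weight matrix `W` (size `d_out x d_in`) stays frozen. A trainable low-rank update `B @ A` is added next to it, with `A` of size `r x d_in` and `B` of size `d_out x r`, scaled by `alpha / r`. In PEFT these knobs correspond to `LoraConfig`'s `r` and `lora_alpha`. The pure-Python sketch below is for illustration only and is not how PEFT implements it. It counts the trainable parameters for one 4096 x 4096 projection and runs a tiny forward pass. Because `B` starts at zero in LoRA, the adapted layer initially behaves exactly like the frozen one.

```python
def lora_param_counts(d_out, d_in, rank):
    """Parameters in the full weight vs. in the LoRA factors A and B."""
    full = d_out * d_in
    lora = rank * d_in + d_out * rank  # A: rank x d_in, B: d_out x rank
    return full, lora


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W, A, B, x, alpha, rank):
    """h = W x + (alpha / rank) * B (A x), with W frozen and A, B trainable."""
    base = matvec(W, x)               # frozen pretrained path
    update = matvec(B, matvec(A, x))  # low-rank trainable path
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


full, lora = lora_param_counts(4096, 4096, rank=8)
print(full, lora, f"{100 * lora / full:.2f}%")  # 16777216 65536 0.39%

# Tiny example: with B initialized to zeros, the output equals W x.
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 1.0]]        # rank 1, d_in = 2
B_zero = [[0.0], [0.0]]  # d_out = 2, rank 1
print(lora_forward(W, A, B_zero, [3.0, 4.0], alpha=16, rank=1))  # [3.0, 4.0]
```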

You can check out the further resources below for more information on text generation.

Further Resources

- Together with AWS, we released TGI-based LLM deployment deep learning containers called LLM Inference Containers. Read about them here.

- Text Generation task page to find out more about the task itself.

- PEFT announcement blog post.

- Read about how Inference Endpoints use TGI here.

- Read about how to fine-tune Llama 2 with transformers and PEFT, and how to prompt it, here.