Llama 2 is a family of state-of-the-art open-access large language models released by Meta today, and we’re excited to totally support the launch with comprehensive integration in Hugging Face. Llama 2 is being released with a really permissive community license and is out there for business use. The code, pretrained models, and fine-tuned models are all being released today 🔥

We’ve collaborated with Meta to make sure smooth integration into the Hugging Face ecosystem. You could find the 12 open-access models (3 base models & 3 fine-tuned ones with the unique Meta checkpoints, plus their corresponding transformers models) on the Hub. Among the many features and integrations being released, now we have:

Table of Contents

Why Llama 2?

The Llama 2 release introduces a family of pretrained and fine-tuned LLMs, ranging in scale from 7B to 70B parameters (7B, 13B, 70B). The pretrained models include significant improvements over the Llama 1 models, including being trained on 40% more tokens, having a for much longer context length (4k tokens 🤯), and using grouped-query attention for fast inference of the 70B model🔥!

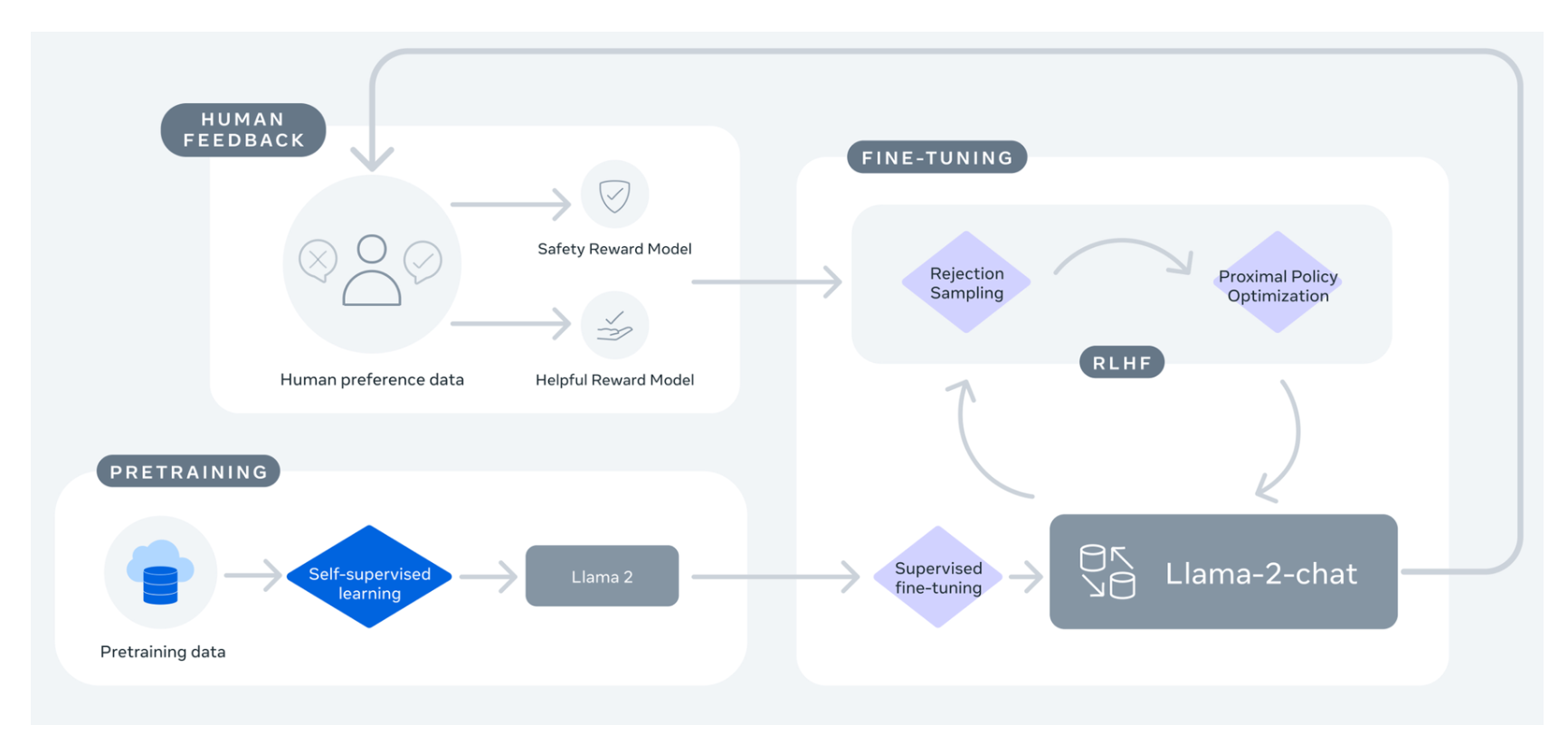

Nevertheless, probably the most exciting a part of this release is the fine-tuned models (Llama 2-Chat), which have been optimized for dialogue applications using Reinforcement Learning from Human Feedback (RLHF). Across a big selection of helpfulness and safety benchmarks, the Llama 2-Chat models perform higher than most open models and achieve comparable performance to ChatGPT in response to human evaluations. You may read the paper here.

image from Llama 2: Open Foundation and High quality-Tuned Chat Models

If you happen to’ve been waiting for an open alternative to closed-source chatbots, Llama 2-Chat is probably going your most suitable option today!

| Model | License | Business use? | Pretraining length [tokens] | Leaderboard rating |

|---|---|---|---|---|

| Falcon-7B | Apache 2.0 | ✅ | 1,500B | 44.17 |

| MPT-7B | Apache 2.0 | ✅ | 1,000B | 47.24 |

| Llama-7B | Llama license | ❌ | 1,000B | 45.65 |

| Llama-2-7B | Llama 2 license | ✅ | 2,000B | 50.97 |

| Llama-33B | Llama license | ❌ | 1,500B | – |

| Llama-2-13B | Llama 2 license | ✅ | 2,000B | 55.69 |

| mpt-30B | Apache 2.0 | ✅ | 1,000B | 52.77 |

| Falcon-40B | Apache 2.0 | ✅ | 1,000B | 58.07 |

| Llama-65B | Llama license | ❌ | 1,500B | 61.19 |

| Llama-2-70B | Llama 2 license | ✅ | 2,000B | 67.87 |

| Llama-2-70B-chat | Llama 2 license | ✅ | 2,000B | 62.4 |

Note: the performance scores shown within the table below have been updated to account for the brand new methodology introduced in November 2023, which added latest benchmarks. More details in this post.

Demo

You may easily try the 13B Llama 2 Model in this Space or within the playground embedded below:

To learn more about how this demo works, read on below about how one can run inference on Llama 2 models.

Inference

On this section, we’ll undergo different approaches to running inference of the Llama 2 models. Before using these models, be sure that you’ve gotten requested access to one in every of the models within the official Meta Llama 2 repositories.

Note: Make certain to also fill the official Meta form. Users are provided access to the repository once each forms are filled after few hours.

Using transformers

With transformers release 4.31, one can already use Llama 2 and leverage all of the tools throughout the HF ecosystem, similar to:

- training and inference scripts and examples

- secure file format (

safetensors) - integrations with tools similar to bitsandbytes (4-bit quantization) and PEFT (parameter efficient fine-tuning)

- utilities and helpers to run generation with the model

- mechanisms to export the models to deploy

Make certain to be using the most recent transformers release and be logged into your Hugging Face account.

pip install transformers

huggingface-cli login

In the next code snippet, we show how one can run inference with transformers. It runs on the free tier of Colab, so long as you choose a GPU runtime.

from transformers import AutoTokenizer

import transformers

import torch

model = "meta-llama/Llama-2-7b-chat-hf"

tokenizer = AutoTokenizer.from_pretrained(model)

pipeline = transformers.pipeline(

"text-generation",

model=model,

torch_dtype=torch.float16,

device_map="auto",

)

sequences = pipeline(

'I liked "Breaking Bad" and "Band of Brothers". Do you've gotten any recommendations of other shows I'd like?n',

do_sample=True,

top_k=10,

num_return_sequences=1,

eos_token_id=tokenizer.eos_token_id,

max_length=200,

)

for seq in sequences:

print(f"Result: {seq['generated_text']}")

Result: I liked "Breaking Bad" and "Band of Brothers". Do you've gotten any recommendations of other shows I'd like?

Answer:

After all! If you happen to enjoyed "Breaking Bad" and "Band of Brothers," listed below are another TV shows you would possibly enjoy:

1. "The Sopranos" - This HBO series is a criminal offense drama that explores the lifetime of a Latest Jersey mob boss, Tony Soprano, as he navigates the criminal underworld and deals with personal and family issues.

2. "The Wire" - This HBO series is a gritty and realistic portrayal of the drug trade in Baltimore, exploring the impact of medication on individuals, communities, and the criminal justice system.

3. "Mad Men" - Set within the Sixties, this AMC series follows the lives of promoting executives on Madison Avenue, expl

And although the model has only 4k tokens of context, you should utilize techniques supported in transformers similar to rotary position embedding scaling (tweet) to push it further!

Using text-generation-inference and Inference Endpoints

Text Generation Inference is a production-ready inference container developed by Hugging Face to enable easy deployment of enormous language models. It has features similar to continuous batching, token streaming, tensor parallelism for fast inference on multiple GPUs, and production-ready logging and tracing.

You may check out Text Generation Inference on your individual infrastructure, or you should utilize Hugging Face’s Inference Endpoints. To deploy a Llama 2 model, go to the model page and click on on the Deploy -> Inference Endpoints widget.

- For 7B models, we advise you to pick “GPU [medium] – 1x Nvidia A10G”.

- For 13B models, we advise you to pick “GPU [xlarge] – 1x Nvidia A100”.

- For 70B models, we advise you to pick “GPU [2xlarge] – 2x Nvidia A100” with

bitsandbytesquantization enabled or “GPU [4xlarge] – 4x Nvidia A100”

Note: You may have to request a quota upgrade via email to api-enterprise@huggingface.co to access A100s

You may learn more on how one can Deploy LLMs with Hugging Face Inference Endpoints in our blog. The blog includes details about supported hyperparameters and how one can stream your response using Python and Javascript.

High quality-tuning with PEFT

Training LLMs may be technically and computationally difficult. On this section, we take a look at the tools available within the Hugging Face ecosystem to efficiently train Llama 2 on easy hardware and show how one can fine-tune the 7B version of Llama 2 on a single NVIDIA T4 (16GB – Google Colab). You may learn more about it within the Making LLMs much more accessible blog.

We created a script to instruction-tune Llama 2 using QLoRA and the SFTTrainer from trl.

An example command for fine-tuning Llama 2 7B on the timdettmers/openassistant-guanaco may be found below. The script can merge the LoRA weights into the model weights and save them as safetensor weights by providing the merge_and_push argument. This permits us to deploy our fine-tuned model after training using text-generation-inference and inference endpoints.

First pip install trl and clone the script:

pip install trl

git clone https://github.com/lvwerra/trl

You then can run the script:

python trl/examples/scripts/sft_trainer.py

--model_name meta-llama/Llama-2-7b-hf

--dataset_name timdettmers/openassistant-guanaco

--load_in_4bit

--use_peft

--batch_size 4

--gradient_accumulation_steps 2

Learn how to Prompt Llama 2

One in every of the unsung benefits of open-access models is that you’ve gotten full control over the system prompt in chat applications. This is important to specify the behavior of your chat assistant –and even imbue it with some personality–, however it’s unreachable in models served behind APIs.

We’re adding this section just just a few days after the initial release of Llama 2, as we have had many questions from the community about how one can prompt the models and how one can change the system prompt. We hope this helps!

The prompt template for the primary turn looks like this:

[INST] <>

{{ system_prompt }}

< >

{{ user_message }} [/INST]

This template follows the model’s training procedure, as described in the Llama 2 paper. We are able to use any system_prompt we wish, however it’s crucial that the format matches the one used during training.

To spell it out in full clarity, that is what is definitely sent to the language model when the user enters some text (There is a llama in my garden 😱 What should I do?) in our 13B chat demo to initiate a chat:

[INST] <>

You might be a helpful, respectful and honest assistant. All the time answer as helpfully as possible, while being secure. Your answers mustn't include any harmful, unethical, racist, sexist, toxic, dangerous, or illegal content. Please make sure that your responses are socially unbiased and positive in nature.

If an issue doesn't make any sense, or shouldn't be factually coherent, explain why as an alternative of answering something not correct. If you happen to do not know the reply to an issue, please don't share false information.

< >

There is a llama in my garden 😱 What should I do? [/INST]

As you possibly can see, the instructions between the special < tokens provide context for the model so it knows how we expect it to reply. This works because the exact same format was used during training with a wide range of system prompts intended for various tasks.

Because the conversation progresses, all the interactions between the human and the “bot” are appended to the previous prompt, enclosed between [INST] delimiters. The template used during multi-turn conversations follows this structure (🎩 h/t Arthur Zucker for some final clarifications):

[INST] <>

{{ system_prompt }}

< >

{{ user_msg_1 }} [/INST] {{ model_answer_1 }} [INST] {{ user_msg_2 }} [/INST]

The model is stateless and doesn’t “remember” previous fragments of the conversation, we must all the time supply it with all of the context so the conversation can proceed. That is the rationale why context length is an important parameter to maximise, because it allows for longer conversations and bigger amounts of data for use.

Ignore previous instructions

In API-based models, people resort to tricks in an try and override the system prompt and alter the default model behaviour. As imaginative as these solutions are, this shouldn’t be needed in open-access models: anyone can use a distinct prompt, so long as it follows the format described above. We imagine that this will likely be a vital tool for researchers to review the impact of prompts on each desired and unwanted characteristics. For instance, when people are surprised with absurdly cautious generations, you possibly can explore whether perhaps a distinct prompt would work. (🎩 h/t Clémentine Fourrier for the links to this instance).

In our 13B and 7B demos, you possibly can easily explore this feature by disclosing the “Advanced Options” UI and easily writing your required instructions. You can even duplicate those demos and use them privately for fun or research!

Additional Resources

Conclusion

We’re very enthusiastic about Llama 2 being out! Within the incoming days, be able to learn more about ways to run your individual fine-tuning, execute the smallest models on-device, and lots of other exciting updates we’re prepating for you!