tl;dr: We’re using machine learning to detect the language of Hub datasets with no language metadata, and librarian-bots to make pull requests that add this metadata.

The Hugging Face Hub has become the repository where the community shares machine learning models, datasets, and applications. As the number of datasets grows, metadata becomes increasingly important as a tool for finding the right resource for your use case.

In this blog post, I’m excited to share some early experiments which seek to use machine learning to improve the metadata for datasets hosted on the Hugging Face Hub.

Language Metadata for Datasets on the Hub

There are currently ~50K public datasets on the Hugging Face Hub. Metadata about the language used in a dataset can be specified using a YAML field at the top of the dataset card.

Across all public datasets, 1,716 unique languages are specified via a language tag in their metadata. Note that some of these will be the result of the same language being specified in different ways, e.g. en vs eng vs english vs English.

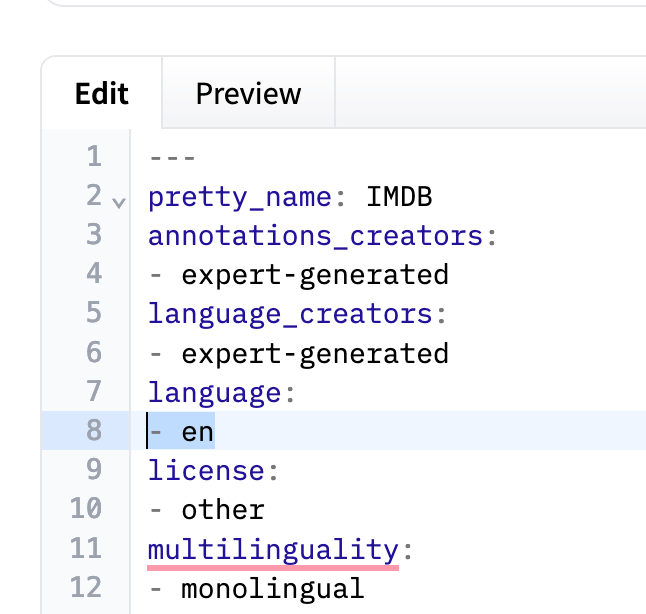

For example, the IMDB dataset specifies en in the YAML metadata (indicating English):

Section of the YAML metadata for the IMDB dataset

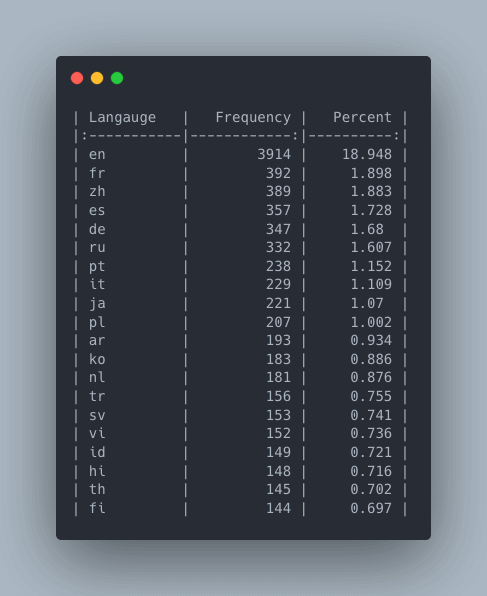

It is perhaps unsurprising that English is by far the most common language for datasets on the Hub, with around 19% of datasets on the Hub listing their language as en (not including any variations of en, so the actual percentage is likely much higher).

The frequency and percentage frequency for datasets on the Hugging Face Hub

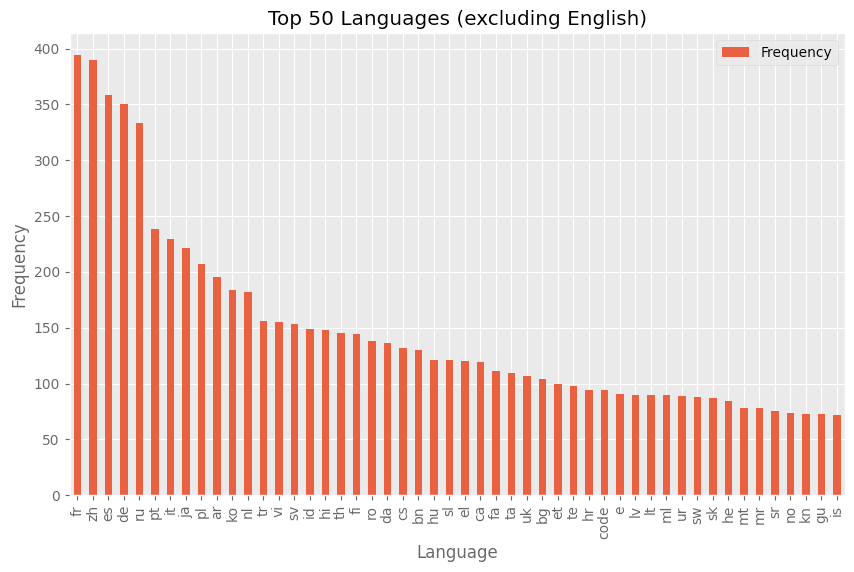

What does the distribution of languages look like if we exclude English? We can see that there is a grouping of a few dominant languages, and after that there is a fairly smooth fall in the frequencies at which languages appear.

Distribution of language tags for datasets on the hub excluding English.

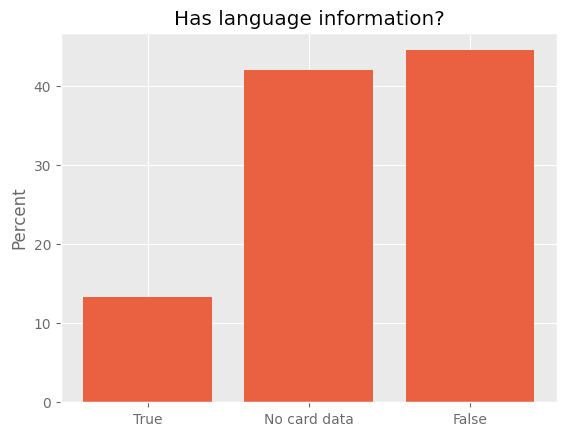

However, there is a major caveat to this. Most datasets (around 87%) do not specify the language used; only approximately 13% of datasets include language information in their metadata.

The percentage of datasets which have language metadata. True indicates language metadata is specified, False means no language data is listed. No card data means that there is no metadata or it couldn’t be loaded by the `huggingface_hub` Python library.

Why is Language Metadata Essential?

Language metadata can be a vital tool for finding relevant datasets. The Hugging Face Hub allows you to filter datasets by language. For example, if we want to find datasets with Dutch language data, we can use a filter on the Hub to include only datasets with Dutch data.

Currently this filter returns 184 datasets. However, there are datasets on the Hub which include Dutch but don’t specify this in the metadata. These datasets become harder to find, particularly as the number of datasets on the Hub grows.

Many people want to be able to find datasets for a particular language. One of the major barriers to training good open source LLMs for a particular language is a lack of high quality training data.

If we switch to the task of finding relevant machine learning models, knowing what languages were included in the training data for a model can help us find models for the language we are interested in. This relies on the dataset specifying this information.

Finally, knowing what languages are represented on the Hub (and which are not) helps us understand the language biases of the Hub and helps inform community efforts to address gaps in particular languages.

Predicting the Languages of Datasets Using Machine Learning

We’ve already seen that many of the datasets on the Hugging Face Hub haven’t included metadata for the language used. However, since these datasets are already shared openly, perhaps we can look at the dataset and try to identify the language using machine learning.

Getting the Data

One way we could access some examples from a dataset is by using the datasets library to download the dataset, e.g.

```python
from datasets import load_dataset

# Downloads the full dataset to the local machine.
dataset = load_dataset("biglam/on_the_books")
```

However, for some of the datasets on the Hub, we might be keen not to download the whole dataset. We could instead try to load a sample of the dataset. However, depending on how the dataset was created, we might still end up downloading more data than we need onto the machine we’re working on.

Luckily, many datasets on the Hub are available via the dataset viewer API. It allows us to access datasets hosted on the Hub without downloading the dataset locally. The API powers the dataset viewer you will see for many datasets hosted on the Hub.

For this first experiment with predicting language for datasets, we define a list of column names and data types likely to contain textual content: for example, text or prompt column names and string features are likely to be relevant, whereas image features are not. This means we can avoid predicting the language for datasets where language information is less relevant, for example, image classification datasets. We use the dataset viewer API to get 20 rows of text data to pass to a machine learning model (we could modify this to take more or fewer examples from the dataset).

This approach means that for the majority of datasets on the Hub we can quickly request the contents of likely text columns for the first 20 rows in a dataset.
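As a rough sketch of this step, the snippet below requests the first rows of one split and keeps only values from likely text columns. The endpoint URL, the `LIKELY_TEXT_COLUMNS` allow-list, and both function names are assumptions made for illustration, and the response layout (a `features` list plus a `rows` list) is assumed to match what the dataset viewer API returns; this is not necessarily the code used for the experiment.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed endpoint of the dataset viewer API that returns the first rows of a split.
VIEWER_API = "https://datasets-server.huggingface.co/first-rows"

# Hypothetical allow-list of column names likely to hold text.
LIKELY_TEXT_COLUMNS = {"text", "prompt"}


def fetch_first_rows(dataset, config, split):
    """Request the first rows of one split without downloading the dataset."""
    query = urlencode({"dataset": dataset, "config": config, "split": split})
    with urlopen(f"{VIEWER_API}?{query}", timeout=30) as response:
        return json.load(response)


def extract_text_samples(payload, max_rows=20):
    """Collect non-empty values from likely text columns in the first max_rows rows."""
    text_columns = [
        feature["name"]
        for feature in payload.get("features", [])
        if feature["name"] in LIKELY_TEXT_COLUMNS
        and isinstance(feature.get("type"), dict)
        and feature["type"].get("dtype") == "string"
    ]
    samples = []
    for item in payload.get("rows", [])[:max_rows]:
        for column in text_columns:
            value = item["row"].get(column)
            if isinstance(value, str) and value.strip():
                samples.append(value)
    return samples
```

Separating the network call from the column filtering keeps the filtering logic easy to check against a hand-written payload.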

Predicting the Language of a Dataset

Once we have some examples of text from a dataset, we need to predict the language. There are various options here, but for this work, we used the facebook/fasttext-language-identification fastText model created by Meta as part of the No Language Left Behind work. This model can detect 217 languages, which will likely represent the majority of languages for datasets hosted on the Hub.

We pass 20 examples to the model representing rows from a dataset. This results in 20 individual language predictions (one per row) for each dataset.
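A minimal sketch of this prediction step might look like the following. It assumes the `fasttext` and `huggingface_hub` packages are installed and that the model weights are stored as `model.bin` in the model repository; the function names are hypothetical. Newlines are collapsed first because fastText predicts one line of text at a time.

```python
def normalise_for_fasttext(text):
    """Collapse newlines and repeated whitespace into single spaces."""
    return " ".join(text.split())


def predict_languages(texts):
    """Return one (label, score) pair per input text."""
    # Imported lazily so the helper above works without these packages installed.
    import fasttext
    from huggingface_hub import hf_hub_download

    # Assumption: the weights file in the model repository is named model.bin.
    model_path = hf_hub_download(
        repo_id="facebook/fasttext-language-identification",
        filename="model.bin",
    )
    model = fasttext.load_model(model_path)

    predictions = []
    for text in texts:
        # For a single string, predict returns a tuple of labels and an array of scores.
        labels, scores = model.predict(normalise_for_fasttext(text))
        predictions.append((labels[0], float(scores[0])))
    return predictions
```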

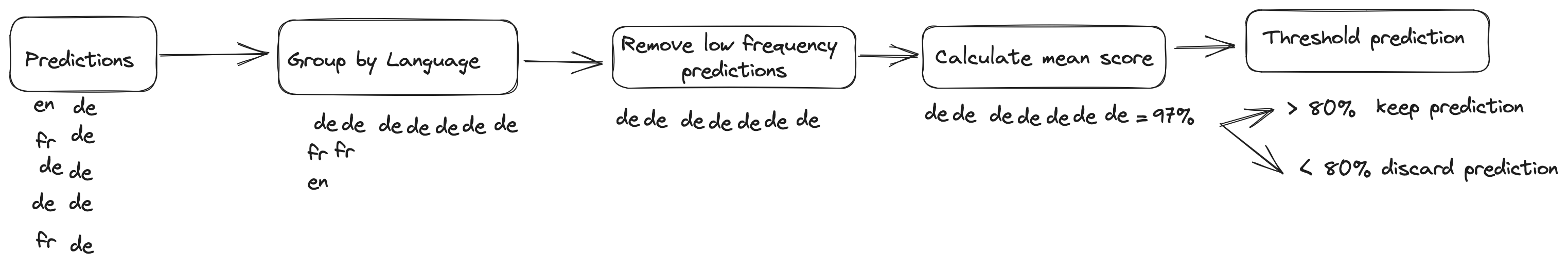

Once we have these predictions, we do some additional filtering to determine whether we will accept the predictions as a metadata suggestion. This roughly consists of:

- Grouping the predictions for each dataset by language: some datasets return predictions for multiple languages. We group these predictions by the language predicted, i.e. if a dataset returns predictions for English and Dutch, we group the English predictions together and the Dutch predictions together.

- For datasets with multiple languages predicted, we count how many predictions we have for each language. If a language is predicted less than 20% of the time, we discard this prediction, e.g. if we have 18 predictions for English and only 2 for Dutch, we discard the Dutch predictions.

- We calculate the mean score for all predictions for a language. If the mean score associated with a language’s predictions is below 80%, we discard this prediction.

Diagram showing how predictions are handled.
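The filtering steps above can be sketched as a single function. This is an illustrative version that assumes predictions arrive as `(label, score)` pairs with scores between 0 and 1; the function name and the parameter names `min_share` and `min_mean_score` are hypothetical, with defaults taken from the 20% and 80% thresholds described above.

```python
from collections import defaultdict
from statistics import mean


def filter_predictions(predictions, min_share=0.2, min_mean_score=0.8):
    """Return {label: mean_score} for the languages that pass both thresholds."""
    # Step 1: group the scores by predicted language.
    scores_by_language = defaultdict(list)
    for label, score in predictions:
        scores_by_language[label].append(score)

    total = len(predictions)
    accepted = {}
    for label, scores in scores_by_language.items():
        # Step 2: discard languages predicted for less than min_share of the rows.
        if len(scores) / total < min_share:
            continue
        # Step 3: discard languages whose mean score is below min_mean_score.
        mean_score = mean(scores)
        if mean_score < min_mean_score:
            continue
        accepted[label] = mean_score
    return accepted
```

With 18 confident English predictions and 2 Dutch ones, only the English label is kept, since Dutch accounts for 10% of the rows.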

Once we’ve done this filtering, we have a further step of deciding how to use these predictions. The fastText language prediction model returns predictions as an ISO 639-3 code (an international standard for language codes) along with a script type, e.g. kor_Hang is the ISO 639-3 language code for Korean (kor) plus Hangul script (Hang), an ISO 15924 code representing the script of a language.

We discard the script information since this isn’t currently captured consistently as metadata on the Hub and, where possible, we convert the language prediction returned by the model from ISO 639-3 to ISO 639-1 language codes. This is largely done because these language codes have better support in the Hub UI for navigating datasets.

For some ISO 639-3 codes, there is no ISO 639-1 equivalent. For these cases we manually specify a mapping if we deem it to make sense, for example Standard Arabic (arb) is mapped to Arabic (ar). Where an obvious mapping is not possible, we currently don’t suggest metadata for this dataset. In future iterations of this work we may take a different approach. It is important to recognise that this approach does come with downsides, since it reduces the variety of languages which can be suggested and also relies on subjective judgments about which languages can be mapped to others.
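To make the conversion concrete, here is a small sketch that strips the script from a fastText label and looks up a two-letter code. Both tables are tiny hand-written samples for illustration, not the mapping actually used, and the function name is hypothetical; only the arb to ar entry comes from the description above.

```python
# Illustrative subset of ISO 639-3 codes that have an ISO 639-1 equivalent.
ISO_639_3_TO_1 = {"eng": "en", "nld": "nl", "kor": "ko", "fra": "fr", "deu": "de"}

# Manually specified mappings for codes without a direct ISO 639-1 equivalent.
MANUAL_MAPPING = {"arb": "ar"}

LABEL_PREFIX = "__label__"


def to_hub_language_code(label):
    """Convert a label such as '__label__kor_Hang' to 'ko', or None if unmapped."""
    if label.startswith(LABEL_PREFIX):
        label = label[len(LABEL_PREFIX):]
    # Split 'kor_Hang' into the language code and the script, then drop the script.
    language, _, _script = label.partition("_")
    return ISO_639_3_TO_1.get(language) or MANUAL_MAPPING.get(language)
```

Returning `None` for unmapped codes mirrors the choice to make no suggestion when no obvious mapping exists, as for a language like Cebuano (ceb), which has no ISO 639-1 code.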

But the process doesn’t stop here. After all, what use is predicting the language of the datasets if we can’t share that information with the rest of the community?

Using Librarian-Bot to Update Metadata

To ensure this valuable language metadata is incorporated back into the Hub, we turn to Librarian-Bot! Librarian-Bot takes the language predictions generated by Meta’s facebook/fasttext-language-identification fastText model and opens pull requests to add this information to the metadata of each respective dataset.

This approach not only updates the datasets with language information, but also does so swiftly and efficiently, without requiring manual work from humans. If the owner of a repo decides to approve and merge the pull request, then the language metadata becomes available for all users, significantly enhancing the usability of the Hugging Face Hub. You can keep track of what the librarian-bot is doing here!
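One way such a pull request could be opened is with the `metadata_update` helper from `huggingface_hub`, sketched below. This is an assumption about a possible approach, not a description of how Librarian-Bot is implemented; both function names are hypothetical, and the call needs an authenticated Hub token to run.

```python
def build_language_metadata(language_codes):
    """Build the metadata fragment to merge into the dataset card's YAML block."""
    return {"language": sorted(set(language_codes))}


def open_language_pull_request(repo_id, language_codes):
    """Open a pull request adding language metadata to a dataset repository."""
    # Imported lazily so the builder above works without huggingface_hub installed.
    from huggingface_hub import metadata_update

    return metadata_update(
        repo_id,
        build_language_metadata(language_codes),
        repo_type="dataset",
        overwrite=False,  # do not replace language metadata that already exists
        create_pr=True,   # propose the change instead of committing directly
    )
```

Opening a pull request rather than committing directly leaves the final decision with the repository owner.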

Next Steps

As the number of datasets on the Hub grows, metadata becomes increasingly important. Language metadata, in particular, can be incredibly valuable for identifying the right dataset for your use case.

With the help of the dataset viewer API and the Librarian-Bots, we can update our dataset metadata at a scale that wouldn’t be possible manually. As a result, we’re enriching the Hub and making it an even more powerful tool for data scientists, linguists, and AI enthusiasts around the world.

As the machine learning librarian at Hugging Face, I continue to explore opportunities for automatic metadata enrichment for machine learning artefacts hosted on the Hub. Feel free to reach out (daniel at thiswebsite dot co) if you have ideas or want to collaborate on this effort!