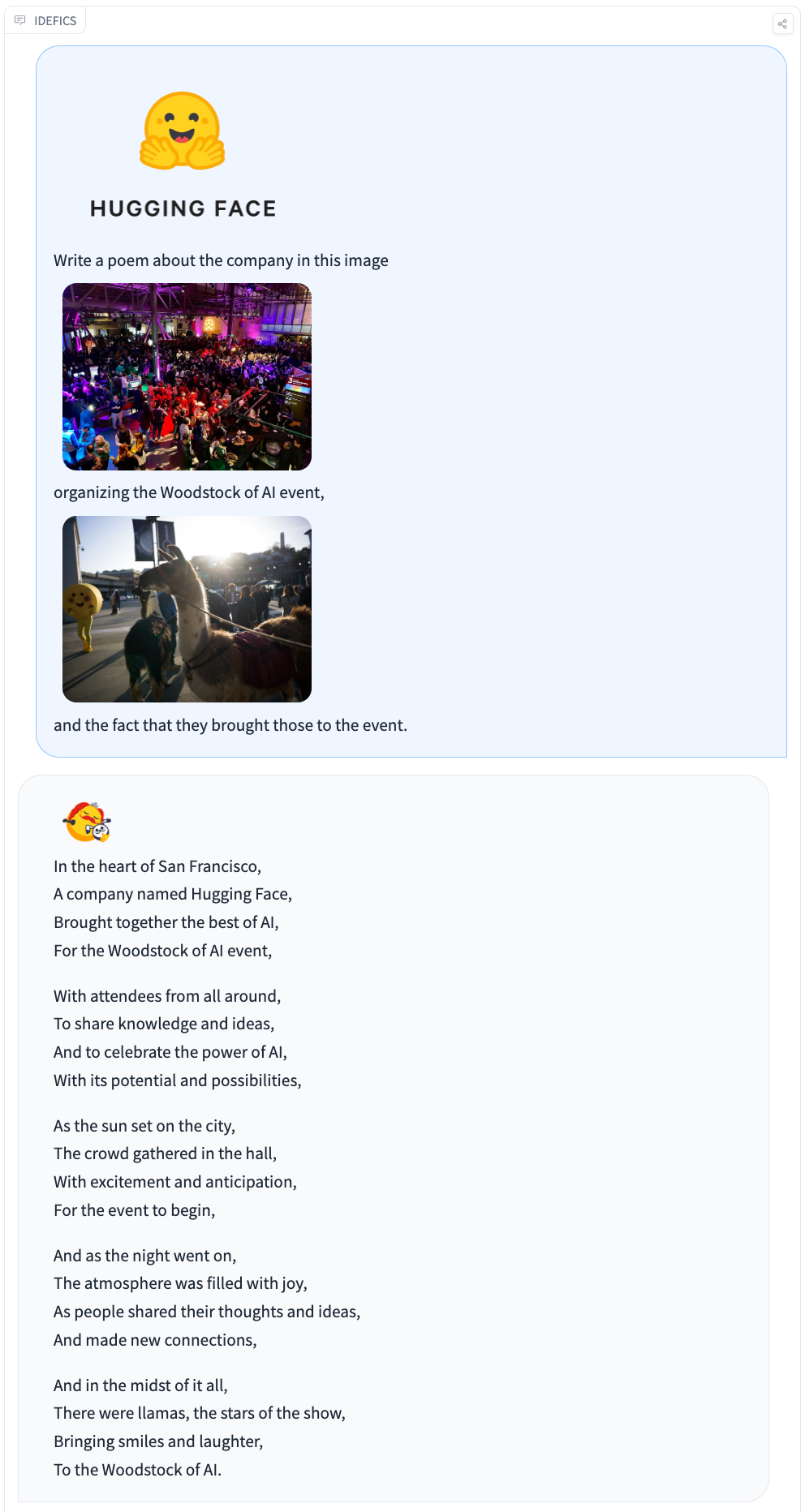

We’re excited to release IDEFICS (Image-aware Decoder Enhanced à la Flamingo with Interleaved Cross-attentionS), an open-access visual language model. IDEFICS is based on Flamingo, a state-of-the-art visual language model initially developed by DeepMind, which has not been released publicly. Like GPT-4, the model accepts arbitrary sequences of image and text inputs and produces text outputs. IDEFICS is built solely on publicly available data and models (LLaMA v1 and OpenCLIP) and comes in two variants, the base version and the instructed version, each available in 9 billion and 80 billion parameter sizes.

The development of state-of-the-art AI models should be more transparent. Our goal with IDEFICS is to reproduce, and provide the AI community with, systems that match the capabilities of large proprietary models like Flamingo. To that end, we took important steps toward bringing transparency to these AI systems: we used only publicly available data, we provided tooling to explore training datasets, we shared technical lessons and mistakes from building such artifacts, and we assessed the model’s harmfulness by adversarially prompting it before releasing it. We hope that IDEFICS will serve as a solid foundation for more open research in multimodal AI systems, alongside models like OpenFlamingo, another open reproduction of Flamingo at the 9 billion parameter scale.

Check out the demo and the models on the Hub!

What’s IDEFICS?

IDEFICS is an 80 billion parameter multimodal model that accepts sequences of images and texts as input and generates coherent text as output. It can answer questions about images, describe visual content, create stories grounded in multiple images, and so on.
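To make "sequences of images and texts" concrete, here is a minimal, library-free sketch of how an interleaved input could be flattened: each image is replaced by a placeholder marker in the text stream, while the images themselves are kept in order. The helper names, the `<image>` placeholder string, and the rule that URLs denote images are assumptions for illustration only; this is not the actual IDEFICS processor.

```python
from typing import List, Tuple


def is_image(item: str) -> bool:
    # Assumption for this sketch: any item starting with http(s):// is an image URL.
    return item.startswith(("http://", "https://"))


def split_interleaved(sequence: List[str], placeholder: str = "<image>") -> Tuple[str, List[str]]:
    """Flatten an interleaved text/image sequence (hypothetical helper).

    Returns one text string with `placeholder` at each image position,
    plus the images in the order they appeared.
    """
    text_parts: List[str] = []
    images: List[str] = []
    for item in sequence:
        if is_image(item):
            images.append(item)
            text_parts.append(placeholder)
        else:
            text_parts.append(item)
    return "".join(text_parts), images


seq = ["Compare ", "https://example.com/a.jpg", " with ", "https://example.com/b.jpg", "."]
text, images = split_interleaved(seq)
print(text)    # Compare <image> with <image>.
print(images)  # ['https://example.com/a.jpg', 'https://example.com/b.jpg']
```

Keeping the placeholder at the exact position of each image is what lets a model relate each piece of text to the image that precedes it.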

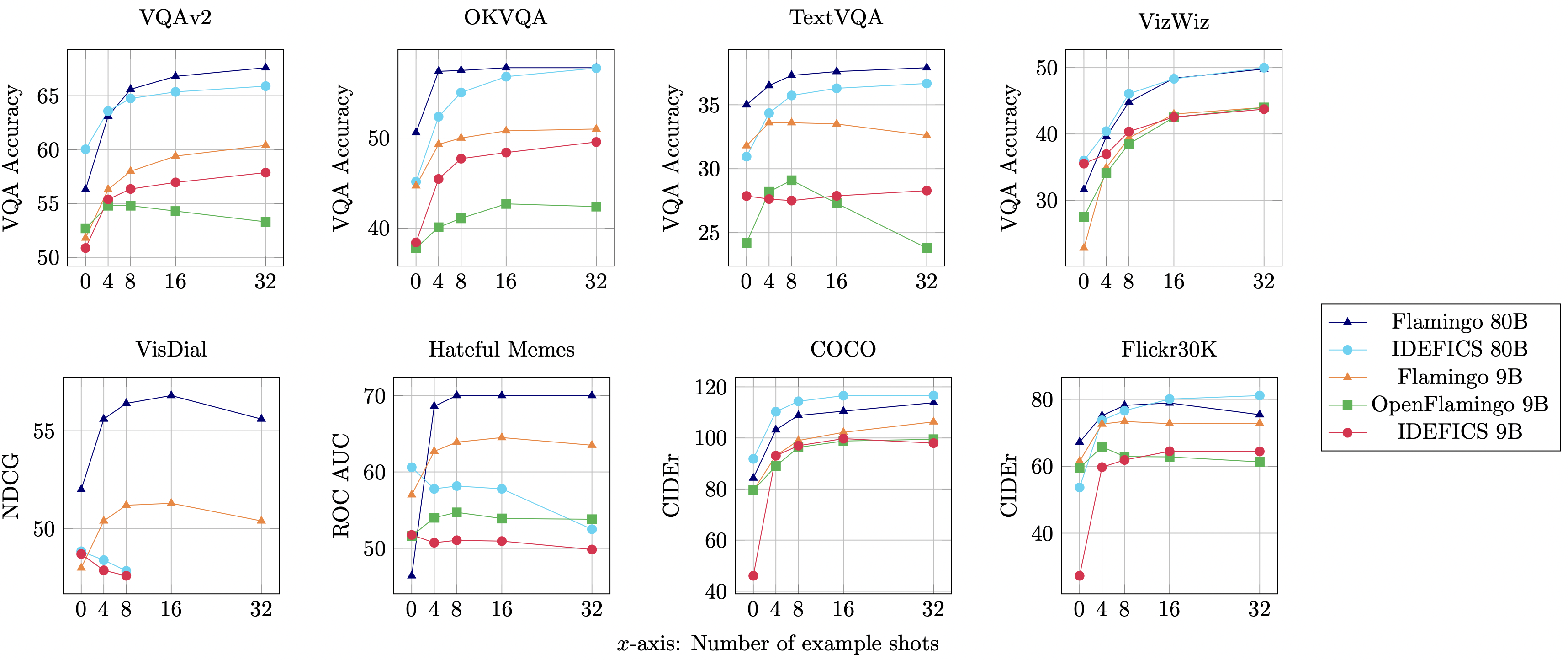

IDEFICS is an open-access reproduction of Flamingo and is comparable in performance with the original closed-source model across various image-text understanding benchmarks. It comes in two sizes: 80 billion parameters and 9 billion parameters.

We also provide fine-tuned versions, idefics-80B-instruct and idefics-9B-instruct, adapted for conversational use cases.
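Two sizes times two variants (base and instruct) gives four checkpoints. The sketch below builds their Hub repository IDs, taking `HuggingFaceM4/idefics-9b-instruct` from the code sample in this post and assuming the other three follow the same lowercase naming pattern; `idefics_checkpoint` is a hypothetical helper, not part of any library.

```python
from typing import List


def idefics_checkpoint(size: str, instruct: bool) -> str:
    """Return the assumed Hub repository ID for an IDEFICS checkpoint (hypothetical helper)."""
    if size not in ("9b", "80b"):
        raise ValueError(f"unknown size {size!r}; expected '9b' or '80b'")
    suffix = "-instruct" if instruct else ""
    return f"HuggingFaceM4/idefics-{size}{suffix}"


# Enumerate every size/variant combination.
ALL_CHECKPOINTS: List[str] = [
    idefics_checkpoint(size, instruct) for size in ("9b", "80b") for instruct in (False, True)
]
print(ALL_CHECKPOINTS)
# ['HuggingFaceM4/idefics-9b', 'HuggingFaceM4/idefics-9b-instruct', 'HuggingFaceM4/idefics-80b', 'HuggingFaceM4/idefics-80b-instruct']
```

For chat-style use, the instruct checkpoints are the natural choice; the base checkpoints are better suited as a starting point for further fine-tuning.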

Training Data

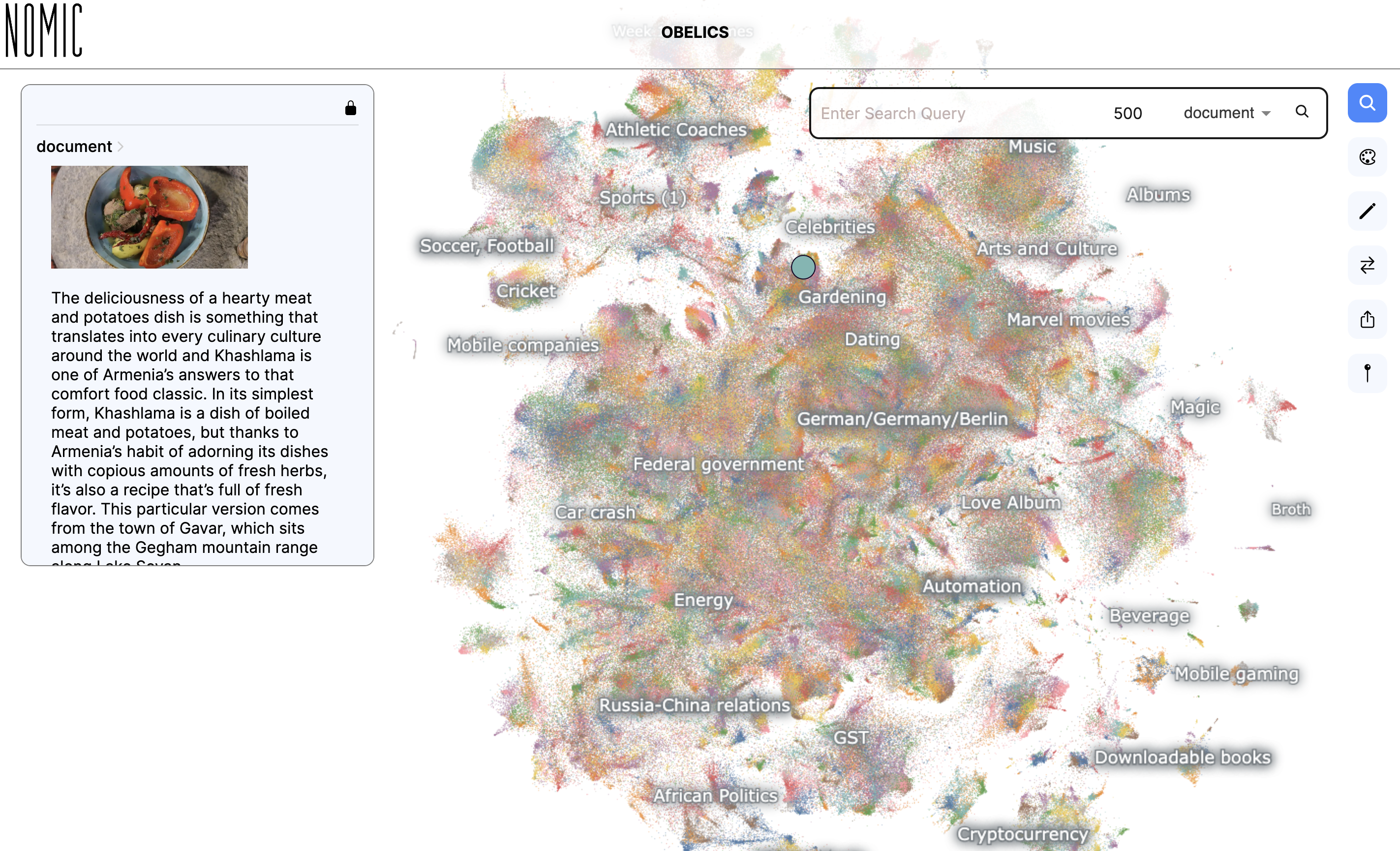

IDEFICS was trained on a combination of openly available datasets (Wikipedia, Public Multimodal Dataset, and LAION) as well as a new 115B-token dataset called OBELICS that we created. OBELICS consists of 141 million interleaved image-text documents scraped from the web and comprises 353 million images.
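A quick back-of-the-envelope calculation puts these OBELICS figures in per-document terms. Because the headline numbers are rounded, the resulting averages are approximate.

```python
# Headline OBELICS statistics as stated in the text above (rounded values).
num_documents = 141_000_000
num_images = 353_000_000
num_tokens = 115_000_000_000

images_per_doc = num_images / num_documents
tokens_per_doc = num_tokens / num_documents
tokens_per_image = num_tokens / num_images

print(f"~{images_per_doc:.1f} images per document")    # ~2.5 images per document
print(f"~{tokens_per_doc:.0f} tokens per document")     # ~816 tokens per document
print(f"~{tokens_per_image:.0f} tokens per image")      # ~326 tokens per image
```

In other words, a typical OBELICS document interleaves a couple of images with a few hundred tokens of surrounding text.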

We provide an interactive visualization of OBELICS that lets you explore the content of the dataset using Nomic AI.

The details of IDEFICS’ architecture, training methodology, and evaluations, as well as information about the dataset, are available in the model card and our research paper. Additionally, we have documented technical insights and learnings from the model’s training, offering valuable perspective on IDEFICS’ development.

Ethical evaluation

At the outset of this project, through a set of discussions, we developed an ethical charter to help steer decisions made throughout the project. This charter sets out values, including being self-critical, transparent, and fair, which we have sought to pursue in how we approached the project and the release of the models.

As part of the release process, we internally evaluated the model for potential biases by adversarially prompting it with images and text that might elicit undesirable responses (a process known as red teaming).

Please try out IDEFICS with the demo, check out the corresponding model cards and dataset card, and let us know your feedback using the community tab! We’re committed to improving these models and making large multimodal AI models accessible to the machine learning community.

License

The model is built on top of two pre-trained models: laion/CLIP-ViT-H-14-laion2B-s32B-b79K and huggyllama/llama-65b. The first was released under an MIT license, while the second was released under a specific non-commercial license focused on research purposes. As such, users should comply with that license by applying directly through Meta’s form.

The two pre-trained models are connected to each other with newly initialized parameters that we train. These are not based on either of the two frozen base models forming the composite model. We release the additional weights we trained under an MIT license.
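In Flamingo, the newly trained components that connect the frozen vision encoder to the frozen language model include gated cross-attention layers: text hidden states attend to image features, and the result is added back through a `tanh` gate initialized at zero, so at initialization the composite model behaves exactly like the original language model. Assuming IDEFICS follows this Flamingo design (this post only says that newly initialized parameters connect the two models), the pure-Python sketch below shows that gating idea for a single head. Learned query/key/value projections are deliberately omitted, and all names are illustrative.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Single-head scaled dot-product attention from one text position to image features."""
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([dot(query, k) * scale for k in keys])
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]


def gated_cross_attention(hidden: Vector, image_feats: List[Vector], gate: float) -> Vector:
    """Residual update hidden + tanh(gate) * attention(hidden, image_feats).

    `hidden` stands in for a frozen language-model state and `image_feats` for
    frozen vision-encoder outputs; only `gate` (plus the projections omitted
    here) would be trained.
    """
    attended = cross_attend(hidden, image_feats, image_feats)
    g = math.tanh(gate)
    return [h + g * a for h, a in zip(hidden, attended)]


hidden = [0.5, -1.0, 2.0]
image_feats = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
print(gated_cross_attention(hidden, image_feats, gate=0.0))  # [0.5, -1.0, 2.0]
print(gated_cross_attention(hidden, image_feats, gate=1.0))  # image information now mixed in
```

With `gate=0.0` the output equals the input, which is why training can start from the unchanged language model and gradually learn how much visual information to let through.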

Getting Started with IDEFICS

IDEFICS models are available on the Hugging Face Hub and are supported in the latest transformers version. Here’s a code sample to try it out:

```python
import torch
from transformers import IdeficsForVisionText2Text, AutoProcessor

device = "cuda" if torch.cuda.is_available() else "cpu"

checkpoint = "HuggingFaceM4/idefics-9b-instruct"
model = IdeficsForVisionText2Text.from_pretrained(checkpoint, torch_dtype=torch.bfloat16).to(device)
processor = AutoProcessor.from_pretrained(checkpoint)

# The model takes an arbitrary interleaved sequence of text strings and images
# (given here as URLs). "<end_of_utterance>" marks the end of each turn.
prompts = [
    [
        "User: What is in this image?",
        "https://upload.wikimedia.org/wikipedia/commons/8/86/Id%C3%A9fix.JPG",
        "<end_of_utterance>",
        "\nAssistant: This picture depicts Idefix, the dog of Obelix in Asterix and Obelix. Idefix is running on the ground.<end_of_utterance>",
        "\nUser:",
        "https://static.wikia.nocookie.net/asterix/images/2/25/R22b.gif/revision/latest?cb=20110815073052",
        "And who is that?<end_of_utterance>",
        "\nAssistant:",
    ],
]

# Batched mode: the prompt already contains its end-of-utterance markers.
inputs = processor(prompts, add_end_of_utterance_token=False, return_tensors="pt").to(device)

# Stop at the end-of-utterance token and never generate image tokens.
exit_condition = processor.tokenizer("<end_of_utterance>", add_special_tokens=False).input_ids
bad_words_ids = processor.tokenizer(["<image>", "<fake_token_around_image>"], add_special_tokens=False).input_ids

generated_ids = model.generate(**inputs, eos_token_id=exit_condition, bad_words_ids=bad_words_ids, max_length=100)
generated_text = processor.batch_decode(generated_ids, skip_special_tokens=True)

for i, t in enumerate(generated_text):
    print(f"{i}:\n{t}\n")
```
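Writing the `User:`/`Assistant:` layout by hand gets tedious for longer conversations. The dependency-free sketch below builds a prompt list in that layout from `(role, items)` pairs and pulls the final assistant reply out of a decoded string. Both helpers are hypothetical conveniences, not part of transformers, and they assume the decoded output still contains the `Assistant:` markers from the prompt.

```python
from typing import List, Sequence, Tuple

EOU = "<end_of_utterance>"  # end-of-utterance marker used in chat-style IDEFICS prompts


def build_chat_prompt(turns: Sequence[Tuple[str, List[str]]]) -> List[str]:
    """Turn (role, items) pairs into one interleaved prompt list (hypothetical helper).

    `items` mixes text strings and image URLs. Every completed turn is closed
    with EOU, and the prompt ends with an open "Assistant:" turn so the model
    continues from there.
    """
    prompt: List[str] = []
    for index, (role, items) in enumerate(turns):
        # The first turn starts the prompt; later turns begin on a new line.
        prompt.append(f"{role}:" if index == 0 else f"\n{role}:")
        prompt.extend(items)
        prompt.append(EOU)
    prompt.append("\nAssistant:")
    return prompt


def last_assistant_reply(decoded: str) -> str:
    """Return the text after the final "Assistant:" marker, minus any EOU marker."""
    reply = decoded.rsplit("Assistant:", 1)[-1]
    return reply.replace(EOU, "").strip()


prompt = build_chat_prompt([("User", [" What is in this image?", "https://example.com/dog.jpg"])])
print(prompt)
# ['User:', ' What is in this image?', 'https://example.com/dog.jpg', '<end_of_utterance>', '\nAssistant:']
print(last_assistant_reply("User: What is in this image?\nAssistant: A small white dog."))
# A small white dog.
```

A list built this way can be used as one element of the batched `prompts` list above, and `last_assistant_reply` can be applied to each string returned by `batch_decode`.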