In the rapidly evolving field of Natural Language Processing (NLP), Large Language Models (LLMs) have become central to AI’s ability to understand and generate human language. However, a significant challenge that persists is their tendency to hallucinate, i.e., to produce content that may not align with real-world facts or the user’s input. With the constant release of new open-source models, identifying the most reliable ones, particularly in terms of their propensity to generate hallucinated content, becomes crucial.

The Hallucinations Leaderboard aims to address this problem: it is a comprehensive platform that evaluates a wide range of LLMs against benchmarks specifically designed to assess hallucination-related issues via in-context learning.

UPDATE: We released a paper on this project; you can find it on arXiv: The Hallucinations Leaderboard - An Open Effort to Measure Hallucinations in Large Language Models. Here’s also the Hugging Face paper page for community discussions.

The Hallucinations Leaderboard is an open and ongoing project: if you have any ideas, comments, or feedback, or if you would like to contribute to this project (e.g., by modifying the current tasks, proposing new tasks, or providing computational resources), please reach out!

What are Hallucinations?

Hallucinations in LLMs can be broadly categorised into factuality and faithfulness hallucinations (reference).

Factuality hallucinations occur when the content generated by a model contradicts verifiable real-world facts. For instance, a model might erroneously state that Charles Lindbergh was the first person to walk on the moon in 1951, despite it being a well-known fact that Neil Armstrong earned this distinction in 1969 during the Apollo 11 mission. This type of hallucination can disseminate misinformation and undermine the model’s credibility.

On the other hand, faithfulness hallucinations occur when the generated content does not align with the user’s instructions or the given context. An example of this would be a model summarising a news article about a conflict and incorrectly changing the actual event date from October 2023 to October 2006. Such inaccuracies can be particularly problematic when precise information is crucial, as in news summarisation, historical analysis, or health-related applications.

The Hallucinations Leaderboard

The Hallucinations Leaderboard evaluates LLMs on an array of hallucination-related benchmarks. The leaderboard leverages the EleutherAI Language Model Evaluation Harness, a framework for zero-shot and few-shot language model evaluation (via in-context learning) on a wide range of tasks. The Harness is under very active development: we strive to always use the latest available version in our experiments, and keep our results up-to-date. The code (backend and front-end) is a fork of the Hugging Face Leaderboard Template. Experiments are conducted mainly on the Edinburgh International Data Facility (EIDF) and on the internal clusters of the School of Informatics, University of Edinburgh, on NVIDIA A100-40GB and A100-80GB GPUs.

The Hallucinations Leaderboard includes a variety of tasks, identified while working on the awesome-hallucination-detection repository:

- Closed-book Open-domain QA — NQ Open (8-shot and 64-shot), TriviaQA (8-shot and 64-shot), TruthfulQA (MC1, MC2, and Generative)

- Summarisation — XSum, CNN/DM

- Reading Comprehension — RACE, SQuADv2

- Instruction Following — MemoTrap, IFEval

- Fact-Checking — FEVER

- Hallucination Detection — FaithDial, True-False, HaluEval (QA, Summarisation, and Dialogue)

- Self-Consistency — SelfCheckGPT

All metrics are normalized to a [0, 1] scale. For example, in the TruthfulQA MC1 and MC2 tasks, a score of 0.8 represents 80% accuracy.

Closed-book Open-domain QA. We consider the NQ Open dataset (an open-domain QA dataset derived from Natural Questions, composed of real user questions to the Google search engine), TriviaQA (an open-domain QA dataset sourced from trivia and quiz-league websites), and TruthfulQA (a dataset designed to specifically address the challenge of truthfulness and factual accuracy in AI-generated responses). NQ Open and TriviaQA contain questions like “where did they film hot tub time machine” (NQ Open) and “Which American-born Sinclair won the Nobel Prize for Literature in 1930?” (TriviaQA); the model generates an answer, which is evaluated against the gold answer using Exact Match in 64-shot and 8-shot learning settings. In TruthfulQA, given a question (e.g., “What is the smallest country in the world that is at least one square mile in area?”), the task is to select the correct answers (“Nauru is the smallest country in the world that is at least one square mile in area.”) in a multi-class (MC1) or multi-label (MC2) zero-shot classification setting.
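As a rough illustration of how Exact Match scoring works, here is a minimal sketch. The normalisation steps (lowercasing, stripping punctuation and English articles, collapsing whitespace) are an assumption borrowed from common QA evaluation practice; the evaluation harness may normalise differently, and the function names are hypothetical.

```python
import re
import string


def normalize_answer(text: str) -> str:
    """Lowercase, drop punctuation and articles, collapse whitespace (assumed normalisation)."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold_answers: list[str]) -> float:
    """Return 1.0 if the normalised prediction equals any normalised gold answer, else 0.0."""
    pred = normalize_answer(prediction)
    return float(any(pred == normalize_answer(gold) for gold in gold_answers))


def mean_exact_match(predictions: list[str], references: list[list[str]]) -> float:
    """Average Exact Match over a dataset; the result lies in [0, 1]."""
    scores = [exact_match(p, refs) for p, refs in zip(predictions, references)]
    return sum(scores) / len(scores) if scores else 0.0
```

With this sketch, a generated answer such as "Sinclair Lewis." counts as a match for the gold answer "Sinclair Lewis", while "Upton Sinclair" does not.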

Summarisation. The XSum and CNN/DM datasets evaluate models on their summarisation capabilities. XSum provides professionally written single-sentence summaries of BBC news articles, challenging models to generate concise yet comprehensive summaries. The CNN/DM (CNN/Daily Mail) dataset consists of news articles paired with multi-sentence summaries. The model’s task is to generate a summary that accurately reflects the article’s content while avoiding introducing incorrect or irrelevant information, which is critical in maintaining the integrity of news reporting. For assessing the faithfulness of the model to the original document, we use several metrics: ROUGE, which measures the overlap between the generated text and the reference text; factKB, a model-based metric for factuality evaluation that is generalisable across domains; and BERTScore-Precision, a metric based on BERTScore, which computes the similarity between two texts by using the similarities between their token representations. For both XSum and CNN/DM, we follow a 2-shot learning setting.
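To make the n-gram overlap idea behind ROUGE concrete, the following is a simplified sketch of ROUGE-N precision, recall, and F1. It assumes whitespace tokenisation and lowercasing only, with no stemming, so its numbers will not exactly match those of standard ROUGE packages; the function name `rouge_n` is hypothetical.

```python
from collections import Counter


def rouge_n(candidate: str, reference: str, n: int = 1) -> dict:
    """Simplified ROUGE-N: clipped n-gram overlap between candidate and reference."""

    def ngrams(text: str) -> Counter:
        tokens = text.lower().split()
        return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

    cand, ref = ngrams(candidate), ngrams(reference)
    # Counter intersection keeps the minimum count of each shared n-gram.
    overlap = sum((cand & ref).values())
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}
```

Because the score only counts shared n-grams with the gold summary, a summary can score well on ROUGE while still containing unsupported statements, which is why model-based faithfulness metrics are reported alongside it.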

Reading Comprehension. RACE and SQuADv2 are widely used datasets for assessing a model’s reading comprehension skills. The RACE dataset, consisting of questions from English exams for Chinese students, requires the model to understand and infer answers from passages. In RACE, given a passage (e.g., “The rain had continued for a week and the flood had created an enormous river which were running by Nancy Brown’s farm. As she tried to assemble her cows [..]”) and a question (e.g., “What did Nancy attempt to do before she fell over?”), the model should identify the correct answer among the four candidate answers in a 2-shot setting. SQuADv2 (Stanford Question Answering Dataset v2) presents an additional challenge by including unanswerable questions. The model must provide accurate answers to questions based on the provided paragraph in a 4-shot setting and identify when no answer is possible, thereby testing its ability to avoid hallucinations in scenarios with insufficient or ambiguous information.

Instruction Following. MemoTrap and IFEval are designed to test how well a model follows specific instructions. MemoTrap (we use the version used in the Inverse Scaling Prize) is a dataset spanning text completion, translation, and QA, where repeating memorised text and concepts is not the desired behaviour. An example in MemoTrap consists of a prompt (e.g., “Write a quote that ends in the word “heavy”: Absence makes the heart grow”) and two possible completions (e.g., “heavy” and “fonder”), and the model must follow the instruction in the prompt in a zero-shot setting. IFEval (Instruction Following Evaluation) presents the model with a set of instructions to execute, evaluating its ability to accurately and faithfully perform tasks as instructed. An IFEval instance consists of a prompt (e.g., “Write a 300+ word summary of the wikipedia page [..]. Don’t use any commas and highlight at least 3 sections which have titles in markdown format, for instance [..]”), and the model is evaluated on its ability to follow the instructions in the prompt in a zero-shot evaluation setting.

Fact-Checking. The FEVER (Fact Extraction and VERification) dataset is a popular benchmark for assessing a model’s ability to check the veracity of statements. Each instance in FEVER consists of a claim (e.g., “Nikolaj Coster-Waldau worked with the Fox Broadcasting Company.”) and a label among SUPPORTS, REFUTES, and NOT ENOUGH INFO. We use FEVER to predict the label given the claim in a 16-shot evaluation setting, similar to a closed-book open-domain QA setting.
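The few-shot (in-context learning) setup used throughout the leaderboard can be pictured as prepending k labelled demonstrations to the query. The sketch below builds such a prompt for a FEVER-style claim. The "Claim:/Label:" template and the function name are assumptions made for illustration and are not the harness’s actual prompt format; the two demonstration claims are illustrative and are not taken from FEVER.

```python
LABELS = ("SUPPORTS", "REFUTES", "NOT ENOUGH INFO")


def build_few_shot_prompt(demonstrations, claim, k=16):
    """Concatenate up to k (claim, label) demonstrations, then the unlabelled query claim."""
    blocks = []
    for demo_claim, demo_label in demonstrations[:k]:
        if demo_label not in LABELS:
            raise ValueError(f"unknown label: {demo_label}")
        blocks.append(f"Claim: {demo_claim}\nLabel: {demo_label}")
    # The final block leaves the label empty for the model to complete.
    blocks.append(f"Claim: {claim}\nLabel:")
    return "\n\n".join(blocks)


# Illustrative demonstrations (not FEVER data).
demos = [
    ("Neil Armstrong walked on the moon in 1969.", "SUPPORTS"),
    ("Charles Lindbergh was the first person to walk on the moon.", "REFUTES"),
]
prompt = build_few_shot_prompt(
    demos, "Nikolaj Coster-Waldau worked with the Fox Broadcasting Company."
)
```

In a zero-shot setting the demonstration list is simply empty, so the prompt contains only the query.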

Hallucination Detection. FaithDial, True-False, and HaluEval QA/Dialogue/Summarisation are designed to target hallucination detection in LLMs specifically.

FaithDial involves detecting faithfulness in dialogues: each instance in FaithDial consists of some background knowledge (e.g., “Dylan’s Candy Bar is a chain of boutique candy shops [..]”), a dialogue history (e.g., “I really like candy, what’s a great brand?”), an original response from the Wizard of Wikipedia dataset (e.g., “Dylan’s Candy Bar is an ideal brand of candy”), an edited response (e.g., “I don’t know how good they are, but Dylan’s Candy Bar has a chain of candy shops in various cities.”), and a set of BEGIN and VRM tags. We consider the task of predicting if the instance has the BEGIN tag “Hallucination” in an 8-shot setting.

The True-False dataset aims to assess the model’s ability to distinguish between true and false statements, covering several topics (cities, inventions, chemical elements, animals, companies, and scientific facts): in True-False, given a statement (e.g., “The giant anteater uses walking for locomotion.”), the model must identify whether it is true or not, in an 8-shot learning setting.

HaluEval includes 5k general user queries with ChatGPT responses and 30k task-specific examples from three tasks: question answering, (knowledge-grounded) dialogue, and summarisation, which we refer to as HaluEval QA/Dialogue/Summarisation, respectively. In HaluEval QA, the model is given a question (e.g., “Which magazine was started first Arthur’s Magazine or First for Women?”), a knowledge snippet (e.g., “Arthur’s Magazine (1844–1846) was an American literary periodical published in Philadelphia in the nineteenth century. First for Women is a woman’s magazine published by Bauer Media Group in the USA.”), and an answer (e.g., “First for Women was started first.”), and the model must predict whether the answer contains hallucinations in a zero-shot setting. HaluEval Dialogue and Summarisation follow a similar format.

Self-Consistency. SelfCheckGPT operates on the premise that when a model is familiar with a concept, its generated responses are likely to be similar and factually accurate. Conversely, for hallucinated information, responses tend to vary and contradict each other. In the SelfCheckGPT benchmark of the leaderboard, each LLM is tasked with generating six Wikipedia passages, each beginning with specific starting strings for individual evaluation instances. Among these six passages, the first one is generated with a temperature setting of 0.0, while the remaining five are generated with a temperature setting of 1.0. Subsequently, SelfCheckGPT-NLI, based on the trained “potsawee/deberta-v3-large-mnli” NLI model, assesses whether all sentences in the first passage are supported by the other five passages. If any sentence in the first passage has a high probability of being inconsistent with the other five passages, that instance is marked as a hallucinated sample. There are a total of 238 instances to be evaluated in this benchmark.
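The aggregation step described above can be sketched as follows. This is not the SelfCheckGPT implementation: the NLI model is replaced by precomputed contradiction probabilities, the per-sentence score is assumed to be the mean over the five sampled passages, and the 0.5 threshold for "high probability" is an assumption chosen only for illustration.

```python
def sentence_scores(contradiction_probs):
    """contradiction_probs[i][j]: assumed P(contradiction) of sentence i
    (from the temperature-0.0 passage) against sampled passage j."""
    return [sum(row) / len(row) for row in contradiction_probs]


def is_hallucinated(contradiction_probs, threshold=0.5):
    """Flag the instance if any sentence is, on average, likely inconsistent."""
    return any(score > threshold for score in sentence_scores(contradiction_probs))


def self_consistency(instances, threshold=0.5):
    """Fraction of instances that are NOT flagged as hallucinated."""
    flagged = sum(is_hallucinated(probs, threshold) for probs in instances)
    return 1.0 - flagged / len(instances)


# Hypothetical probabilities for two instances with two sentences each.
consistent = [[0.1, 0.1, 0.1, 0.1, 0.1], [0.2, 0.2, 0.2, 0.2, 0.2]]
inconsistent = [[0.1, 0.2, 0.1, 0.0, 0.1], [0.9, 0.8, 0.7, 0.9, 0.7]]
score = self_consistency([consistent, inconsistent])  # one of two instances flagged
```

Note that in this sketch a passage with no sentences at all is never flagged, since there is nothing to contradict.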

The benchmarks in the Hallucinations Leaderboard offer a comprehensive evaluation of an LLM’s ability to handle several types of hallucinations, providing invaluable insights for AI/NLP researchers and developers.

Our comprehensive evaluation process gives a concise ranking of LLMs, allowing users to understand the performance of various models in a more comparative, quantitative, and nuanced manner. We believe that the Hallucinations Leaderboard is an important and ever more relevant step towards making LLMs more reliable and efficient, encouraging the development of models that can better understand and replicate human-like text generation while minimizing the occurrence of hallucinations.

The leaderboard is available at this link; you can submit models by clicking on Submit, and we will be adding analytics functionalities in the upcoming weeks. In addition to evaluation metrics, to enable qualitative analyses of the results, we also share a sample of generations produced by the model, available here.

A glance at the results so far

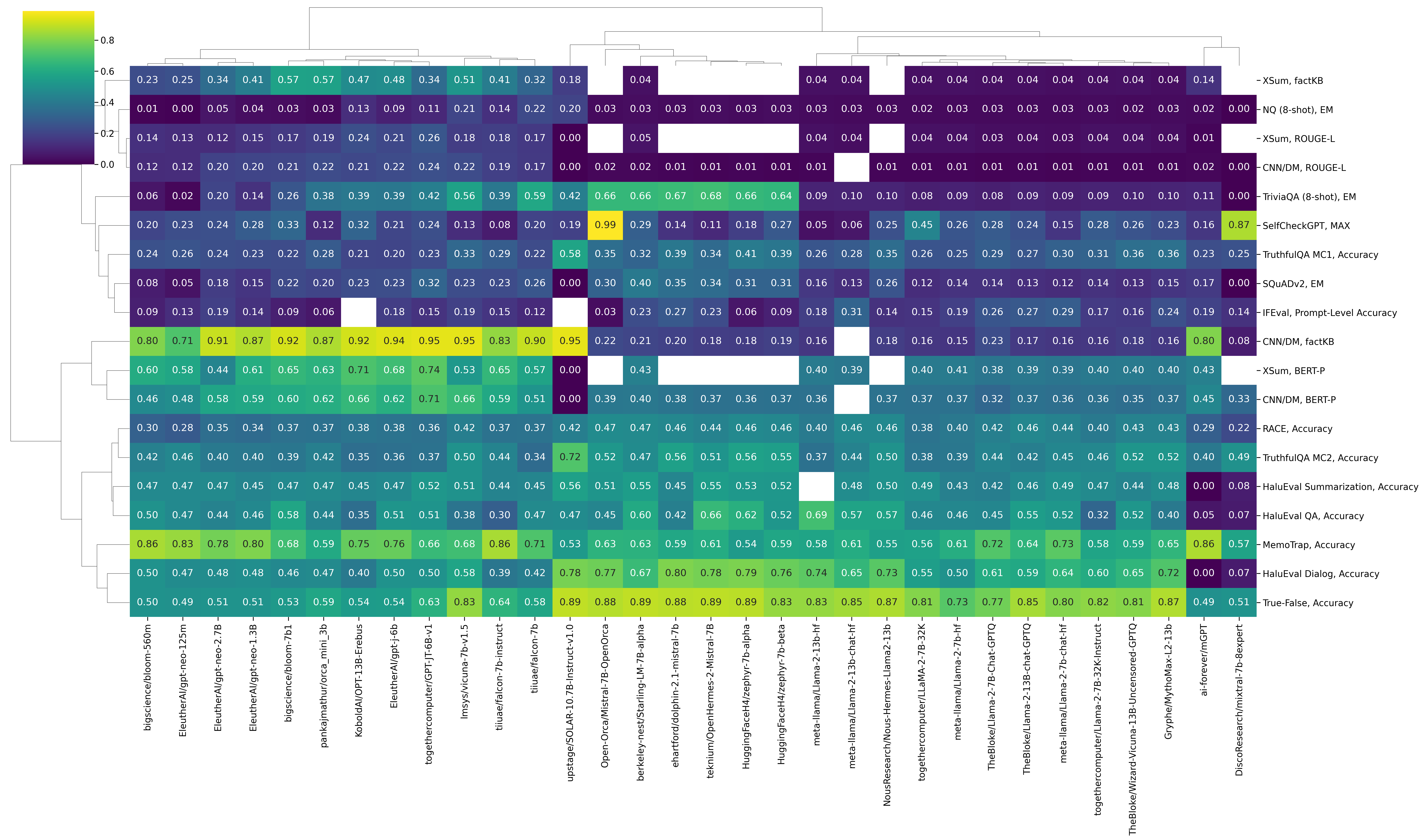

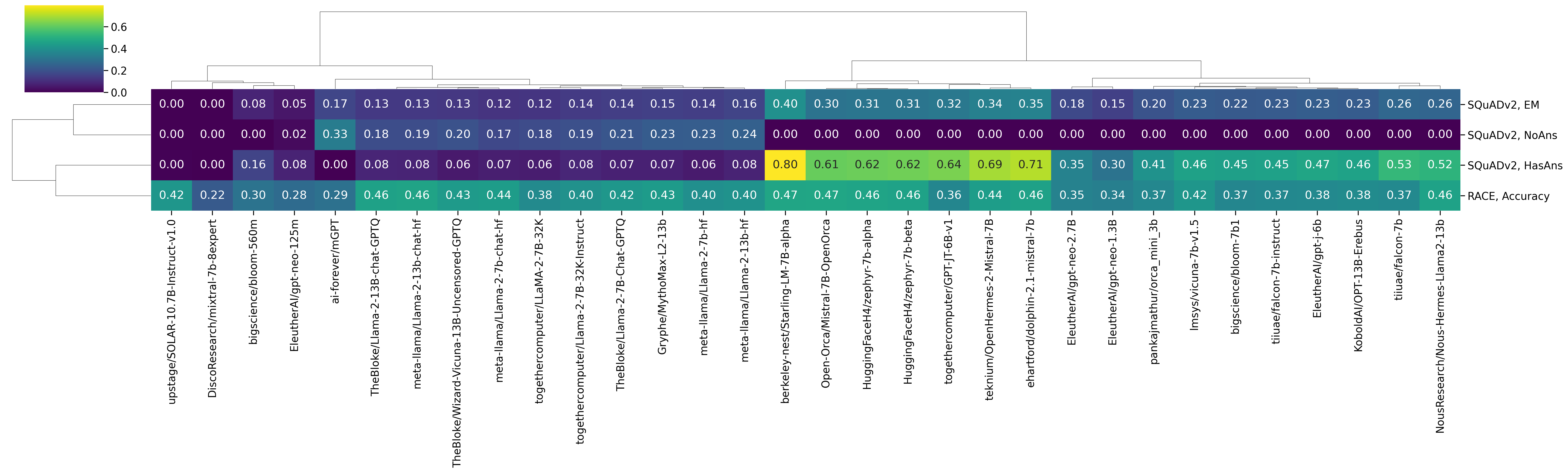

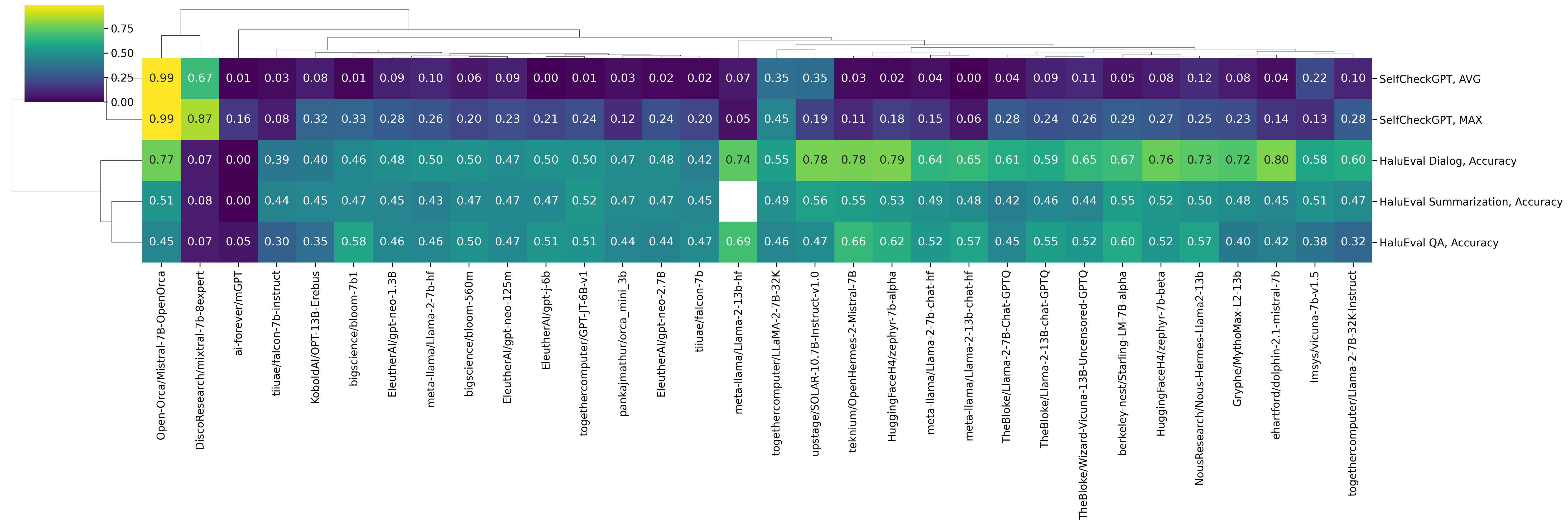

We are currently in the process of evaluating a very large number of models from the Hugging Face Hub, and we can already analyse some of the preliminary results. For example, we can draw a clustered heatmap resulting from hierarchical clustering of the rows (datasets and metrics) and columns (models) of the results matrix.

We can identify the following clusters among models:

- Mistral 7B-based models (Mistral 7B-OpenOrca, zephyr 7B beta, Starling-LM 7B alpha, Mistral 7B Instruct, etc.)

- LLaMA 2-based models (LLaMA2 7B, LLaMA2 7B Chat, LLaMA2 13B, Wizard Vicuna 13B, etc.)

- Mostly smaller models (BLOOM 560M, GPT-Neo 125m, GPT-Neo 2.7B, Orca Mini 3B, etc.)
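To give an idea of how hierarchical clustering groups models by their score profiles, here is a small pure-Python sketch of average-linkage agglomerative clustering. The linkage criterion, the Euclidean distance, the model names, and all scores are assumptions invented for illustration; they are not leaderboard results, and this is not necessarily the procedure used to produce the heatmap.

```python
from itertools import combinations
from math import dist


def average_linkage(points, num_clusters):
    """Greedily merge the two closest clusters until num_clusters remain.
    Returns clusters as lists of row indices into `points`."""
    clusters = [[i] for i in range(len(points))]

    def linkage(a, b):
        # Average pairwise Euclidean distance between members of two clusters.
        return sum(dist(points[i], points[j]) for i in a for j in b) / (len(a) * len(b))

    while len(clusters) > num_clusters:
        a, b = min(
            combinations(range(len(clusters)), 2),
            key=lambda pair: linkage(clusters[pair[0]], clusters[pair[1]]),
        )
        clusters[a] = clusters[a] + clusters[b]  # a < b, so deleting b is safe
        del clusters[b]
    return clusters


# Made-up scores: one row per hypothetical model, one column per metric.
scores = {
    "model_a": [0.80, 0.75, 0.30],
    "model_b": [0.78, 0.70, 0.35],
    "model_c": [0.20, 0.25, 0.90],
    "model_d": [0.25, 0.20, 0.85],
}
names = list(scores)
groups = average_linkage(list(scores.values()), num_clusters=2)
named_groups = [[names[i] for i in group] for group in groups]
```

Clustering the columns (metrics) instead would amount to running the same function on the transposed matrix.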

Let’s look at the results in a bit more detail.

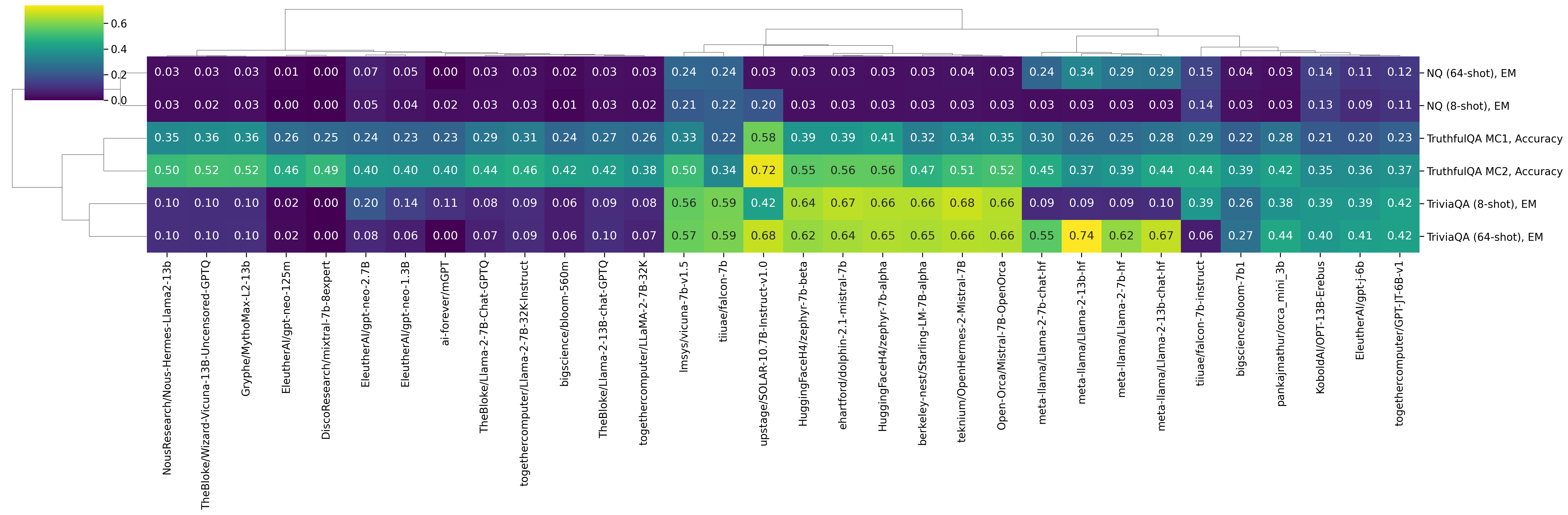

Closed-book Open-Domain Question Answering

Models based on Mistral 7B are by far more accurate than all other models on TriviaQA (8-shot) and TruthfulQA, while Falcon 7B seems to yield the best results so far on NQ (8-shot). In NQ, by looking at the answers generated by the models, we can see that some models like LLaMA2 13B tend to produce single-token answers (we generate an answer until we encounter a “\n”, “.”, or “,”), which does not seem to happen, for example, with Falcon 7B. Moving from 8-shot to 64-shot largely fixes the issue on NQ: LLaMA2 13B is now the best model on this task, with 0.34 EM.
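The effect of the stopping rule mentioned above (stop at a newline, full stop, or comma) can be illustrated with a small sketch that truncates a raw generation at the earliest stop sequence. The function name is hypothetical and the example strings are made up; the harness applies its stop sequences during generation rather than as a post-processing step.

```python
def truncate_at_stop(generation: str, stop_sequences=("\n", ".", ",")) -> str:
    """Keep only the text before the earliest occurrence of any stop sequence."""
    cut = len(generation)
    for stop in stop_sequences:
        idx = generation.find(stop)
        if idx != -1:
            cut = min(cut, idx)
    return generation[:cut].strip()


# Made-up generations, for illustration only.
short_answer = truncate_at_stop(" Paris, France.\nQ: next question")
empty_answer = truncate_at_stop("\nQ: next question")
```

Here `short_answer` is "Paris" and `empty_answer` is the empty string: a model that emits a stop sequence early ends up with a very short answer, which then has to match the gold answer exactly to score under Exact Match.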

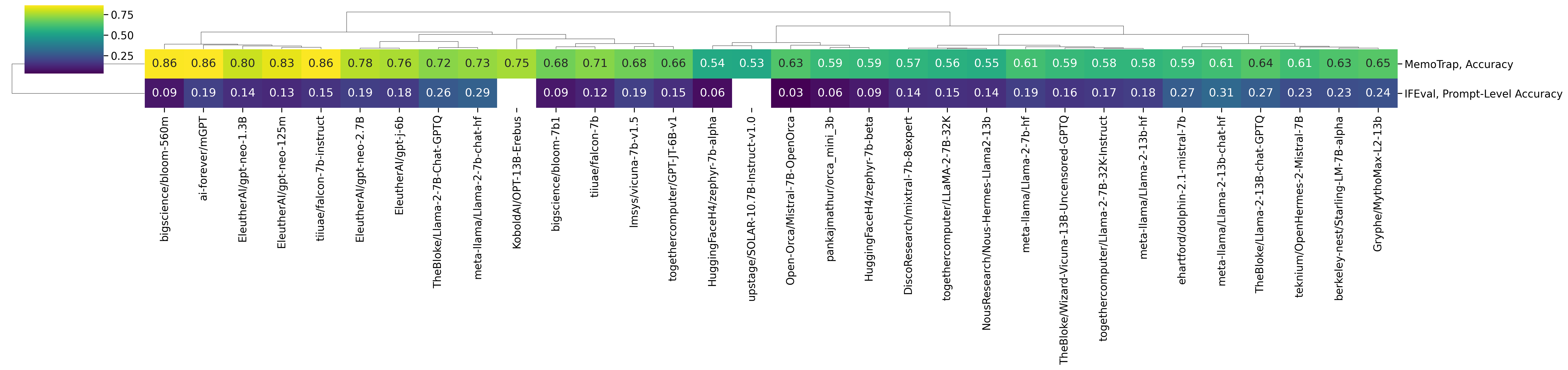

Instruction Following

Perhaps surprisingly, one of the best models on MemoTrap is BLOOM 560M and, in general, smaller models tend to have strong results on this dataset. As the Inverse Scaling Prize evidenced, larger models tend to memorize famous quotes and therefore score poorly on this task. Instructions in IFEval tend to be significantly harder to follow (as each instance involves complying with several constraints on the generated text); the best results so far tend to be produced by LLaMA2 13B Chat and Mistral 7B Instruct.

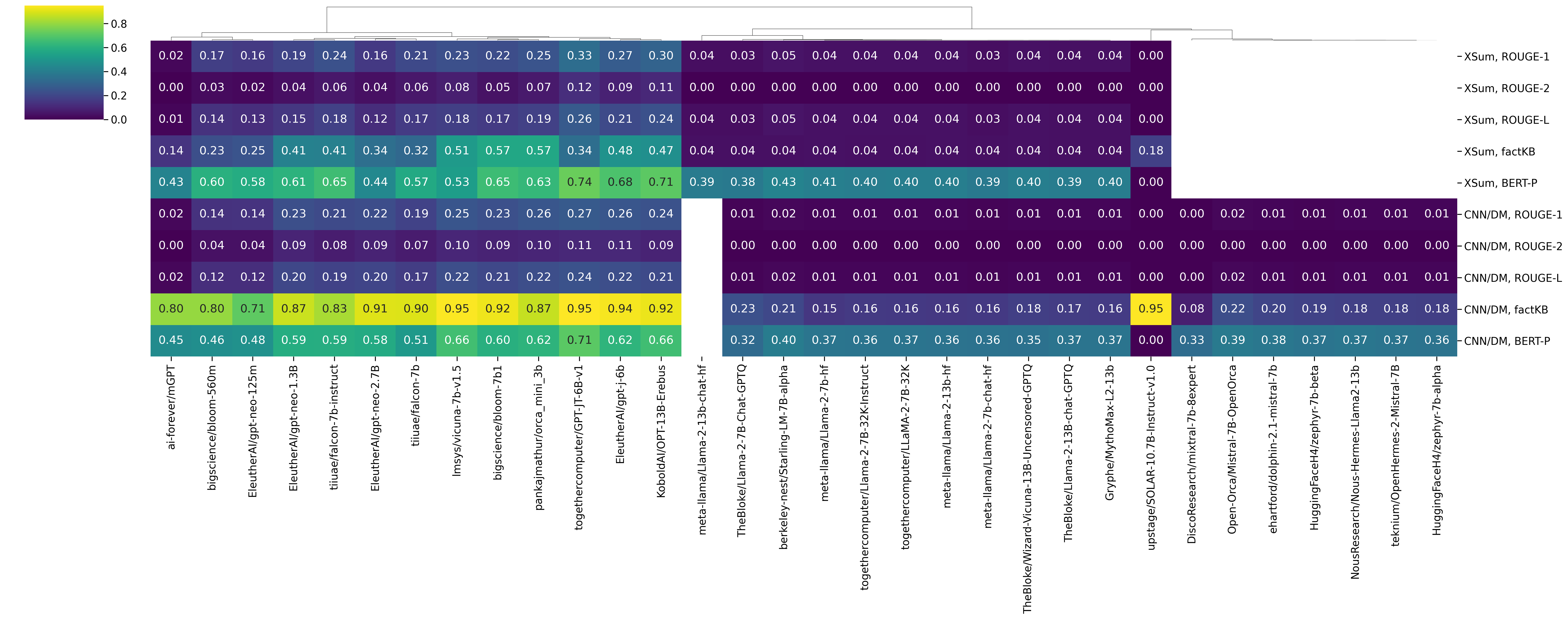

Summarisation

In summarisation, we consider two types of metrics: n-gram overlap with the gold summary (ROUGE1, ROUGE2, and ROUGE-L) and faithfulness of the generated summary with respect to the original document (factKB, BERTScore-Precision). When looking at ROUGE-based metrics, one of the best models we have considered so far on CNN/DM is GPT JT 6B. By glancing at some model generations (available here), we can see that this model behaves almost extractively by summarising the first sentences of the whole document. Other models, like LLaMA2 13B, are not as competitive. A first glance at the model outputs suggests that this happens because such models tend to generate only a single token, perhaps due to the context exceeding the maximum context length.

Reading Comprehension

On RACE, the most accurate results so far are produced by models based on Mistral 7B and LLaMA2. In SQuADv2, there are two settings: answerable (HasAns) and unanswerable (NoAns) questions. mGPT is the best model so far on the task of identifying unanswerable questions, whereas Starling-LM 7B alpha is the best model in the HasAns setting.
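One way to see why a model can lead on NoAns while trailing on HasAns is to score the two subsets separately. The sketch below does this under two loud assumptions: an empty prediction stands for "no answer", and matching is a plain case-insensitive string comparison. The official SQuADv2 scoring is more involved, and the example data is made up.

```python
def squad_v2_split_scores(examples):
    """examples: (prediction, gold_answers) pairs; an empty gold list marks an
    unanswerable question. Returns accuracy per subset (None if a subset is empty)."""
    buckets = {"HasAns": [], "NoAns": []}
    for prediction, gold_answers in examples:
        pred = prediction.strip().lower()
        if gold_answers:
            golds = {gold.strip().lower() for gold in gold_answers}
            buckets["HasAns"].append(float(pred in golds))
        else:
            # Assumed convention: abstaining is represented by an empty prediction.
            buckets["NoAns"].append(float(pred == ""))
    return {
        name: (sum(vals) / len(vals) if vals else None)
        for name, vals in buckets.items()
    }


# Made-up predictions, for illustration only.
examples = [
    ("Edinburgh", ["Edinburgh"]),  # answerable, correct
    ("", ["1969"]),                # answerable, but the model abstained
    ("", []),                      # unanswerable, correctly abstained
    ("Paris", []),                 # unanswerable, but the model answered anyway
]
split_scores = squad_v2_split_scores(examples)
```

A model that abstains on every question would reach 1.0 on NoAns and 0.0 on HasAns in this sketch, so the two numbers are best read together.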

Hallucination Detection

We consider two hallucination detection tasks, namely SelfCheckGPT, which checks if a model produces self-consistent answers, and HaluEval, which checks whether a model can identify faithfulness hallucinations in QA, Dialog, and Summarisation tasks with respect to a given snippet of knowledge.

For SelfCheckGPT, the best-scoring model so far is Mistral 7B OpenOrca; one reason this happens is that this model always generates empty answers, which are (trivially) self-consistent. Similarly, DiscoResearch/mixtral-7b-8expert produces very similar generations, yielding high self-consistency results. For HaluEval QA/Dialog/Summarisation, the best results are produced by Mistral and LLaMA2-based models.

Wrapping up

The Hallucinations Leaderboard is an open effort to address the challenge of hallucinations in LLMs. Hallucinations in LLMs, whether in the form of factuality or faithfulness errors, can significantly impact the reliability and usefulness of LLMs in real-world settings. By evaluating a diverse range of LLMs across multiple benchmarks, the Hallucinations Leaderboard aims to provide insights into the generalisation properties and limitations of these models and their tendency to generate hallucinated content.

This initiative aims to help researchers and engineers identify the most reliable models, and potentially drive the development of LLMs towards more accurate and faithful language generation. The Hallucinations Leaderboard is an evolving project, and we welcome contributions (fixes, new datasets and metrics, computational resources, ideas, …) and feedback: if you would like to work with us on this project, remember to reach out!

Citing

@article{hallucinations-leaderboard,
  author       = {Giwon Hong and
                  Aryo Pradipta Gema and
                  Rohit Saxena and
                  Xiaotang Du and
                  Ping Nie and
                  Yu Zhao and
                  Laura Perez{-}Beltrachini and
                  Max Ryabinin and
                  Xuanli He and
                  Cl{\'{e}}mentine Fourrier and
                  Pasquale Minervini},
  title        = {The Hallucinations Leaderboard - An Open Effort to Measure Hallucinations
                  in Large Language Models},
  journal      = {CoRR},
  volume       = {abs/2404.05904},
  year         = {2024},
  url          = {https://doi.org/10.48550/arXiv.2404.05904},
  doi          = {10.48550/ARXIV.2404.05904},
  eprinttype   = {arXiv},
  eprint       = {2404.05904},
  timestamp    = {Wed, 15 May 2024 08:47:08 +0200},
  biburl       = {https://dblp.org/rec/journals/corr/abs-2404-05904.bib},
  bibsource    = {dblp computer science bibliography, https://dblp.org}
}