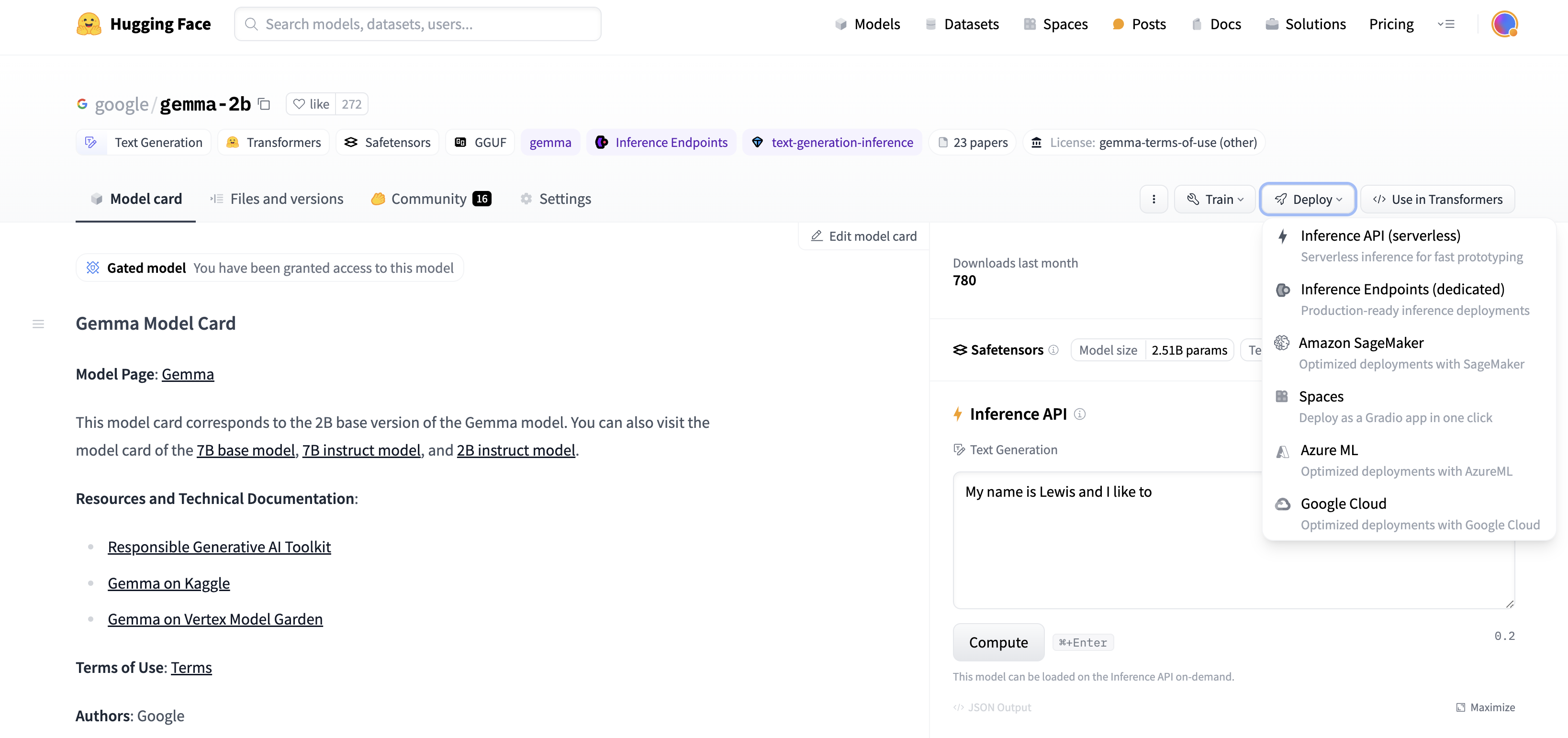

We recently announced that Gemma, the open weights language model from Google DeepMind, is available to the broader open-source community via Hugging Face. It comes in 2 billion and 7 billion parameter sizes, in pretrained and instruction-tuned flavors. It’s available on Hugging Face, supported in TGI, and easily accessible for deployment and fine-tuning in the Vertex Model Garden and Google Kubernetes Engine.

The Gemma family of models also happens to be well suited to prototyping and experimentation using the free GPU resource available via Colab. In this post we will briefly review how you can do Parameter Efficient FineTuning (PEFT) for Gemma models, using the Hugging Face Transformers and PEFT libraries on GPUs and Cloud TPUs, for anyone who wants to fine-tune Gemma models on their own dataset.

Why PEFT?

The default (full weight) training for language models, even for modest sizes, tends to be memory and compute-intensive. On one hand, it can be prohibitive for users relying on openly available compute platforms for learning and experimentation, such as Colab or Kaggle. On the other hand, even for enterprise users, the cost of adapting these models to different domains is an important metric to optimize. PEFT, or parameter-efficient fine-tuning, is a popular technique to accomplish this at low cost.

PyTorch on GPU and TPU

Gemma models in Hugging Face transformers are optimized for both PyTorch and PyTorch/XLA. This enables both TPU and GPU users to access and experiment with Gemma models as needed. Together with the Gemma release, we have also improved the FSDP experience for PyTorch/XLA in Hugging Face. This FSDP via SPMD integration also allows other Hugging Face models to take advantage of TPU acceleration via PyTorch/XLA. In this post, we will focus on PEFT, and more specifically on Low-Rank Adaptation (LoRA), for Gemma models. For a more comprehensive set of LoRA techniques, we encourage readers to review Scaling Down to Scale Up, from Lialin et al., and this excellent post by Belkada et al.

Low-Rank Adaptation for Large Language Models

Low-Rank Adaptation (LoRA) is one of the parameter-efficient fine-tuning techniques for large language models (LLMs). It fine-tunes only a fraction of the total number of model parameters, by freezing the original model and training only adapter layers that are decomposed into low-rank matrices. The PEFT library provides an easy abstraction that allows users to select the model layers where adapter weights should be applied.

from peft import LoraConfig

lora_config = LoraConfig(
    r=8,  # rank of the low-rank adapter matrices
    target_modules=["q_proj", "o_proj", "k_proj", "v_proj", "gate_proj", "up_proj", "down_proj"],
    task_type="CAUSAL_LM",
)

In this snippet, we refer to all nn.Linear layers as the target layers to be adapted.
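To make the low-rank idea concrete, here is a minimal pure-Python sketch of a single adapted linear layer. It is not how the PEFT library implements LoRA internally. It assumes the usual formulation, y = W x + (alpha / r) B A x, with W frozen, A initialized randomly and B initialized to zero. The helper names, the toy dimensions and the value of alpha are illustrative assumptions.

```python
import random

def lora_param_count(d_in, d_out, r):
    """Trainable parameters LoRA adds to one d_out x d_in linear layer."""
    return r * (d_in + d_out)

def matvec(matrix, vector):
    """Plain matrix-vector product on nested lists."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]

def lora_forward(W, A, B, x, alpha, r):
    """y = W x + (alpha / r) * B (A x); W stays frozen, only A and B train."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]

random.seed(0)
d_in, d_out, r = 6, 4, 2  # toy sizes, chosen only for illustration
W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]
A = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]  # r x d_in
B = [[0.0] * r for _ in range(d_out)]                                  # d_out x r, zeros
x = [random.gauss(0, 1) for _ in range(d_in)]

y_base = matvec(W, x)
y_lora = lora_forward(W, A, B, x, alpha=8, r=r)
# With B at zero, the adapter starts as a no-op: y_lora equals y_base.
```

For a hypothetical 2048 x 2048 projection, `lora_param_count(2048, 2048, 8)` gives 32,768 trainable parameters against 4,194,304 frozen ones, which is under 1% of the layer.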

In the following example, we will leverage QLoRA, from Dettmers et al., to quantize the base model in 4-bit precision for a more memory-efficient fine-tuning protocol. The model can be loaded with QLoRA by first installing the bitsandbytes library in your environment, and then passing a BitsAndBytesConfig object to from_pretrained when loading the model.
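As a rough intuition for what 4-bit weight quantization does, the sketch below stores a block of weights as small integer codes plus one scale. Note that this is a simplified uniform absmax scheme, not the NF4 data type used by QLoRA, which places its 16 levels non-uniformly. The function names and sample weights are made up for illustration.

```python
def quantize_block(values, bits=4):
    """Map floats to signed integer codes using one absmax scale per block."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4 bits
    absmax = max(abs(v) for v in values)
    scale = absmax / qmax if absmax > 0 else 1.0
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale

def dequantize_block(codes, scale):
    """Recover approximate floats from integer codes and the block scale."""
    return [c * scale for c in codes]

weights = [0.12, -0.50, 0.33, 0.07, -0.21, 0.48, -0.02, 0.29]  # made-up values
codes, scale = quantize_block(weights)
restored = dequantize_block(codes, scale)

# The round-trip error of each weight is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each code fits in 4 bits, so the weights take roughly a quarter of the space of a 16-bit representation, at the price of the rounding error measured by `max_error`.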

Before we start

In order to access Gemma model artifacts, users are required to accept the consent form.

Now let’s start with the implementation.

Learning to quote

Assuming that you have submitted the consent form, you can access the model artifacts from the Hugging Face Hub.

We start by downloading the model and the tokenizer. We also include a BitsAndBytesConfig for weight only quantization.

import torch
import os
from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig

model_id = "google/gemma-2b"

# Quantize the weights to 4-bit NF4 and run computations in bfloat16.
bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=torch.bfloat16,
)

# HF_TOKEN must hold a Hugging Face access token for an account that accepted the consent form.
tokenizer = AutoTokenizer.from_pretrained(model_id, token=os.environ['HF_TOKEN'])
model = AutoModelForCausalLM.from_pretrained(model_id, quantization_config=bnb_config, device_map={"":0}, token=os.environ['HF_TOKEN'])
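The loading code reads the access token with `os.environ['HF_TOKEN']`, which raises a bare `KeyError` when the variable is missing. One possible guard is sketched below. `get_hf_token` is a hypothetical helper name and not part of any library.

```python
import os

def get_hf_token(env_var="HF_TOKEN"):
    """Return the access token from the environment, or raise a clear error."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(
            f"{env_var} is not set. Accept the Gemma consent form, create an "
            "access token, and export it before loading the model."
        )
    return token
```

The result can then be passed as `token=get_hf_token()` to both `from_pretrained` calls.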

Now we test the model before starting the finetuning, using a famous quote:

text = "Quote: Imagination is more"
device = "cuda:0"
inputs = tokenizer(text, return_tensors="pt").to(device)

outputs = model.generate(**inputs, max_new_tokens=20)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))

The model does a reasonable completion with some extra tokens:

Quote: Imagination is more important than knowledge. Knowledge is limited. Imagination encircles the world.

-Albert Einstein

I

But this is not exactly the format we would love the answer to be in. Let’s see if we can use fine-tuning to teach the model to generate the answer in the following format.

Quote: Imagination is more important than knowledge. Knowledge is limited. Imagination encircles the world.

Author: Albert Einstein

To start with, let’s select an English quotes dataset, Abirate/english_quotes.

from datasets import load_dataset

data = load_dataset("Abirate/english_quotes")
# Tokenize the "quote" column in batches.
data = data.map(lambda samples: tokenizer(samples["quote"]), batched=True)

Now let’s finetune this model using the LoRA config stated above:

import transformers
from trl import SFTTrainer

def formatting_func(example):
    # Build the "Quote / Author" training text from the first entry of the batch.
    text = f"Quote: {example['quote'][0]}\nAuthor: {example['author'][0]}"
    return [text]

trainer = SFTTrainer(
    model=model,
    train_dataset=data["train"],
    args=transformers.TrainingArguments(
        per_device_train_batch_size=1,
        gradient_accumulation_steps=4,  # gradients are accumulated over 4 batches per optimizer step
        warmup_steps=2,
        max_steps=10,
        learning_rate=2e-4,
        fp16=True,
        logging_steps=1,
        output_dir="outputs",
        optim="paged_adamw_8bit",
    ),
    peft_config=lora_config,
    formatting_func=formatting_func,
)
trainer.train()
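The formatting function above indexes `[0]`, so it uses only the first entry of whatever batch it receives. Assuming the function is called with a batch shaped as a dict of lists (how `formatting_func` is invoked depends on the trl version, so this should be checked against the installed release), a variant that formats every entry could look like the sketch below. `format_batch` and the sample rows are made up for illustration.

```python
def format_batch(batch):
    """Build one 'Quote / Author' training string per example in a batched dict."""
    return [
        f"Quote: {quote}\nAuthor: {author}"
        for quote, author in zip(batch["quote"], batch["author"])
    ]

# Placeholder rows, not taken from the dataset.
batch = {
    "quote": ["First example quote.", "Second example quote."],
    "author": ["Author A", "Author B"],
}
texts = format_batch(batch)
```

Here `texts` holds two strings, each with the quote on the first line and the author on the second, matching the target format.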

Finally, we are ready to test the model once more with almost the same prompt we used earlier:

text = "Quote: Imagination is"
device = "cuda:0"
inputs = tokenizer(text, return_tensors="pt").to(device)

outputs = model.generate(**inputs, max_new_tokens=20)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))

This time we get the response in the format we like:

Quote: Imagination is more important than knowledge. Knowledge is limited. Imagination encircles the world.

Author: Albert Einstein
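To check mechanically whether a generation follows the target format, a small parser can help. The sketch below assumes the decoded text puts the quote and the author on separate lines, as in the output shown. `parse_quote` is a hypothetical helper.

```python
def parse_quote(generated):
    """Split generated text into (quote, author); author is None if absent."""
    body = generated.strip()
    if body.startswith("Quote:"):
        body = body[len("Quote:"):].strip()
    quote, sep, rest = body.partition("\nAuthor:")
    if not sep:
        # No "Author:" line, so the output does not follow the target format.
        return quote.strip(), None
    lines = rest.strip().splitlines()
    author = lines[0].strip() if lines else None
    return quote.strip(), author or None

tuned = "Quote: Imagination is more important than knowledge.\nAuthor: Albert Einstein"
untuned = "Quote: Imagination is more important than knowledge.\n-Albert Einstein\nI"
tuned_quote, tuned_author = parse_quote(tuned)
untuned_quote, untuned_author = parse_quote(untuned)
```

An author of `None` flags a completion that did not pick up the format, which is what the pre-fine-tuning style of output produces here.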

Speed up with FSDP via SPMD on TPU

As mentioned earlier, Hugging Face transformers now supports PyTorch/XLA’s latest FSDP implementation. This can greatly accelerate fine-tuning. To enable it, one just needs to add an FSDP config to the transformers.Trainer:

from transformers import DataCollatorForLanguageModeling, Trainer, TrainingArguments

# FSDP settings for PyTorch/XLA; xla_fsdp_v2 turns on FSDP via SPMD.
fsdp_config = {
    "fsdp_transformer_layer_cls_to_wrap": ["GemmaDecoderLayer"],
    "xla": True,
    "xla_fsdp_v2": True,
    "xla_fsdp_grad_ckpt": True,  # gradient checkpointing
}

trainer = Trainer(
    model=model,
    train_dataset=data["train"],
    args=TrainingArguments(
        per_device_train_batch_size=64,
        num_train_epochs=100,
        max_steps=-1,
        output_dir="./output",
        optim="adafactor",
        logging_steps=1,
        dataloader_drop_last=True,  # drop the last incomplete batch so every step sees a full batch
        fsdp="full_shard",
        fsdp_config=fsdp_config,
    ),
    data_collator=DataCollatorForLanguageModeling(tokenizer, mlm=False),
)
trainer.train()
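A back-of-the-envelope calculation shows why full sharding matters. With `full_shard`, parameters, gradients and optimizer state are split across devices, so each device holds roughly 1/N of the training state. The sketch below is only an estimate under stated assumptions: a nominal 2 billion parameters, 8 bytes of state per parameter (fp32 weights plus fp32 gradients, ignoring optimizer state and activations) and a hypothetical 8 devices.

```python
def per_device_gib(n_params, bytes_per_param, n_devices):
    """Approximate per-device memory for evenly sharded training state, in GiB."""
    total_bytes = n_params * bytes_per_param
    return total_bytes / n_devices / 2**30

n_params = 2_000_000_000  # nominal size, an assumption for illustration
bytes_per_param = 8       # fp32 weights + fp32 gradients (assumed)

single_device = per_device_gib(n_params, bytes_per_param, n_devices=1)
eight_devices = per_device_gib(n_params, bytes_per_param, n_devices=8)
```

Under these assumptions the state drops from about 14.9 GiB on one device to about 1.86 GiB per device on eight, before counting activations and optimizer state.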

Next Steps

We walked through this simple example, adapted from the source notebook, to illustrate the LoRA fine-tuning method applied to Gemma models. The full colab for GPU can be found here, and the full script for TPU can be found here. We’re excited about the endless possibilities for research and learning thanks to this recent addition to our open source ecosystem. We encourage users to also visit the Gemma documentation, as well as our launch blog, for more examples of how to train, fine-tune and deploy Gemma models.