Because of their impressive abilities, large language models (LLMs) require significant computing power, which is seldom available on personal computers. Consequently, we have no choice but to deploy them on powerful bespoke AI servers hosted on-premises or in the cloud.

Why local LLM inference is desirable

What if we could run state-of-the-art open-source LLMs on a typical personal computer? Wouldn’t we enjoy benefits like:

- Increased privacy: our data wouldn’t be sent to an external API for inference.

- Lower latency: we’d save network round trips.

- Offline work: we could work without network connectivity (a frequent flyer’s dream!).

- Lower cost: we would not spend any money on API calls or model hosting.

- Customizability: each user could find the models that best fit the tasks they work on every day, and they could even fine-tune them or use local Retrieval-Augmented Generation (RAG) to increase relevance.

This all sounds very exciting indeed. So why aren’t we doing it already? Returning to our opening statement, your typical reasonably priced laptop doesn’t pack enough compute punch to run LLMs with acceptable performance. There is no multi-thousand-core GPU and no lightning-fast High Bandwidth Memory in sight.

A lost cause, then? Of course not.

Why local LLM inference is now possible

There’s nothing that the human mind cannot make smaller, faster, more elegant, and more cost-effective. In recent months, the AI community has worked hard to shrink models without compromising their predictive quality. Three areas are particularly exciting:

- Hardware acceleration: modern CPU architectures embed hardware dedicated to accelerating the most common deep learning operators, such as matrix multiplication or convolution, enabling new Generative AI applications on AI PCs and significantly improving their speed and efficiency.

- Small Language Models (SLMs): thanks to innovative architectures and training techniques, these models are on par with or even better than larger models. Because they have fewer parameters, inference requires less computing and memory, making them excellent candidates for resource-constrained environments.

- Quantization: quantization is a process that lowers memory and computing requirements by reducing the bit width of model weights and activations, for instance from 16-bit floating point (fp16) to 8-bit integers (int8). Reducing the number of bits means that the resulting model requires less memory at inference time, which reduces latency for memory-bound steps like the decoding phase, when text is generated. In addition, operations like matrix multiplication can be performed faster thanks to integer arithmetic when quantizing both the weights and activations.
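To make the quantization idea concrete, here is a minimal pure-Python sketch of symmetric int8 quantization with a single scaling factor for the whole tensor. It is an illustration only, not the algorithm used by OpenVINO; the helper names `quantize_int8` and `dequantize` and the sample weights are assumptions made for this example.

```python
import struct


def quantize_int8(values):
    """Map floats to int8 using one shared scale (symmetric quantization)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the int8 codes."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.12, -0.5, 0.33, 0.9, -0.07, 0.0]
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)

# Storage cost: 2 bytes per fp16 value versus 1 byte per int8 value.
fp16_bytes = len(struct.pack(f"{len(weights)}e", *weights))
int8_bytes = len(struct.pack(f"{len(q_weights)}b", *q_weights))

# The rounding error per weight is at most half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q_weights, fp16_bytes, int8_bytes, max_error)
```

The int8 copy takes half the bytes of the fp16 copy, and each restored weight differs from the original by no more than half the scale.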

In this post, we’ll leverage all of the above. Starting from the Microsoft Phi-2 model, we will apply 4-bit quantization on the model weights, thanks to the Intel OpenVINO integration in our Optimum Intel library. Then, we will run inference on a mid-range laptop powered by an Intel Meteor Lake CPU.

NOTE: If you’re interested in applying quantization on both weights and activations, you can find more information in our documentation.

Let’s get to work.

Intel Meteor Lake

Launched in December 2023, Intel Meteor Lake, now renamed to Core Ultra, is a new architecture optimized for high-performance laptops.

The first Intel client processor to use a chiplet architecture, Meteor Lake includes:

- A power-efficient CPU with up to 16 cores,

- An integrated GPU (iGPU) with up to 8 Xe cores, each featuring 16 Xe Vector Engines (XVE). As the name implies, an XVE can perform vector operations on 256-bit vectors. It also implements the DP4a instruction, which computes a dot product between two vectors of four 8-bit integer values, stores the result in a 32-bit integer, and adds it to a third 32-bit integer.

- A Neural Processing Unit (NPU), a first for Intel architectures. The NPU is a dedicated AI engine built for efficient client AI. It’s optimized to handle demanding AI computations efficiently, freeing up the main CPU and graphics for other tasks. Compared to using the CPU or the iGPU for AI tasks, the NPU is designed to be more power-efficient.
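The DP4a operation mentioned above can be emulated in a few lines of Python. This is a sketch of the arithmetic only, assuming signed 8-bit inputs; the function names `dp4a` and `int8_dot` are made up for this example, and Python integers do not overflow, whereas the hardware accumulates into a 32-bit register.

```python
def dp4a(a, b, acc):
    """Dot product of two 4-element int8 vectors, added to an accumulator."""
    assert len(a) == 4 and len(b) == 4
    assert all(-128 <= x <= 127 for x in list(a) + list(b))
    return acc + sum(x * y for x, y in zip(a, b))


def int8_dot(xs, ys):
    """Longer int8 dot product built by chaining dp4a over chunks of four.

    Assumes both inputs have the same length, a multiple of 4.
    """
    acc = 0
    for i in range(0, len(xs), 4):
        acc = dp4a(xs[i:i + 4], ys[i:i + 4], acc)
    return acc


single = dp4a([1, 2, 3, 4], [5, 6, 7, 8], 10)  # 5 + 12 + 21 + 32 + 10
chained = int8_dot([1] * 8, [2] * 8)           # eight products of 1 * 2
print(single, chained)
```

Chaining the operation this way is what makes it useful for int8 matrix multiplication: each call handles four multiply-accumulates at once.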

To run the demo below, we selected a mid-range laptop powered by a Core Ultra 7 155H CPU. Now, let’s pick a lovely small language model to run on this laptop.

NOTE: To run this code on Linux, install your GPU driver by following these instructions.

The Microsoft Phi-2 model

Released in December 2023, Phi-2 is a 2.7-billion parameter model trained for text generation.

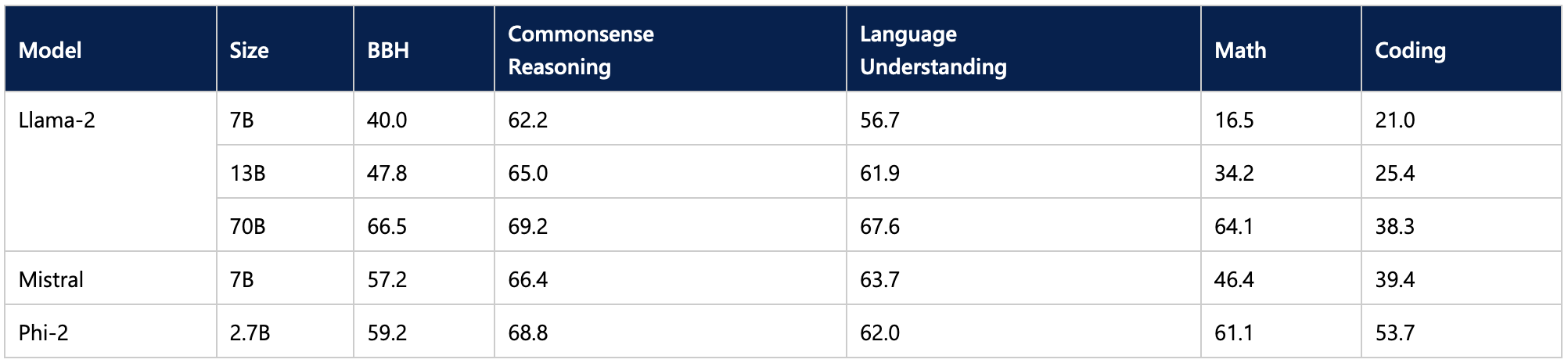

On reported benchmarks, unfazed by its smaller size, Phi-2 outperforms some of the best 7-billion and 13-billion LLMs and even stays within striking distance of the much larger Llama-2 70B model.

This makes it an exciting candidate for laptop inference. Curious readers may also want to experiment with the 1.1-billion TinyLlama model.

Now, let’s see how we can shrink the model to make it smaller and faster.

Quantization with Intel OpenVINO and Optimum Intel

Intel OpenVINO is an open-source toolkit for optimizing AI inference on many Intel hardware platforms (GitHub, documentation), notably through model quantization.

Partnering with Intel, we have integrated OpenVINO in Optimum Intel, our open-source library dedicated to accelerating Hugging Face models on Intel platforms (GitHub, documentation).

First, make sure you have the latest version of optimum-intel with all the necessary libraries installed:

    pip install --upgrade --upgrade-strategy eager "optimum[openvino,nncf]"

This integration makes quantizing Phi-2 to 4-bit straightforward. We define a quantization configuration, set the optimization parameters, and load the model from the hub. Once it has been quantized and optimized, we store it locally.

    from transformers import AutoTokenizer, pipeline
    from optimum.intel import OVModelForCausalLM, OVWeightQuantizationConfig

    model_id = "microsoft/phi-2"
    device = "gpu"

    # Quantization configuration: 4-bit weights, groups of 128, 80% of weights in 4-bit
    q_config = OVWeightQuantizationConfig(bits=4, group_size=128, ratio=0.8)

    # OpenVINO runtime settings for this model
    ov_config = {"PERFORMANCE_HINT": "LATENCY", "CACHE_DIR": "model_cache", "INFERENCE_PRECISION_HINT": "f32"}

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = OVModelForCausalLM.from_pretrained(
        model_id,
        export=True,  # convert to OpenVINO format; use False if the model is already exported
        quantization_config=q_config,
        device=device,
        ov_config=ov_config,
    )

    # Compile explicitly; otherwise compilation happens before the first inference
    model.compile()
    pipe = pipeline("text-generation", model=model, tokenizer=tokenizer)
    results = pipe("He's a dreadful magician and")

    # Store the quantized model and its tokenizer locally
    save_directory = "phi-2-openvino"
    model.save_pretrained(save_directory)
    tokenizer.save_pretrained(save_directory)

The ratio parameter controls the fraction of weights we’ll quantize to 4-bit (here, 80%), with the rest quantized to 8-bit. The group_size parameter defines the size of the weight quantization groups (here, 128), each group having its own scaling factor. Decreasing these two values usually improves accuracy at the expense of model size and inference latency.
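The trade-off behind these two parameters can be sketched in plain Python. The code below is an illustration under stated assumptions, not the algorithm that NNCF or OpenVINO actually runs: it assumes symmetric max-abs scaling per group, a 16-bit scaling factor per 4-bit group, and no overhead for the 8-bit part. The function names and sample weights are hypothetical.

```python
def quantize_groupwise(weights, bits=4, group_size=128):
    """Quantize then dequantize, using one scaling factor per group."""
    qmax = 2 ** (bits - 1) - 1  # 7 for signed 4-bit values
    restored = []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        max_abs = max(abs(w) for w in group)
        scale = max_abs / qmax if max_abs > 0 else 1.0
        restored.extend(round(w / scale) * scale for w in group)
    return restored


def mean_abs_error(original, restored):
    return sum(abs(a - b) for a, b in zip(original, restored)) / len(original)


def average_bits_per_weight(ratio, group_size, scale_bits=16):
    """Rough storage estimate: 4-bit groups carry one scale each (assumption)."""
    four_bit_cost = 4 + scale_bits / group_size
    eight_bit_cost = 8  # scale overhead of the 8-bit part ignored (assumption)
    return ratio * four_bit_cost + (1 - ratio) * eight_bit_cost


# Hypothetical weights: small values next to a few large ones.
weights = [0.1, -0.2, 0.3, -0.1, 7.0, -3.5, 1.0, 2.0]
error_one_group = mean_abs_error(weights, quantize_groupwise(weights, group_size=8))
error_two_groups = mean_abs_error(weights, quantize_groupwise(weights, group_size=4))

bits = average_bits_per_weight(ratio=0.8, group_size=128)
estimated_gb = 2.7e9 * bits / 8 / 1e9  # 2.7 billion parameters, as for Phi-2
print(error_one_group, error_two_groups, bits, estimated_gb)
```

With smaller groups, the small weights no longer share a scale with the large ones, so their rounding error drops, while the extra scaling factors raise the average number of bits per weight. Under these assumptions, the settings above cost about 4.9 bits per weight, compared with 16 for fp16.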

You can find more information on weight quantization in our documentation.

NOTE: the entire notebook with text generation examples is available on GitHub.

So, how fast is the quantized model on our laptop? Watch the following videos to see for yourself. Remember to select the 1080p resolution for maximum sharpness.

The first video asks our model a high-school physics question: “Lily has a rubber ball that she drops from the top of a wall. The wall is 2 meters tall. How long will it take for the ball to reach the ground?”
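As a reference point for judging the model’s answer, the expected result can be computed directly. This sketch assumes the ball is dropped from rest, air resistance is ignored, and g is 9.81 m/s²; the function name `free_fall_time` is made up for this example.

```python
import math


def free_fall_time(height_m, g=9.81):
    """Time to fall height_m from rest: h = g * t**2 / 2, so t = sqrt(2h / g)."""
    return math.sqrt(2 * height_m / g)


fall_time = free_fall_time(2.0)
print(f"{fall_time:.2f} s")  # prints "0.64 s"
```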

The second video asks our model a coding question: “Write a class which implements a fully connected layer with forward and backward functions using numpy. Use markdown markers for code.”

As you can see in both examples, the generated answer is of very high quality. The quantization process hasn’t degraded the high quality of Phi-2, and the generation speed is adequate. I would be happy to work locally with this model every day.
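To put a number on generation speed on your own machine, a small timing helper is enough. The sketch below is generic: `generate_fn` is a hypothetical callable that takes a prompt and returns how many new tokens it produced, and `fake_generate` is a stand-in so the example runs without a model.

```python
import time


def tokens_per_second(generate_fn, prompt, runs=3):
    """Average generation throughput over several runs."""
    total_tokens = 0
    total_seconds = 0.0
    for _ in range(runs):
        start = time.perf_counter()
        total_tokens += generate_fn(prompt)
        total_seconds += time.perf_counter() - start
    return total_tokens / total_seconds


def fake_generate(prompt):
    """Stand-in for a real model call: pretends to emit 20 tokens."""
    time.sleep(0.01)
    return 20


rate = tokens_per_second(fake_generate, "Hello")
print(f"{rate:.1f} tokens/s")
```

With a real model, `generate_fn` would wrap the generation call and count the new tokens with the tokenizer. A warm-up call before measuring is worthwhile, because the first inference can include one-time costs such as model compilation.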

Conclusion

Thanks to Hugging Face and Intel, you can now run LLMs on your laptop, enjoying the many benefits of local inference, like privacy, low latency, and low cost. We hope to see more quality models optimized for the Meteor Lake platform and its successor, Lunar Lake. The Optimum Intel library makes it very easy to quantize models for Intel platforms, so why not give it a try and share your excellent models on the Hugging Face Hub? We can always use more!

Here are some resources to help you get started:

If you have questions or feedback, we’d love to answer them on the Hugging Face forum.

Thanks for reading!