Whisper is one of the best open source speech recognition models and definitely the most widely used. Hugging Face Inference Endpoints make it very easy to deploy any Whisper model out of the box. However, if you’d like to introduce additional features, like a diarization pipeline to identify speakers, or assisted generation for speculative decoding, things get trickier. The reason is that you need to combine Whisper with additional models, while still exposing a single API endpoint.

We’ll solve this challenge using a custom inference handler, which will implement the Automatic Speech Recognition (ASR) and diarization pipeline on Inference Endpoints, as well as supporting speculative decoding. The implementation of the diarization pipeline is inspired by the famous Insanely Fast Whisper, and it uses a Pyannote model for diarization.

This will also be a demonstration of how flexible Inference Endpoints are and that you can host pretty much anything there. Here is the code to follow along. Note that during initialization of the endpoint, the whole repository gets mounted, so your handler.py can refer to other files in your repository if you prefer not to have all of the logic in a single file. In this case, we decided to separate things into several files to keep things clean:

- handler.py contains initialization and inference code

- diarization_utils.py has all of the diarization-related pre- and post-processing

- config.py has ModelSettings and InferenceConfig. ModelSettings defines which models will be used in the pipeline (you don’t have to use all of them), and InferenceConfig defines the default inference parameters
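For orientation, a custom handler on Inference Endpoints is a class named EndpointHandler whose constructor receives the repository path and whose `__call__` receives the request payload. The sketch below is a minimal, model-free skeleton under that convention. The `_transcribe` stub and its return shape are placeholders for illustration, not the repository’s actual code.

```python
import base64
from typing import Any, Dict


class EndpointHandler:
    """Minimal, model-free skeleton of a custom inference handler (illustrative)."""

    def __init__(self, path: str = "") -> None:
        # `path` points at the mounted repository, so sibling files
        # (e.g. config.py, diarization_utils.py) can be loaded from there.
        self.path = path

    def _transcribe(self, audio_bytes: bytes, parameters: Dict[str, Any]) -> Dict[str, Any]:
        # Placeholder: a real handler would run the ASR (and optionally
        # diarization) pipeline here. This stub only reports what it received.
        return {"num_bytes": len(audio_bytes), "parameters": parameters}

    def __call__(self, data: Dict[str, Any]) -> Dict[str, Any]:
        # The payload carries base64-encoded audio under "inputs" and
        # optional inference parameters under "parameters".
        audio_bytes = base64.b64decode(data["inputs"])
        parameters = data.get("parameters") or {}
        return self._transcribe(audio_bytes, parameters)


if __name__ == "__main__":
    payload = {
        "inputs": base64.b64encode(b"fake-audio").decode("utf-8"),
        "parameters": {"batch_size": 1},
    }
    print(EndpointHandler(path="/repository")(payload))
```

Keeping `__call__` thin like this makes it easy to move pre- and post-processing into separate modules, which is the split described in the list above.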

Starting with PyTorch 2.2, SDPA supports Flash Attention 2 out of the box, so we’ll use that version for faster inference.

The main modules

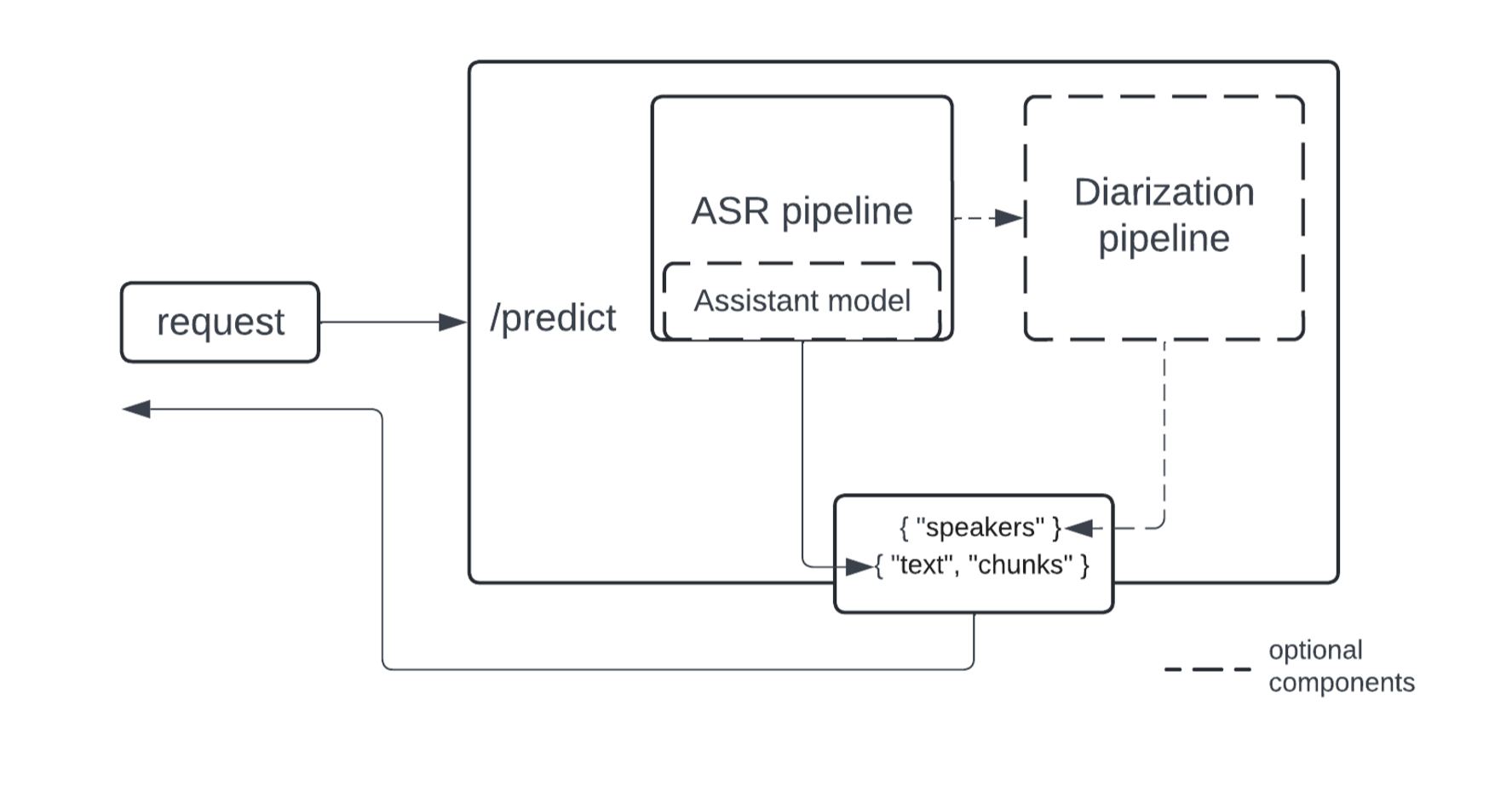

This can be a high-level diagram of what the endpoint looks like under the hood:

The implementation of the ASR and diarization pipelines is modularized to cater to a wider range of use cases: the diarization pipeline operates on top of ASR outputs, and you can use only the ASR part if diarization is not needed. For diarization, we suggest using the Pyannote model, currently a SOTA open source implementation.
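To make “operates on top of ASR outputs” concrete, here is a simplified sketch that labels each timestamped ASR chunk with the speaker whose diarization segment overlaps it the most. It assumes ASR chunks shaped like `{"text": ..., "timestamp": (start, end)}` and speaker segments given as `(start, end, speaker)` tuples. The function names are hypothetical, and the alignment logic in diarization_utils.py may well differ.

```python
from typing import Dict, List, Optional, Tuple

Segment = Tuple[float, float, str]  # (start_seconds, end_seconds, speaker_label)


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length in seconds of the intersection of two time intervals."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def assign_speakers(asr_chunks: List[Dict], speaker_segments: List[Segment]) -> List[Dict]:
    """Attach to every ASR chunk the speaker with the largest time overlap."""
    labeled = []
    for chunk in asr_chunks:
        start, end = chunk["timestamp"]
        best_speaker: Optional[str] = None
        best_overlap = 0.0
        for seg_start, seg_end, speaker in speaker_segments:
            shared = overlap(start, end, seg_start, seg_end)
            if shared > best_overlap:
                best_speaker, best_overlap = speaker, shared
        # Chunks that overlap no segment keep speaker=None.
        labeled.append({**chunk, "speaker": best_speaker})
    return labeled


if __name__ == "__main__":
    chunks = [
        {"text": " Hello there.", "timestamp": (0.0, 2.0)},
        {"text": " Hi!", "timestamp": (2.0, 3.5)},
    ]
    segments = [(0.0, 2.1, "SPEAKER_00"), (2.1, 4.0, "SPEAKER_01")]
    for item in assign_speakers(chunks, segments):
        print(item["speaker"], item["text"])
```

Because the merge step only needs timestamps and speaker segments, the ASR part can run on its own when no diarization model is configured.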

We’ll also add speculative decoding as a way to speed up inference. The speedup is achieved by using a smaller and faster model to suggest generations that are validated by the larger model. Learn more about how it works with Whisper specifically in this great blog post.
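The idea can be illustrated with a toy, greedy version of speculative decoding in plain Python. Here the two “models” are hypothetical functions that map a token prefix to the next token; this is a sketch of the general technique, not of how Whisper or transformers implement it. A real implementation validates all drafted tokens in a single forward pass of the main model, which is where the speedup comes from; the sequential calls below are only for readability.

```python
from typing import Callable, List

NextToken = Callable[[List[int]], int]  # maps a token prefix to the next token


def greedy_decode(model: NextToken, prompt: List[int], max_new_tokens: int) -> List[int]:
    """Plain greedy decoding with a single model."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tokens.append(model(tokens))
    return tokens


def speculative_decode(main: NextToken, assistant: NextToken, prompt: List[int],
                       max_new_tokens: int, num_draft: int = 4) -> List[int]:
    """Assistant drafts tokens; the main model validates them one by one."""
    tokens = list(prompt)
    target_len = len(prompt) + max_new_tokens
    while len(tokens) < target_len:
        # 1. The small model drafts a few tokens autoregressively.
        draft: List[int] = []
        for _ in range(num_draft):
            draft.append(assistant(tokens + draft))
        # 2. The main model checks each drafted token. Its own token is always
        #    the one kept, so the output matches main-only greedy decoding.
        for proposed in draft:
            verified = main(tokens)
            tokens.append(verified)
            if verified != proposed or len(tokens) == target_len:
                break  # first mismatch: discard the rest of the draft
    return tokens


def main_model(tokens: List[int]) -> int:
    return (3 * tokens[-1] + 1) % 11


def assistant_model(tokens: List[int]) -> int:
    # Agrees with the main model most of the time, but not always.
    guess = main_model(tokens)
    return guess if len(tokens) % 4 else (guess + 1) % 11


if __name__ == "__main__":
    print(speculative_decode(main_model, assistant_model, [2], 12))
    print(greedy_decode(main_model, [2], 12))
```

Both calls print the same sequence: the assistant only changes how quickly tokens are produced, never which tokens are produced.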

Speculative decoding comes with restrictions:

- at least the decoder part of the assistant model must have the same architecture as that of the main model

- the batch size must be 1

Make sure to take the above into account. Depending on your production use case, supporting larger batches can be faster than speculative decoding. If you don’t want to use an assistant model, just keep assistant_model in the configuration as None.

If you do use an assistant model, a great choice for Whisper is a distilled version.

Set up your own endpoint

The easiest way to start is to clone the custom handler repository using the repo duplicator.

Here is the model loading piece from the handler.py:

    from pyannote.audio import Pipeline
    from transformers import pipeline, AutoModelForCausalLM

    ...

    # ASR pipeline: the only required component
    self.asr_pipeline = pipeline(
        "automatic-speech-recognition",
        model=model_settings.asr_model,
        torch_dtype=torch_dtype,
        device=device
    )

    # Optional assistant model used for speculative decoding
    self.assistant_model = AutoModelForCausalLM.from_pretrained(
        model_settings.assistant_model,
        torch_dtype=torch_dtype,
        low_cpu_mem_usage=True,
        use_safetensors=True
    )

    ...

    # Optional diarization pipeline; the token is passed explicitly
    self.diarization_pipeline = Pipeline.from_pretrained(
        checkpoint_path=model_settings.diarization_model,
        use_auth_token=model_settings.hf_token,
    )

    ...

You can customize the pipeline based on your needs. ModelSettings, in the config.py file, holds the parameters used for initialization, defining the models to use during inference:

    class ModelSettings(BaseSettings):
        asr_model: str
        assistant_model: Optional[str] = None
        diarization_model: Optional[str] = None
        hf_token: Optional[str] = None

The parameters can be adjusted by passing environment variables with corresponding names; this works both with a custom container and with an inference handler. It’s a Pydantic feature. To pass environment variables to a container during build time, you’ll need to create an endpoint via an API call (not via the interface).
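To show what “environment variables with corresponding names” means in practice, the following is a standard-library stand-in for the Pydantic behavior: field names are matched against variable names case-insensitively, so ASR_MODEL populates asr_model. The class name `ModelSettingsSketch` and the `from_env` helper are hypothetical and exist only for illustration; the real ModelSettings relies on Pydantic’s BaseSettings, which also adds validation.

```python
import os
from dataclasses import dataclass, fields
from typing import Mapping, Optional


@dataclass
class ModelSettingsSketch:
    """Stdlib imitation of the env-to-field mapping (not the real class)."""

    asr_model: str
    assistant_model: Optional[str] = None
    diarization_model: Optional[str] = None
    hf_token: Optional[str] = None

    @classmethod
    def from_env(cls, env: Optional[Mapping[str, str]] = None) -> "ModelSettingsSketch":
        env = os.environ if env is None else env
        # Match variable names to field names case-insensitively.
        lowered = {key.lower(): value for key, value in env.items()}
        values = {f.name: lowered[f.name] for f in fields(cls) if f.name in lowered}
        # A missing asr_model raises TypeError here; Pydantic would raise
        # its own validation error instead.
        return cls(**values)


if __name__ == "__main__":
    settings = ModelSettingsSketch.from_env({
        "ASR_MODEL": "openai/whisper-large-v3",
        "ASSISTANT_MODEL": "distil-whisper/distil-large-v3",
    })
    print(settings)
```

Optional fields that have no matching variable simply keep their None default, which is how an unused assistant or diarization model stays disabled.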

You could hardcode model names instead of passing them as environment variables, but note that the diarization pipeline requires a token to be passed explicitly (hf_token). You are not allowed to hardcode your token for security reasons, which means you will have to create an endpoint via an API call in order to use a diarization model.

As a reminder, all of the diarization-related pre- and post-processing utils are in diarization_utils.py.

The only required component is an ASR model. Optionally, an assistant model can be specified to be used for speculative decoding, and a diarization model can be used to partition a transcription by speakers.

Deploy on Inference Endpoints

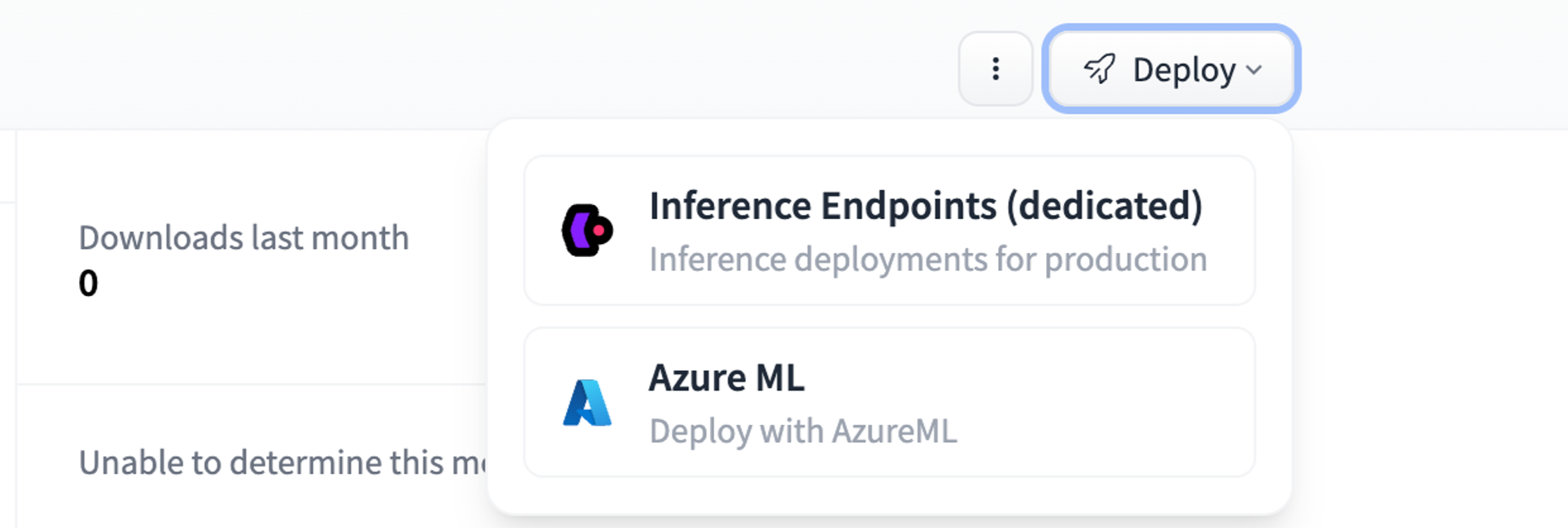

Should you only need the ASR part you possibly can specify asr_model/assistant_model within the config.py and deploy with a click of a button:

To pass environment variables to containers hosted on Inference Endpoints, you’ll need to create an endpoint programmatically using the provided API. Below is an example call:

    body = {
        "compute": {
            "accelerator": "gpu",
            "instanceSize": "medium",
            "instanceType": "g5.2xlarge",
            "scaling": {
                "maxReplica": 1,
                "minReplica": 0
            }
        },
        "model": {
            "framework": "pytorch",
            "image": {
                "huggingface": {
                    "env": {
                        "HF_MODEL_DIR": "/repository",
                        "DIARIZATION_MODEL": "pyannote/speaker-diarization-3.1",
                        "HF_TOKEN": "",  # your Hugging Face token goes here
                        "ASR_MODEL": "openai/whisper-large-v3",
                        "ASSISTANT_MODEL": "distil-whisper/distil-large-v3"
                    }
                }
            },
            "repository": "sergeipetrov/asrdiarization-handler",
            "task": "custom"
        },
        "name": "asr-diarization-1",
        "provider": {
            "region": "us-east-1",
            "vendor": "aws"
        },
        "type": "private"
    }
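A body like the one above still has to be sent to the Inference Endpoints management API. The sketch below builds the request with the standard library only and leaves the actual network call commented out. The base URL and the namespace path segment are assumptions on our side, so please check them against the current Inference Endpoints API reference; `build_create_request` is a hypothetical helper name.

```python
import json
import urllib.request
from typing import Any, Dict

# Assumption: base URL of the Inference Endpoints management API.
API_BASE = "https://api.endpoints.huggingface.cloud/v2/endpoint"


def build_create_request(namespace: str, token: str, body: Dict[str, Any]) -> urllib.request.Request:
    """Build (but do not send) a POST request that creates an endpoint."""
    return urllib.request.Request(
        url=f"{API_BASE}/{namespace}",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_create_request("my-namespace", "hf_xxx", {"name": "asr-diarization-1"})
    print(request.get_method(), request.full_url)
    # Sending requires network access and a valid token:
    # with urllib.request.urlopen(request) as resp:
    #     print(json.load(resp))
```

Separating request construction from sending keeps the token out of the body itself and makes the call easy to inspect before anything is created.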

When to use an assistant model

To give a better idea of when using an assistant model is beneficial, here’s a benchmark performed with k6:

    ASR_MODEL=openai/whisper-large-v3
    ASSISTANT_MODEL=distil-whisper/distil-large-v3

    long_assisted..................: avg=4.15s    min=3.84s    med=3.95s    max=6.88s    p(90)=4.03s    p(95)=4.89s
    long_not_assisted..............: avg=3.48s    min=3.42s    med=3.46s    max=3.71s    p(90)=3.56s    p(95)=3.61s
    short_assisted.................: avg=326.96ms min=313.01ms med=319.41ms max=960.75ms p(90)=325.55ms p(95)=326.07ms
    short_not_assisted.............: avg=784.35ms min=736.55ms med=747.67ms max=2s       p(90)=772.9ms  p(95)=774.1ms

As you can see, assisted generation gives dramatic performance gains when the audio is short (batch size is 1): average latency drops from about 784 ms to about 327 ms. If the audio is long, inference will automatically chunk it into batches, and speculative decoding may hurt inference time because of the limitations we discussed before (about 4.15 s versus 3.48 s on average here).
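The relative effect is easy to compute from the average latencies reported in the benchmark above. This small snippet only does that arithmetic; the dictionary layout and the `speedup` helper are ours.

```python
# Average latencies from the k6 benchmark above, in seconds.
benchmark = {
    "short": {"assisted": 0.32696, "not_assisted": 0.78435},
    "long": {"assisted": 4.15, "not_assisted": 3.48},
}


def speedup(assisted: float, not_assisted: float) -> float:
    """Values above 1 mean the assistant model helps; below 1 mean it hurts."""
    return not_assisted / assisted


if __name__ == "__main__":
    for name, latencies in benchmark.items():
        print(f"{name}: {speedup(**latencies):.2f}x")
```

On these numbers the short audio is roughly 2.4 times faster with the assistant model, while the long audio is roughly 0.84 times as fast, that is, slower than without it.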

Inference parameters

All of the inference parameters are in config.py:

    class InferenceConfig(BaseModel):
        task: Literal["transcribe", "translate"] = "transcribe"
        batch_size: int = 24
        assisted: bool = False
        chunk_length_s: int = 30
        sampling_rate: int = 16000
        language: Optional[str] = None
        num_speakers: Optional[int] = None
        min_speakers: Optional[int] = None
        max_speakers: Optional[int] = None

Of course, you can add or remove parameters as needed. The parameters related to the number of speakers are passed to the diarization pipeline, while all the others are mostly for the ASR pipeline. sampling_rate indicates the sampling rate of the audio to process and is used for preprocessing; the assisted flag tells the pipeline whether to use speculative decoding. Remember that for assisted generation the batch_size must be set to 1.
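Since the batch size constraint is easy to forget, one option is a small client-side helper that assembles the parameters and enforces it. The sketch below is a hypothetical convenience function, not part of the repository; the allowed names simply mirror the InferenceConfig fields shown above.

```python
from typing import Any, Dict

# Parameter names mirror the InferenceConfig fields shown above.
ALLOWED_PARAMETERS = {
    "task", "batch_size", "assisted", "chunk_length_s", "sampling_rate",
    "language", "num_speakers", "min_speakers", "max_speakers",
}


def build_parameters(**overrides: Any) -> Dict[str, Any]:
    """Build the "parameters" dictionary for a request (hypothetical helper)."""
    unknown = set(overrides) - ALLOWED_PARAMETERS
    if unknown:
        raise ValueError(f"Unknown inference parameters: {sorted(unknown)}")
    parameters = dict(overrides)
    if parameters.get("assisted"):
        # Speculative decoding only works with a batch size of 1,
        # so any other requested value is overridden here.
        parameters["batch_size"] = 1
    return parameters


if __name__ == "__main__":
    print(build_parameters(assisted=True, language="en"))
    print(build_parameters(batch_size=8, num_speakers=2))
```

Rejecting unknown names on the client is a design choice of this sketch; it surfaces typos early instead of sending parameters that would have no effect.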

Payload

Once deployed, send your audio along with the inference parameters to your inference endpoint, like this (in Python):

    import base64
    import requests

    API_URL = ""   # your endpoint URL
    HF_TOKEN = ""  # your Hugging Face token
    filepath = "/path/to/audio"

    # Read the audio file and encode it as base64 text for the JSON payload
    with open(filepath, "rb") as f:
        audio_encoded = base64.b64encode(f.read()).decode("utf-8")

    data = {
        "inputs": audio_encoded,
        "parameters": {
            "batch_size": 24
        }
    }

    resp = requests.post(API_URL, json=data, headers={"Authorization": f"Bearer {HF_TOKEN}"})
    print(resp.json())

Here the “parameters” field is a dictionary that contains all the parameters you’d like to adjust from the InferenceConfig. Note that parameters not specified in the InferenceConfig will be ignored.

Or with InferenceClient (there’s also an async version):

    import base64

    from huggingface_hub import InferenceClient

    # Fill in your endpoint URL and your Hugging Face token
    client = InferenceClient(model="", token="")

    with open("/path/to/audio", "rb") as f:
        audio_encoded = base64.b64encode(f.read()).decode("utf-8")

    data = {
        "inputs": audio_encoded,
        "parameters": {
            "batch_size": 24
        }
    }

    res = client.post(json=data)

Recap

In this blog, we discussed how to set up a modularized ASR + diarization + speculative decoding pipeline with Hugging Face Inference Endpoints. We did our best to make it easy to configure and adjust the pipeline as needed, and deployment with Inference Endpoints is always a piece of cake! We’re lucky to have great models and tools openly available to the community that we used in the implementation, including Whisper, Pyannote, and Insanely Fast Whisper.

There is a repo that implements the same pipeline along with the server part (FastAPI+Uvicorn). It may come in handy if you’d like to customize it even further or host it somewhere else.