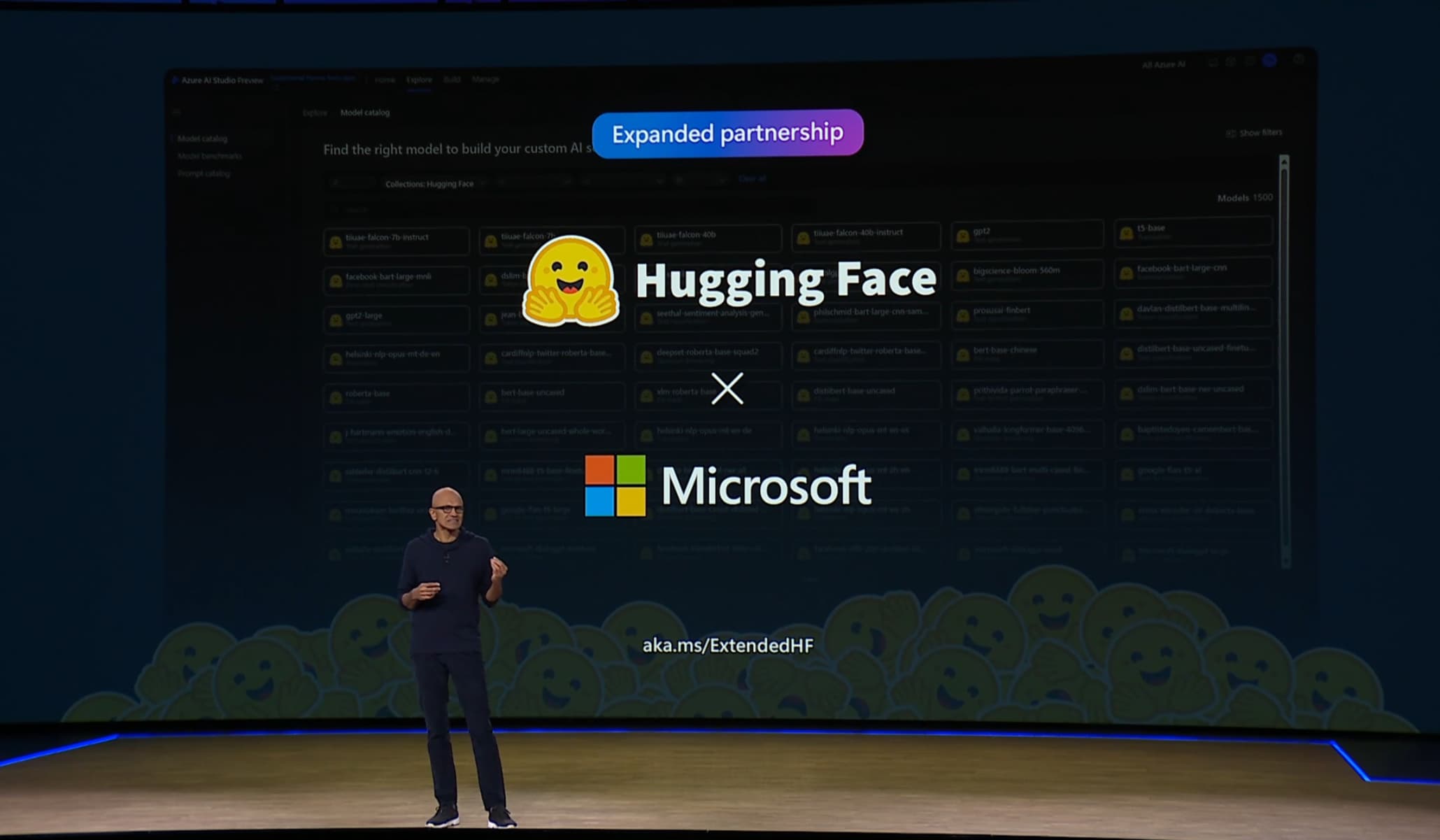

Today at Microsoft Build, we’re pleased to announce a broad set of new features and collaborations as Microsoft and Hugging Face deepen their strategic collaboration to make open models and open source AI easier to use everywhere. Together, we’ll work to enable AI builders across open science, open source, cloud, hardware and developer experiences. Read on for today’s announcements on all fronts!

A Collaboration for Cloud AI Builders

We’re excited to announce two major new experiences for building AI with open models on Microsoft Azure.

Expanded HF Collection in Azure Model Catalog

A year ago, Hugging Face and Microsoft unveiled the Hugging Face Collection in the Azure Model Catalog. The Hugging Face Collection has been used by hundreds of Azure AI customers, with over a thousand open models available since its introduction. Today, we’re adding some of the most popular open Large Language Models to the Hugging Face Collection to enable direct, 1-click deployment from Azure AI Studio.

The new models include Llama 3 from Meta, Mistral 7B from Mistral AI, Command R Plus from Cohere for AI, Qwen 1.5 110B from Qwen, and some of the highest performing fine-tuned models on the Open LLM Leaderboard from the Hugging Face community.

To deploy the models in your own Azure account, you can start from the model card on the Hugging Face Hub and select the “Deploy on Azure” option.

Alternatively, you can find the model directly in Azure AI Studio within the Hugging Face Collection and click “Deploy”.

Build AI with the New AMD MI300X on Azure

Today, Microsoft made new Azure ND MI300X virtual machines (VMs) generally available on Azure, based on the latest AMD Instinct MI300 GPUs. Hugging Face collaborated with AMD and Microsoft to achieve amazing performance and price/performance for Hugging Face models on the new virtual machines.

This work leverages our deep collaboration with AMD and our open source library Optimum-AMD, with optimization, ROCm integrations and continuous testing of Hugging Face open source libraries and models on AMD Instinct GPUs.

A Collaboration for Open Science

Microsoft has been releasing some of the most popular open models on Hugging Face, with close to 300 models currently available in the Microsoft organization on the Hugging Face Hub.

This includes the recent Phi-3 family of models, which are permissively licensed under MIT and perform well above their weight class. For example, with only 3.8 billion parameters, Phi-3 mini outperforms many of the larger 7 to 10 billion parameter large language models, which makes the models excellent candidates for on-device applications.
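To make the on-device point concrete, here is a rough back-of-the-envelope sketch of how much storage the weights of a 3.8 billion parameter model need at a few common precisions. The parameter count comes from the paragraph above; the list of precisions is our own illustrative assumption, and the estimate covers weights only (no activations, KV cache or runtime overhead).

```python
# Parameter count of Phi-3 mini, as stated in the text above.
PARAMS = 3.8e9

# Bytes needed to store one weight at each precision.
# These precisions are illustrative assumptions, not formats named in the post.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_footprint_gb(num_params: float, bytes_per_param: float) -> float:
    """Return the weight storage size in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Weights-only estimate for every precision in the table above.
footprints = {
    name: weight_footprint_gb(PARAMS, size)
    for name, size in BYTES_PER_PARAM.items()
}

for name, gb in footprints.items():
    print(f"{name}: {gb:.1f} GB")
```

Under these assumptions the weights take about 7.6 GB in 16-bit precision and about 1.9 GB at 4 bits per weight, which is the range where laptops and phones become plausible targets.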

To demonstrate the capabilities of Phi-3, Hugging Face deployed Phi-3 mini in Hugging Chat, its free consumer application to chat with the best open models and create assistants.

A Collaboration for Open Source

Hugging Face and Microsoft have been collaborating for three years to make it easy to export and use Hugging Face models with ONNX Runtime, through the Optimum open source library.
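As a minimal sketch of what this export path can look like, the function below loads a Hub model through Optimum's ONNX Runtime classes, converts it to ONNX on the fly, and runs it in a regular Transformers pipeline. The function name `export_and_classify` and the default model id are our own illustrative choices, and the code assumes `optimum[onnxruntime]` and `transformers` are installed and the Hub is reachable.

```python
def export_and_classify(
    text: str,
    model_id: str = "distilbert-base-uncased-finetuned-sst-2-english",
):
    """Export a Hub text-classification model to ONNX and run it on `text`.

    Hypothetical helper for illustration; the default model id is an
    assumption, and any compatible text-classification model should work.
    """
    # Imported lazily so this module loads even without the optional packages.
    from optimum.onnxruntime import ORTModelForSequenceClassification
    from transformers import AutoTokenizer, pipeline

    tokenizer = AutoTokenizer.from_pretrained(model_id)

    # export=True converts the PyTorch checkpoint to ONNX while loading.
    model = ORTModelForSequenceClassification.from_pretrained(
        model_id, export=True
    )

    # The ONNX Runtime model plugs into the usual Transformers pipeline.
    classifier = pipeline("text-classification", model=model, tokenizer=tokenizer)
    return classifier(text)
```

Calling `export_and_classify("Open models on ONNX Runtime are great!")` downloads the checkpoint, exports it, and returns the pipeline's list of label and score dictionaries. For repeated use, the exported model can be written to disk once with `model.save_pretrained(...)` instead of re-exporting on every call.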

Recently, Hugging Face and Microsoft have been focusing on enabling local inference through WebGPU, leveraging Transformers.js and ONNX Runtime Web. Read more about the collaboration in this community article by the ONNX Runtime team.

To see the power of WebGPU in action, check out this demo of Phi-3 generating over 70 tokens per second locally in the browser!

A Collaboration for Developers

Last but not least, today we’re unveiling a new integration that makes it easier than ever for developers to build AI applications with Hugging Face Spaces and VS Code!

The Hugging Face community has created over 500,000 AI demo applications on the Hub with Hugging Face Spaces. With the new Spaces Dev Mode, Hugging Face users can easily connect their Space to their local VS Code, or spin up a web-hosted VS Code environment.

Spaces Dev Mode is currently in beta and available to PRO subscribers. To learn more, see “Introducing Spaces Dev mode for a seamless developer experience” or the documentation.

What’s Next

We’re excited to deepen our strategic collaboration with Microsoft to make open-source AI more accessible everywhere. Stay tuned as we enable more models in the Azure AI Studio model catalog and introduce new features and experiences in the months to come.