Good morning, AI enthusiasts. The app that pioneered filter culture is now declaring the curated aesthetic dead.

Instagram head Adam Mosseri says AI content has made polished posts worthless as proof of authenticity, and the platform that built its empire on the perfect grid is now scrambling to adapt to the new dynamics of social media in the AI age.

In today’s AI rundown:

- IG head says platform must “evolve fast” because of AI
- DeepSeek hints at next-gen AI architecture
- Use Codex to write code on the web with AI agents
- Report: OAI overhauling audio for upcoming device
- 4 new AI tools, community workflows, and more

LATEST DEVELOPMENTS

📸 IG head says platform must “evolve fast” because of AI

Image source: @mosseri on Threads / The Rundown

The Rundown: Instagram head Adam Mosseri just posted a year-end essay arguing that AI-generated content has killed the curated aesthetic that made the app famous, saying that raw, unpolished posts are now the only proof that something is real.

The main points:

- Mosseri says most users under 25 have already abandoned the polished grid for more personal photos shared in direct messages and “unflattering candids.”
- He also pushed for camera makers to cryptographically sign photos at capture to verify real media instead of just removing fakes.
- Mosseri said Instagram must “evolve” fast, predicting a shift from trusting the images you see to scrutinizing who posted them.
- Instagram plans to label AI content, surface more context about accounts, and build tools so creators can compete with AI.
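For readers curious what signing a photo at capture could look like, here is a minimal Python sketch. It is an illustration only, not Instagram's or any camera maker's actual scheme. It uses an HMAC from the standard library as a stand-in for the public-key signatures a real provenance system would use, and `DEVICE_KEY`, `sign_photo`, and `verify_photo` are hypothetical names invented for this example.

```python
import hashlib
import hmac

# Hypothetical device secret, for illustration only. A real camera would
# hold a private key in secure hardware and publish a public key instead.
DEVICE_KEY = b"example-camera-secret"


def sign_photo(image_bytes: bytes, key: bytes = DEVICE_KEY) -> str:
    """Return a hex tag that binds the key to these exact image bytes."""
    return hmac.new(key, image_bytes, hashlib.sha256).hexdigest()


def verify_photo(image_bytes: bytes, tag: str, key: bytes = DEVICE_KEY) -> bool:
    """Check that the image bytes still match the tag produced at capture."""
    expected = sign_photo(image_bytes, key)
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    original = b"raw sensor data from the camera"
    tag = sign_photo(original)
    print(verify_photo(original, tag))               # untouched photo
    print(verify_photo(original + b" edited", tag))  # altered photo
```

The point of the sketch is that any change to the bytes after capture makes verification fail, so a platform can confirm what is real rather than only hunting for what is fake. Because HMAC relies on a shared secret, anyone who can verify could also forge a tag, which is why a production system would need asymmetric signatures.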

Why it matters: IG was one of the pioneers of social media’s “filter culture”, so there’s some irony in it now declaring the death of authenticity. But the trend feels accurate, with both a shift in how younger users communicate and the flood of AI images, video, and content completely upending the traditional dynamics of social media platforms.

TOGETHER WITH NEBIUS

The Rundown: Nebius Token Factory just launched Post-training, the missing layer for teams building production-grade AI on open-source models. You can now fine-tune frontier models like DeepSeek V3, GPT-OSS 20B & 120B, and Qwen3 Coder across multi-node GPU clusters, with stability at up to 131k context.

What you get with Post-training:

- Models deeply adapted to your domain, tone, structure, and workflows
- One-click deployment with dedicated endpoints, SLAs, and zero-retention privacy
- Shift from generic base models to custom production engines

Start fine-tuning now – GPT-OSS 20B & 120B (Full FT + LoRA FT) is free until Jan 9.

DEEPSEEK

📈 DeepSeek hints at next-gen model architecture

Image source: Nano Banana Pro / The Rundown

The Rundown: DeepSeek just published new research proposing changes to how neural networks are structured, aiming for breakthroughs in model cost and stability, a possible preview of efficiency gains heading into its next major release.

The main points:

- The paper introduces mHC, a method that stabilizes and improves AI training at large scale while adding minimal extra computing cost.
- CEO Liang Wenfeng co-authored and personally uploaded the paper to arXiv, signaling continued hands-on involvement in the startup’s research.
- Tests on 3B, 9B, and 27B parameter models showed improved benchmark scores over existing methods, especially on reasoning tasks.
- The timing aligns with previous papers telegraphing DeepSeek’s moves, with similar research dropping before R1 and V3.

Why it matters: Last year’s DeepSeek moment made waves with R1 nearing frontier models at a fraction of the cost, and this paper hints that the lab may not be done finding efficiencies. Between increased access to advanced AI chips and these kinds of research breakthroughs, China’s releases will likely be more competitive than ever in 2026.

AI TRAINING

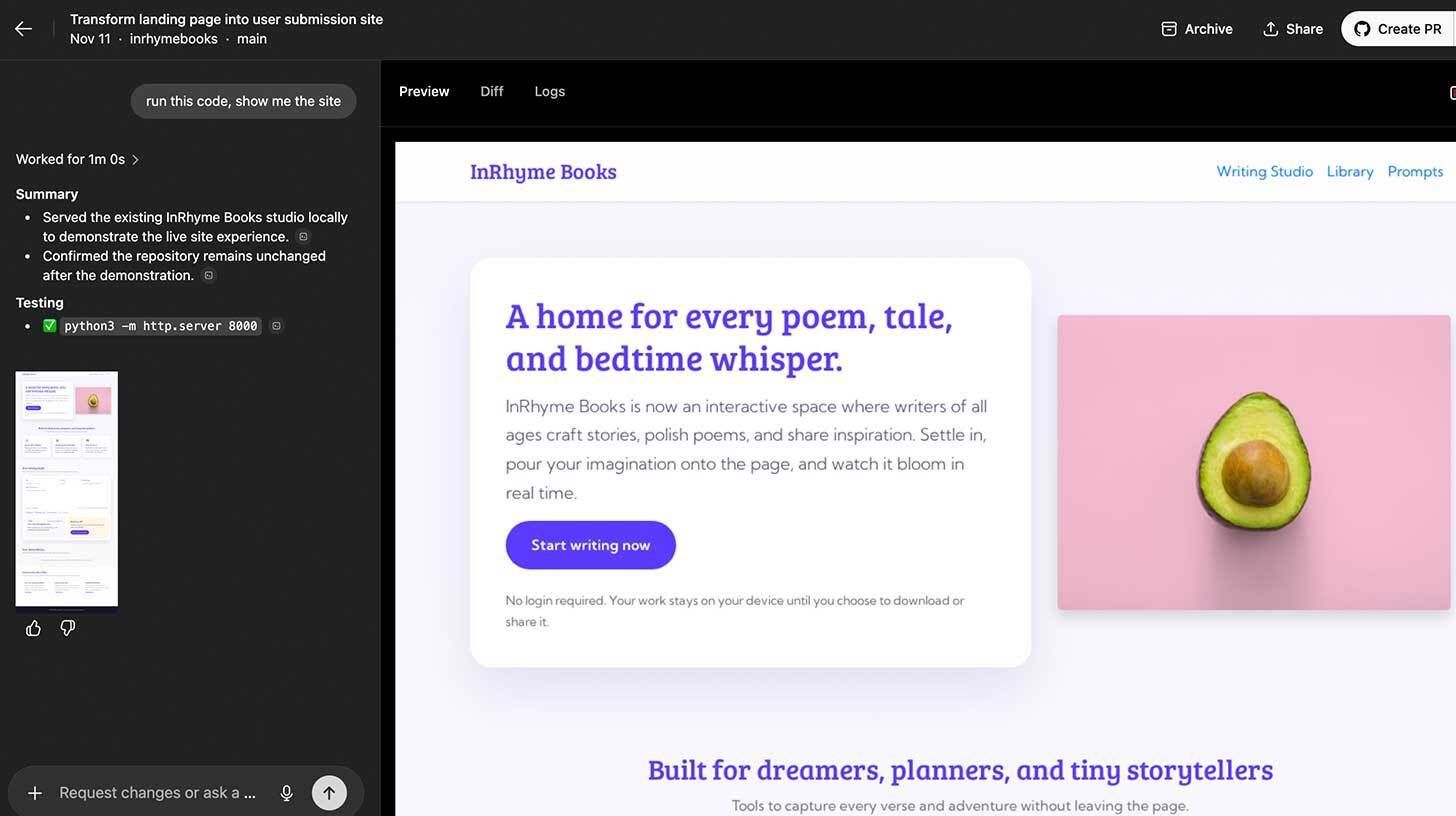

💻 Use Codex to write code on the web with AI agents

The Rundown: In this tutorial, you’ll learn how to use OpenAI’s Codex to ship your first change to a GitHub repository without writing code by hand: connecting a repo, planning changes, implementing them with AI agents, and opening pull requests.

Step-by-step:

- Go to ChatGPT, open the left sidebar, and click “Codex” to access it
- Click “Manage environment,” select your GitHub organization and repo, then configure code execution settings
- Select “Plan” to discuss scope without coding, or “Execute” to make changes on a branch (for example: “Can you give insights on what this project is about?”)
- Enter an implementation prompt (e.g., “Turn this static landing page into a website where users can paste their own stories and poetry”), preview changes with “Run this code and show me the site,” then click “Create PR” when satisfied

Pro tip: Use branches for safety. Avoid committing code directly to main unless required.

PRESENTED BY CDATA

The Rundown: CData’s 2026 State of AI Data Connectivity Report surveyed 200+ data and AI leaders on what’s working (and breaking) when connecting AI to enterprise systems at scale.

The report covers:

- Why only 6% of companies are satisfied with their data integration architecture for AI adoption
- How real-time connectivity and semantic intelligence define AI maturity
- What leading orgs are building to scale GenAI and agentic AI systems in 2026

OPENAI

🎙️ Report: OAI overhauling audio for upcoming device

Image source: OpenAI

The Rundown: OpenAI has reportedly consolidated multiple teams to improve its audio AI models, according to The Information, laying the groundwork for the company’s Jony Ive-led, voice-first personal device expected in about a year.

The main points:

- OAI’s voice models reportedly lag behind the text-based ChatGPT in accuracy and response speed, prompting the internal restructuring.
- An upgraded model due in Q1 2026 will let users talk over the AI mid-response without breaking conversation flow, for more natural interactions.
- The first device launch is reportedly still around a year out and will prioritize voice over screens, with glasses and a smart speaker also discussed.
- Ive’s design firm io, acquired for ~$6.5B in May, is leading the hardware, with an explicit goal of avoiding smartphone-style addiction.

Why it matters: OpenAI’s device ambitions are well publicized at this point, and the eventual reveal of the form factor for its hardware will be a big moment to watch in 2026. Ive’s involvement brings the pedigree and hype, but a graveyard of other AI wearables shows the category is still waiting for a true breakout success.

QUICK HITS

🛠️ Trending AI Tools

- 📑 Qwen Image Layered – Image AI that breaks outputs into layers for edits
- 🌌 ChatGPT Images – OpenAI’s upgraded image generation system
- 🤖 GLM-4.7 – Z AI’s new SOTA open-source model
- 📪 CC – Google Labs’ experimental AI productivity agent in Gmail

📰 Everything else in AI today

Chinese AI lab IQuest Labs released IQuest-Coder-V1, a new model family that claims to surpass rivals like Claude Sonnet 4.5 and GPT 5.1 on coding benchmarks.

LMArena posted the 2025 results for top AI models, with Google’s Gemini 3 Pro leading text, vision, and search, and Veo 3.1 models topping video rankings.

Chinese AI startup Kimi reportedly raised $500M in a new Series C round, bringing the company’s valuation to $4.3B.

SoftBank is acquiring DigitalBridge for $4B, adding a data center and digital infrastructure portfolio to the Japanese giant’s growing AI bet.

X user Martin_DeVido shared an experiment that gave Claude full control of keeping a tomato plant alive for over a month, with the model running the systems without human intervention.

COMMUNITY

🤝 Community AI workflows

Every newsletter, we showcase how a reader is using AI to work smarter, save time, or make life easier.

Today’s workflow comes from reader Prasanna A. in Atlanta, GA:

“When reading e-books, it can be difficult to retain and connect key concepts. To solve this, I leverage Google Gemini’s large context window by uploading the book’s text. I have the AI explain the key points of each chapter and analyze how they relate to previous sections using real-world examples tailored to my specific goals.

To ensure mastery, I conclude each chapter with a knowledge check and take a comprehensive exam once the book is finished. The true ‘magic’ happens at the intersection of the book’s theory and practical application.”

How do you use AI? Tell us here.

🎓 Highlights: News, Guides & Events

- Read our last AI newsletter: The Rundown’s 2025 year in review
- Read our last Tech newsletter: Meta buys AI startup Manus for $2B
- Read our last Robotics newsletter: Robotaxis that plug into your brain
- Today’s AI tool guide: Use Codex to write code on the web with AI agents

That’s it for today! Before you go, we’d love to know what you thought of today’s newsletter to help us improve The Rundown experience for you.


See you soon,

Rowan, Joey, Zach, Shubham, and Jennifer — the humans behind The Rundown