Good morning, AI enthusiasts. Adobe just wrapped up MAX 2025, taking its most ambitious leap yet toward an AI-powered future for creativity.

From embedding agentic assistants directly inside its apps to uniting the top AI models under one plan, the company is reshaping how creators imagine, design, and deliver content.

To explore these innovations and what they mean for both creators and enterprises, we sat down with David Wadhwani, President of the Digital Media business at Adobe, for an exclusive Q&A.

In today’s AI rundown:

- Adobe’s vision for the AI era
- Adobe Firefly, the all-in-one creative AI studio
- AI assistants for creative work
- Partnering to advance AI-powered creativity
- The ultimate creative skill for the AI age

LATEST DEVELOPMENTS

ADOBE’S VISION

🔮 Adobe’s vision for the AI era

Image: Kiki Wu / The Rundown

The Rundown: At MAX 2025, Adobe unveiled a unified AI strategy that brings the top creative models (spanning image, audio, and video) into one plan, while introducing conversational experiences powered by agentic AI across Adobe Firefly, Photoshop, and Adobe Express.

Cheung: You just wrapped up Adobe MAX, where AI took center stage. How is Adobe evolving its vision for this era of creativity, and what stood out most this year?

Wadhwani: I’ve attended MAX for 22 years, and our strategy has never been more important as creators navigate rapid changes in AI. At MAX, we introduced a single plan with access to leading models and creative tools for images, audio, and video.

Users can now generate unlimited images and videos through Dec. 1. The response has been incredible: creators are exploring the new Firefly app, and businesses are scaling up content production with our enterprise solutions.

Wadhwani added: As AI reshapes how we express ideas, our vision is to put creators at the center of two major forces reshaping creativity today: generative tools powered by AI models and conversational interfaces powered by AI agents.

We’re developing the best creative AI tools for a growing universe of customers. Our apps now integrate models from Adobe, Google, OpenAI, Runway, Luma AI, and others. We’re also bringing conversational experiences to Firefly, Adobe Express, and Photoshop.

Why it matters: By doubling down on generative and agentic experiences, Adobe is reimagining creativity as a shared process where humans imagine and AI builds, amplifying ideas at unprecedented scale. The company’s big bet: the next creative advances will come from collaboration between human intuition and machine intelligence.

FIREFLY

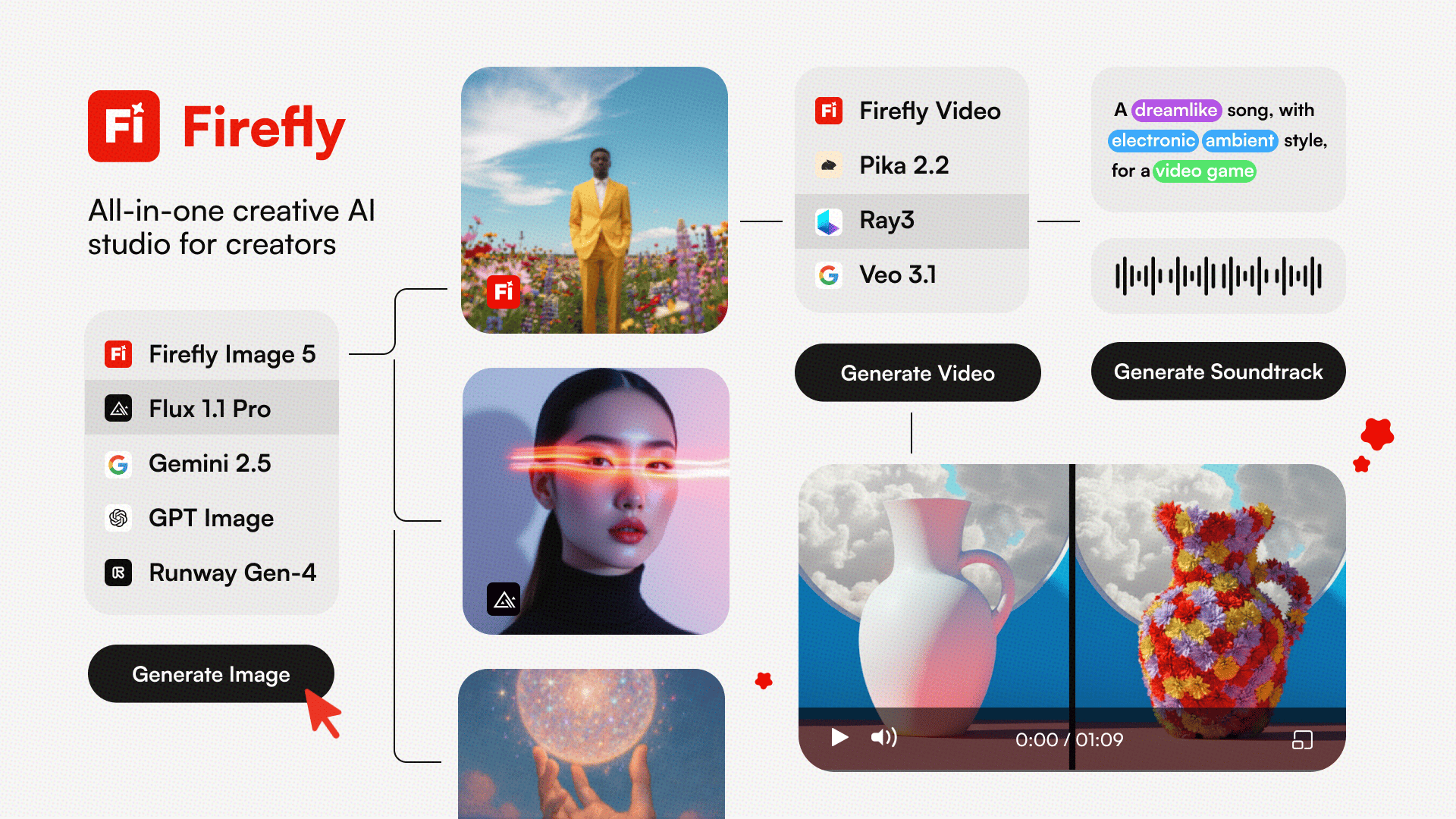

🧠 Adobe Firefly, the all-in-one creative AI studio

Image: Kiki Wu / The Rundown

The Rundown: Adobe is turning the Firefly app into a full creative AI studio, combining third-party models and its own commercially safe AI with pro-grade video, audio, and image tools so creators can ideate, produce, and deliver everything in one place.

Cheung: The Adobe Firefly app has evolved into an all-in-one creative AI studio. What’s the biggest change in how users will experience it?

Wadhwani: Firefly now supports every stage of content creation, with several AI model options and video, audio, imaging, and design tools—including studio-quality features like Generate Soundtrack, Generate Speech, and a timeline-based video editor.

Creators can now go from a spark of inspiration to a finished piece of content without ever leaving the app.

Cheung: You’ve also continued to work on Firefly models. Can you tell me more about the latest Firefly model innovations showcased at MAX?

Wadhwani: We introduced Firefly Image Model 5, which generates native 4MP images with photorealistic lighting, natural textures, and anatomical accuracy, while maintaining coherence across complex scenes.

New editing features like Prompt to Edit and Layered Editing give creators more control and flexibility. Audio capabilities have also expanded, with Generate Soundtrack powered by the Firefly Audio Model and Generate Speech, a text-to-speech tool built on the Firefly Speech Model and ElevenLabs’ AI.

Wadhwani added: With all Firefly models trained only on content Adobe has the rights to use, and never on user data, our models ensure creators can confidently use what they generate in their work and campaigns.

Why it matters: Adobe is bridging the fragmented creative experience that has long forced creators to stitch together different tools. With the Firefly app, the entire process (from idea to output) happens seamlessly in one place. It’s a shift from juggling software to simply creating.

AGENTIC AI

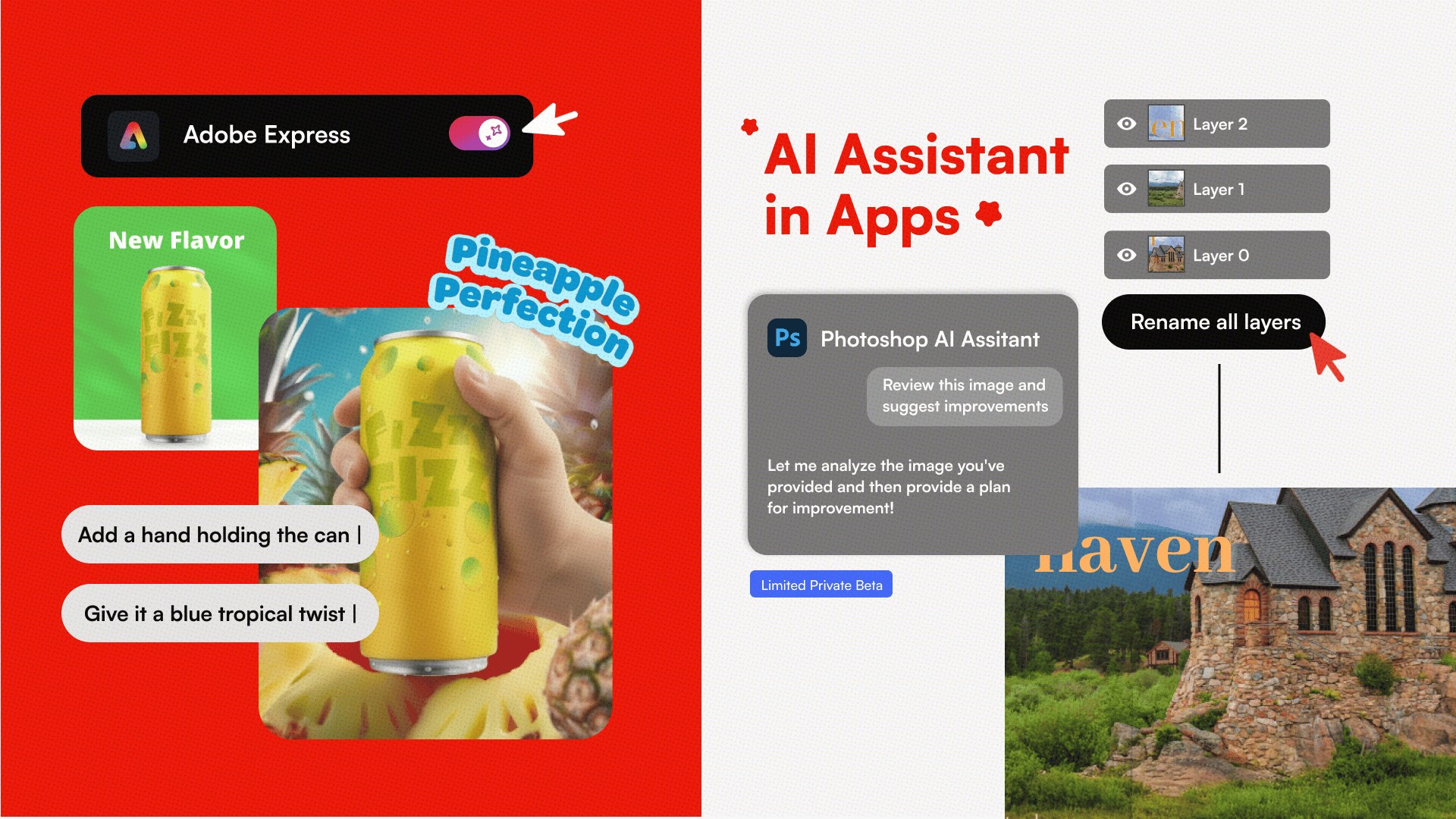

🤖 AI assistants for creative work

Image: Kiki Wu / The Rundown

The Rundown: Adobe is embedding agentic AI in its apps, turning assistants into active teammates that can perform repetitive tasks, automate workflows, and coordinate across projects, so that creators can focus on creating.

Cheung: Tell us about the agentic capabilities built by Adobe. What led to introducing agents directly inside tools, rather than offering them as new standalone products?

Wadhwani: Embedded directly into apps like Photoshop, Express, and Firefly, these AI assistants help creators move faster by automating repetitive tasks, offering personalized suggestions, and guiding creatives through complex workflows, with every action happening in context.

In Express, you can iterate on content with a simple chat and make changes without starting over. In Photoshop, you can ask for help organizing assets or applying edits in bulk, and easily switch between conversation and hands-on editing without ever leaving your canvas.

Wadhwani added: We also previewed Project Moonlight, a personal orchestration assistant for Firefly that connects workflows across multiple Adobe apps and beyond.

Why it matters: Agents that execute, adapt, and collaborate free creators from repetitive tasks and turn time once spent managing processes into time spent making ideas real. As these agents grow more capable, they could evolve into true creative partners that anticipate needs, refine ideas, and speed up the entire creative cycle.

PARTNERSHIPS

🤝 Partnering to advance AI-powered creativity

Image: Kiki Wu / The Rundown

The Rundown: Adobe has partnered with Google and YouTube to give creators more choice and flexibility to work with industry-leading tools, access top AI models, and create with confidence.

Cheung: You also announced several new partnerships at Adobe MAX. Can you elaborate on what they are and why they’re important?

Wadhwani: The partnerships we announced reflect our commitment to giving creators the best tools, the best models, and the confidence to bring their ideas to life on their own terms and true to their vision.

Our expanded partnership with Google Cloud will bring Google’s advanced AI models, including Gemini, Veo, and Imagen, into Adobe apps. Through Adobe Firefly Foundry, our enterprise customers will also be able to customize Google’s models with their own proprietary data to generate on-brand content experiences at scale.

We also announced a new partnership with YouTube, where creators will be able to use Premiere Mobile to access exclusive YouTube Shorts templates with Premiere’s pro-level mobile video editing, making it easier than ever to capture, edit, and publish standout content directly from their smartphones.

Why it matters: By partnering with giants like Google, Adobe is betting that the future of creativity will be shaped by collaboration, not competition. These partnerships also mark a shift toward ecosystem flexibility, where professionals can mix the best technology while staying true to their brand, workflow, and vision.

FUTURE

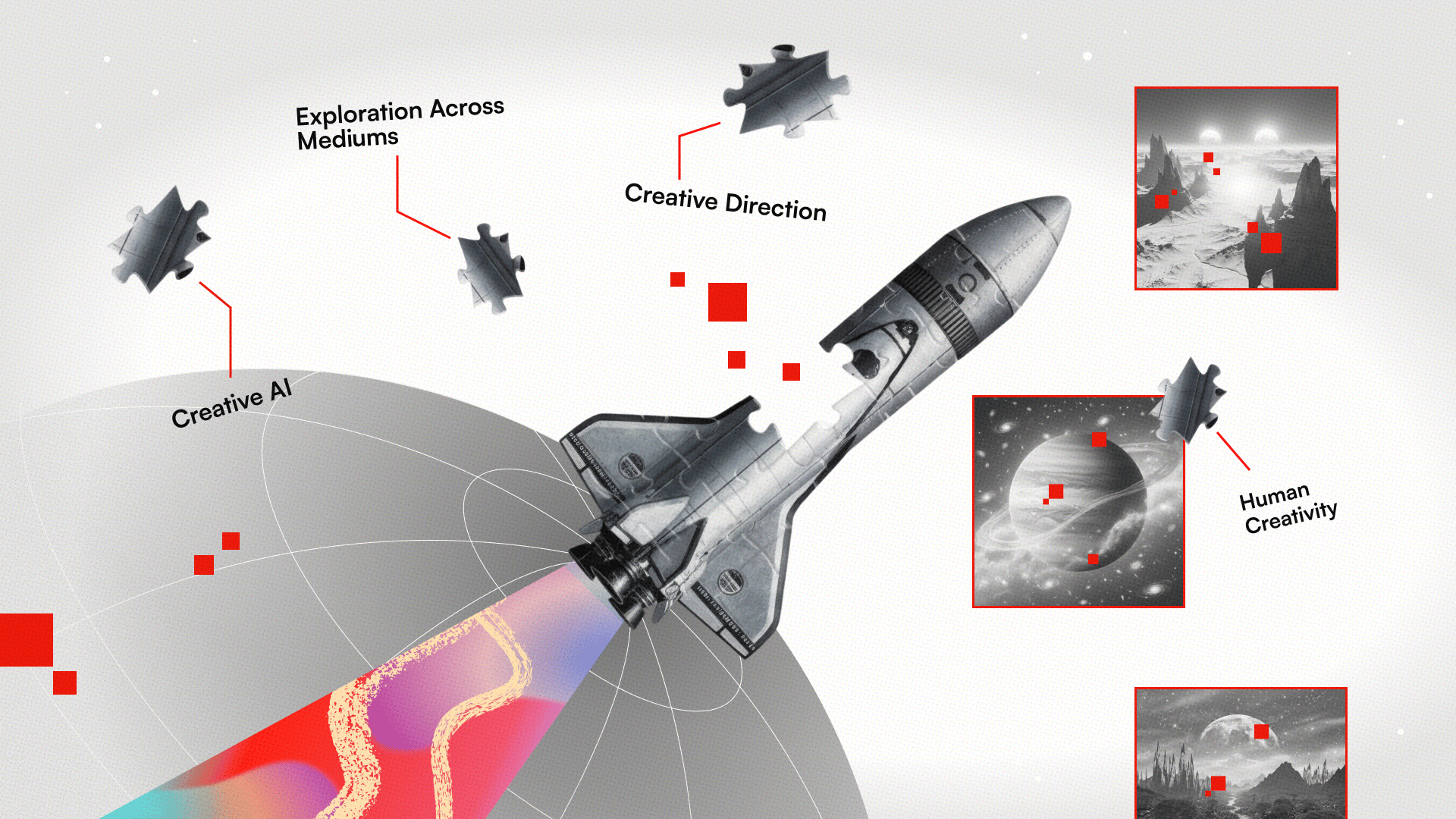

🚀 The ultimate skill for the age of creative AI

Image: Kiki Wu / The Rundown

The Rundown: As AI takes on more of the technical heavy lifting, Adobe believes the creators who stand out will be those who bring creative direction (the ability to guide the technology with vision and style) to the forefront.

Cheung: What skills or mindsets will become most important for creators to thrive in this AI-driven world?

Wadhwani: The most valuable skill in the AI era isn’t technical. It’s creative direction: the ability to guide technology with imagination, taste, and intent.

As AI handles more of the mechanical, repetitive work, what will continue to set creators apart is their vision, storytelling, and willingness to experiment across mediums. Those who embrace AI as another instrument in their creative toolbox will unlock entirely new ways to express themselves.

At Adobe, we’re building tools that make AI-powered creation effortless, so creators can focus on what only humans can do: imagine, connect, and move people through the power of creativity. Human creativity and emotion can’t be replaced.

Why it matters: In a world where AI can generate with speed and scale, Wadhwani believes human creativity remains the ultimate edge. Like many others in creative AI, Adobe also believes the future of creative work belongs to those who see AI as a collaborator, one that enhances imagination and brings ideas to life with greater impact.