Good morning. It’s Monday, October sixth.

On this day in tech history: In 1983, Fukushima’s lab published a paper detailing how the neocognitron actually worked. It covered layer-by-layer receptive fields, unsupervised competition between the “S” and “C” cells, and the way the model handled shift-invariant pattern recognition. It’s considered one of the clearest early ancestors of modern CNNs, with actual training details and implementation matrices included.
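The S-cell/C-cell split is easiest to see in a tiny example. Below is a minimal sketch, assuming a 1D input and a single hand-set feature template. S-cells respond where the template matches, and C-cells pool nearby S-cell responses so that a slightly shifted pattern produces the same output. The function names are hypothetical. Max pooling stands in for Fukushima’s original C-cell connections, and the unsupervised competitive learning described in the paper is not modeled.

```python
import numpy as np

def s_layer(x, template, threshold):
    # S-cells: correlate the input with a feature template, then keep only
    # the part of each response that exceeds the threshold (rectified match).
    response = np.correlate(x, template, mode="valid")
    return np.maximum(response - threshold, 0.0)

def c_layer(s, window):
    # C-cells: pool S-cell responses over non-overlapping local windows so a
    # small shift of the feature leaves the output unchanged. Max pooling is a
    # modern simplification, not the paper's exact connection scheme.
    n = int(np.ceil(len(s) / window))
    padded = np.pad(s, (0, n * window - len(s)))
    return padded.reshape(n, window).max(axis=1)

template = np.array([1.0, 1.0])      # detects two adjacent "on" inputs
x1 = np.zeros(12); x1[4:6] = 1.0     # pattern at positions 4-5
x2 = np.zeros(12); x2[5:7] = 1.0     # same pattern shifted right by one

s1, s2 = s_layer(x1, template, 1.5), s_layer(x2, template, 1.5)
c1, c2 = c_layer(s1, 4), c_layer(s2, 4)
print(c1, c2)  # both equal [0, 0.5, 0]
```

The S-layer outputs differ (the match moves from index 4 to index 5), but both land in the same pooling window, so the C-layer outputs are identical. That is the shift tolerance the neocognitron builds up layer by layer.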

-

OpenAI’s DevDay 2025

-

Tesla Optimus Knows Kung Fu

-

Gamer builds 5M-parameter ChatGPT model inside Minecraft

-

5 Latest AI Tools

-

Latest AI Research Papers

You read. We listen. Tell us what you think by replying to this email.

In partnership with WorkOS

Thanks for supporting our sponsors!

Today’s trending AI news stories

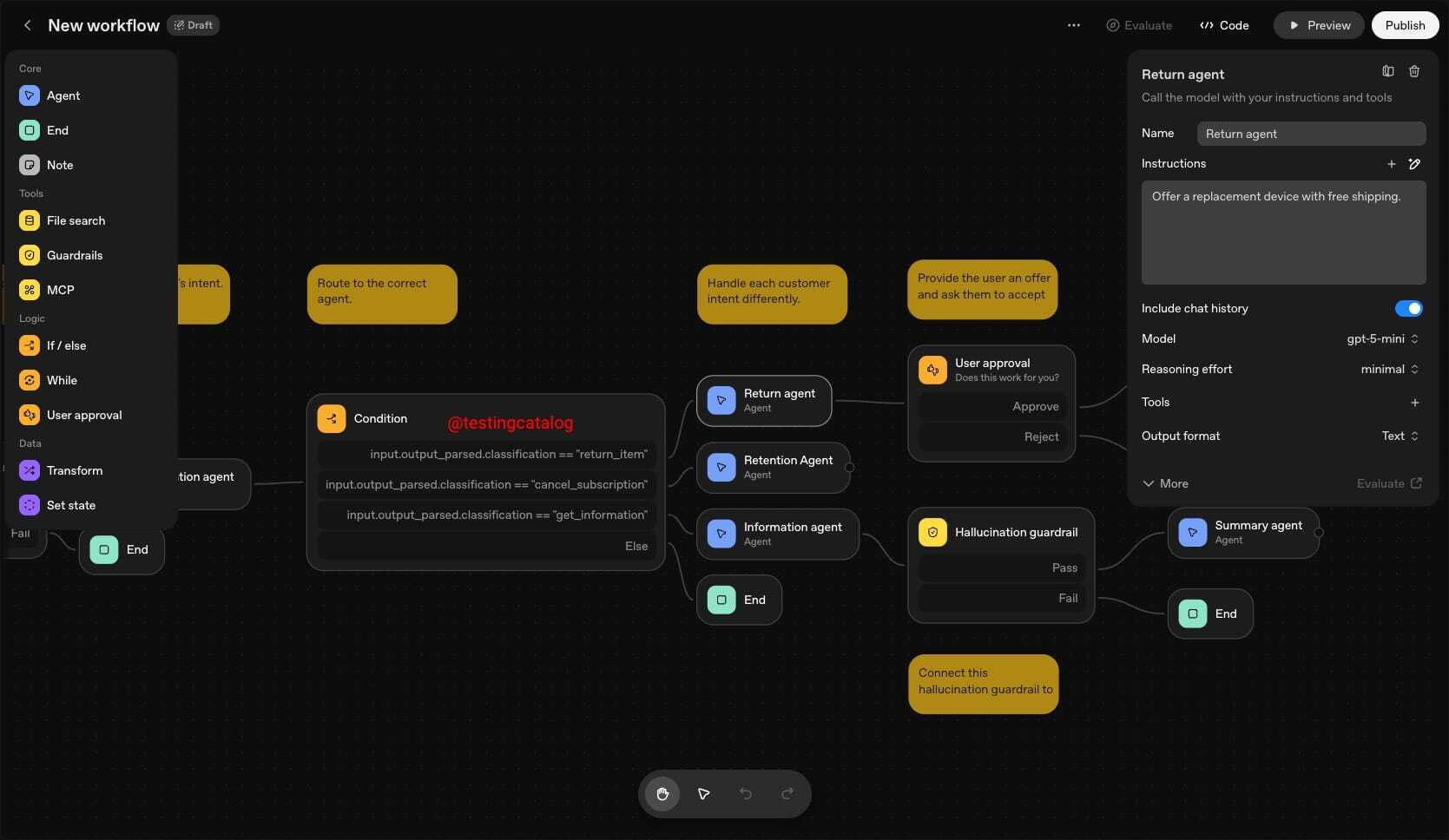

DevDay 2025 opens as OpenAI rolls out Agent Builder while Sora enters IP lockdown

OpenAI’s third DevDay, held October 6 in San Francisco, is drawing more than 1,500 developers as Sam Altman opens with a slate of product launches centered on new model capabilities, API updates, and agent infrastructure.

The marquee reveal is Agent Builder, a visual orchestration tool that lets developers drag together entire AI workflows from blocks for logic, loops, approvals, data transforms, file search, MCP connectors, and ChatKit modules. It’s built to handle both quick prototyping and production deployment. The rest of the agenda covers model behavior research, speech tooling, platform integrations, and Sora showcases, including interactive demos.

But Sora is also the company’s biggest liability right now. After users pumped out viral videos riffing on South Park and other protected IP, OpenAI is abandoning its “opt-out and take care of it later” stance and shifting to full opt-in for IP owners. Altman says studios and rights holders will get granular controls modeled on the system Sora already uses to block unauthorized biometric cameos. A possible revenue-sharing setup is on the table, though nobody seems to know what that looks like yet. Enforcement is going to be messy: style mixing, overlapping ownership claims, and the still-missing Media Manager tool mean OpenAI is plugging leaks without a real framework. Legal pressure is clearly dictating the timeline.

This Sora 2 copyright guardrail bs is just so sad. I had fun seeing familiar IPs having videos made of them. SpongeBob and Rick and Morty and all that. I don’t understand it. These tiny fan-made videos benefit these companies. I haven’t thought about Rick and Morty in forever.

– and (@and60784096)

7:16 AM • Oct 4, 2025

And yet, the tech keeps advancing: in a benchmark test from Epoch AI, Sora 2 answered GPQA science questions by generating short videos of a professor holding up handwritten answers. It scored 55 percent, well short of GPT-5’s 72 percent via text, but enough to show that video generation is starting to double as a reasoning output.

On hardware, the Jony Ive collaboration is hitting delays that will push it past its 2026 target. Sources say the team is still stuck on core technical problems: how the assistant should sound and behave, how to protect privacy on a device that’s always listening, and how to afford the compute needed for low-latency inference in a tiny, screenless form factor. The privacy model is also unclear, with on-device versus cloud processing still being debated. And the hardware needed to make it all work at scale may blow past budget constraints.

OpenAI is also continuing its quiet talent land grab. It just acqui-hired Roi, a personalized finance assistant that lets users define both tone and behavior while tracking assets across crypto, stocks, DeFi, and real estate. The move aligns with OpenAI’s growing focus on consumer-facing, adaptive products. Read more.

Tesla Optimus hits real-time kung fu milestone with AI-driven motion

Tesla’s humanoid robot, Optimus, just got a serious upgrade. A new 36-second clip shows it sparring in Kung Fu with a human partner, with real-time moves rather than sped-up footage. Optimus blocks, sidesteps, and even lands a side kick, showing off improved balance, weight-shifting, and recovery. Footwork is smoother, but the hands remain mostly idle, hinting that the 22-DOF hands are still in the lab.

Elon Musk confirms the demo is AI-driven, not remote-controlled. Optimus v2.5 is processing inputs and generating responses on the fly, a big step toward robots that can actually interact with humans and handle unpredictable environments. Kung Fu isn’t the end goal; it’s a stress test for speed, stability, and flexibility, skills critical for lifting, carrying, and walking over uneven terrain. Tesla plans 5,000 units in 2025, scaling parts production toward 50,000 by 2026. Read more.

Gamer builds 5M-parameter ChatGPT model inside Minecraft using 439M blocks

Sammyuri has built CraftGPT, a 5-million-parameter language model running entirely inside Minecraft using 439 million Redstone blocks. The model has six layers, a 240-dimensional embedding space, a 1920-token vocabulary, and a 64-token context window, with most weights at 8-bit and key embeddings and LayerNorm weights at 18–24 bits.
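As a rough sanity check on the “5 million parameters” figure, the sketch below counts weights for a standard decoder-only transformer with the stated dimensions. Everything beyond the four numbers in the article is an assumption: a GPT-2-style layer with a 4× MLP expansion (12·d² weights per layer), learned positional embeddings, an untied output projection, and no biases or LayerNorm parameters. The helper name is hypothetical, and CraftGPT’s actual layout may differ.

```python
def transformer_param_estimate(n_layers, d_model, vocab, context,
                               tied_embeddings=False):
    # Assumed GPT-2-style block: Q, K, V and output projections (4 * d^2)
    # plus a 4x-wide MLP (2 * 4 * d^2), i.e. 12 * d^2 weights per layer.
    # Biases and LayerNorm parameters are ignored in this estimate.
    per_layer = 12 * d_model * d_model
    token_emb = vocab * d_model          # input token embedding table
    pos_emb = context * d_model          # learned positional embeddings
    unembed = 0 if tied_embeddings else vocab * d_model  # output projection
    return n_layers * per_layer + token_emb + pos_emb + unembed

# Figures from the article: 6 layers, 240-dim embeddings,
# 1920-token vocabulary, 64-token context window.
estimate = transformer_param_estimate(6, 240, 1920, 64)
print(f"{estimate:,}")  # 5,084,160
```

Under these assumptions the count lands at about 5.1 million; tying the input and output embeddings would bring it down to about 4.6 million.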

Trained on TinyChat, it runs on an in-game 1020×260×1656 Redstone computer and takes hours to process a prompt, even at a 40,000× tick speed. Outputs are rough, often off-topic or ungrammatical, but the project is a masterclass in virtual computation, showing how AI logic, memory, and tokenization can be mapped to pure game mechanics. It’s less about usability and more about testing the limits of computation in an abstract, fully sandboxed environment. Read more.
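The dimensions of the in-game computer also account for the headline block count. A quick check of the arithmetic, using only the figures given above:

```python
import math

# Bounding box of the in-game Redstone computer, in blocks (from the article).
dims = (1020, 260, 1656)
volume = math.prod(dims)
print(f"{volume:,}")  # 439,171,200
```

The product comes to about 439 million, matching the block count quoted earlier. This suggests the figure describes the machine’s full bounding volume rather than a count of individual Redstone components.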

5 new AI-powered tools from around the web

arXiv is a free online library where researchers share pre-publication papers.

Your feedback is invaluable. Reply to this email and tell us how you think we could add more value to this article.

Interested in reaching smart readers like you? To become an AI Breakfast sponsor, reply to this email or DM us on 𝕏!