Good morning, AI enthusiasts. Every AI user has experienced it: an LLM’s supremely confident answers that turn out to be completely made up. Now, OpenAI thinks it has finally cracked why chatbots can’t stop hallucinating.

The company’s latest research paper suggests that solving AI hallucinations might come down to something surprisingly simple: teaching models that it’s okay to say “I don’t know.”

In today’s AI rundown:

-

OpenAI reveals why chatbots hallucinate

-

Anthropic agrees to $1.5B creator settlement

-

Automate web monitoring with AI agents

-

OpenAI’s own AI chips with Broadcom

-

4 new AI tools, community workflows, and more

LATEST DEVELOPMENTS

AI RESEARCH

🔬 OpenAI reveals why chatbots hallucinate

Image source: Gemini / The Rundown

The Rundown: OpenAI just published a new paper arguing that AI systems hallucinate because standard training methods reward confident guessing over admitting uncertainty, potentially uncovering a path toward solving AI quality issues.

The details:

-

Researchers found that models make up facts because the tests used in training and evaluation award full points for lucky guesses but zero for saying “I don’t know.”

-

The paper shows this creates a conflict: models trained to maximize accuracy learn to always guess, even when completely uncertain about the answer.

-

OAI tested this theory by asking models for specific birthdays and dissertation titles, finding that they confidently produced a different wrong answer each time.

-

Researchers proposed redesigning evaluation metrics to explicitly penalize confident errors more heavily than expressions of uncertainty.
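To see why binary grading pushes models toward guessing, here is a minimal Python sketch of the incentive. It assumes a simple scoring scheme (+1 for a correct answer, 0 for “I don’t know,” and a fixed penalty for a wrong answer); the function names and penalty values are hypothetical illustrations, not the paper’s exact method.

```python
def expected_score(p_correct: float, wrong_penalty: float) -> float:
    """Expected score for answering, given the chance the answer is right.

    Assumed scoring (illustrative): +1 if correct, -wrong_penalty if wrong.
    Saying "I don't know" always scores 0.
    """
    return p_correct * 1.0 - (1.0 - p_correct) * wrong_penalty


def best_action(p_correct: float, wrong_penalty: float) -> str:
    """Answer only if answering beats the 0 points for abstaining."""
    return "guess" if expected_score(p_correct, wrong_penalty) > 0.0 else "abstain"


# A model that is only 20% sure of an obscure birthday.
p = 0.2

# Binary grading: wrong answers cost nothing, so guessing never scores
# worse than abstaining.
print(best_action(p, wrong_penalty=0.0))  # guess

# Penalized grading: a wrong answer costs 1 point, so abstaining wins
# unless the model is more than 50% sure.
print(best_action(p, wrong_penalty=1.0))  # abstain
```

Under this assumed scheme, answering only pays off when the model’s chance of being right exceeds penalty / (1 + penalty), so a larger penalty raises the confidence bar a model must clear before guessing makes sense.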

Why it matters: This research potentially reframes hallucination as a problem that could be better addressed in training. If AI labs start rewarding honesty over lucky guesses, we could see models that know their limits, trading some performance on benchmarks for the reliability that actually matters when systems handle critical tasks.

TOGETHER WITH CONCIERGE

👋 Your brand’s AI answer engine

The Rundown: Today’s SaaS buyers use AI daily to answer their questions, and have no patience for a scavenger hunt. Concierge is a Perplexity-style answer engine, trained on your company, that lives on your website and delivers accurate, personalized responses to ultra-specific questions.

Modern brands use Concierge to:

-

Handle any buyer question (no matter how technical) with advanced RAG on your sources & media

-

Maintain control and visibility over every conversation, with guardrails and sentiment analysis

-

Build trust with website visitors before they’re ready to commit to a demo

Use Concierge to turn every question into a conversation, and every conversation into revenue.

ANTHROPIC

💰 Anthropic agrees to $1.5B creator settlement

Image source: Ideogram / The Rundown

The Rundown: Anthropic just agreed to pay at least $1.5B to settle a class-action lawsuit from authors, marking the first major payout from an AI company for using copyrighted works to train its models.

The details:

-

Authors sued after discovering that Anthropic had downloaded over 7M pirated books from shadow libraries like LibGen to build its training dataset for Claude.

-

A federal judge ruled in June that training on legally purchased books constitutes fair use, but downloading pirated copies violates copyright law.

-

The settlement covers roughly 500,000 books at $3,000 per work, with additional payments if more pirated works are found in the training data.

-

Anthropic must also destroy all pirated files and copies as part of the agreement, which doesn’t grant any permission for future training.
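The headline figure follows directly from the per-work rate; as a quick check on the base amount:

```latex
\text{about } 500{,}000 \text{ works} \times \$3{,}000 \text{ per work} = \$1{,}500{,}000{,}000 \approx \$1.5\text{B}
```

Any additional pirated works found in the training data would add to this total, which is why $1.5B is a floor rather than a cap.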

Why it matters: This precedent-setting payout is the first major resolution among the many copyright lawsuits pending against AI labs, though the ruling turns on piracy, not the “fair use” of legally obtained texts. While $1.5B seems like a hefty sum at first glance, the company’s recent $13B raise at a $183B valuation likely softens the blow.

AI TRAINING

📝 Automate web monitoring with AI agents

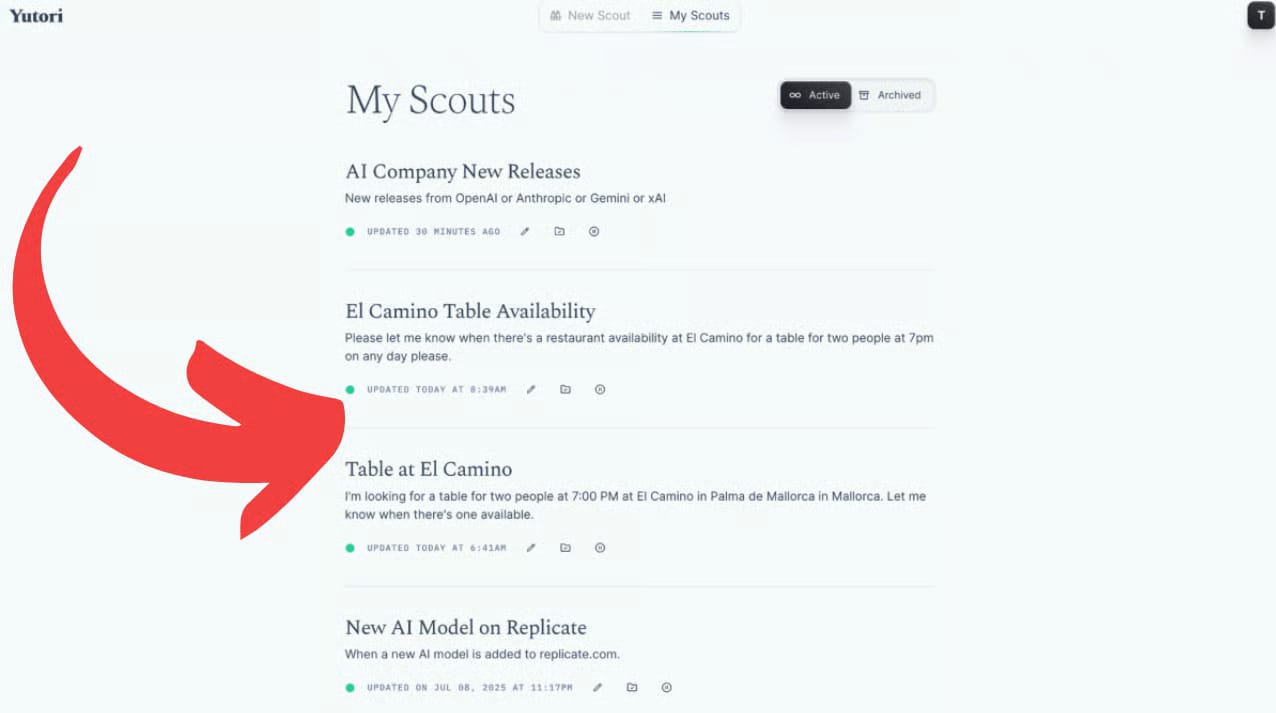

The Rundown: In this tutorial, you’ll learn how to use Yutori Scouts, an AI web monitoring agent that watches for specific updates online and alerts you via email. No more refreshing pages or manually checking for changes.

Step-by-step:

-

Enter your request in the Yutori homepage input box (e.g., “Latest releases from OpenAI or Anthropic or Gemini or xAI”) to create your Scout

-

Select how often you want alerts (easy, daily, or weekly), then click Start scouting to activate it

-

View and manage all your active Scouts from the “My Scouts” dashboard, where you can edit, pause, or delete them anytime

-

Check reports sent to your email or in-app, each with clear findings and a direct link to the source for quick action

Pro tip: Use Scouts for time-sensitive opportunities like reservations, product restocks, or industry news, and pair them with automations to get updates.

PRESENTED BY POPCORN

The Rundown: Popcorn’s agentic product transforms how movies are made. Drop in one conceptual prompt and get a complete 1-3-minute movie with narrative arcs, dialogue, lip-sync, soundtrack, and sound effects.

Unlike tools that just produce individual shots, Popcorn handles:

-

The entire creative process, from ideation to agentic production and auto-editing

-

Creation of full narrative movies from a single prompt

-

Easy style, genre, and narrative changes

-

Consistent character, props, and sets libraries

Try Popcorn and create your first movie for free with code AGENTIC.

OPENAI

🔧 OpenAI’s own AI chips with Broadcom

Image source: Ideogram / The Rundown

The Rundown: OpenAI will begin mass-producing its own custom AI chips next year through a partnership with Broadcom, according to a report from the Financial Times, joining other tech giants racing to reduce their dependence on Nvidia’s hardware.

The details:

-

Broadcom’s CEO revealed that a mystery customer had committed $10B in chip orders, with sources identifying OpenAI as the client, which plans to deploy the chips internally only.

-

The custom chips will help OpenAI double its compute within five months to meet surging demand from GPT-5 and address ongoing GPU shortages.

-

OpenAI initiated the Broadcom collaboration last year, though production timelines remained unclear until this week’s earnings announcement.

-

Google, Amazon, and Meta have already built custom chips, with analysts expecting proprietary options to continue siphoning market share from Nvidia.

Why it matters: The top AI labs are all pushing to secure more compute, and Nvidia’s kingmaker status is starting to be clouded by both Chinese domestic chip production efforts and tech giants bringing custom options in-house. Owning the full stack could also eventually help reduce the massive costs OAI is incurring on external hardware.

QUICK HITS

🛠️ Trending AI Tools

-

📱 EmbeddingGemma – Google’s open-source, on-device embedding model

-

❤️ Lovable Voice Mode – Code and build apps with voice commands

-

🤖 Qwen3-Max – Alibaba’s massive new 1T parameter model

-

💄 Higgsfield Ads 2.0 – Create realistic custom AI product placement ads

📰 Everything else in AI today

MongoDB.local NYC, Sep. 17: Unlock new possibilities with your data in the age of AI. Register here and use code SOCIAL50 to save 50%.*

Alibaba introduced Qwen3-Max, a 1T+ parameter model that surpasses other Qwen3 variants, Kimi K2, DeepSeek V3.1, and Claude Opus 4 (non-reasoning) across benchmarks.

OpenAI revealed that it plans to burn through $115B in cash over the next four years due to data center, talent, and compute costs, an $80B increase over its previous projections.

French AI startup Mistral is reportedly raising $1.7B in a new Series C funding round, which would make it the most valuable AI company in Europe at an $11.7B valuation.

OpenAI Model Behavior lead Joanne Jang announced OAI Labs, a team dedicated to “inventing and prototyping new interfaces for how people collaborate with AI.”

A group of authors filed a class action lawsuit against Apple, accusing the tech giant of training its OpenELM LLMs on a pirated dataset of books.

*Sponsored Listing

COMMUNITY

🤝 Community AI workflows

Every newsletter, we showcase how a reader is using AI to work smarter, save time, or make life easier.

Today’s workflow comes from reader Avi F. in Portland, ME:

“I plugged my 100+ page health insurance policy document into a custom GPT and asked it how I can use my coverage and what things cost. It turns out I have free, unlimited therapy via telehealth, and I’ve been able to find answers hidden in otherwise nebulous language. Before AI, I would have had to call, which could take over an hour to reach the right person through customer service, and sometimes they wouldn’t even know the answer. This took about five minutes to build and then just a few minutes to ask and get answers.”

How do you use AI? Tell us here.

🎓 Highlights: News, Guides & Events

-

Read our last AI newsletter: OpenAI takes on LinkedIn with jobs platform

-

Read our last Tech newsletter: OpenAI to make its own AI chip

-

Read our last Robotics newsletter: Apple loses robotics lead to Meta

-

Today’s AI tool guide: Automate web monitoring with AI agents

-

RSVP to our next workshop 9/9 @ 2 PM EST: Intro to agentic development

That’s it for today! Before you go, we’d like to know what you thought of today’s newsletter to help us improve The Rundown experience for you.


See you soon,

Rowan, Joey, Zach, Shubham, and Jennifer — the humans behind The Rundown