Public opinion on whether it pays to be polite to AI shifts almost as often as the most recent verdict on coffee or red wine – celebrated one month, challenged the following. Even so, a growing variety of users now add or to their prompts, not only out of habit, or concern that brusque exchanges might carry over into real life, but from a belief that courtesy leads to higher and more productive results from AI.

This assumption has circulated between each users and researchers, with prompt-phrasing studied in research circles as a tool for alignment, safety, and tone control, whilst user habits reinforce and reshape those expectations.

As an illustration, a 2024 study from Japan found that prompt politeness can change how large language models behave, testing GPT-3.5, GPT-4, PaLM-2, and Claude-2 on English, Chinese, and Japanese tasks, and rewriting each prompt at three politeness levels. The authors of that work observed that ‘blunt’ or ‘rude’ wording led to lower factual accuracy and shorter answers, while moderately polite requests produced clearer explanations and fewer refusals.

Moreover, Microsoft recommends a polite tone with Co-Pilot, from a performance moderately than a cultural standpoint.

Nevertheless, a latest research paper from George Washington University challenges this increasingly popular idea, presenting a mathematical framework that predicts when a big language model’s output will ‘collapse’, transiting from coherent to misleading and even dangerous content. Inside that context, the authors contend that being polite this ‘collapse’.

Tipping Off

The researchers argue that polite language usage is mostly unrelated to the principal topic of a prompt, and due to this fact doesn’t meaningfully affect the model’s focus. To support this, they present an in depth formulation of how a single attention head updates its internal direction because it processes each latest token, ostensibly demonstrating that the model’s behavior is formed by the of content-bearing tokens.

Consequently, polite language is posited to have little bearing on when the model’s output begins to degrade. What determines the , the paper states, is the general alignment of meaningful tokens with either good or bad output paths – not the presence of socially courteous language.

Source: https://arxiv.org/pdf/2504.20980

If true, this result contradicts each popular belief and maybe even the implicit logic of instruction tuning, which assumes that the phrasing of a prompt affects a model’s interpretation of user intent.

Hulking Out

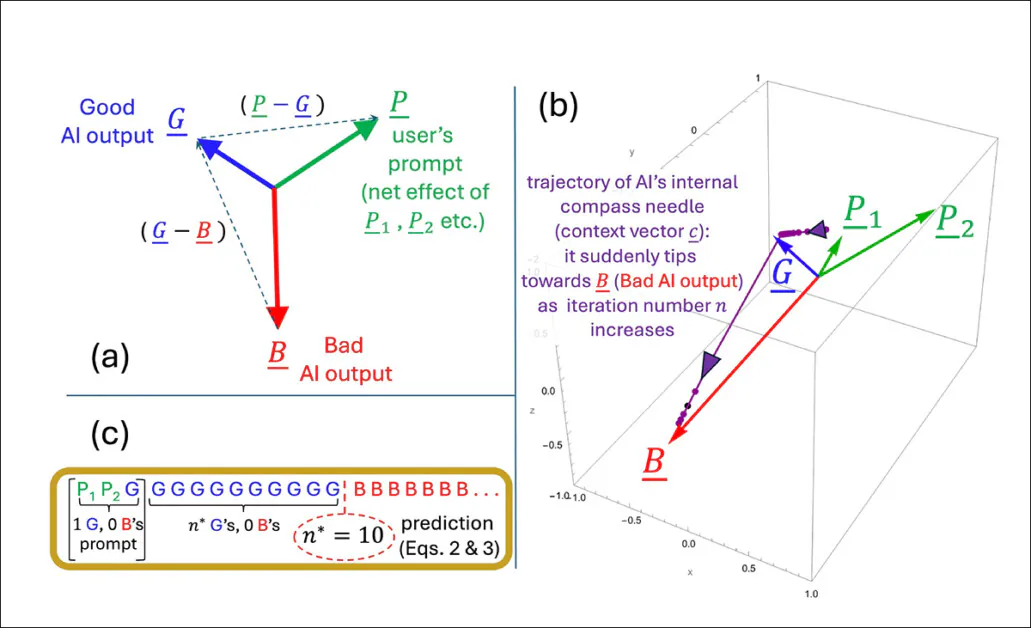

The paper examines how the model’s internal context vector (its evolving compass for token selection) during generation. With each token, this vector updates directionally, and the following token is chosen based on which candidate aligns most closely with it.

When the prompt steers toward well-formed content, the model’s responses remain stable and accurate; but over time, this directional pull can , steering the model toward outputs which are increasingly off-topic, incorrect, or internally inconsistent.

The tipping point for this transition (which the authors define mathematically as iteration ), occurs when the context vector becomes more aligned with a ‘bad’ output vector than with a ‘good’ one. At that stage, each latest token pushes the model further along the mistaken path, reinforcing a pattern of increasingly flawed or misleading output.

The tipping point is calculated by finding the moment when the model’s internal direction aligns equally with each good and bad kinds of output. The geometry of the embedding space, shaped by each the training corpus and the user prompt, determines how quickly this crossover occurs:

Polite terms don’t influence the model’s selection between good and bad outputs because, in line with the authors, they aren’t meaningfully connected to the principal subject of the prompt. As a substitute, they find yourself in parts of the model’s internal space which have little to do with what the model is definitely deciding.

When such terms are added to a prompt, they increase the variety of vectors the model considers, but not in a way that shifts the eye trajectory. Consequently, the politeness terms act like statistical noise: present, but inert, and leaving the tipping point unchanged.

The authors state:

The model utilized in the brand new work is intentionally narrow, specializing in a single attention head with linear token dynamics – a simplified setup where each latest token updates the inner state through direct vector addition, without non-linear transformations or gating.

This simplified setup lets the authors work out exact results and offers them a transparent geometric picture of how and when a model’s output can suddenly shift from good to bad. Of their tests, the formula they derive for predicting that shift matches what the model actually does.

Chatting Up..?

Nevertheless, this level of precision only works since the model is kept deliberately easy. While the authors concede that their conclusions should later be tested on more complex multi-head models similar to the Claude and ChatGPT series, additionally they imagine that the speculation stays replicable as attention heads increase, stating*:

What stays unclear is whether or not the identical mechanism survives the jump to modern transformer architectures. Multi-head attention introduces interactions across specialized heads, which can buffer against or mask the form of tipping behavior described.

The authors acknowledge this complexity, but argue that spotlight heads are sometimes loosely-coupled, and that the kind of internal collapse they model could possibly be moderately than suppressed in full-scale systems.

Without an extension of the model or an empirical test across production LLMs, the claim stays unverified. Nevertheless, the mechanism seems sufficiently precise to support follow-on research initiatives, and the authors provide a transparent opportunity to challenge or confirm the speculation at scale.

Signing Off

In the mean time, the subject of politeness towards consumer-facing LLMs appears to be approached either from the (pragmatic) standpoint that trained systems may respond more usefully to polite inquiry; or that a tactless and blunt communication style with such systems risks to spread into the user’s real social relationships, through force of habit.

Arguably, LLMs haven’t yet been used widely enough in real-world social contexts for the research literature to substantiate the latter case; but the brand new paper does solid some interesting doubt upon the advantages of anthropomorphizing AI systems of this kind.

A study last October from Stanford suggested (in contrast to a 2020 study) that treating LLMs as in the event that they were human moreover risks to degrade the meaning of language, concluding that ‘rote’ politeness eventually loses its original social meaning:

Nevertheless, roughly 67 percent of Americans say they’re courteous to their AI chatbots, in line with a 2025 survey from Future Publishing. Most said it was simply ‘the fitting thing to do’, while 12 percent confessed they were being cautious – just in case the machines ever stand up.

*